Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


AWS CEO Matt Garman said early demand for Trainium 3 is strong, the company's hardware ambitions revolve around providing cloud services, and enterprises are seeing strong returns amid AI bubble talk.

Here's a look at what Garman said at an ask-me-anything meeting with analysts.

Hardware ambitions. Garman said:

"Our focus is about cloud services and hardware in support of that. And so we don't sell hardware. We don't necessarily have plans to, although I wouldn't rule it out ever in the future, but it's not currently what we focus on. We're very focused on building the world's best infrastructure for customers to run on, and what we sell, the services and AI factories, is no different than that."

Garman added that Amazon is obviously building hardware in Graviton and Trainium custom silicon, but that's in support of services.

SaaS-y efforts. Garman was asked about the success of Amazon Connect and AWS' recent moves to compile services into more of a suite. Garman said:

"When we launched Connect, nothing like that existed, and we thought that we could do a better job. We had a lot of learnings from internal use and I think that's resonated with customers. That's why it's a billion dollar plus business and there will be others like that."

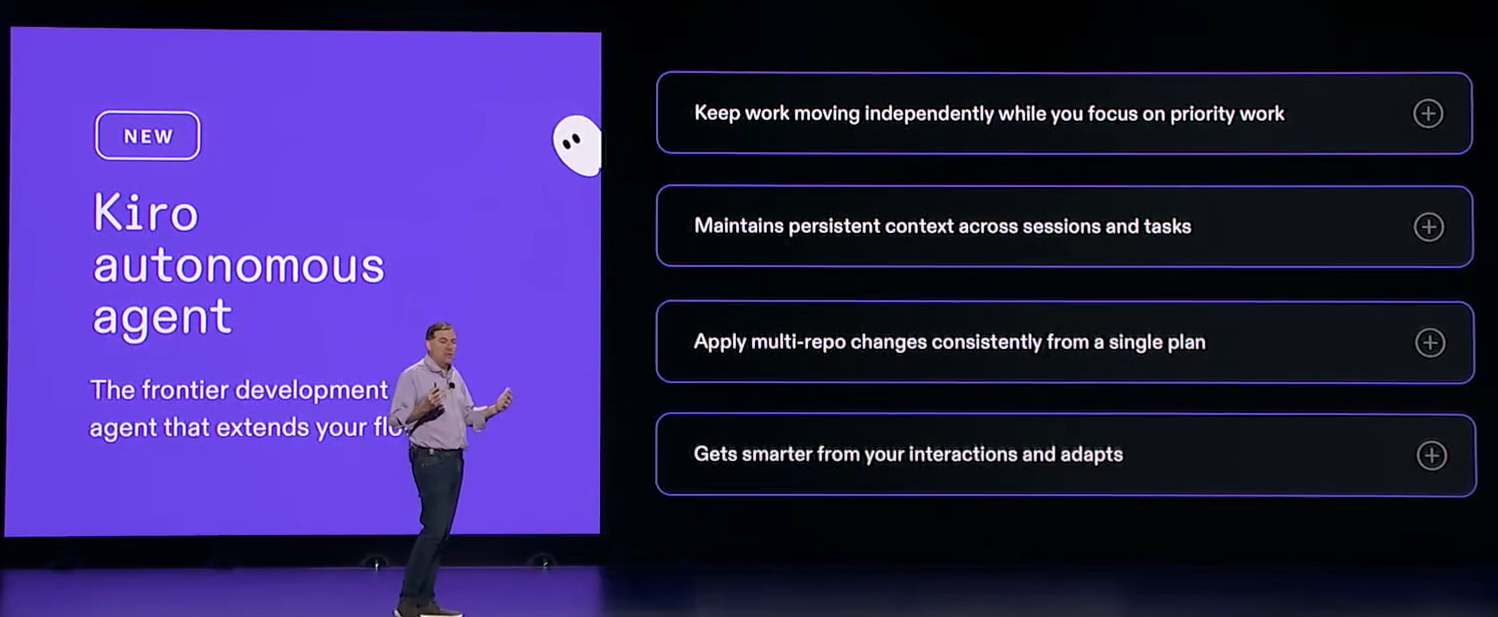

Garman said software development is another space where AWS can offer applications and cited Kiro. Healthcare is another possibility. He added:

"We don't have a concerted plan around SaaS, and we wouldn't go into it just because we want to go into it. And I think it's more there's an area where we think we have a differentiated idea that we can offer some interesting value to customers. We would always consider it. But it's more around that for us, we love leaning into our partners."


Useful life of AI infrastructure. Amazon depreciates AI infrastructure over 5 years, and Garman noted that others are pushing 6 years. "For AI infrastructure, we do five years because we think that there may be shorter life there," said Garman. "We have 20 years on our core infrastructure to know roughly how long CPUs last, drives last, network gear, data centers, etc."

Garman said AI infrastructure may be different since it's evolving so fast. As AI infrastructure moves from training to inference, it's unclear what the useful life will be. "We're actually [using] the same training infrastructure. And the benefits that go into that, whether it's larger models or better bandwidth or other things like that, actually benefit inference as well," said Garman.

Using multiple models, including smaller ones, will also impact the useful life of AI infrastructure. "I think that we're kind of trying to figure it out. I think as you think about a mixture of models, you actually are going to be able to send models to the right size of infrastructure to run it, ultimately, and take advantage of that," he said.

Building AI agents. Garman said AWS is focusing on offering building blocks as well as applications like AgentCore. Large enterprises want building blocks to build agents. Smaller firms will look for a complete package. "AWS has always been giving small customers the capabilities that only the largest companies used to have," said Garman.

AI bubble? It depends. "I don't expect capex to slow down. We'll keep spending and we'll keep growing. It's a capital intensive business and always has been."

He said if you're a VC funding a zero-revenue business, we may be in an AI bubble. Garman noted that on a CIO panel, multiple executives were seeing significantly positive ROI. "I've never met an enterprise that was seeing really good positive ROI investments just decide not to do it," said Garman. "That's my signal of how things are going currently. The industry is still supply constrained by something. Chip capacity, power capacity, laser capacity and things like that."

Developers. Garman was asked if AWS was refocusing on the developer and he said the company is always focused on developers.

Garman said:

"It's always been important. But the focus where we are in the world right now is how much developers are driving some of that innovation. It's an area where I think we can add a ton of value. It's a customer segment that's incredibly important for me and the team."

"We can bring a lot of differentiated value. We think that we can turbocharge what developers can do."

AI and jobs. Garman said, "I don't think AI is replacing jobs, but it is changing them."

Garman added that training will be critical. "We want them to understand how to use AI tools. We want them to figure out how they use AI to code. We want them to figure out how they use AI in their jobs," said Garman. "And because those rules are going to change, we'll continue to iterate on our trainings as well."

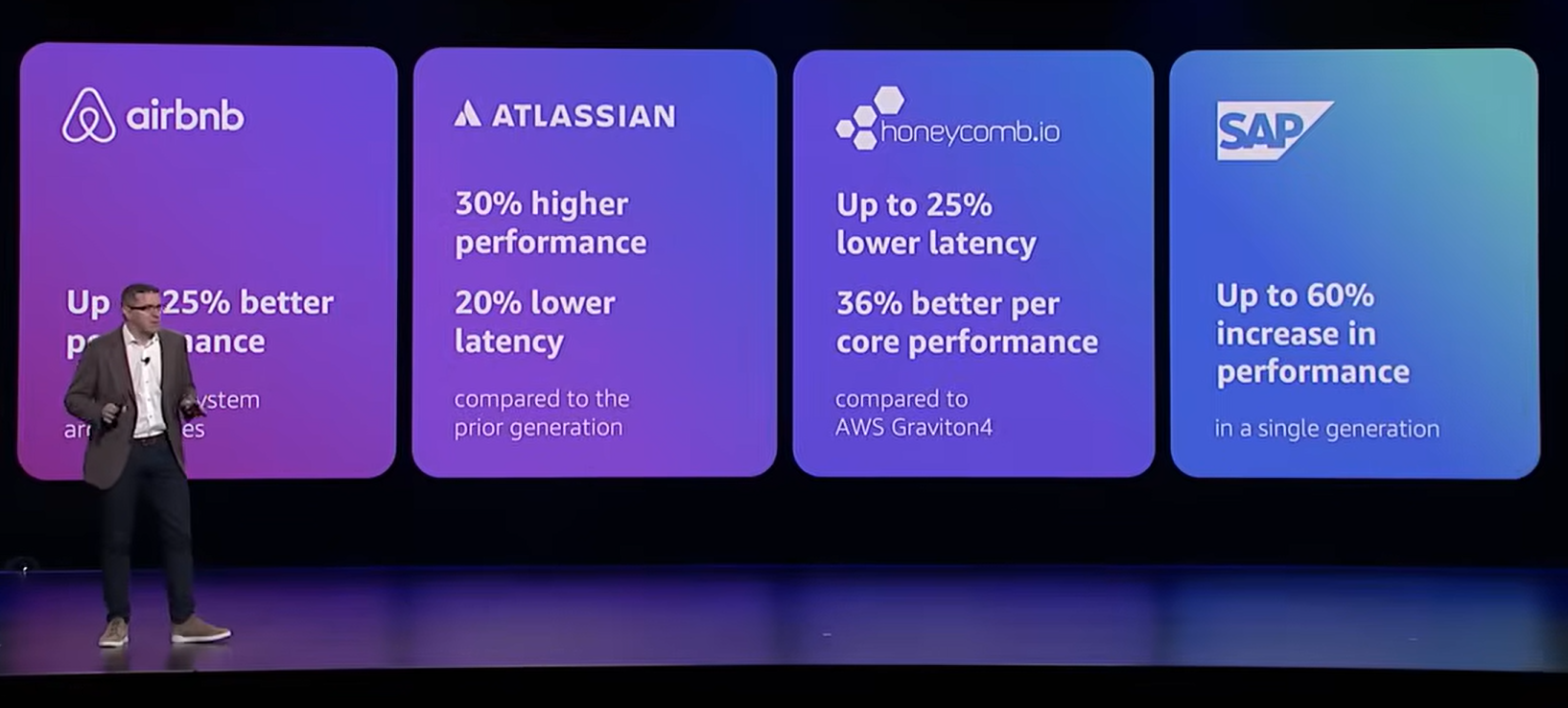

AI workloads. Garman was asked whether AWS was getting AI workloads. He said the multi-model approach has paid off. "Most of our customers are building their AI production applications on AWS. They want them integrated where their applications are. They want them integrated where their data is. They want a choice of models. They want actually a platform to build inference that has enterprise controls, that gives them the best price performance. They're seeing price performance gains," said Garman, who said AWS will embrace multiple models whether they come from Google, OpenAI or anyone else. "I think we have a really differentiated story for customers on how they customize AI for them."

Multi-cloud. Garman said AI workloads will be inherently multi-cloud. The key will be to offer observability across all of the clouds as well as security and network connectivity.

Trainium. Garman has said Trainium 2 was oversubscribed. Trainium 3 is underway, but just became generally available. "I expect to sell those as fast as we land them as well," he said. "The response to Trainium 3 has been much stronger than Trainium 2."

When asked about AWS custom silicon vs. others, Garman said the company is buying plenty of Nvidia and AMD chips. AWS will follow demand.

On Trainium 4, Garman said AWS' custom silicon will link up with Nvidia's NVLink Fusion and others.

Quantum computing. Garman said:

"I think quantum is going to be a super powerful technology. It's a big investment area for us. [...] There are many different paths on quantum. I like the way that we're going around the error correction. And the team has made some really big advancements over the last year.

But no one has made a useful quantum computer yet. People who should dig into it right now are researchers. There's not really good business reason right now."

Leo. Garman said Amazon was bullish on Leo and satellite internet services. "I think Leo is going to unlock a number of new use cases. I think there's a big consumer business as well as a big business opportunity. There are a lot of companies that would love getting a gigabit line in lightly connected areas or out in the field," said Garman.

Robotics and physical AI. Garman said he was excited about physical AI models and robotics, but noted that the models aren't ready just yet. "I think physical AI and agents are going to play a big role and be hugely transformative, but there just hasn't been a prevalence of data," said Garman.

He also noted that it's unclear whether startups will sell the brain of the robot or the robot itself. The market is in its infancy--even though Amazon is one of the largest buyers of robots. "It's early but it's an area that I'm excited about," said Garman.
