TCS Acquires Coastal Cloud: Filling a Critical Gap for Salesforce’s Agentic Future

TCS has announced the acquisition of Coastal Cloud, a leading US-based Salesforce Summit Partner, in a $700 million all-cash transaction. The deal moves TCS into the top tier of Salesforce advisory and consulting firms globally and strengthens its ability to deliver AI-first, agent-driven transformation programs. Coastal Cloud brings Salesforce-native advisory depth, strong mid-market relationships, and close alignment with Salesforce product leadership through its role on the Salesforce Partner Advisory Board.

What Salesforce buyers are increasingly looking for

In conversations with enterprise buyers, the focus has moved beyond implementation capacity. Salesforce customers are looking for partners that can connect platform decisions to business outcomes, design operating models around AI and agents, and scale these programs across regions and business units. As Salesforce advances Agentforce 360, buyers consistently point to the need for help with data readiness, governance, integration, and continuous optimization. This has widened the gap between boutique Salesforce specialists with deep platform expertise and large GSIs that bring scale but have often lacked senior Salesforce advisory leadership.

How this acquisition fills a gap for TCS customers

This is where the Coastal Cloud acquisition matters for TCS. In buyer discussions, TCS has been viewed as strong in enterprise scale, industry context, and global delivery, but its Salesforce programs often began at the execution stage rather than with upfront advisory. Coastal Cloud adds that missing front-end capability. For TCS customers, Salesforce engagements can now begin with Salesforce-native business and industry advisory and then scale globally with consistent delivery, AI engineering, and governance. This becomes increasingly important as Salesforce programs shift from CRM optimization to agent-driven, cross-functional transformation.

Why this matters for Coastal Cloud customers

From a buyer perspective, Coastal Cloud customers have historically valued deep Salesforce expertise and close partnership. However, in conversations about scaling, global rollout, and integration with enterprise platforms, Coastal Cloud's limitations often became apparent. With TCS, these customers gain access to global delivery, vertical accelerators, and enterprise-grade AI capabilities, while retaining Salesforce depth and continuity. This is particularly relevant as Agentforce programs extend across sales, service, marketing, and revenue operations.

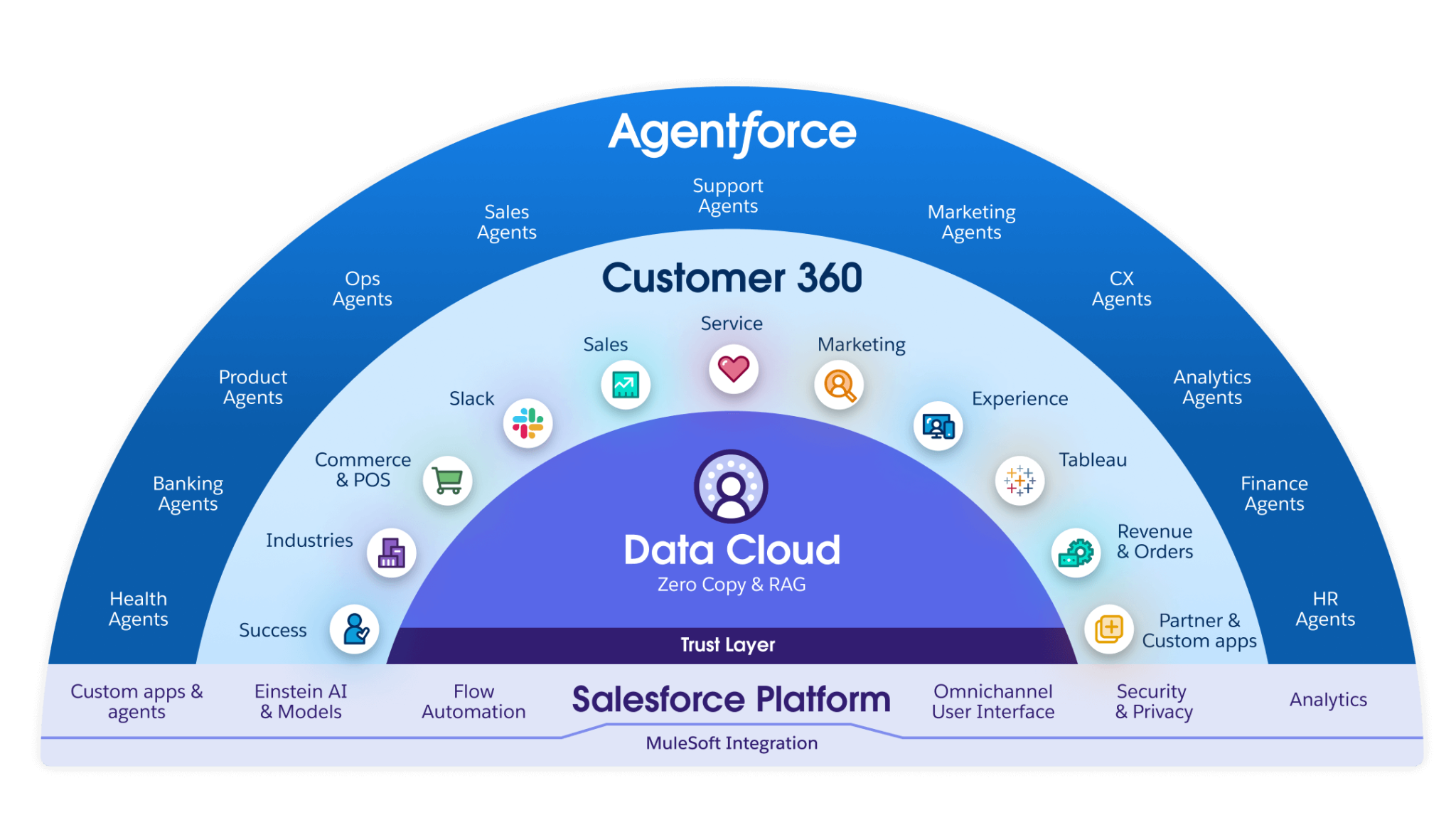

[Source: Salesforce]

Agentforce 360, GSIs, and the competitive landscape

Agentforce 360 signals a shift toward agents operating across business workflows, not just automating tasks. In buyer conversations, it is clear that delivering these programs requires process redesign, data unification, security, governance, and operational ownership at scale. This favors GSIs. Accenture and Deloitte have long paired Salesforce depth with strong business consulting. Cognizant and Infosys have invested heavily in Salesforce delivery and platform skills but are often perceived as more execution-led. Coastal Cloud gives TCS a clearer path to compete across this spectrum by strengthening Salesforce-native advisory leadership alongside its global delivery engine. The differentiator, as buyers note, will be who can operationalize agents reliably across the enterprise, not who can deploy them fastest.

What buyers should ask now

- Does my Salesforce partner combine Salesforce-native advisory depth with global delivery scale for agent-driven programs?

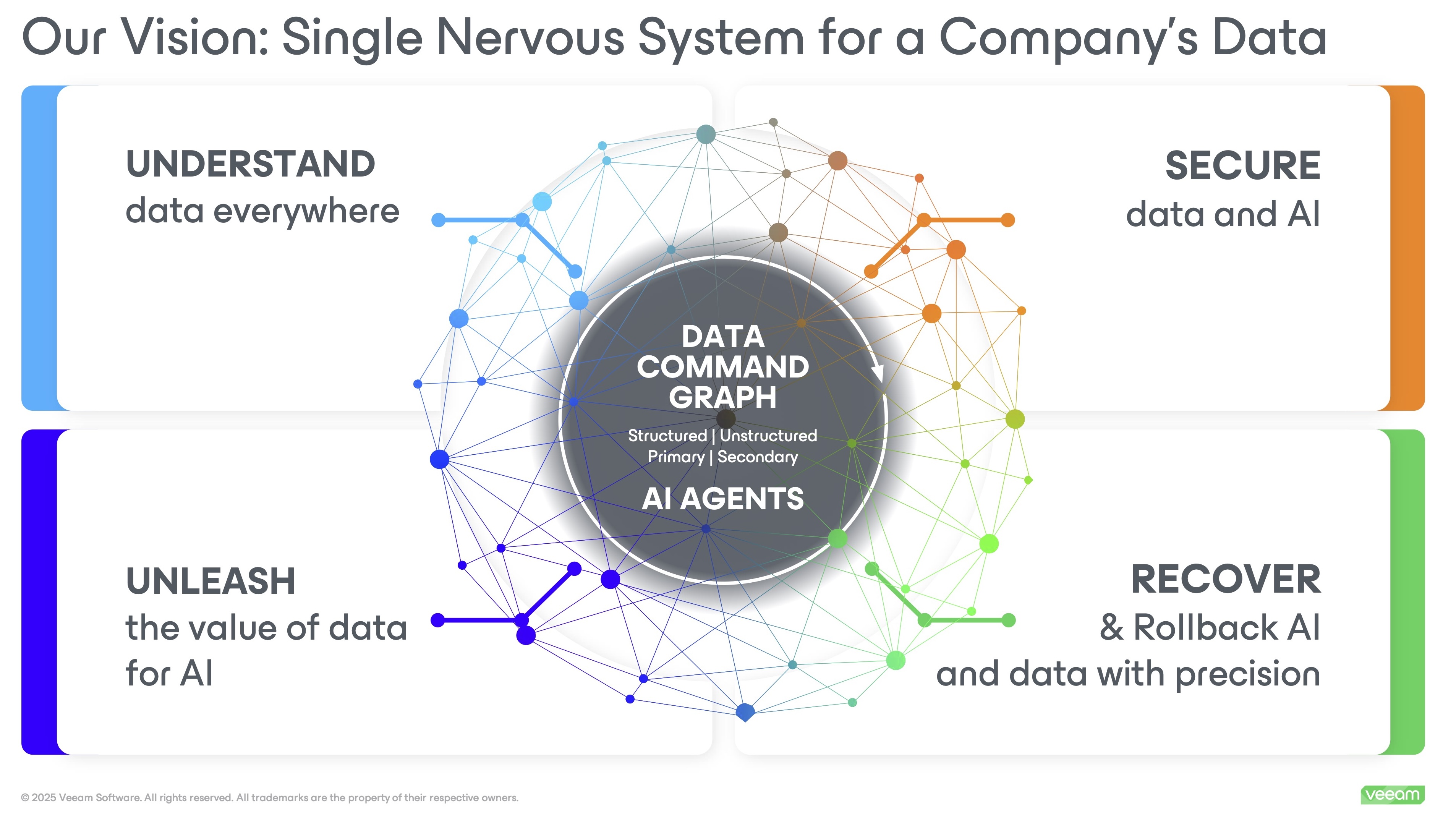

- How will Agentforce agents be governed, monitored, and evolved across regions and business units?

- Can Salesforce agents be integrated with enterprise data, security, and non-Salesforce systems?

- What industry-specific use cases and accelerators exist beyond generic Agentforce demonstrations?

Closing perspective

This acquisition reflects a clear market signal emerging in buyer conversations. Enterprises want fewer handoffs, stronger advisory up front, and partners that can carry agentic programs from design through sustained execution. With Coastal Cloud, TCS is closing a meaningful capability gap and positioning itself more directly for the next phase of Salesforce-led, agent-driven enterprise transformation.
