Vice President and Principal Analyst

Constellation Research

Chirag Mehta is Vice President and Principal Analyst focusing on cybersecurity, next-gen application development, and product-led growth.

With over 25 years of experience, he has built, shipped, marketed, and sold successful enterprise SaaS products and solutions across startups, mid-size, and large companies. As a product leader overseeing engineering, product management, and design, he has consistently driven revenue growth and product innovation. He also held key leadership roles in product marketing, corporate strategy, ecosystem partnerships, and business development, leveraging his expertise to make a significant impact on various aspects of product success.

His holistic research approach on cybersecurity is grounded in the reality that as sophisticated AI-led attacks become…


I recently spent a couple of days at Fortinet’s analyst summit in Sunnyvale. The conversations with Fortinet’s executive leadership team felt refreshingly grounded. No forced big-bang announcements. Instead, the focus was on how 25 years of engineering work shaped the company’s platform and why those choices matter more now as security shifts toward hybrid deployments and AI workloads.

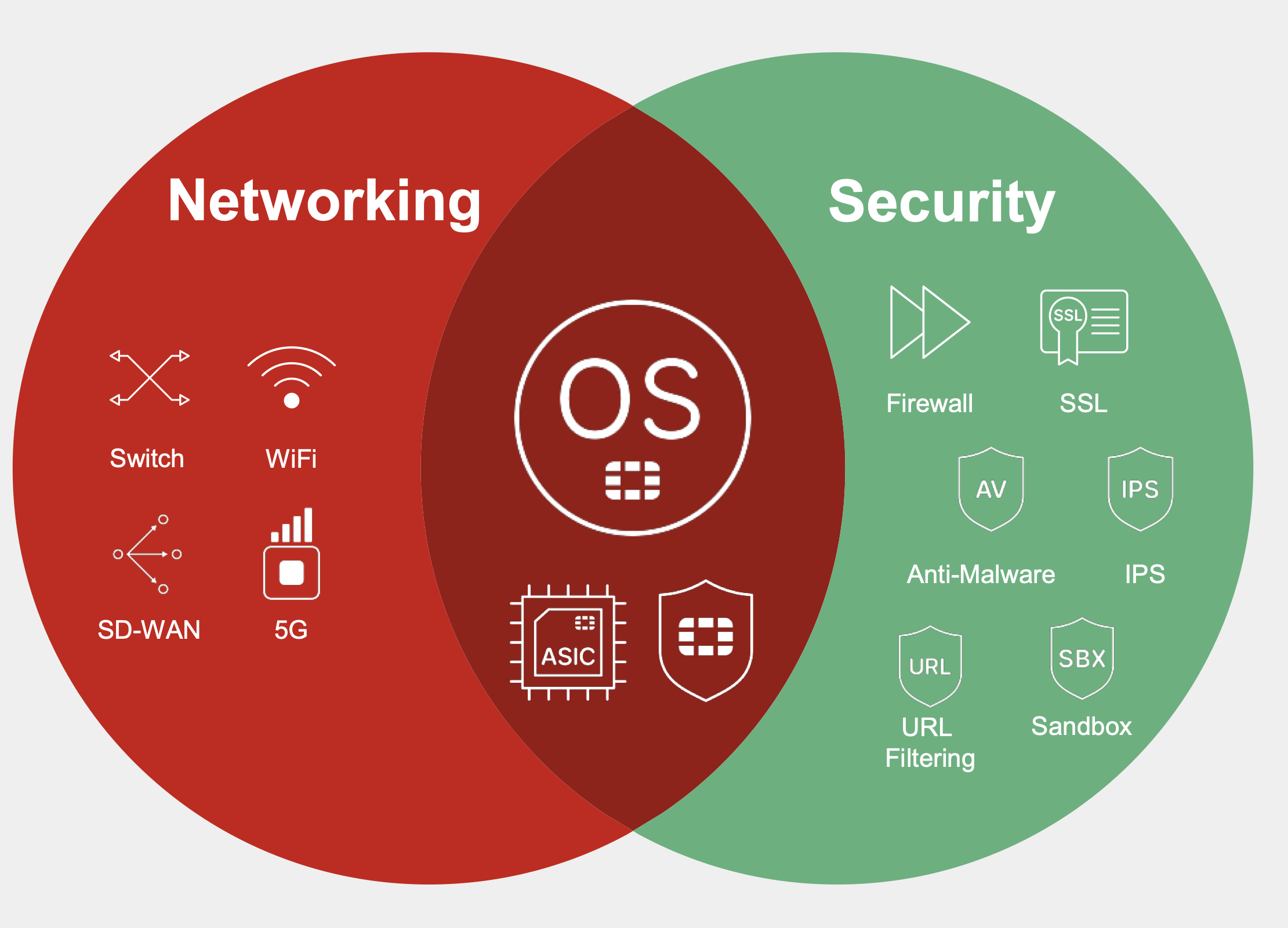

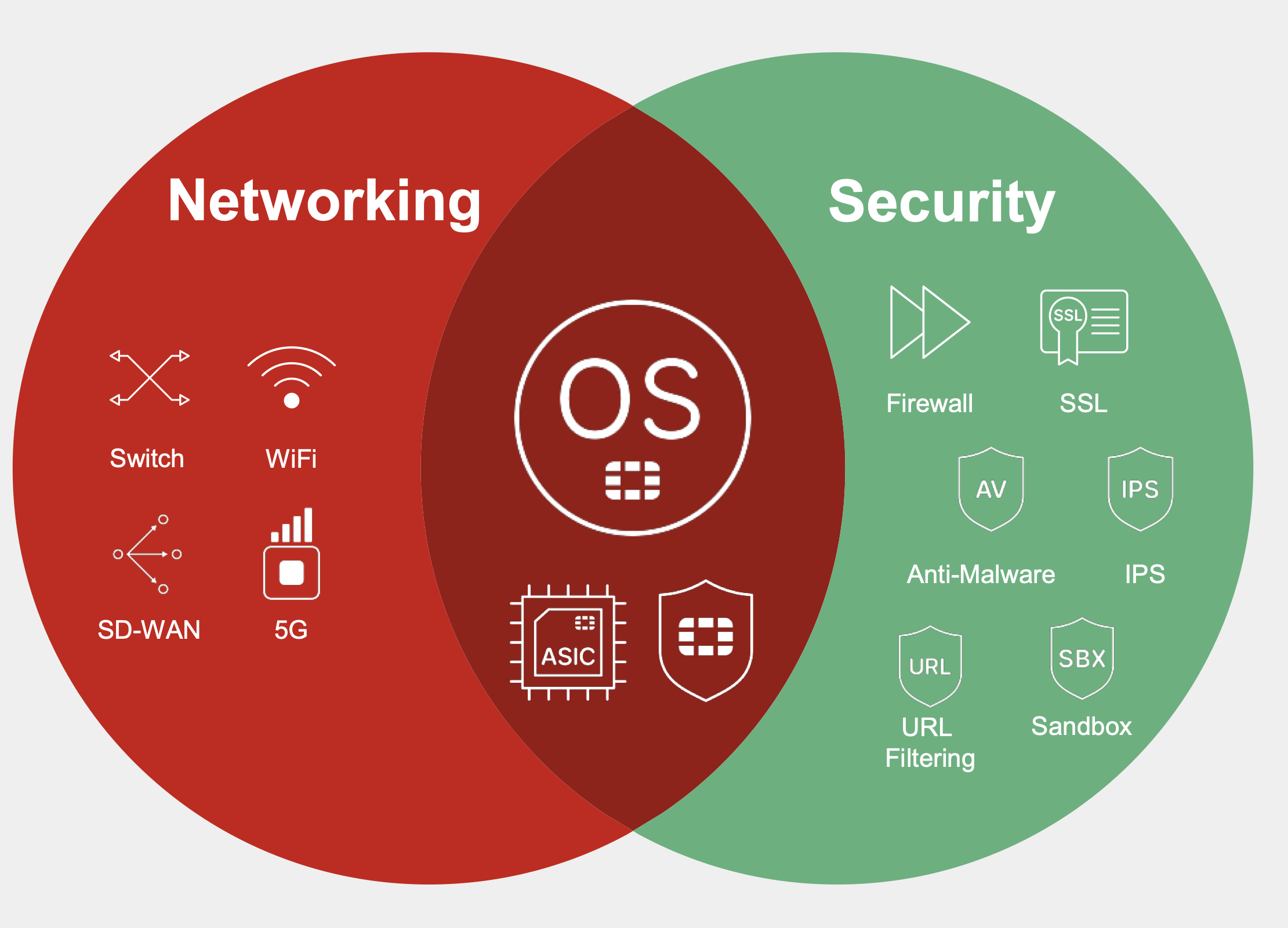

Many vendors today talk about platforms. Most mean a sales bundle rather than a true platform. Fortinet means shared OS, shared agent, shared silicon, and shared telemetry. That is the foundation it has been building toward for more than a decade, and much of the summit was about showing how that foundation is starting to pay off for customers.

DNA rooted in patient engineering

Fortinet’s trajectory begins with a founder-led culture focused on engineering quality and customer trust. Over time, three characteristics became clear differentiators:

Customer-centricity

The company has prioritized practical adoption paths rather than dramatic rip-and-replace moves. Many platform capabilities are accessible through existing deployments, which supports gradual evolution.

An engineering mindset

Building custom ASICs and a unified OS early on required patience and long-term investment. This approach helped Fortinet avoid short-term pivots and stay focused on performance, integration depth, and scale.

A slower but steadier approach

Fortinet avoided growth-at-any-cost and chose to invest in R&D and grow mostly organically. Today, it serves a large global customer base, including a strong presence among Fortune 100 companies and in critical infrastructure sectors. The company holds more than 1,300 patents, a significant number of them focused on AI.

Strong roots, deliberate expansion

A helpful way to understand Fortinet’s strategy and growth is to look at three decisions that shaped its trajectory:

1) Build security around purpose-built silicon

From the beginning, Fortinet chose to design its own ASICs. This took more time and capital than relying on commodity CPUs, but it gave the company meaningful control over performance and efficiency. Today, as encrypted and east-west traffic grows and AI workloads stress networks, those chips allow customers to inspect and secure more traffic without unacceptable performance or power tradeoffs. Current FortiASIC generations support NGFW, IPS, SD-WAN, segmentation, and SSL inspection efficiently, giving customers scale without adding cost or architectural complexity. This investment is likely to matter even more in a post-quantum world, where newer cryptographic algorithms add processing overhead to encrypted traffic.

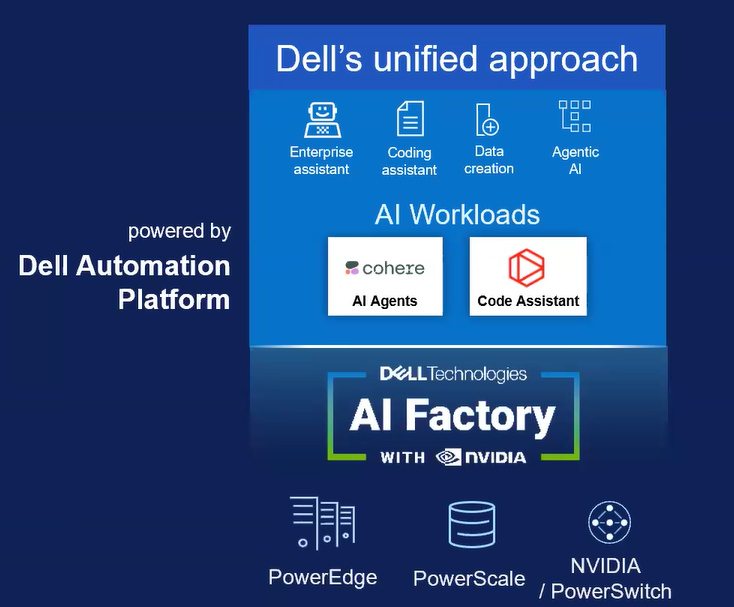

2) Treat software as a unifying engine

Fortinet’s core operating system, FortiOS, spans firewalls, SD-WAN, SASE, endpoint, and security operations. What began as a simple VPN agent has grown into a unified agent supporting ZTNA, EPP/EDR, and DLP. Because everything runs on a single OS, Fortinet could expand capabilities without introducing new agents, consoles, or deployment paths. This gives customers a practical way to move toward modern access and endpoint controls using the footprint they already have, reducing integration lift and encouraging natural platform adoption.

3) Build a fabric that connects everything

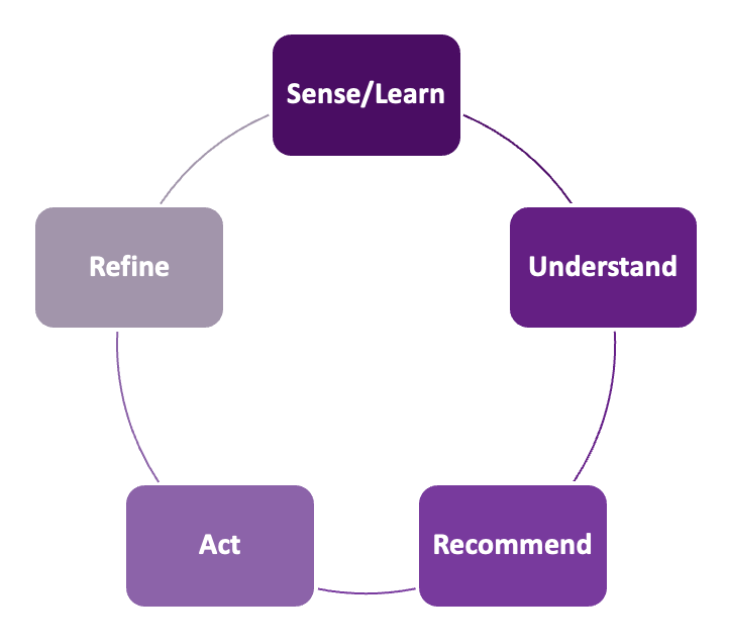

About 10 years ago, Fortinet introduced its Security Fabric to connect products through shared telemetry, shared policy, and shared analytics. FortiGuard Labs feeds this system with roughly seven billion threat signals per day, which strengthens detection and response across the environment. Since the fabric runs on the same OS, agent, and silicon, new capabilities plug in cleanly, giving customers more value as they expand. This is platformization through architecture rather than SKU grouping, helping teams lower operational friction while improving context across networks, endpoints, and cloud environments.

[Source: Fortinet]

Unified SASE: users, branches and factories

Fortinet’s early choice to extend its original VPN agent over time, rather than create new clients, gives customers a straightforward path to modern access controls. Because the same agent now supports ZTNA, EPP/EDR, and DLP, organizations running SD-WAN and VPN often have the foundations to enable ZTNA with little added effort or cost. This helps them move away from perimeter-based access and toward user, device, and posture-driven policies without a major re-architecture.

That architecture also supports Fortinet’s growing SASE business. With FortiOS and the unified agent working across campus, branch, remote-worker, and cloud traffic, customers can use a single approach for secure access. More than 170 global points of presence (POPs) reinforce this model by placing security inspection and policy closer to users and applications, improving performance and consistency across hybrid deployments.

In operational technology (OT) environments, Fortinet focuses on enforcement through rugged FortiGate appliances, segmentation, and secure remote access. FortiGuard Labs adds OT-specific threat intelligence and protocol coverage, while partners such as Nozomi and Claroty provide deeper domain visibility. This pairing lets customers apply consistent security and policy where they need it, while still benefiting from partners who understand the nuance of industrial networks.

Together, ZTNA, SASE, and OT capabilities extend the same FortiOS + agent + enforcement model from the user edge through factories and field sites. Customers gain coverage without managing separate stacks or fragmented workflows.

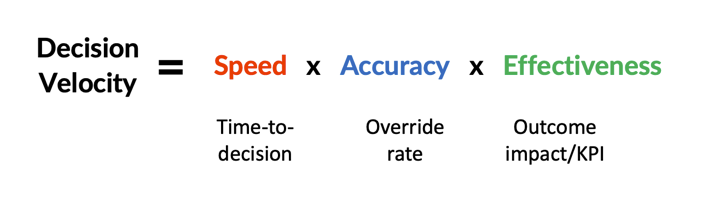

One platform, one data lake: modernizing the SOC

Fortinet sees traditional SIEM and SOAR deployments as powerful but difficult for many teams to operate. Most organizations struggle with integration, tuning, and staffing. By anchoring SIEM, SOAR, XDR, and threat intelligence to a single data lake through FortiAnalyzer, Fortinet intends to reduce that burden. Logs and telemetry across the fabric feed a common store, which keeps investigations, reporting, and automation aligned to the same data and context. FortiAI-Assist accelerates investigations and guided response, helping analysts work faster and focus on higher-value decisions.

This approach turns SOC growth into a staged journey rather than a disruptive transition. Customers keep building on the same data foundation as they mature, which helps them get more value from their telemetry while reducing complexity and overhead.

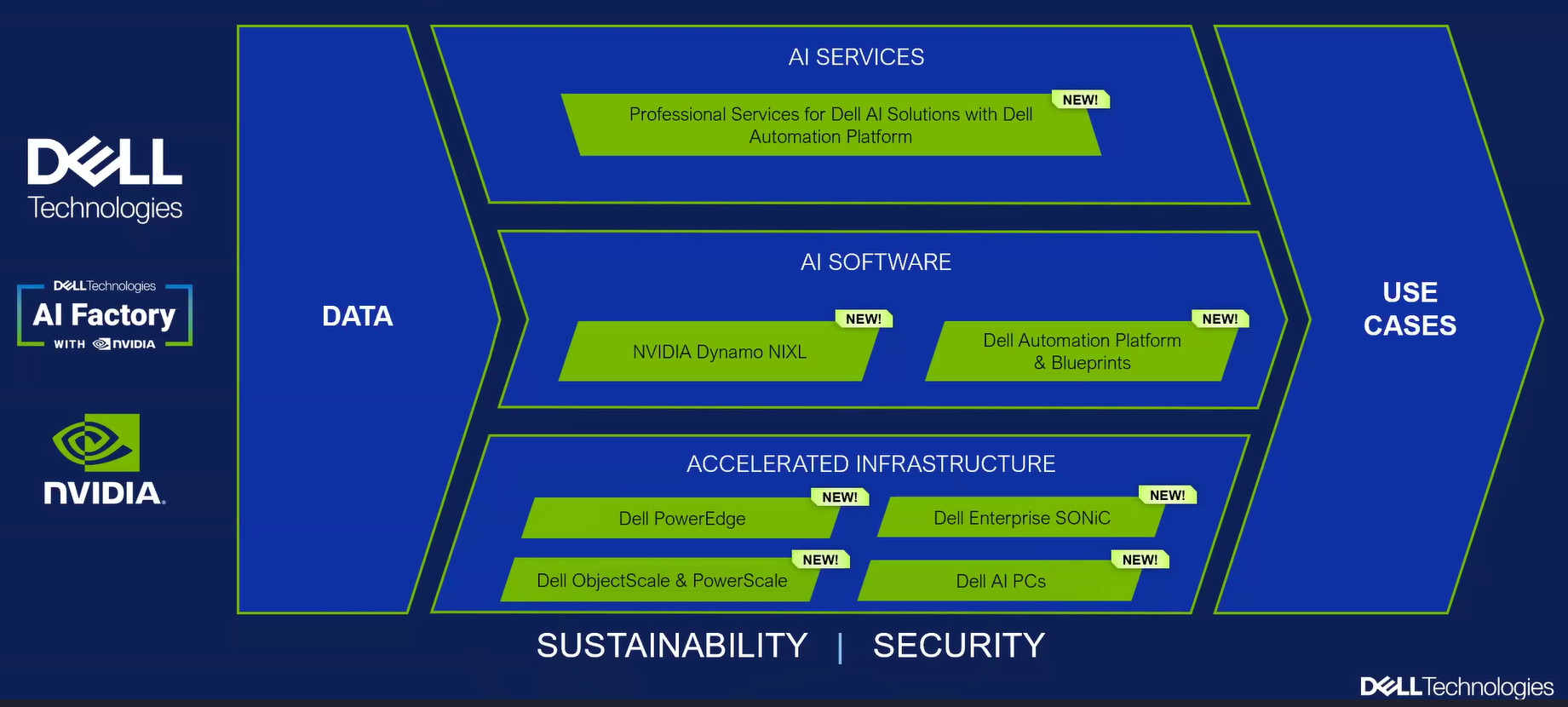

Cloud and AppSec: expanding the surface

Cloud security evolved quickly and created space for companies such as Wiz to gain early traction. Fortinet is now building more depth in this area through a mix of organic development and targeted acquisitions. Lacework adds CNAPP capabilities, and Next DLP strengthens data security. These join existing Fortinet tools such as FortiAppSec and FortiAIGate, which extend protection across web applications, APIs, and AI workloads.

Because these tools operate on the same FortiOS and fabric telemetry, customers can gain cloud and application coverage without managing isolated platforms. This helps them protect critical workloads across hybrid deployments with more consistent control, policy, and context. The opportunity ahead is to deepen visibility from code to cloud to runtime so that teams can follow application, identity, and data signals across environments. Customers want one view of workload posture, API exposure, and data flows, and Fortinet’s architecture gives it a path to grow into that space.

My View: Staying ahead means going deeper

1) Depth in cloud and AppSec needs to accelerate

Fortinet has a strong foundation in network security and enforcement, but customers are shifting toward protecting applications, data, and identities across hybrid multi-cloud environments. Lacework and Next DLP help, and products such as FortiAppSec and FortiAIGate show progress. The opportunity now is to deepen visibility and control from code to cloud to runtime so that security can follow applications wherever they live. Buyers today expect a single context across workloads, APIs, users, and data. This remains a competitive space, and deeper integration here will be important for long-term relevance.

2) Continued ASIC and POP expansion will reinforce the platform

Custom silicon gives Fortinet a real performance and power-efficiency story. As more data and AI workloads move through distributed environments, this advantage can matter even more. The build-out of more than 170 POPs supports hybrid deployments by placing compute and inspection close to users and applications. Continued investment in ASIC capability and POP footprint can strengthen Fortinet’s value in SASE and distributed cloud networking. The combination of silicon and POPs is a differentiator that many software-only security vendors cannot match.

3) The platform value story must become more explicit

The shared OS, agent, and data lake are the core of Fortinet’s platform. The company will benefit from showing where this architecture improves time to value, detection accuracy, and SOC productivity. Buyers continue to debate best-of-breed versus platform. Many still choose a mix of tools because the benefits of consolidation are unclear. Fortinet can win by explaining the practical benefits of shared policy, shared AI, and shared telemetry across endpoints, networks, cloud, and SOC. Customers appreciate simple integrations, consistent workflows, and measurable efficiency gains.

4) Transition to solution-centric and SaaS is healthy but will take discipline

Fortinet is moving from product-centric selling to solution-led outcomes delivered through SaaS and flexible pricing models, including marketplaces. Maintaining the “Rule of 45” during this shift will require careful execution. The company’s founder mentality has historically supported disciplined growth, which helps in transitions like this. The real test will be how quickly customers adopt cloud marketplaces, usage-based pricing, and managed offerings. Trust, quality, and strong economics will be key factors.

5) FedRAMP could open a new growth frontier

Fortinet already sells into state, county, and municipal accounts. Once it achieves FedRAMP authorization, the federal market could unlock meaningful scale. Federal agencies value trusted vendors with performance, power efficiency, and strong OT/critical infrastructure capabilities. Fortinet checks those boxes. The combination of secure networking, SASE, and a SOC platform could position the company well when authorization arrives. This is likely a multi-year opportunity that could shift the growth mix in a meaningful way.

Final Thoughts

Fortinet has spent years building around a shared OS, agent, silicon and data foundation. That patience is becoming a competitive advantage as organizations move toward hybrid deployments and AI workloads. The company’s platform now spans networking, access, SOC and cloud, with the same architecture running underneath.

There is still meaningful work ahead, especially across cloud and application security. But the direction is clear. Fortinet is evolving beyond a products mindset toward solutions and SaaS models, while staying grounded in engineering fundamentals and financial discipline. If execution keeps pace, the platform’s consistency and breadth will remain a compelling option for customers looking for coherence in a fragmented security landscape.
