AWS adds AI agent policy, evaluation tools to Amazon Bedrock AgentCore

Amazon Web Services is adding AI agent policy and evaluation features to Amazon Bedrock AgentCore in a move that aims to solve the big issues that keep enterprises from moving from proof of concept to production.

At AWS re:Invent 2025, the cloud giant updated Amazon Bedrock AgentCore, which recently became generally available.

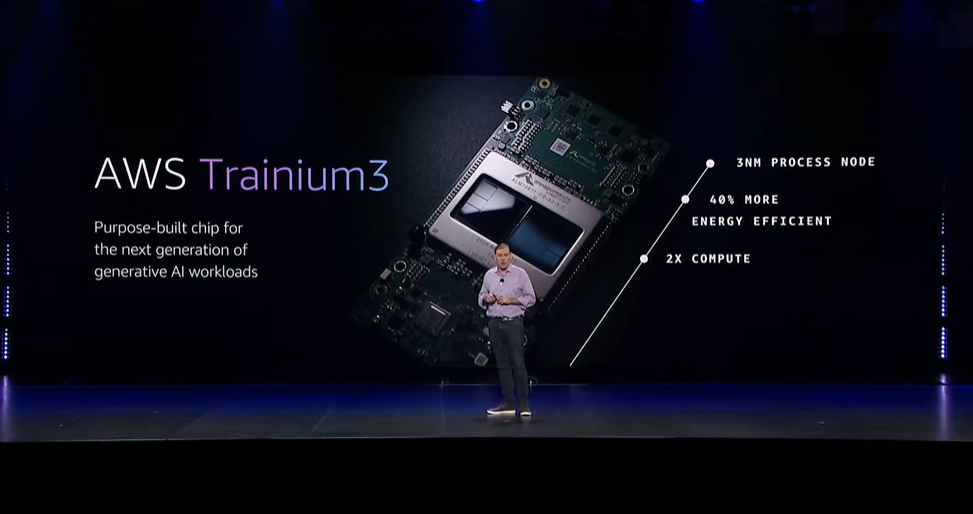

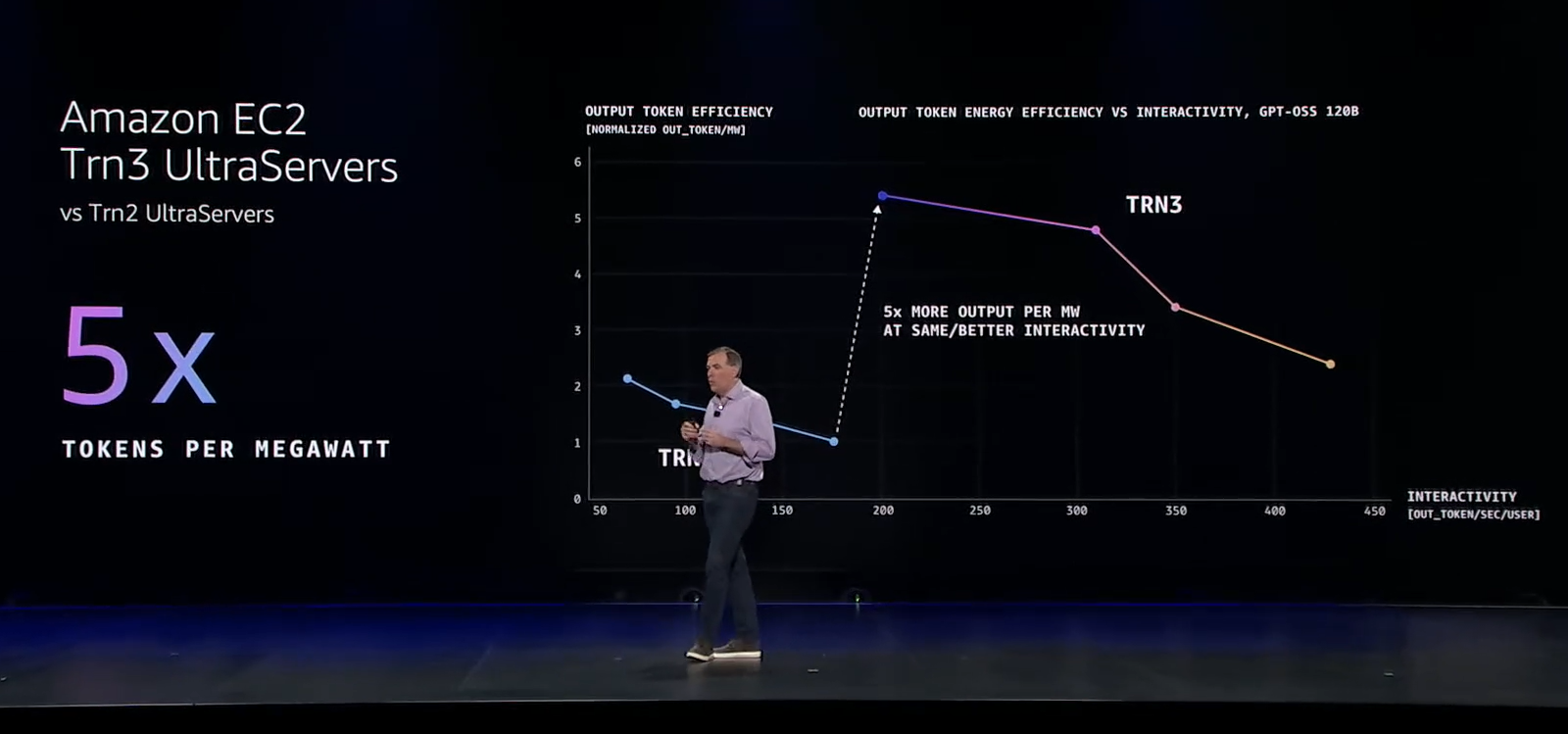

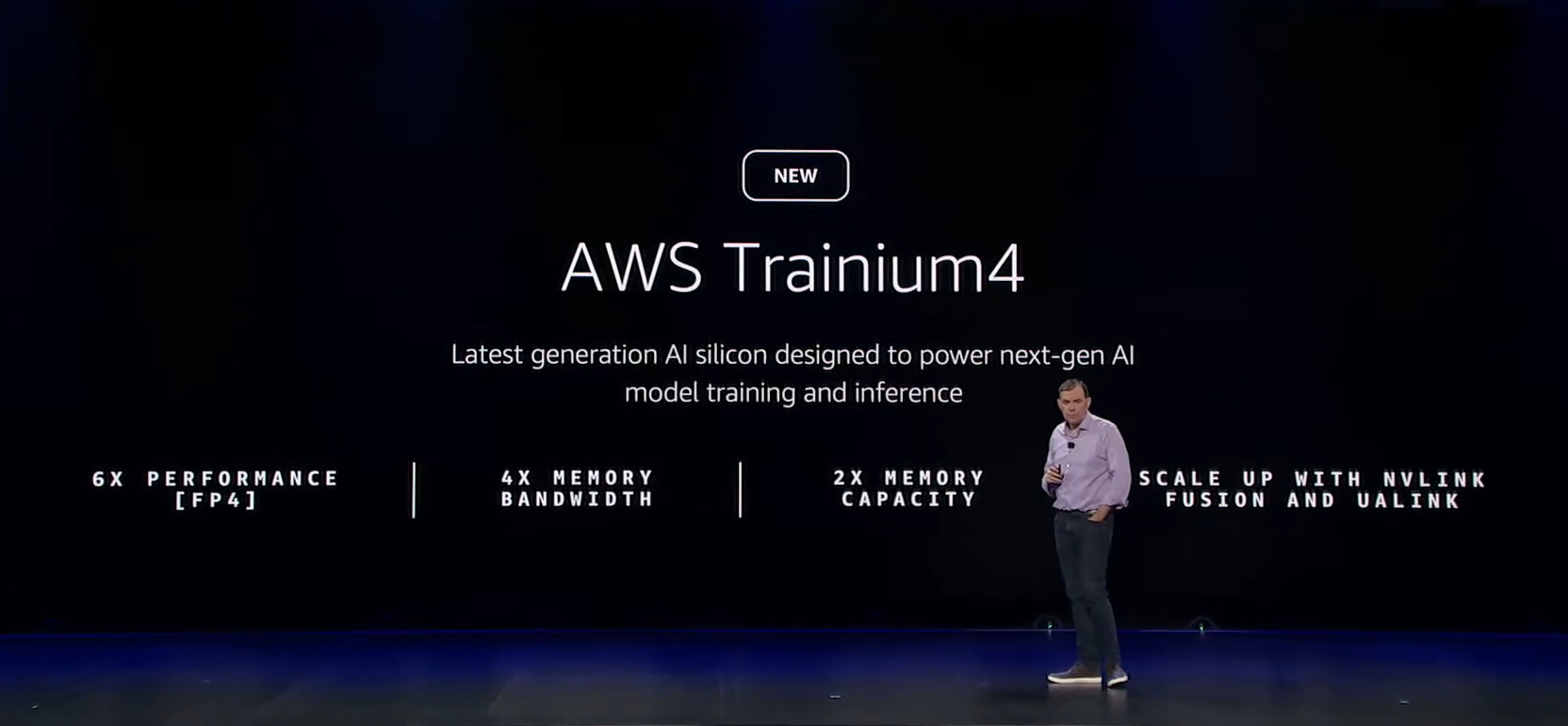

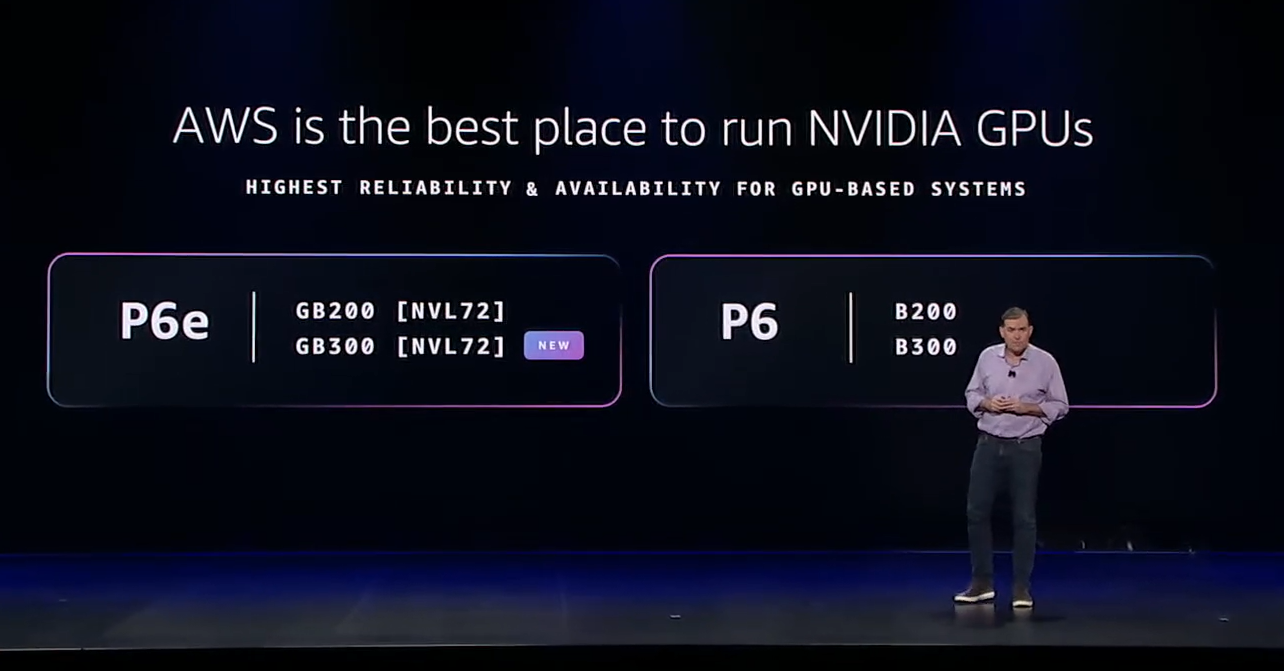

Amazon Bedrock AgentCore sits in the tools and infrastructure layer for building AI agents and applications along with Amazon Bedrock, Amazon Nova and Strands Agents. That AI building infrastructure also includes Amazon SageMaker and compute including AWS Trainium and Inferentia.

More from re:Invent 2025

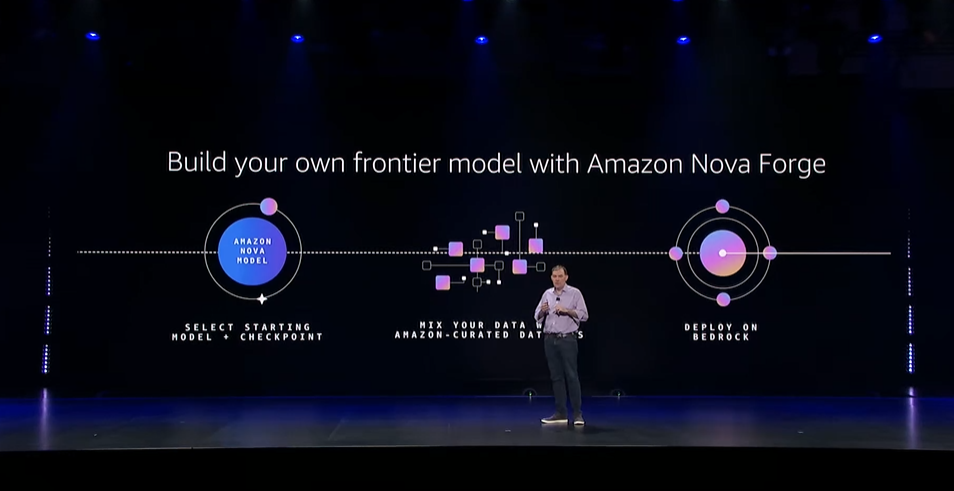

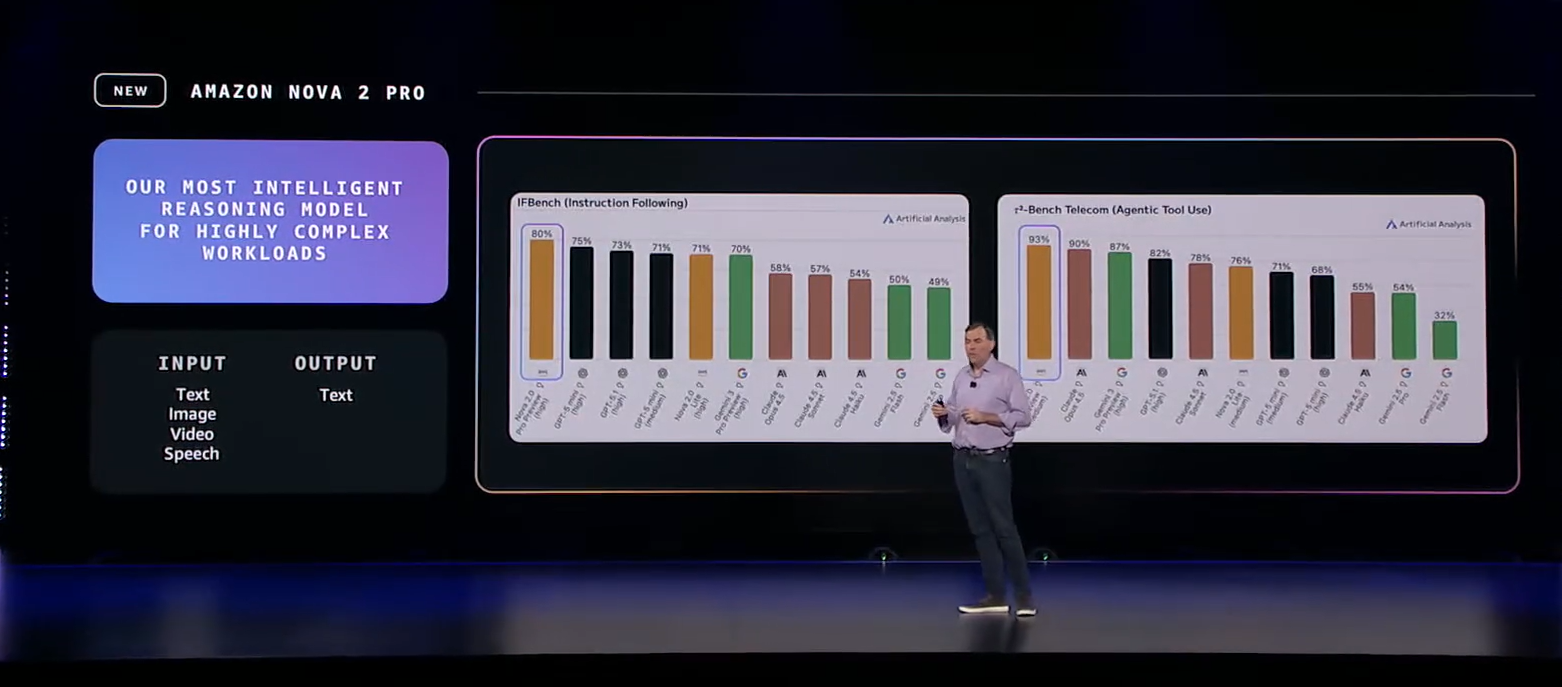

- AWS launches Amazon Nova Forge, Nova 2 Omni

- AWS launches AI factory service, Trainium 3 with Trainium 4 on deck

- AWS Transform aims for custom code, enterprise tech debt

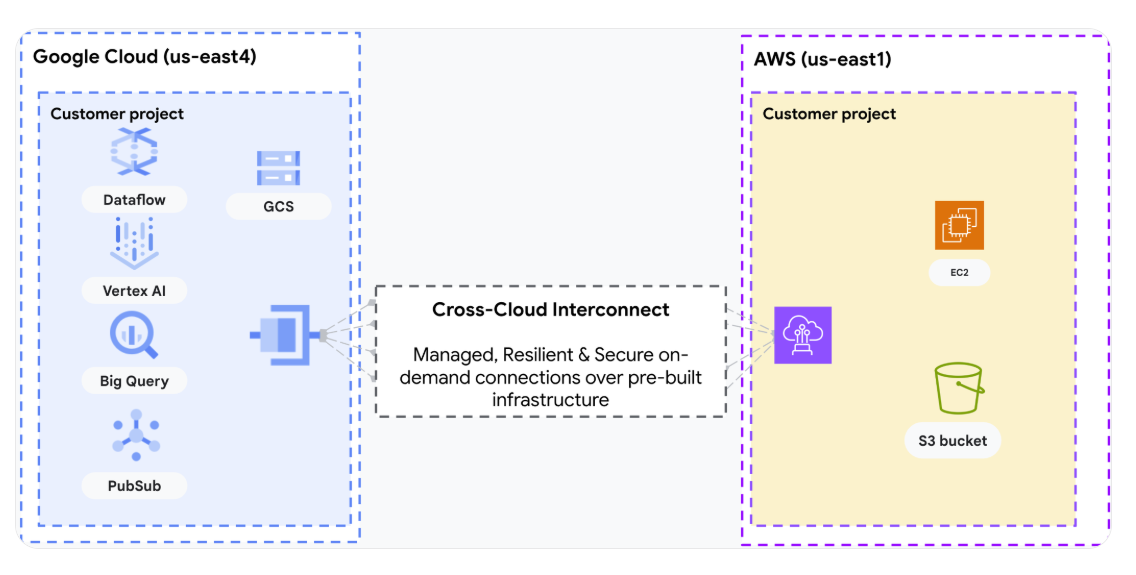

- AWS, Google Cloud engineer interconnect between their clouds

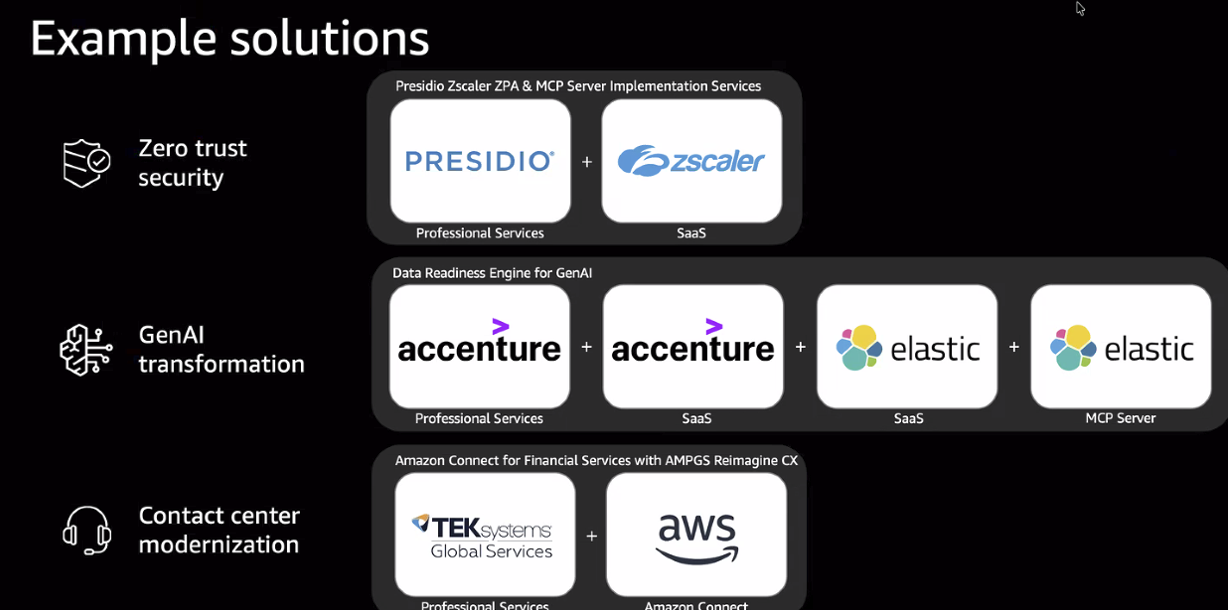

- AWS Marketplace adds solutions-based offers, Agent Mode

Customers using Amazon Bedrock AgentCore include PGA Tour, which deployed an automated content system for player coverage, Salesforce's Heroku, which built an app development agent called Heroku Vibes, and Grupo Elfa, which deployed three agents for price quote processing. AWS CEO Matt Garman also noted Nasdaq and Bristol Myers Squibb as AgentCore customers.

Garman said the addition of policy and evaluation can free up innovation. "Most customers feel that they're blocked from being able to deploy agents to their most valuable, critical use cases today," said Garman.

Mark Roy, Tech Lead, Agentic AI at AWS, said CIOs are racing to deploy AI agents, but want insurance in the form of governance and evaluations to scale them.

Here's a look at the policy and evaluation additions to Amazon Bedrock AgentCore.

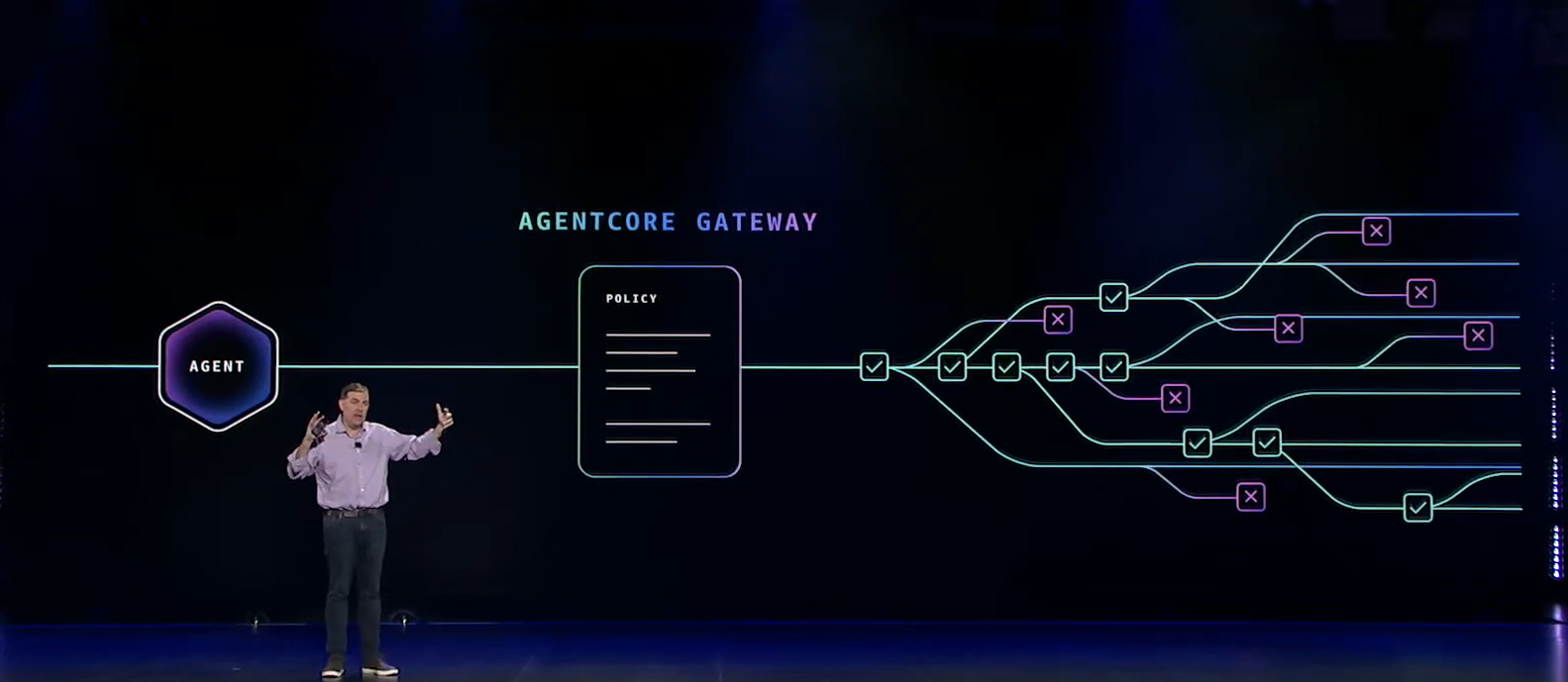

Policy in Amazon Bedrock AgentCore is designed to ensure AI agents stay within defined boundaries without slowing them down. The policy system is integrated with AgentCore Gateway to intercept every call before execution.

Amazon Bedrock AgentCore Policy does the following:

- Gives you control over what agents can access, what actions are performed and under what conditions.

- Processes thousands of requests per second while maintaining operational speed.

- Creates policies from natural language and aligns them with audit rules without custom code.

- Defines clear policies once and applies them across the enterprise.
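To make the interception idea concrete, here is a minimal Python sketch of a gate that checks every tool call against a set of rules before the tool runs. It is not the AgentCore Policy API: the names `ToolCall`, `Rule`, `PolicyGate` and `PolicyDenied`, the `issue_refund` tool and the refund cap of 100 are all hypothetical, chosen only to illustrate "what actions are performed and under what conditions."

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class ToolCall:
    """One action an agent wants to take (hypothetical shape)."""
    agent: str
    tool: str
    args: Dict[str, Any] = field(default_factory=dict)


@dataclass
class Rule:
    """Allows calls to `tool` only when `condition` returns True."""
    tool: str
    condition: Callable[[ToolCall], bool]
    description: str


class PolicyDenied(Exception):
    """Raised when a call is blocked before execution."""


class PolicyGate:
    """Sits between the agent and its tools and checks each call first."""

    def __init__(self, rules: List[Rule], tools: Dict[str, Callable[..., Any]]):
        self.rules = rules
        self.tools = tools

    def invoke(self, call: ToolCall) -> Any:
        matching = [r for r in self.rules if r.tool == call.tool]
        if not matching:
            # Default deny: a tool with no rule is never executed.
            raise PolicyDenied(f"no policy permits tool '{call.tool}'")
        for rule in matching:
            if not rule.condition(call):
                raise PolicyDenied(f"blocked by rule: {rule.description}")
        # Only reached when every matching rule allowed the call.
        return self.tools[call.tool](**call.args)


def issue_refund(order_id: str, amount: float) -> str:
    # Hypothetical tool; a real one would call a payments system.
    return f"refunded {amount:.2f} on {order_id}"


gate = PolicyGate(
    rules=[
        Rule(
            tool="issue_refund",
            condition=lambda c: c.args["amount"] <= 100,  # assumed business limit
            description="refunds are capped at 100",
        )
    ],
    tools={"issue_refund": issue_refund},
)
```

In this sketch, a refund of 25 passes through the gate and runs, while a refund of 500 or a call to any unlisted tool raises `PolicyDenied` before anything executes. The default-deny choice is a design assumption of the example, not something AWS has described.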

"You need to have visibility into each step of the agent action, and also stop unsafe actions before they happen," explained Vivek Singh, AgentCore Senior Product Manager at AWS. "This includes robust observability, so if something goes wrong, you can pinpoint exactly into what steps the agent took and how the agent came to that conclusion. You also need the ability to set some of your business policies in real time."

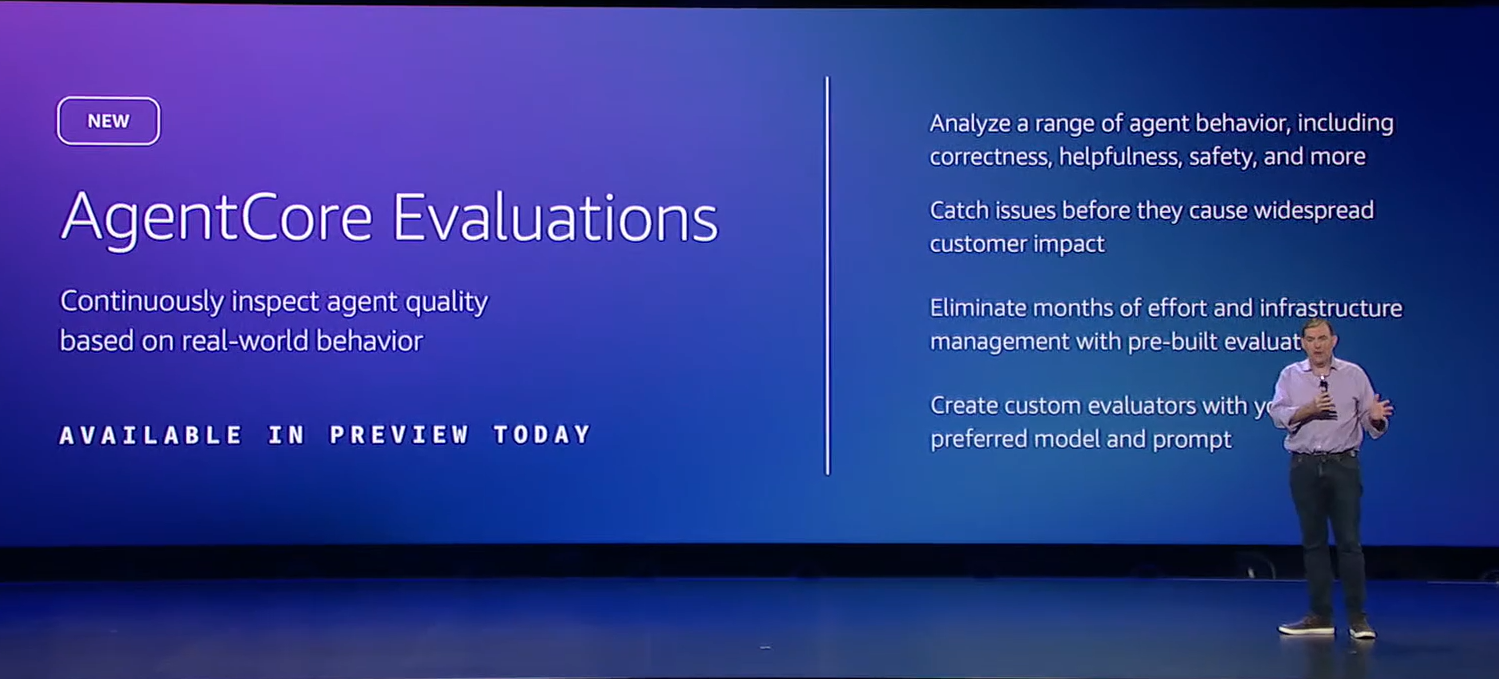

Amazon Bedrock AgentCore Evaluations adds a set of 13 built-in evaluators to assess AI agent behavior for correctness, helpfulness and safety. The evaluators enable developers to deploy reliable agents with real-time quality monitoring and automated risk assessment.

Enterprises can also create custom evaluators for quality assessments using preferred prompts and models. The evaluations are also integrated into AgentCore Observability via Amazon CloudWatch for unified monitoring.

According to AWS, AgentCore Evaluations monitors real-world behavior of AI agents in production. An LLM is used to judge responses for each metric and then write explanations. Evaluations are also available on demand so developers can validate AI agents before production and then ensure smooth upgrades.
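The LLM-as-judge pattern described here can be sketched in a few lines of Python. This is an illustration of the general technique, not the AgentCore Evaluations API: `Verdict`, `evaluate`, the prompt wording and the `score | explanation` reply format are assumptions, and `stub_judge` stands in for a real model call so the example runs on its own.

```python
from dataclasses import dataclass
from typing import Callable

# A judge is any function that takes a prompt and returns the model's text reply.
Judge = Callable[[str], str]


@dataclass
class Verdict:
    metric: str
    score: float       # clamped to the range 0.0 to 1.0
    explanation: str   # the judge's written reasoning


def evaluate(metric: str, question: str, answer: str, judge: Judge) -> Verdict:
    """Ask the judge to score one agent response on one metric."""
    prompt = (
        f"Rate the answer for {metric} on a scale from 0 to 1.\n"
        f"Question: {question}\n"
        f"Answer: {answer}\n"
        "Reply as: <score> | <one-sentence explanation>"
    )
    reply = judge(prompt)
    # Split on the first '|' into the score and the explanation.
    score_text, _, explanation = reply.partition("|")
    score = min(1.0, max(0.0, float(score_text.strip())))
    return Verdict(metric, score, explanation.strip())


def stub_judge(prompt: str) -> str:
    # Stand-in for a real LLM call; always returns the same verdict.
    return "0.9 | The answer addresses the question directly."


verdicts = [
    evaluate(metric, "What is the refund window?", "30 days from delivery.", stub_judge)
    for metric in ("correctness", "helpfulness")
]
```

Swapping `stub_judge` for a function that calls a hosted model would turn this into a working custom evaluator; a production version would also need to handle replies that do not follow the requested format.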

Amazon Bedrock AgentCore Evaluations is available in preview.

With the addition of Episodic Functionality to AgentCore Memory, AgentCore can enable agents to learn from successes and failures, adapt and build knowledge over time.

Along with the AgentCore updates, AWS also built out Strands Agents, an open-source Python software development kit announced in May. Strands Agents is aimed at building agents in a few lines of code, with native integration with Model Context Protocol servers and AWS services. It's designed for rapid development.

AWS announced the following for Strands Agents:

- Strands Agents SDK for TypeScript so developers can choose between TypeScript or Python and run in client applications.

- Strands Agents SDK for edge devices so developers can run agents using local models.

- AWS also said it is experimenting with steering tools in Strands Agents to make them more context aware without front loading all agent instructions into a single prompt. The idea is to use steering handlers to make agents more flexible while reducing token costs.

- Strands Agents Evaluations, an evaluation framework to test agent quality, interactions and goal completion.

Constellation Research analyst Holger Mueller said:

"AWS is continuing its systematic build out of AgentCore with the new capabilities announced today. And that is key for CxOs because for advanced AI adopters in 2026 it is going to be the battle of the AI frameworks. Who will enable their enterprise to build AI powered Next Generations Applications that help automate and lower costs?"
