Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


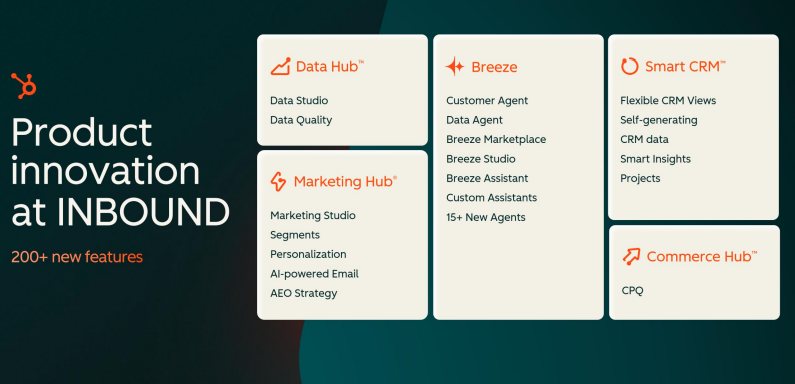

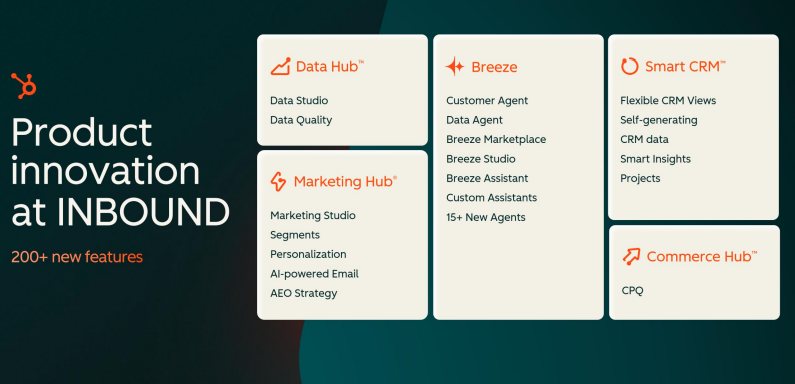

HubSpot is betting that a series of new services, along with a hefty dose of AI and contextual data, will differentiate the company. The lineup includes Data Hub, new Breeze Agents, Breeze Marketplace and Studio, CPQ in Commerce Hub, and Loop, a playbook for inbound marketers.

At its Inbound 2025 conference, HubSpot rolled out more than 200 updates to its platform, all designed to build hybrid teams of AI and humans.

Here's a look.

- Data Hub brings together data from external sources and combines them with AI tools to connect, clean and act on data.

- Smart CRM gets updates to bring visualization, conversational and intent enrichment tools and insights.

- Marketing Hub gets AI-driven segmentation, personalized messages based on CRM data and AI engine optimization blueprints.

- CPQ in Commerce Hub adds AI-powered quote creation and an agent to close deals.

- More than 15 Breeze Agents including Data Agent, Customer Agent, Prospecting Agent and others designed to leverage context and unified data stores and connect to various models including Google Cloud Gemini and OpenAI ChatGPT. HubSpot is built on AWS so would have access to models in Amazon Bedrock too. HubSpot launched its first Breeze Agents last year. See: HubSpot launches Breeze AI agents, Breeze Intelligence for data enrichment

- Loop, which is an AI-driven playbook that aims to reinvent the marketing funnel. The playbook, which leverages various HubSpot services, revolves around expressing tastes, tone and point of view, tailoring messaging with AI, amplifying content with AI engine optimization, and evolving and iterating.

At HubSpot's investor day, CEO Yamini Rangan laid out the strategy.

Research: Martin Schneider on HubSpot’s strengths and weaknesses

"We are transforming our platform to be an AI-powered customer platform. We have rich customer context, which is our platform advantage. We are reimagining marketing beyond search with a new playbook, products that support it and an ecosystem behind it. And we are scaling upmarket and down-market to drive durable growth. And we are transforming as a company to be AI first," said Rangan.

Also see: Constellation Research’s Liz Miller posted live from the keynote at HubSpot’s Inbound conference. Martin Schneider highlighted the news in a LinkedIn video.

HubSpot's positioning is worth noting given Salesforce's Dreamforce conference will feature similar verbiage with Agentforce.

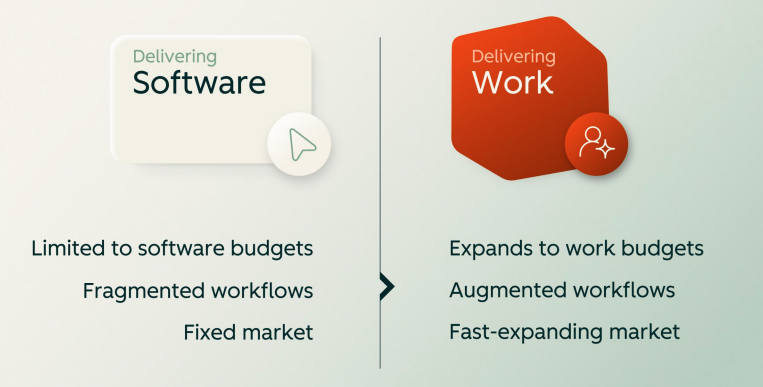

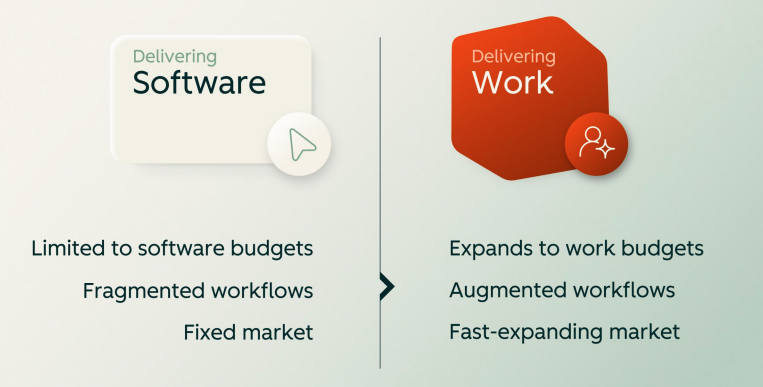

Rangan added that the shift for enterprise software vendors is profound. She said:

"Customers today expect us to resolve tickets, write blogs, schedule meetings, just like they would a coworker. So customers are expecting not just software that does the work for them, but actually does help them get more accomplished to grow.

That's a big shift, and that unlocks a huge opportunity for HubSpot. And when we look at this opportunity, we are moving from delivering software to delivering work. We're no longer limited by the software budgets. We are now tapping into the work budgets."

Moving upstream and downstream

Rangan laid out a heady goal for HubSpot: become the No. 1 AI-powered customer platform for scaling companies.

HubSpot made its name by building for SMBs, but is increasingly moving upstream. AI gives HubSpot a shot at larger enterprises.

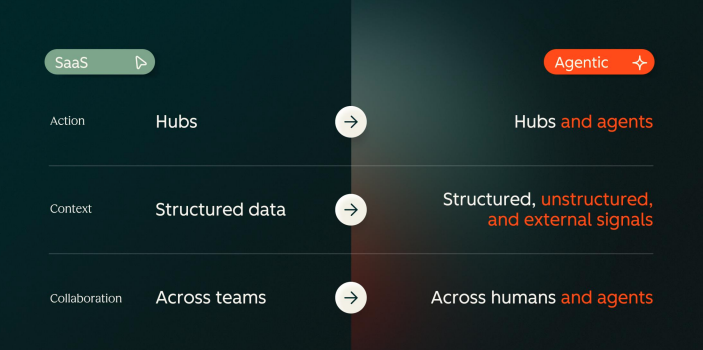

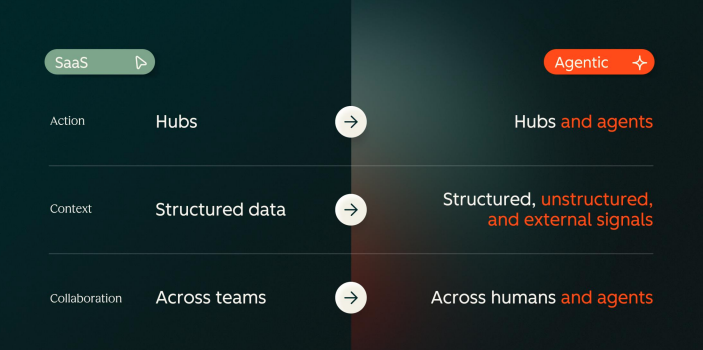

The company previously built context for customers from structured data such as company contacts, tickets, and deals. Now AI brings structured data, unstructured conversations and external intent signals into the mix, explained Rangan.

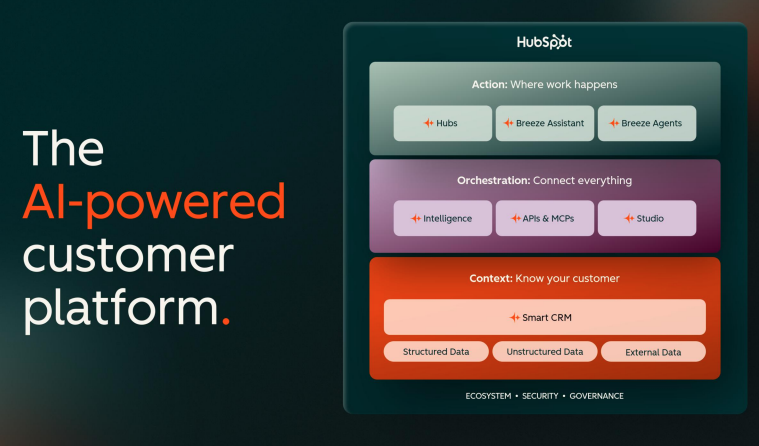

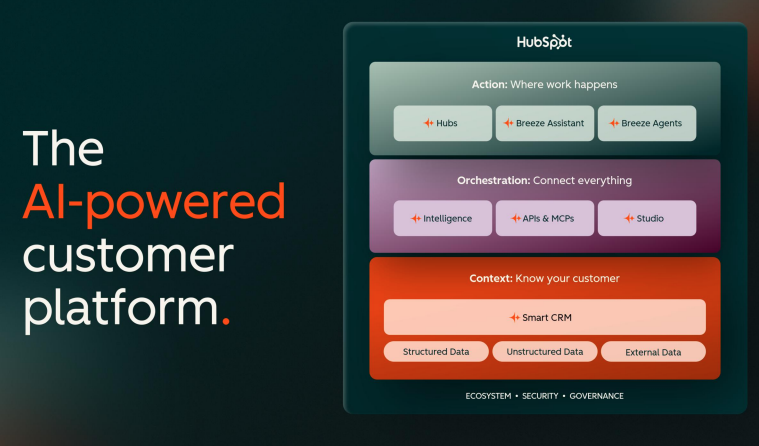

Rangan said HubSpot is gunning for three layers.

- A context layer that knows your customer.

- An action layer to do work.

- And an orchestration layer that connects everything together.

For AI to truly work, Rangan said all three layers have to be interconnected via APIs, Model Context Protocol (MCP) and connectors. "Now you put all of this together, this is our AI-powered customer platform, delivering value for customers," said Rangan.
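The three layers can be pictured with a minimal sketch. Everything here is hypothetical and for illustration only: the class names, the in-memory store and the `draft_follow_up` action are assumptions, not HubSpot's architecture or API. The sketch only shows how an orchestration layer might fetch context and hand it to an action.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ContextLayer:
    """Hypothetical in-memory store of what is known about each customer."""
    records: Dict[str, dict] = field(default_factory=dict)

    def lookup(self, customer_id: str) -> dict:
        # Unknown customers yield an empty context rather than an error.
        return self.records.get(customer_id, {})


@dataclass
class ActionLayer:
    """Hypothetical registry of units of work an agent can perform."""
    actions: Dict[str, Callable[[dict], str]] = field(default_factory=dict)

    def run(self, name: str, context: dict) -> str:
        return self.actions[name](context)


@dataclass
class Orchestrator:
    """Connects the other two layers: fetch context, then run an action on it."""
    context: ContextLayer
    actions: ActionLayer

    def handle(self, customer_id: str, action_name: str) -> str:
        ctx = self.context.lookup(customer_id)
        return self.actions.run(action_name, ctx)


def draft_follow_up(ctx: dict) -> str:
    # Toy action: personalize a message from whatever context is available.
    name = ctx.get("name", "there")
    return f"Hi {name}, following up on your open ticket."


orchestrator = Orchestrator(
    context=ContextLayer({"c-1": {"name": "Ada"}}),
    actions=ActionLayer({"draft_follow_up": draft_follow_up}),
)
print(orchestrator.handle("c-1", "draft_follow_up"))
```

In this toy version the action still runs when no context exists, but the output is generic, which is the point Rangan makes about context being the differentiator.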

HubSpot said customers are adopting the AI tools and the strategies behind them. The durable advantage will be HubSpot's ability to provide context to customers.

"Data is what AI needs to do work, not just guess about the work to be done. And HubSpot has 19 years, 270,000 customers worth of those touch points," said Rangan. "Every campaign launched, every e-mail sent, every deal closed, every CPQ transaction across the entire customer journey. That is the data that AI needs in order to do great work. And we also need the user context. So AI knows who is asking and what permissions they can take based on the role."

Rangan said HubSpot's approach is resonating with larger enterprises as a way to consolidate legacy CRM systems and deliver better total cost of ownership.

HubSpot's focus on delivering value and use cases before monetization is also helping. HubSpot sells hubs, seats and credits that are primarily used for AI agents.

Key points about monetization:

- Persona seats provide access to hubs like Sales Hub and Service Hub. As companies grow they buy more hubs and seats.

- Core seats are sold for platform access and the ability to create custom objects and workflows. Breeze Assistant and data and contact enrichment are included in core seats.

- Credits revolve around usage based pricing for AI agent actions and other usage on the platform.
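The hybrid model above, seats plus usage-based credits, can be sketched as simple arithmetic. The prices and quantities below are placeholders invented for illustration, not HubSpot's actual pricing, and the `Plan` and `monthly_bill` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plan:
    # All prices are made-up placeholders, not HubSpot list prices.
    persona_seat_price: float  # per persona seat per month
    core_seat_price: float     # per core seat per month
    credit_price: float        # per credit consumed by agent actions


def monthly_bill(plan: Plan, persona_seats: int, core_seats: int, credits_used: int) -> float:
    """Sum the fixed seat charges and the usage-based credit charge."""
    seat_cost = (
        persona_seats * plan.persona_seat_price
        + core_seats * plan.core_seat_price
    )
    usage_cost = credits_used * plan.credit_price
    return seat_cost + usage_cost


example_plan = Plan(persona_seat_price=100.0, core_seat_price=50.0, credit_price=0.01)
bill = monthly_bill(example_plan, persona_seats=5, core_seats=10, credits_used=4000)
print(f"Monthly bill: ${bill:,.2f}")
```

The seat portion is predictable from headcount, while the credit portion scales with how much work the agents do.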

Rangan said the plan for HubSpot is to scale customers upmarket and down-market. "Going upmarket has been a multiyear focus for us. We want to build powerful tools that are super easy to use, and we have had consistent set of innovation, opening the markets for sensitive data, journey orchestration, multi-account management, new global data centers. All of this innovation proves that we scale with businesses," said Rangan.

HubSpot will also rely on integrators and partners.

The down-market strategy is to drive volume, deliver volume and grow wallet share with a freemium model designed for small companies. "When they get into a Starter or Pro, we deliver compelling value. We become that customer operating system that customers depend on. And when they grow, we grow," said Rangan.

Few enterprise software vendors have been able to move upmarket and down-market at the same time. Salesforce CEO Marc Benioff recently noted that the company is also looking for growth from midmarket enterprises as well as the giant companies.

HubSpot CFO Kathryn Bueker said the company can cater to multiple enterprises to build durable and efficient growth.

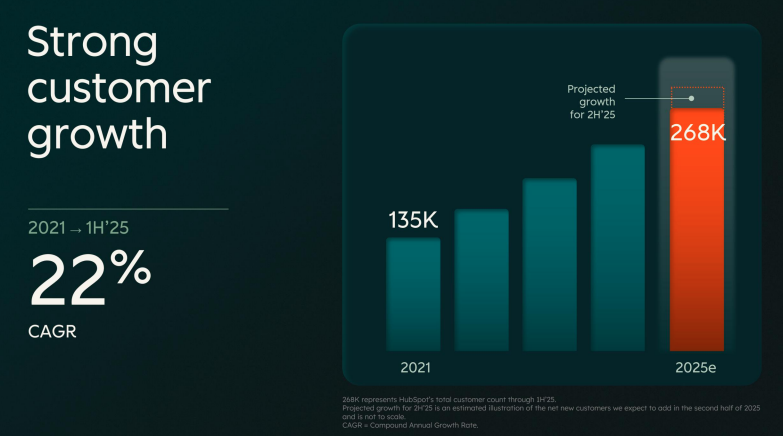

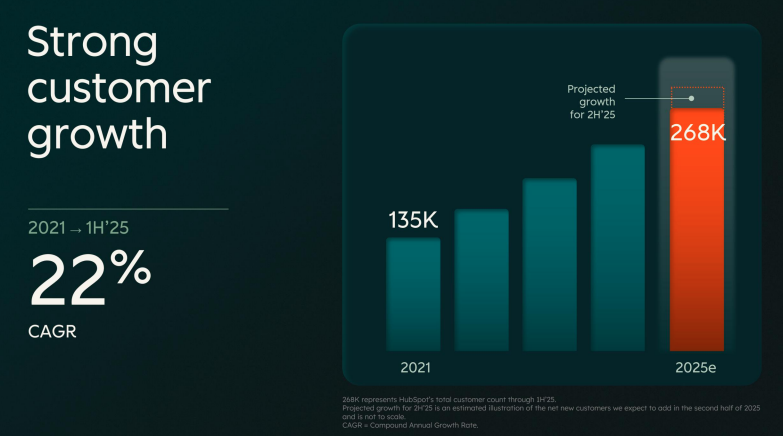

Since 2021, HubSpot has delivered a compound annual revenue growth rate of 24%. HubSpot is projecting 2025 revenue of $3.1 billion, up 17% in constant currency.
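As a rough check on how those two figures relate, the compound annual growth formula can be run in reverse. This is a sketch under a stated assumption: the article does not say which years the 24% rate spans, so the four compounding periods (2021 to 2025) used below are a guess, and the implied base is illustrative rather than a reported number.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1


def implied_start(end: float, rate: float, years: int) -> float:
    """Starting value that would grow to `end` at `rate` over `years`."""
    return end / (1 + rate) ** years


projected_2025_bn = 3.1   # from the article, in billions of dollars
growth_rate = 0.24        # from the article
periods = 4               # ASSUMPTION: 2021 through 2025

base = implied_start(projected_2025_bn, growth_rate, periods)
print(f"Implied 2021 revenue: ${base:.2f} billion")
```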

"We take a platform-oriented approach to address our market opportunity. And we believe that our platform is the key driver of our upmarket and down-market momentum as well as our strong customer retention," said Bueker. "New and existing customers are consolidating their go-to-market technology stack on HubSpot."

Starter customers are about half of HubSpot's total customer base.

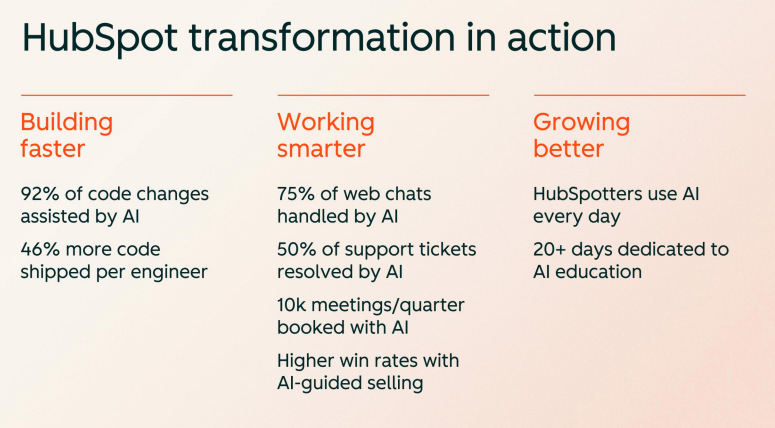

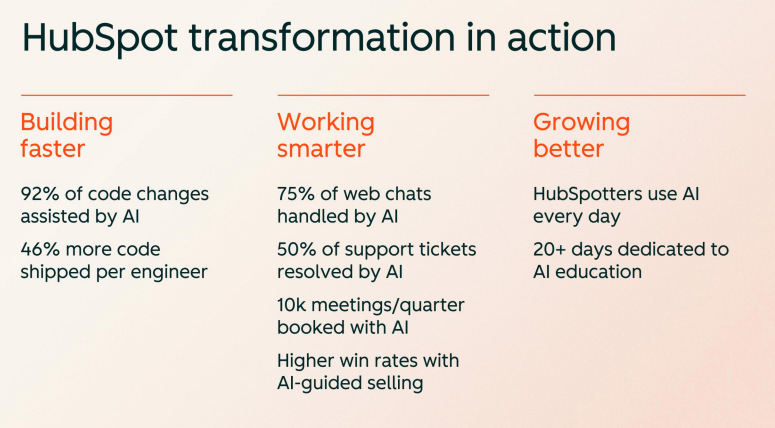

Leveraging AI internally

HubSpot executives noted multiple ways that the company is leveraging AI for customer support and sales and marketing.

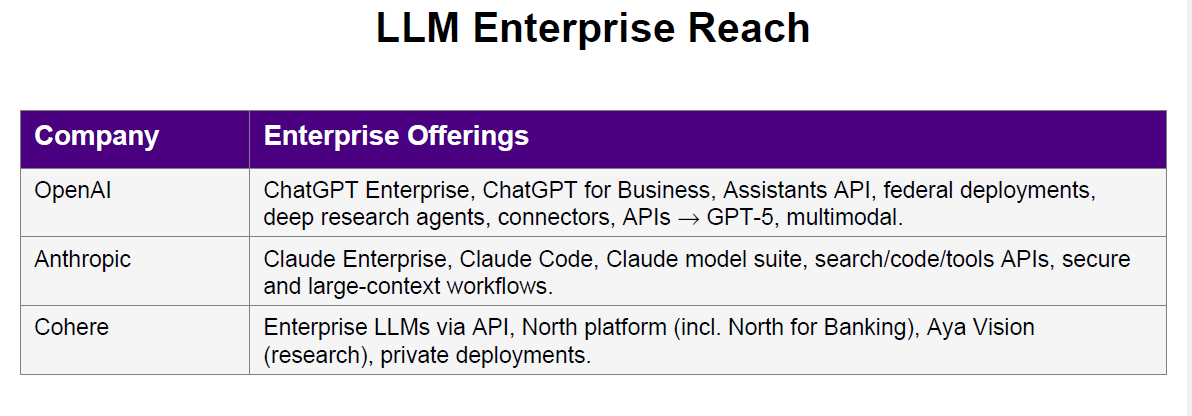

The more interesting point was made by HubSpot CTO Dharmesh Shah, who confirmed the good-enough LLM trend that other enterprises are noting.

Shah was asked about inference cost to provide AI agents to HubSpot customers. Shah said:

"We get very excited about the newer models and deep reasoning and deep research and all these kind of high-end features that are in Opus and GPT 5 Pro. But e-mail is a good example. The baseline capability that we need for a vast majority of go-to-market use cases are handled by something like a GPT 3.5 or a 4.0. So we don't need the frontier model capability for a vast majority of these use cases. And the cost of like GPT 4.0 has gone down 250x from the time it was released. That slope still goes down. Yes, the advanced models are getting more expensive, but most of the models we need for most of the work that we do, including e-mail, is not those frontier models."

Bueker noted that HubSpot hasn't seen any material cost of goods sold impact from use cases like personalizing emails. There's a team at HubSpot solely focused on the drivers of AI costs and optimization of the platform.
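Shah's point about good-enough models can be pictured as a routing decision plus a cost calculation. The sketch below is hypothetical: the task names, the tier labels and the starting price are invented for illustration and do not describe how HubSpot selects models. Only the 250x factor comes from Shah's remarks.

```python
# Hypothetical set of routine go-to-market tasks that a baseline model can handle.
ROUTINE_TASKS = {"email_personalization", "meeting_scheduling", "ticket_summary"}


def pick_model_tier(task_type: str) -> str:
    """Send routine work to a cheaper baseline tier, everything else to frontier."""
    return "baseline" if task_type in ROUTINE_TASKS else "frontier"


def price_after_decline(original_price: float, decline_factor: float) -> float:
    """Price per unit of work after costs fall by the given factor."""
    return original_price / decline_factor


print(pick_model_tier("email_personalization"))
print(pick_model_tier("deep_research"))

# Placeholder starting price of 10.0 units; the 250x decline is from the quote.
print(price_after_decline(10.0, 250))
```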

In addition, HubSpot is using AI to drive productivity and is putting those savings toward higher R&D spending.

"For sales and marketing, AI tooling and improved rep productivity upmarket, along with better conversion efficiency at the low end will be key drivers of S&M leverage. We will realize modest additional gains in G&A by leaning into AI and automation. As I've said in the past, we may see a bit more or less leverage in any given year depending on the opportunities we see, but we will stay on track to hit our interim and long-term margin targets," said Bueker.

The looming SaaS vs agentic question

No enterprise software vendor these days can get away from the question about whether agentic AI will eat software.

Benioff has weighed in with his own view, saying SaaS won't be eaten by LLMs.

Rangan said SaaS may change, but software isn't going anywhere.

"SaaS effectively was a kind of deployment and business model kind of transformation, not that big of a like a technology transformation. What endures is software. Software is a high margin, high leverage, you can put investment and solve a bunch of customer problems. We have the largest opportunity as an industry in software than we've ever had before. The business models will change. I think SaaS in its purest form is unlikely to remain the way it is right now. That's why we see the hybrid pricing models.

But I'm just super bullish about the opportunity this creates because now we can solve problems with software that we were never able to do before. Before we built tools for humans to use, now we can actually do the work. The value that software is going to produce over the coming decades is like orders of magnitude higher. The TAMs are just going to be bigger, and we're just starting to see the early innings of that game. But I don't think software is dead. SaaS as a pure business model might transform over time, but software as a way to make money and put capital to work is going to be amazing."
