CoreWeave's Q2: Takeaways on AI training, inference demand

CoreWeave is building its AI infrastructure so it can easily switch back and forth between training and inference workloads as it navigates strong demand and supply constraints.

The AI infrastructure provider reported a second-quarter net loss of $290.51 million, or 60 cents a share, on revenue of $1.21 billion, up 207% from a year ago. Wall Street was looking for a loss of 49 cents a share on revenue of $1.08 billion.

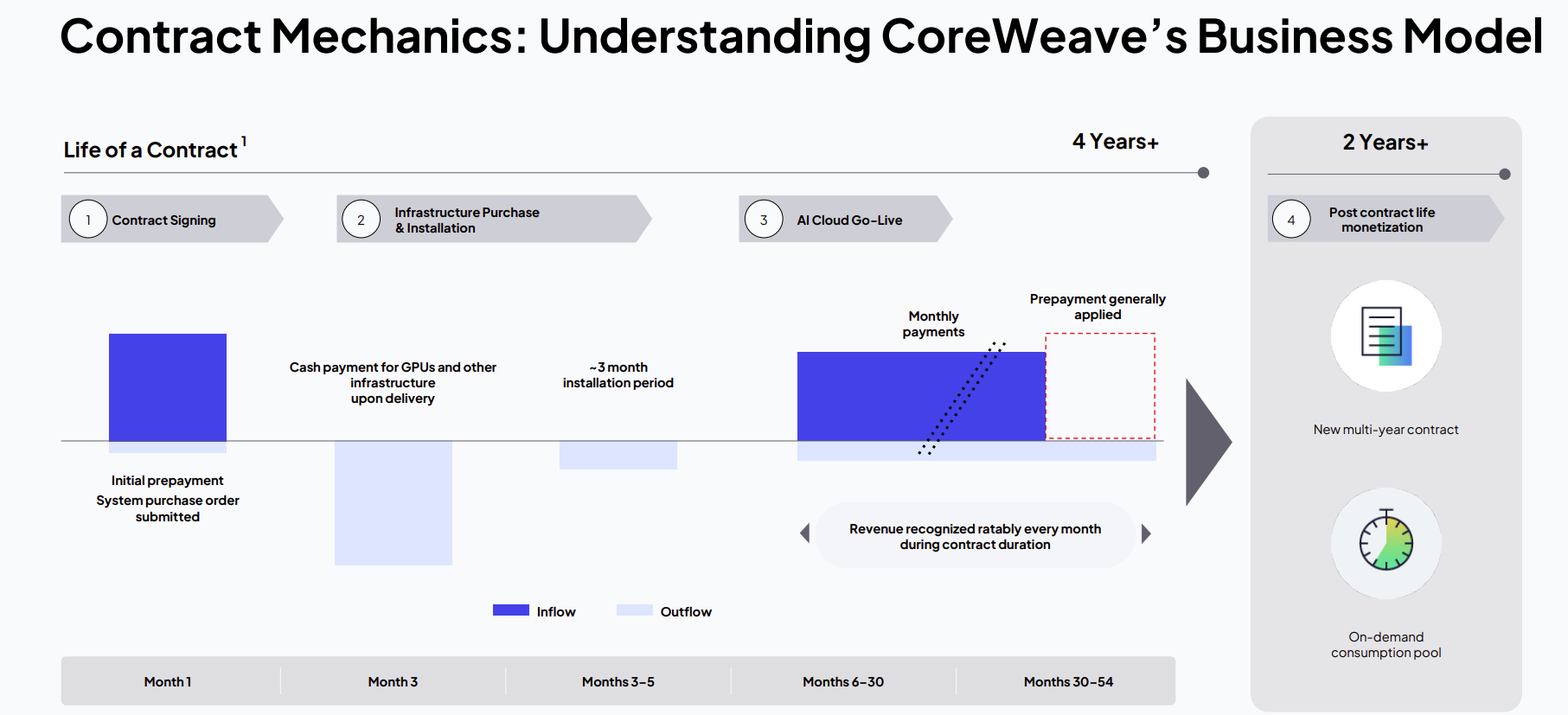

The company exited the second quarter with a revenue backlog of $30.1 billion, double the backlog from a year ago. CoreWeave landed a $4 billion expansion deal with OpenAI in addition to a previously announced $11.9 billion contract.

CoreWeave raised its 2025 revenue guidance to $5.15 billion to $5.35 billion. "We ended the quarter with nearly 470 megawatts of active power, and we increased our total contracted power by approximately 600 megawatts to 2.2 gigawatts. We are aggressively expanding our footprint on the back of intensifying demand signals from our customers," said CoreWeave CEO Michael Intrator.

Here's a look at the takeaways.

Overall demand. CoreWeave signed expansion contracts with both of its hyperscale customers in the past eight weeks. "Our pipeline remains robust, growing and increasingly diverse, driven by a full range of customers, from media and entertainment to healthcare to finance to industrials and everything in between," said Intrator. "The proliferation of AI capabilities into new use cases and industries is driving increased demand for our specialized cloud infrastructure and services."

Financial services demand. CoreWeave said it inked deals with banking giants Morgan Stanley and Goldman Sachs for proprietary trading.

Healthcare scaling AI. "We're also seeing significant growth from healthcare and life science verticals, and are proud of our partnership with customers like Hippocratic AI who built safe and secure AI agents to enable better healthcare outcomes," said Intrator.

Training and inference. Intrator said CoreWeave is working with customers to easily transition between training and inference. "We're helping these customers redefine how data is consumed and utilized globally as their critical innovation partner, and we are being rewarded for our efforts," said Intrator. "As they shift additional spend to our platform, we continue to execute and invest aggressively in our platform, up and down the stack to deliver the bleeding edge AI cloud services, performance and reliability that our customers require to power their AI innovations."

Intrator said:

"We really build our infrastructure to be fungible, to be able to be moved back and forth seamlessly between training and inference. Our intention is to build AI infrastructure, not training infrastructure, not inference infrastructure."

Storage workloads. CoreWeave said it is gaining storage share for AI-centric workloads. "Customers are shipping petabytes of their core storage to CoreWeave in the form of multiyear contracts. We are providing support for additional third-party storage systems," said Intrator.

Bleeding-edge expansion. Intrator said customers are focused on the latest hardware, namely Nvidia systems, to remain on the bleeding edge. "Clients are purchasing hardware that is appropriately state of the art for their use case. And as new hardware comes out, as new hardware architectures are released, they tend to come back in and purchase the same top-tier infrastructure at their next renewal," said Intrator.

Power and supply constraints. Intrator said the AI infrastructure market is "structurally constrained." "It is a market that is really working hard to try and balance, and there are fundamental constraints from the powered shell through the grid to the supply chains that exist within the GPUs to the mid-voltage transformers," said Intrator. "There are a lot of different pieces that are constrained, but ultimately the most significant challenge right now is accessing powered shells."