Why Llama 2, open-source models change the LLM game

Meta recently open-sourced Llama 2 and made it free for research and commercial uses. The move quickly put Llama 2 on the open-source leaderboard for large language models (LLMs) and spurred enterprises to give it a spin.

While the move is notable, enterprises have a bevy of nuances to weigh. Here's a look at the moving parts as enterprises put Llama 2 through its paces and craft generative AI use cases. This research note is based in part on a transcript of a CRTV discussion between Constellation Research's Larry Dignan and Andy Thurai.

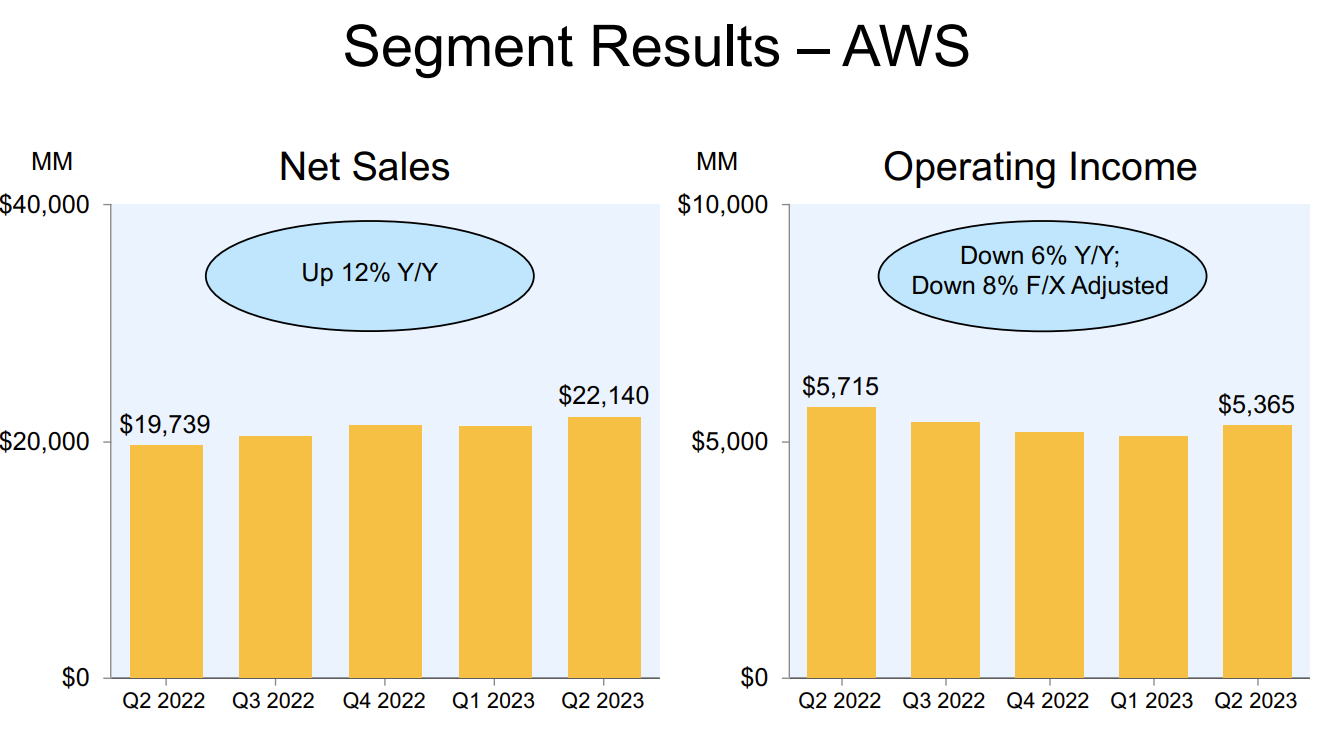

Why Llama 2 matters. Thurai said OpenAI captured the imagination of the enterprise, but there's a need for open-source large language models (LLMs). "Everybody is going crazy about OpenAI, but what people don't realize is that after your proof of concept there is a usage model that adds up," said Thurai. "It can get quite expensive, so companies are looking for alternatives with open-source models."

Thurai said:

"Meta's offering is the first that is fully open-sourced and free to use commercially, truly democratizing AI foundational models. It is easier to retrain and fine-tune these models at a much cheaper cost than massive LLMs. Meta also released the code and the training data set freely. And wider availability can make this popular sooner. It is available on Azure (through Azure AI model catalog), on Hugging Face, AWS (via Amazon Sagemaker Jumpstart), and even Alibaba Cloud."

Llama 2's sizes. Llama 2 is also interesting for enterprises because it can be used for small language models or specialized models, said Thurai. Llama 2 also offers more parameters and sizes. "There are three primary variations: 7 billion parameters, 13 billion and 70 billion," explained Thurai. "These are comparatively much smaller models than ChatGPT, but more accurate." Those sizes quickly put Llama 2 on the Hugging Face leaderboards.
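To put those sizes in perspective, here is a minimal back-of-the-envelope sketch of how much memory the model weights alone would occupy at common numeric precisions. The parameter counts come from the figures above; the bytes-per-parameter values are standard for each precision, and the function and variable names are hypothetical. Real deployments need additional memory for activations, caches and, when fine-tuning, optimizer state, so treat these numbers as a floor rather than a sizing guide.

```python
# Rough weights-only memory estimate for the three Llama 2 sizes.
# Assumption: memory = parameter count x bytes per parameter; this ignores
# activations, KV cache, and optimizer state, which add to the real total.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}
LLAMA2_PARAMS = {"7B": 7e9, "13B": 13e9, "70B": 70e9}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return approximate memory for the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, count in LLAMA2_PARAMS.items():
        row = ", ".join(
            f"{precision}: {weight_memory_gb(count, precision):.1f} GB"
            for precision in BYTES_PER_PARAM
        )
        print(f"Llama 2 {name} -> {row}")
```

Under these assumptions, the 7 billion parameter model needs roughly 14 GB for its weights at 16-bit precision, while the 70 billion parameter model needs about 140 GB, which is one reason the smaller variants are attractive for enterprise pilots.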

Is Llama 2 open source? Thurai said there has been a good amount of debate about whether Meta's language model is open source. Usually, open-source software is available for anyone to use without restrictions. Llama 2 has conditions about commercial use, said Thurai. Meta's license requires companies with more than 700 million monthly active users to request a separate license. "The average enterprise isn't likely to hit that commercial use number so it's not much of a restriction. Meta put restrictions in because it doesn't want other companies to use Llama 2 against the company in a competitive situation," said Thurai.

Will enterprises use Llama 2? Thurai said enterprises will try Llama 2 for pilots and proof-of-concept projects, but usage beyond that point is debatable. "It's tough to say what will happen, you have to read the rules carefully to ensure Meta doesn't come after you for licensing infringement," said Thurai.

Using alternatives. Thurai said Llama 2 is worth exploring, but the Falcon LLM is also popular, as is MosaicML, which now falls under Databricks' umbrella. Open-source models should be in the enterprise mix, but it's worth knowing the vendors' business models. "The money is in helping you train your own models," said Thurai. For now, enterprises should try alternatives with an eye toward costs. After all, most companies won't have the resources to grab open-source models and train them with proprietary data, so managed model training will also be important.

What's next? Thurai said enterprises are exploring multiple LLM options and it's too early to tell where they'll land. Some enterprises will lean toward proprietary models with industry-specific use cases. Others will fine-tune open-source models. "There will be a lot of variations," said Thurai.

Thurai also said there will be a divergence between proprietary and open-source LLMs. "I expect to see more divergence and more closed garden models like GPT-4 and Bard grow and models like Falcon and Llama 2," he said.

Thurai added:

"I expect more LLMs to come to market. Some will try to go big, and some will try to go small. But in order to differentiate, I expect domain-specific small language models to appear that will be very specific to industry verticals.

I also expect a lot of smaller companies to provide the missing pieces to make usage of LLMs much better. I also expect more open-source models to hit the market soon."