Vice President and Principal Analyst

Constellation Research

Title: About Dion Hinchcliffe

Dion Hinchcliffe is an internationally recognized business strategist, bestselling author, enterprise architect, industry analyst, and noted keynote speaker. He is widely regarded as one of the most influential figures in digital strategy, the future of work, and enterprise IT. He works with the leadership teams of large enterprises as well as software vendors to raise the bar for the art-of-the-possible in their digital capabilities.

He is currently Vice President and Principal Analyst at Constellation Research. Dion is also currently an executive fellow at the Tuck School of Business Center for Digital Strategies. He is a globally recognized industry expert on the topics of digital transformation, digital workplace, enterprise collaboration, API…...


I'm currently in the front row of the analyst area at Moscone North for the opening Google Cloud Next '23 keynote. While our Larry Dignan has covered all the bases with the major announcements this week, with generative AI infused into nearly everything and much more besides, I'll be looking at all the announcements with an eye for what they mean for Chief Information Officers (CIOs).

Today AI and cloud are at the very top of the IT agenda. Like never before, enterprise data, intelligence, and massive compute applied in innovative ways will define the very future of our organizations. The announcements here at Google Cloud Next will tell the tale on whether Google Cloud is fully prepared to take their customers on that journey. Let's take a look at what they're saying.

If you wish, skip right to the CIO takeaways for Google Cloud Next '23.

The Google Cloud Next 2023 Keynotes: Blow-by-Blow

9:05am: Sundar Pichai arrives onstage, noting that Thomas Kurian will join him shortly, and talks about digital transformation. Fast-forward four years, he says, and Google Cloud is one of the top enterprise companies in the world.

Sundar speaks about companies wanting a cutting-edge partner in cloud, and now a strategic partner with AI. He says Google has been working for 7 years to have an AI-first approach. Backdrop currently shows the PaLM-E language model, their enterprise LLM.

Pichai describes using generative AI to reimagine the search experience, which they call Search Generative Experience, or SGE for short. You may even have had Google Search ask you to try SGE lately. He says feedback from Google Search users has been great so far.

Google has been working for years to help deploy AI at scale. He knows that CIOs are on the hot seat to deliver generative AI today. Now cites how General Motors is using conversational AI in OnStar. How HCA Healthcare is using Google's medical domain LLM, Med-PaLM, to provide better care. United States Steel is using generative AI to summarize and extract information from repair manuals.

Sundar notes that these early use cases only scratch the surface. He lauds their Vertex AI initiative, then talks about their large inventory of different foundation models to provide rich choice in how companies use AI to get work done.

He announces that one million people are now using Duet AI in Google Workspace. They have been making rapid changes to the product from the outset, improving it quickly using plenty of feedback.

Then Sundar announces that the general availability of Duet AI within Google Workspace is officially today, August 29th, 2023.

Now speaks about the important enterprise topic of Responsible AI, security and safety as well as their AI principles and best practices. Google is working hard to make sure users can more easily identify when generative AI content is being shown online, watermarked if needed, including invisible watermarks that don’t alter the content of images and video. Sundar says they are the first cloud provider to enable AI digital watermarking on images.

Bold and responsible is Sundar's message: “I truly believe we are embarking on a golden age of innovation,” building on his previous comment that AI is one of the biggest revolutions in our lifetimes.

9:17am: Now welcomes Thomas Kurian on stage. Kurian starts off, notably, thanking the many organizations working together with Google to bring Generative AI to market.

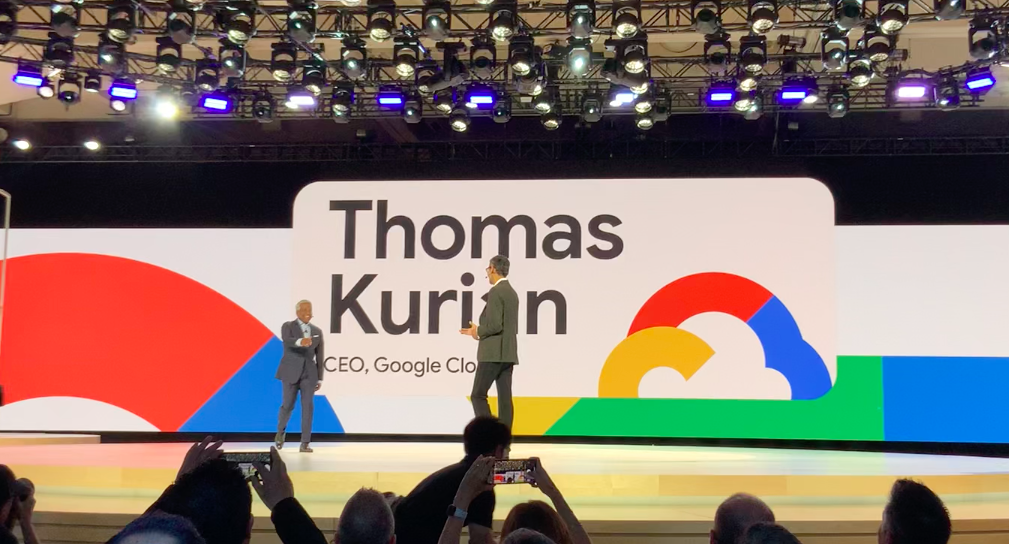

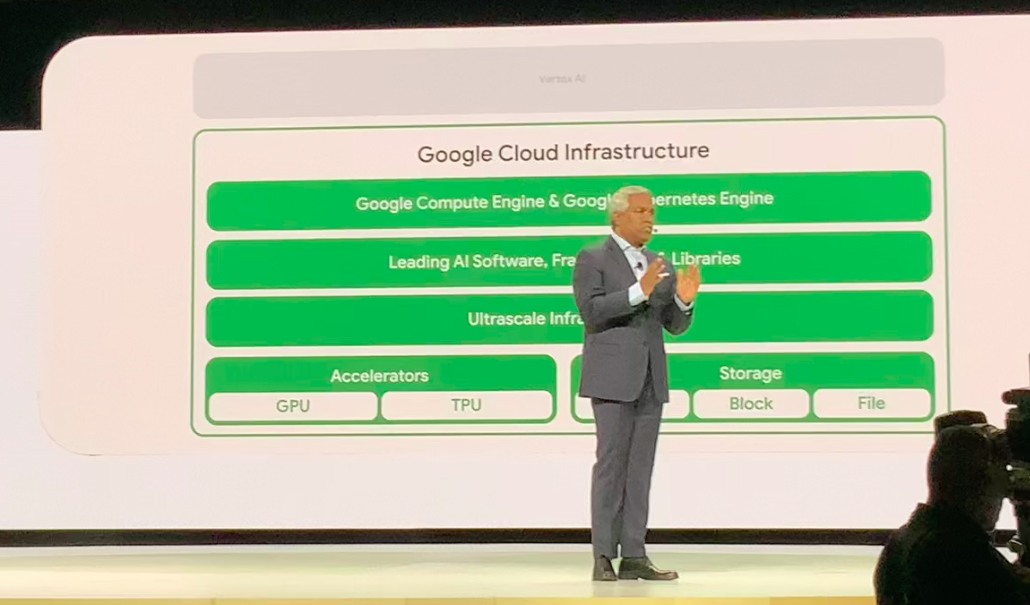

Says Google Cloud offers optimized environments for AI with Vertex AI. Everyone can innovate with AI, he says. And every org can succeed in adopting AI.

Vertex AI and Duet AI are the big focus areas of what Thomas is speaking on. Cites a number of larger companies using Google Cloud like Yahoo! and Mahindra.

Kurian cites the brand-new GKE Enterprise, which creates a new, more integrated container platform, making it even easier for organizations to adopt best practices and principles that Google has learned from running their global Web services. GKE Enterprise brings powerful new team management features. Kurian says it's now simpler for platform admins to provision fleet resources for multiple teams. He makes no mention of Anthos, but it's part of the same story.

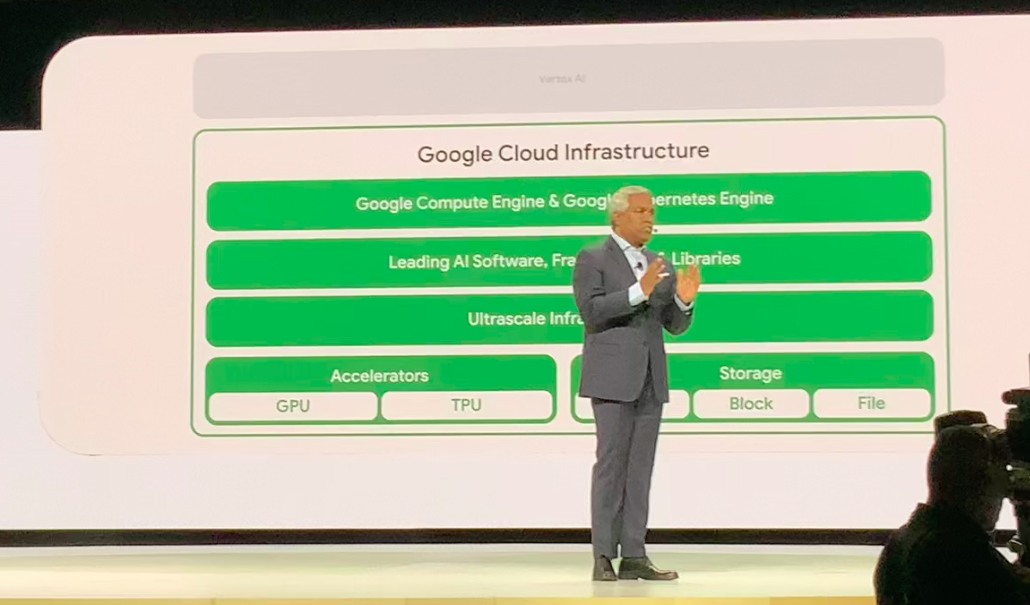

He then announces the availability of a very powerful new A3 GPU supercomputer instance powered by NVIDIA H100 GPUs, needed for today's largest AI workloads and for Google to stay at the high end of the generative AI game.

Then Kurian introduces the Titanium Tiered Offload Architecture. Offloads are dedicated hardware that perform essential behind-the-scenes security, networking, and storage functions that were previously performed by the server CPU. This allows the CPU to focus on maximizing performance for customer workloads.

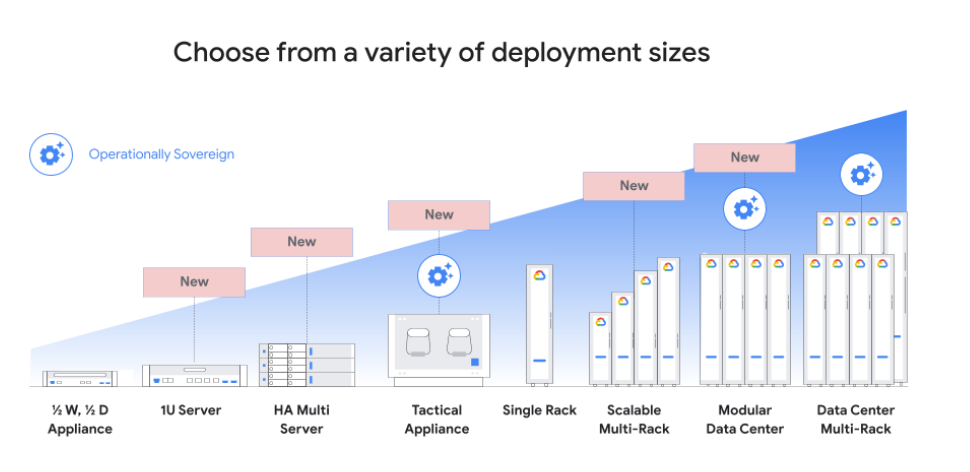

Then comes the 5th announcement, which is big private cloud news to support the on-prem cloud conversations that the industry has been having lately. The new solution is Google Distributed Cloud, for sovereign and regulatory needs (he doesn’t mention performance or control, but those are also notable use cases).

Finally, 6th, he tips the hat to Google's new Cross-Cloud Interconnect, for security and speed across “all clouds and SaaS” providers.

Now NVIDIA CEO, Jensen Huang, is up. He says they’re going to put their powerful AI supercomputer, DGX Cloud -- billed as an optimized multi-node AI training factory as-a-service -- right into Google Cloud.

My take: It's very smart to show off their very close relationship with NVIDIA. Kurian says they use NVIDIA GPUs to build their next-generation products. Kurian now asks Huang how NVIDIA is doing. Huang says “breakthrough, cutting-edge computer science. A whole new way of delivering computing. Reinventing software. Doing this with the highly-respected JAX and OpenXLA. To push the frontier of large language models. To save time, scale up, save money, and save energy. All required by cutting-edge computing.” He paints a compelling picture of an AI leader who knows where they are going, and so it's key for Google Cloud to be seen as a strategic partner.

Huang announces PAX-ML, building on top of JAX and OpenXLA, a labor of love and groundbreaking for configuring and running machine learning experiments on top of JAX.

Says large teams at NVIDIA are building the next generation of processors and infrastructure. NVIDIA is working hard on the DGX-GH200, a massive system that can handle a trillion parameters, based on a revolutionary new "superchip" called Grace Hopper.

“Google is a platforms company at the heart of it. Want to attract all the devs who love NVIDIA products to create new products,” says Kurian. It's clear that he wants to show how close NVIDIA is to Google Cloud, and the special nature of a relationship that brings some of NVIDIA's latest innovations to Google's cloud and AI stacks.

Now Kurian is talking about Vertex AI and is seeing “very rapid growth”, listing a whole swath of companies building with Vertex AI. Interesting how Vertex AI offers a robust and easy-to-select-from AI “model garden”. My take: Model choice is going to be a very big area for the large AI clouds to compete on and Google basically has the edge.

Then Kurian introduces the new PaLM 2 model, a major upgrade introduced in May. It has 3x the token input length, which is fast becoming another key metric that foundation models are fiercely competing on, as it determines the amount of input and output that can be processed by the model as a whole, and therefore how complex a business or technical task can be handled. This is especially important in many of the highest-impact scientific, engineering, and medical scenarios.

Kurian is now touting that Google Cloud supports over 100 AI foundation models, noting they are constantly adding many of the very latest new models, showing these off as a proof point that their AI model garden has one of the richest sets of choices currently available.

He talks about Google Cloud's strict control, protection, and security of enterprise data in VPC and private data for AI.

Now Nenshad Bardoliwalla, an old fellow Enterprise Irregular, is onstage talking about using 1st party models, along with Anthropic's new Claude 2 model and Meta’s Llama 2, right in the Vertex AI model garden.

9:40am: “Today if you go into the Vertex Model Garden, Llama 2 is available today.” Huge cheer from the audience.

32K is the token input limit for most leading-edge AI models these days. About 80 pages of text. Nenshad then inputs the entire Department of Motor Vehicles Handbook into a Llama 2 prompt.
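For a rough sense of where the "about 80 pages" figure comes from, here is a back-of-the-envelope sketch. The conversion constants (0.75 English words per token, 300 words per page) are common rules of thumb that I am assuming here, not numbers given in the keynote, and the function names are purely illustrative. Real tokenizers vary by model and by text.

```python
# Rough rule-of-thumb constants (assumptions, not figures from the keynote).
WORDS_PER_TOKEN = 0.75   # common approximation for English text
WORDS_PER_PAGE = 300     # a fairly dense printed page


def tokens_to_pages(tokens: int) -> float:
    """Estimate how many pages of English text fit in a token budget."""
    return tokens * WORDS_PER_TOKEN / WORDS_PER_PAGE


def fits_in_context(word_count: int, context_tokens: int,
                    reserve_for_output: int = 1_000) -> bool:
    """Check whether a document fits in the context window,
    leaving `reserve_for_output` tokens free for the model's reply."""
    needed_tokens = word_count / WORDS_PER_TOKEN
    return needed_tokens <= context_tokens - reserve_for_output


if __name__ == "__main__":
    print(f"{tokens_to_pages(32_000):.0f} pages")   # 32K-token window
    print(fits_in_context(20_000, 32_000))           # a 20,000-word handbook
```

Under these assumptions a 32K window works out to roughly 80 pages, which is why a whole handbook can plausibly go into a single prompt, as long as some room is left for the answer.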

Then he asks Llama 2 to summarize all the rules around pedestrians. Llama 2 does this in mere seconds.

Now Nenshad moves on to computer vision. Generates some images of trucks for the DMV web page. Now shows an example of using enterprise brand style and images to do style-tuning on the fly. Now images “will never go out of style.”

Shifts over to Google's new AI digital watermarking. There are real challenges with watermarks, but Google thinks they've handled them successfully in rich media, though not in text. Notes that pixel-based watermarking often disturbs the image. Works right in Vertex AI. He shows watermarked images that have totally invisible watermarks.

Kurian is back on stage talking about search and their new Grounding service in Vertex AI to ensure factual results and reduce hallucinations. My take: Groundings are a very important advance in making generative AI ready for higher-maturity enterprise use cases. Also has an Embeddings service. Vertex AI Conversation also makes it easy to have threaded conversations inside business applications in multiple languages.

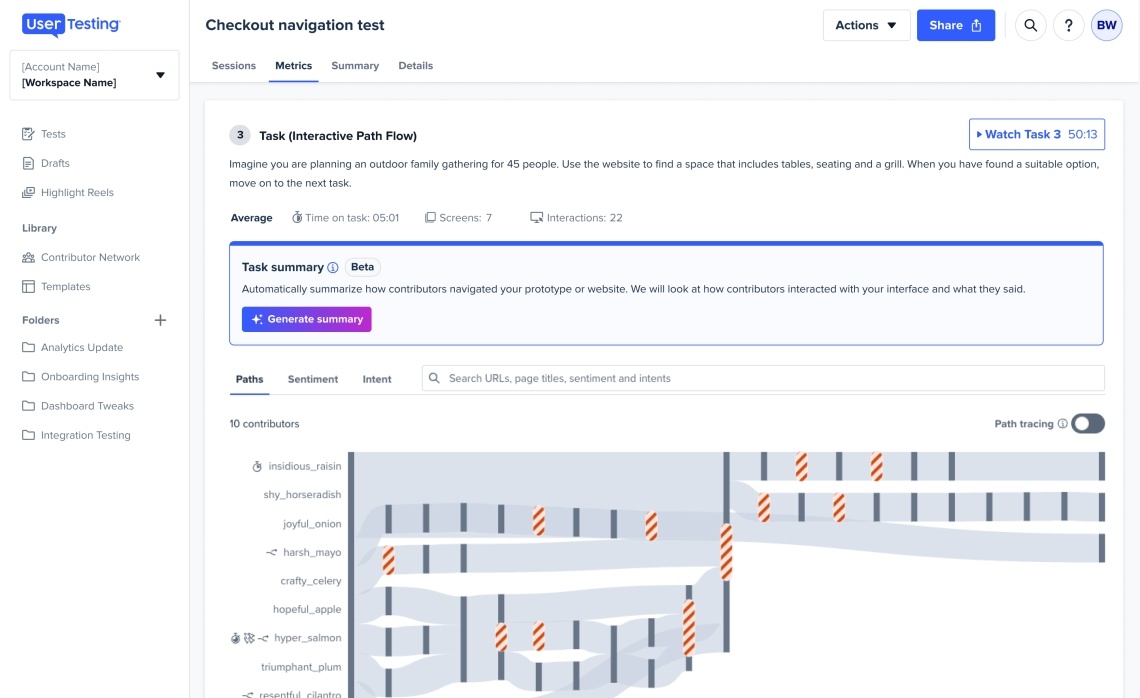

Now Kurian explores the new Vertex AI Extensions to connect models, search, and third-party applications. Nenshad gives the demo. Says you can build a search and conversational app in two minutes. Goes into Vertex AI and goes to ‘Create a new app’. Can specify advanced capabilities to activate enterprise features and the ability to query the model. Announces Jira, Salesforce, and Confluence as sources of enterprise data for these apps, which holds the promise to transform IT and sales processes. Nenshad works on building a driver's license flow from the DMV. Can use simple text outlines to then build conversational apps in just a few minutes. My take: This will indeed accelerate building apps on top of enterprise content sources, along with very simple-to-create narrative journeys that the app should support using that knowledge. Now offers citations so that data is grounded.

Nenshad now shows the app working. Says “this is the ability to bring the power of our powerful consumer-grade site directly to your enterprise apps.”

He demonstrates that Vertex Search and Conversation can build some amazing apps in a surprisingly short time that have a lot of intelligence in them.

9:56am: Kurian emphasizes that Vertex AI can ensure that “AI is used in everything that you do.”

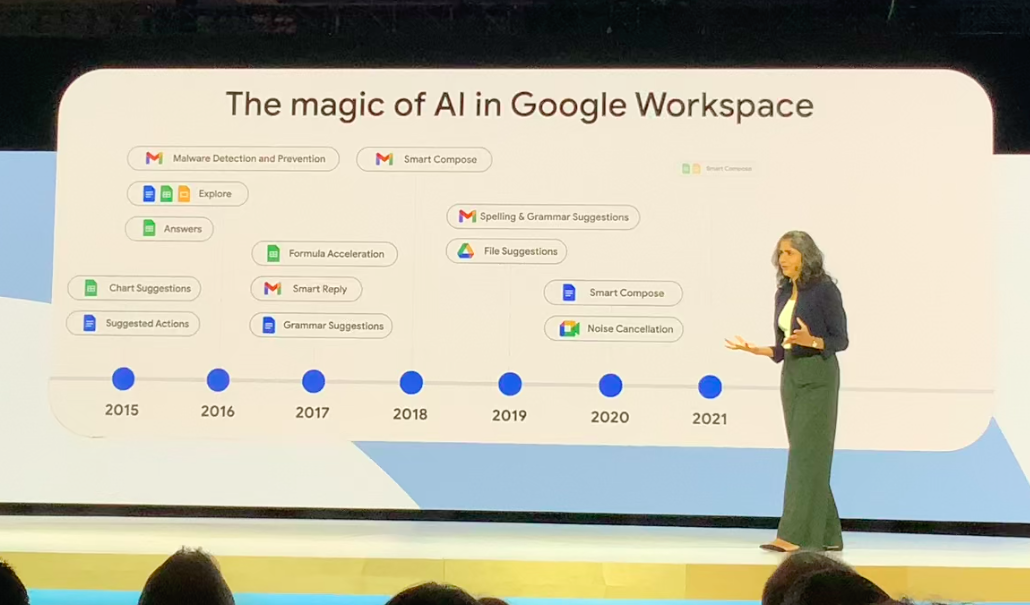

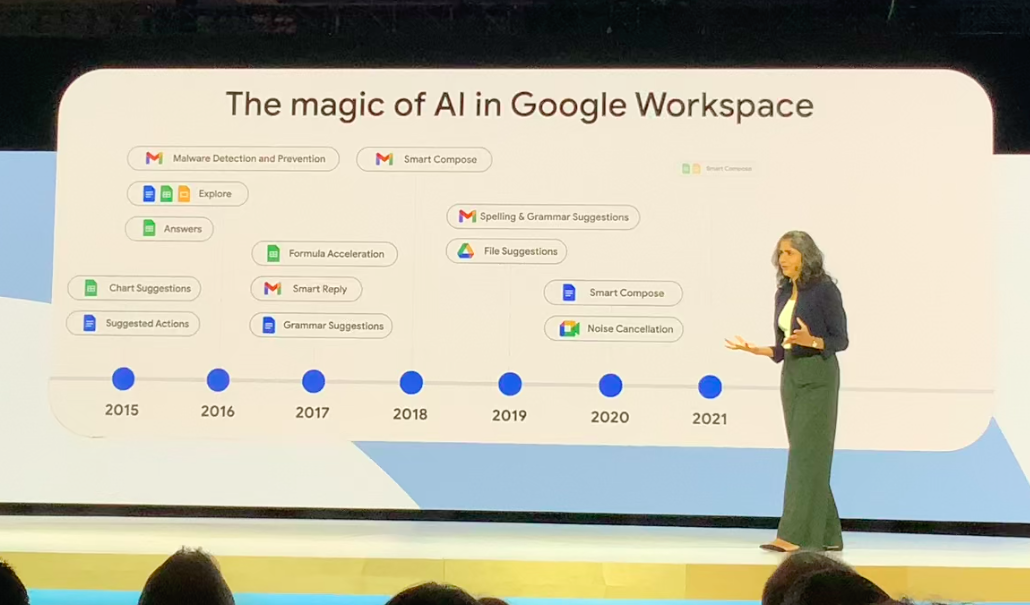

Now they are talking about the decade-long journey in developing the AI features of Google Workspace.

Over 300 new features for Google Workspace launched recently. They say the product investments are paying off. Claims more and more customers are switching to Google Workspace wholesale, with 10 million paid customers today.

Will enhance Duet AI to go from conversational to contextual. Duet AI will soon look at whatever you are doing and proactively suggest improvements. My take: They will have to be careful about being interruptive, but they say it can even take action on your behalf, if you want, presumably without interrupting work.

Demonstrates building a polished creative brief using Duet AI with text and relevant graphics, along with charts from the correct Google Sheet.

Shows how people who join a Google meeting late can benefit from the Duet AI “catch up” feature, which will show late arrivals what happened in the meeting before they got there.

And Duet AI can even attend a meeting on your behalf, which Larry Dignan explored today on Constellation Insights. The Attend for Me feature will be very interesting depending on how interactive it is.

Now directly addresses the AI elephant in the room. Kurian says, “No data ever leaves from users, departments, or organizations. Your data is your data. Google Cloud is uniquely positioned to ensure their models don’t learn from your data.” A very important strategic point. Data safety and sovereignty will be a very important capability to guarantee for many organizations, though likely hard to prove.

10:07am: Duet AI in Apigee can make it easy to design, create, and publish your APIs.

Duet AI can refactor code from legacy to modern languages. The screen is full of legacy C++ database code. They will migrate the legacy C++ code right to the Go language onstage. The result uses Cloud SQL. It indeed takes only seconds to convert this important database connector, switching the database connection to a cloud-managed database. Duet AI is trained on Google Cloud-specific products and best practices.

Duet AI can understand the structure and meaning of your enterprise data deeply as well, and not just generate code. It will also pull out specific functionality in a company’s code base, “without compromising quality.” Duet AI can modernize and migrate code in a highly contextually-aware way. Definitely a major shift in performance and likely quality for overhauling and modernizing legacy code bases, especially to become more cloud-ready, even cloud-native.

Kevin Mandia from Mandiant comes out to explore cybersecurity capabilities, including secure-by-design. Cites how Google Cloud’s security vulnerability rate is significantly better than that of two other major hyperscalers. Again, critical for the CISO to sign off on activating powerful generative AI capabilities from their cloud providers.

Key Google Cloud Next Takeaways for CIOs

So what are the key takeaways from the Google Cloud Next keynote for those in the CIO role?

- Google has true enterprise-grade AI. Vertex AI and Duet AI should be regarded as leading generative AI capabilities, complete with the latest large token sizes to tackle non-trivial business use cases. Each offering is worthy of serious enterprise-wide consideration for both AI platform and app development as well as end-user AI enablement within Google Workspace and inside 3rd party business apps.

- Google Cloud has a unique AI value proposition. Differentiation in Google Cloud’s generative AI capabilities for enterprises specifically lies in a) best-in-class foundation model choice with their model garden, including the very latest competitively significant models, b) vital new “grounding” features to ensure AI results are factual and as accurate as possible, c) versatility to securely run AI workloads in virtually all the ways that enterprises require, from on-prem to public multicloud, and d) sophisticated safety, privacy, and IP protection features, including born-cloud cybersecurity, strict Responsible AI compliance, and advanced digital watermarking features.

- Enterprise data is fundamentally safe with AI in Google Cloud. Critical for enterprise usage, Vertex and Duet AI always sandbox enterprise data within an enterprise’s virtual private cloud within Google Cloud in a highly secure way. They promise that their models never train themselves permanently on enterprise data, though they must, in the moment, analyze enterprise data to answer queries, always in a private, temporary way. Organizations are still advised to trust but verify this, however.

- Google Cloud has a very strong AI ecosystem play. Google Cloud can demonstrate many proof points that it is building one of the leading ecosystems of AI partners and technology providers, including those behind leading foundation/large language models and cutting-edge advanced AI hardware. The NVIDIA partnership is particularly important and will ensure Google Cloud can bring the latest and most powerful new AI technologies to bear long-term for their AI customers.

- Google's AI offerings are designed for rapid, pervasive, and strategic value. With Vertex AI and Duet AI, Google Cloud provided strong evidence it is delivering a major step towards enabling organizations to quickly put AI everywhere in their organization wherever they need it. They can do it extraordinarily easily and safely, particularly given the versatile and easy-to-use app generation capabilities demonstrated today.

- Google Cloud's total AI offering is among best-in-class for the enterprise. In this analyst’s assessment, CIOs can be assured Google Cloud’s AI capabilities are current state-of-the-art in enterprise-grade AI. Google Cloud also demonstrated that they have a vision, plan, and many strategic partnerships to ensure they will remain so in the foreseeable future (which, however, may not be that far given the tech's fast-moving pace).

- There's safety in a leading enterprise vendor, and AI has real risks. While CIOs can theoretically achieve some performance and innovation advantages by also leveraging the intense innovation taking place today in the AI open source space, it would only be at significant risk. This is perhaps the most central Google Cloud value proposition: It’s usually better to wait for Google Cloud to use its scale and expertise to apply its Responsible AI compliance as well as security and privacy reviews as it continues to seek to offer the most choice in model by figuring out how to incorporate the often less-safe public AI technologies into their Vertex AI fold.
