Google Cloud Next everything announced: Infusing generative AI everywhere

Google Cloud launched a series of updates, products and services designed to embed artificial intelligence and generative AI throughout its platform via Vertex AI, which is focused on builders, and Duet AI for front-end use cases.

The themes from Google Cloud at Google Cloud Next in San Francisco are use cases beyond IT, making it easier for developers to create with generative AI and large language models (LLMs) and driving usage throughout its services.

Google Cloud CEO Thomas Kurian said the game plan is to enable better framing of models, faster storage and infrastructure and tools to make AI more efficient and distributed all the way to the edge. Kurian added that it's critical to provide services that can address multiple use cases. Kurian also outlined customer wins and partnerships with GE Appliances, MSCI, SAP, Bayer, Culture AM, GM, HCA and others.

During a keynote, Kurian cited customer wins and projects. A few include:

- Yahoo is migrating 500 million mailboxes and 550PB of data to Google Cloud.

- Mahindra used Google Cloud for a traffic surge when it sold 100,000 SUVs in 30 minutes during its online car buying launch.

- Fox Sports is using Google Cloud and its models to find clips through natural-language search.

"There are a lot of solutions being deployed in different ways across industries," said Kurian, who added content creation is a use case, as is training models for specific tasks and automating multiple functions from back office and production to customer service.

Ray Wang, CEO of Constellation Research, said:

“Every enterprise board is asking their technology teams the same question, ‘When will we be taking advantage of Generative AI to create exponential gains or find massive operational efficiencies?’ Customers are looking for vendors that can deliver not just generative AI but overall, AI capabilities. When we talk to senior level executives, they are all trying to figure out if they will have enough data to get to a precision level that their stakeholders will trust. So far, Google has shown that they are taking a much more thoughtful approach from chip to apps on AI than some other competitors.”

Here's a look at everything Google Cloud outlined at Google Cloud Next.

Infrastructure

Google Cloud's big themes on infrastructure are platform-integrated AI assistance, optimizing workloads and building and running container-based applications. To that end, Google Cloud said Duet AI is now available across Cloud Console and IDEs. There's also code generation and chat assistance for developers, operations, security and data and low-code offerings.

The company also outlined the following for container-based applications:

- Google Kubernetes Engine (GKE) Enterprise.

- Cloud Run Multi-Container Support.

- Cloud Tensor Processing Units (TPUs) for GKE.

Google Cloud also launched new versions of its TPUs (TPUv5e) and A3 supercomputer based on Nvidia H100 GPUs, purpose-built virtual machines and new storage products--Parallelstore, Cloud Storage FUSE. Those announcements are designed for customers looking for infrastructure built for AI deployments.

Cloud TPU v5e supports both medium-scale training and inference workloads.

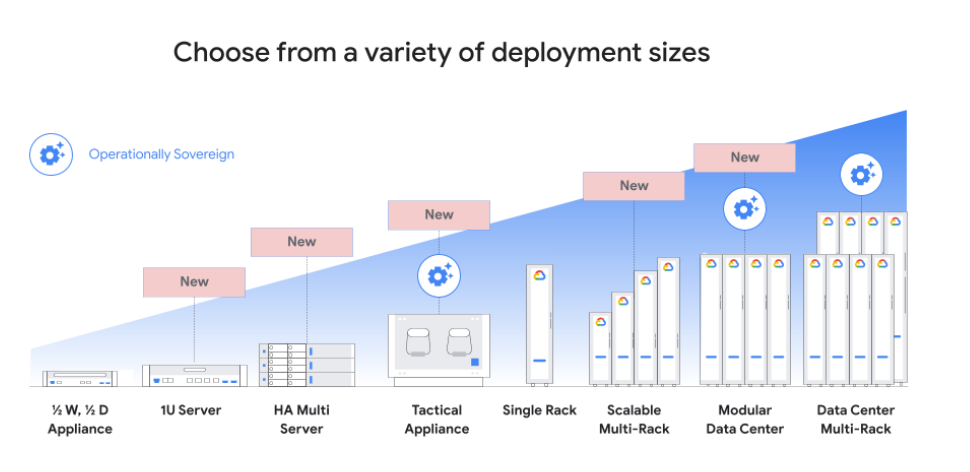

For traditional enterprises, Google outlined a series of new offerings--Titanium, Hyperdisk, Cross-Cloud networking and new integrated services on Google Distributed Cloud.

Databases

Google Cloud announced an AI-version of its AlloyDB database. With a series of launches, Google Cloud is looking to leverage its databases to make it easier for enterprises to run data where it is, provide a unified data foundation and create generative AI apps.

The breakdown:

- AlloyDB AI, which will support vector search, in-database embedding, full integration with Vertex AI and open source generative AI tools.

- AlloyDB Omni, which is in preview. AlloyDB Omni is a downloadable edition of AlloyDB that can run on multiple clouds such as Google Cloud, AWS and Azure, on-premises and on a laptop. Google says Omni delivers 2x faster transactional performance and up to 100x faster analytical queries than standard PostgreSQL, which is becoming more popular in the enterprise.

- Duet AI in databases to provide assistive database management and automation for migrations.

- Spanner Data Boost, which will offer workload isolated processing of operational data without impacting production systems.

- Memorystore for Redis Cluster, an open-source compatible scale-out database.

AI

Google Cloud made moves to create an integrated portfolio of open foundational models and tuning options. AWS hit similar themes lately as cloud giants see the ability to curate and offer foundational models as table stakes for enterprises.

Google Cloud outlined the following:

- Foundation model improvements and expanded tuning for PaLM (text and chat), Imagen, Codey and Text Embeddings.

- Meta's Llama 2 and Anthropic's Claude 2 will be available in the Vertex AI Model Garden. Google Cloud has more than 100 models in its Vertex AI Model Garden.

- Med-PaLM is now available for healthcare LLM use cases.

- Grounding for PaLM API and Vertex AI Search. Grounding was a key theme for Google Cloud executives because enterprises need high quality output when they layer in their data for specific use cases.

- Vertex AI Search and Conversation general availability, which includes major updates for generative search, image search and prompting with LLMs.

- Colab Enterprise on Vertex AI, an enterprise-focused notebook experience with collaboration tools.

Analytics

Google Cloud outlined a series of data analytics tools that aim to enable enterprises to interconnect data, bring AI to your data and boost productivity. The themes from Google Cloud rhyme with industry developments from Databricks, a partner, along with MongoDB, Salesforce and a bevy of others.

Key items include:

- Open Lakehouse, an AI data platform that aims to work across all data formats, adding Hudi and Delta as well as fully managed Iceberg tables. There will also be cross-cloud joins and views in BigQuery Omni and a single dashboard in Dataplex for data and AI artifacts.

- BigQuery ML, which will bring generative AI to enterprise data by using Vertex Foundation models directly on data in BigQuery. The BigQuery ML inference engine will run predictions using Vertex models and models imported from TensorFlow, XGBoost and ONNX.

- BigQuery embeddings and vector indexes, along with synchronization with Vertex AI Feature Store.

- BigQuery Studio, which will get a unified interface for data engineering, analytics and machine learning workloads.

- Duet AI in Looker and BigQuery for analysis, code generation and data workload optimization.

Security Cloud

Google Cloud moved to add Duet AI throughout its security offerings. The breakdown includes:

- Duet AI in Mandiant Threat Intelligence, which will use generative AI to improve threat assessments and create threat actor profiles.

- Duet AI in Chronicle Security Operations, which adds expertise to users.

- Duet AI in Security Command Center to bolster risk assessments and recommend remediation.

- Mandiant Hunt for Chronicle to combine front line intelligence with data.

- Platform security advancements for detection, network security and digital sovereignty.

Workspace

The big theme here was making Google Workspace AI-first and embedding Duet AI features throughout the platform.

Among the key items:

- Duet AI add-on, available Sept. 29. This new SKU will be available on a trial basis with pricing to be detailed later.

- Duet AI side panel, which will provide generative AI collaboration tools across the Workspace apps.

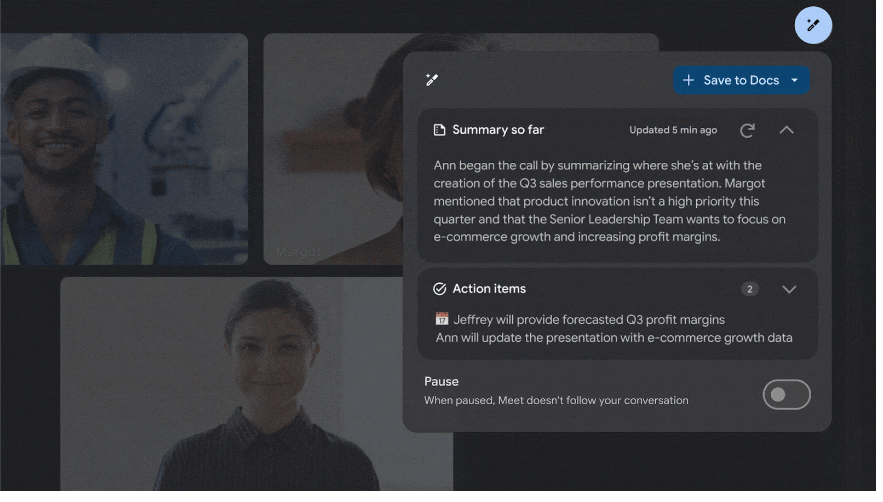

- Google Meet with Duet AI to improve visuals, sound and lighting as well as meeting management tools.

- Duet AI in Chat to provide updates and suggestions across Workspace apps.

- Zero trust and digital sovereignty controls automatically classify and label data for compliance and encryption.

Constellation Research’s take

Constellation Research analyst Doug Henschen said:

“I’m mostly eager to see the demos and previews move into early trials and general availability. I’m sure early adopters will find out what works and what doesn’t, and they make some unexpected discoveries about gen AI that vendors didn’t foresee. Gen AI certainly has the potential to change analytics and BI as we know it very quickly, but it's time for reality to catch up with the promises.

Duet AI for both BigQuery and Looker is a potential game changer as it promises to make things easier for analysts and business users alike with natural-language-to-SQL generation, auto recommendations based on query context, and chat interactions with your data. Google execs say they are 'radically rebuilding Looker' with capabilities such as auto-generated slide presentations potentially replacing dashboards and promising a 'massive change in how Looker is used, and by whom.' I have yet to see generally available products, but there’s a palpable sense that the gen AI capabilities promised by Google, Microsoft and others may finally make analytics and BI broadly accessible and understandable to business users.

Openness and gen AI advances are the two big themes on the analytics front. To improve openness to third-party sources and clouds, Google BigQuery now supports Delta, Hudi and Iceberg table formats while BigQuery Omni is gaining cross-cloud joins and materialized views. On gen AI -- beyond the addition of Duet AI to both BigQuery and Looker -- Google is integrating BigQuery with Vertex AI, via a new BigQuery Studio interface, so there’s a single experience for data analysts, data scientists and data engineers. The integration between BigQuery and Vertex AI will also expose Vertex Foundation models directly to data in BigQuery for custom model training. Finally, Google is bringing Vertex AI into the Dataplex data catalog to provide unified access and metadata management over all data, models and related assets. This promises to improve data and model access and governance for all constituents and should help to accelerate the development of gen AI capabilities.

Microsoft partnered with OpenAI to accelerate what it could do with AI, but in doing so it picked a fight with a formidable competitor in Google. Google initially had to react to Microsoft’s announcements earlier this year, but the company had a deep well of AI assets and expertise to draw on and I still see it as the leader among all three clouds in the depth and breadth of its AI capabilities, now including gen AI.”