Former Vice President and Principal Analyst

Constellation Research

Steve Wilson is former VP and Principal Analyst at Constellation Research, leading the business theme Digital Safety and Privacy. His coverage includes digital identity, data protection, data privacy, cryptography, and trust. His advisory services to CIOs, CISOs, CPOs and IT architects include identity product strategy, security practice benchmarking, Privacy by Design (PbD), privacy engineering and Privacy [or Data Protection] Impact Assessments (PIA, DPIA).

Coverage Areas:

- Identity management, frameworks & governance

- Digital identity technologies

- Privacy by Design

- Big Data; “Big Privacy”

- Identity & privacy innovation

Previous experience:

Wilson has worked in ICT innovation, research, development and analysis for over 25 years. With double…...


In this piece I provide more context on the Confidential Computing movement and reflect on its potential for all computing.

Acknowledgement and Declaration: I was helped in preparing this article by Manu Fontaine, founder of new CCC member Hushmesh, who did attend the summit. I am a strategic adviser to Hushmesh.

Down to security basics; all the way down

Confidential Computing is essentially about embedding encryption and physical security at the lowest levels of computing machinery, in order to better protect the integrity of information processing. The CC movement is a logical evolution and consolidation of numerous well understood hardware-based security techniques.

Generally speaking, information security measures should be implemented as far down the technology stack as possible; as they say, near to the “silicon” or the “bare metal”. By carrying out cryptographic operations (such as key generation, hashing, encryption and decryption) in firmware or in wired logic, we enjoy faster execution, better tamper resistance and above all, a smaller attack surface.

The basics are not new. Many attempts have been made over decades to standardize hardware and firmware-based security, and make these measures ubiquitous to software processes and general computing. Smartcards led the way.

Emerging in Europe in the 1990s, the whole point of a smartcard was to provide a stand-alone, compact, well-controlled computer, separated from the network and regular computers, where critical functions could be carried out safely. Cryptography was of special concern; smartcards were capable enough to offer a complete array of signing, encryption, and key management tools, critical for retail payments, telephony, government ID and so on.

The smarts

So the discipline of smartcards led to clearer thinking about security for microprocessors in general, and spawned a number of special purpose processors.

- From the early 1990s, the digital Global System for Mobile communications (GSM) cell phone system featured SIM cards — subscriber identification modules — essentially cryptographic smartcards holding each individual’s master account number in a digital certificate signed by her provider. The start and end of each new phone call is digitally signed in the SIM, thus providing secure metadata to support billing (and thus the SIM by the way is probably the world’s first cryptographically verifiable credential).

- In 2003, Bill Gates committed Microsoft to smartcard authentication, writing to thousands of customers in an executive e-Mail that “over time we expect most businesses will go to smart card ID”.

- ARM started working on the TrustZone security partition for its microprocessor architecture sometime before 2004.

- Trusted Platform Modules (TPMs) were conceived as security co-processors for PCs and all manner of computers, to uplift cyber safety across the board (if only adoption had been as widespread as anticipated).

- NFC (near field communications) chip sets enable smartphones to emulate smartcards and thus function as payment cards. Security is paramount or else banks wouldn’t countenance virtual clones of their card products. But security was weaponized in the first round of “wallet wars” around 2010, with access to the precious NFC secure elements throttled, and Google forced to engineer a compromise “cloud wallet”.

Now, security wasn’t meant to be easy, and hardware security especially so!

Standardization of smartcards, trusted platform modules and the like has been tough going, for all sorts of reasons which need not concern us right now.

Strict hardware-based security is also unforgiving. The FIDO Alliance originally adopted a strenuous key management policy where private authentication keys were never to leave the safety of approved chips. But the impact on users when their personal devices need to be changed out is harsh, and so FIDO has pivoted (very carefully, mind you) to “synchronized” private keys in the cloud, a solution branded Passkeys.

TEE time!

The Confidential Computing Consortium (CCC) is a relatively new association comprising hardware vendors, cloud providers and software developers aiming to “accelerate the adoption of Trusted Execution Environment (TEE) technologies and standards”.

The CCC is certainly not the only game in town, with the long running Trusted Computing Group (TCG, est. 2003) continuing to develop standards for the important Trusted Platform Module (TPM) architecture. Membership of these groups overlaps. I do not mean to compare or rank security industry groups; I merely take this opportunity to report on the newest thinking and developments.

So TEEs sit at the centre of Confidential Computing.

Confidential Computing protects data in use by performing computation in a hardware-based, attested Trusted Execution Environment. These secure and isolated environments prevent unauthorized access or modification of applications and data while in use, thereby increasing the security assurances for organizations that manage sensitive and regulated data.

So Confidential Computing crucially goes beyond conventional encryption of data at rest and in transit, to protect data in use.

Attestation of the computing machinery is a central idea. This is the means by which any user or stakeholder can tell that a processing module is operating correctly, within its specifications, with up-to-date parameters and code. The CCC updated its definition of confidential computing, not long before the CCC Summit, to make attestation essential.
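To make the attestation idea concrete, here is a minimal sketch in Python of what a verifier checks. It is an illustration under loud assumptions, not how any particular TEE does it: the names `DEVICE_KEY`, `measure`, `make_report` and `verify_report` are hypothetical, and an HMAC with a shared key stands in for the asymmetric, hardware-rooted signature that real attestation schemes rely on.

```python
import hashlib
import hmac
import secrets

# ASSUMPTION: a shared secret stands in for a hardware-protected attestation key.
# Real TEEs sign reports with an asymmetric key rooted in the hardware vendor.
DEVICE_KEY = secrets.token_bytes(32)


def measure(code: bytes) -> str:
    """Return a SHA-256 digest standing in for the environment's code measurement."""
    return hashlib.sha256(code).hexdigest()


def make_report(code: bytes, nonce: bytes, key: bytes = DEVICE_KEY) -> dict:
    """Simulate the environment producing a report bound to the verifier's nonce."""
    measurement = measure(code)
    tag = hmac.new(key, measurement.encode() + nonce, hashlib.sha256).hexdigest()
    return {"measurement": measurement, "nonce": nonce.hex(), "tag": tag}


def verify_report(report: dict, expected_measurement: str, nonce: bytes,
                  key: bytes = DEVICE_KEY) -> bool:
    """Accept only an authentic, fresh report for the approved code."""
    expected_tag = hmac.new(
        key, report["measurement"].encode() + nonce, hashlib.sha256
    ).hexdigest()
    return (
        hmac.compare_digest(report["tag"], expected_tag)      # report is authentic
        and report["nonce"] == nonce.hex()                    # report is fresh
        and hmac.compare_digest(report["measurement"], expected_measurement)
    )


approved_code = b"enclave build 1.2"   # hypothetical approved build
challenge = secrets.token_bytes(16)    # fresh nonce chosen by the verifier
report = make_report(approved_code, challenge)
print(verify_report(report, measure(approved_code), challenge))
```

The three checks map onto the questions a stakeholder wants answered: did the report really come from the machinery, is it current, and is the machinery running the code we expect.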

There’s more to CC than meets the eye

Confidential Computing as a field has yet to register with most IT professionals. I find that if people know anything at all about CC, they tend to see it in terms of secure storage, data vaults, and “hardened” or “locked down” computers.

But there is so much more to it.

At Constellation Research we have always taken a broad view of digital safety, beyond data privacy and cybersecurity. Safety must also mean confidence, even certainty for practical purposes. We believe stakeholders must have evidence for believing that a system is safe. Safety is about both rules and tools.

Security and privacy are always context dependent. Safety is judged relative to benchmarks, so we need to know the specifics behind calling a system fit for purpose.

What are the conditions in which a system is safe? What has it been designed for? What standards apply and who determined they are being followed? And do we know the detailed history of a system, from its boot-up through to the present minute?

This type of thinking leads to the need for finer grained signals to help users be confident that a system is safe and that given information is reliable. Data today has a life of its own, created from complex algorithms, training sets and analytics, typically with multiple contributions over time. We often need to know the story behind the data.

With Confidential Computing we should be able to account for the entire life story of all important devices and all important data, and make those details machine readable and verifiable.

Recapping the CC Summit

As reported, the #CCSummit on June 29 featured a breadth of topics and perspectives.

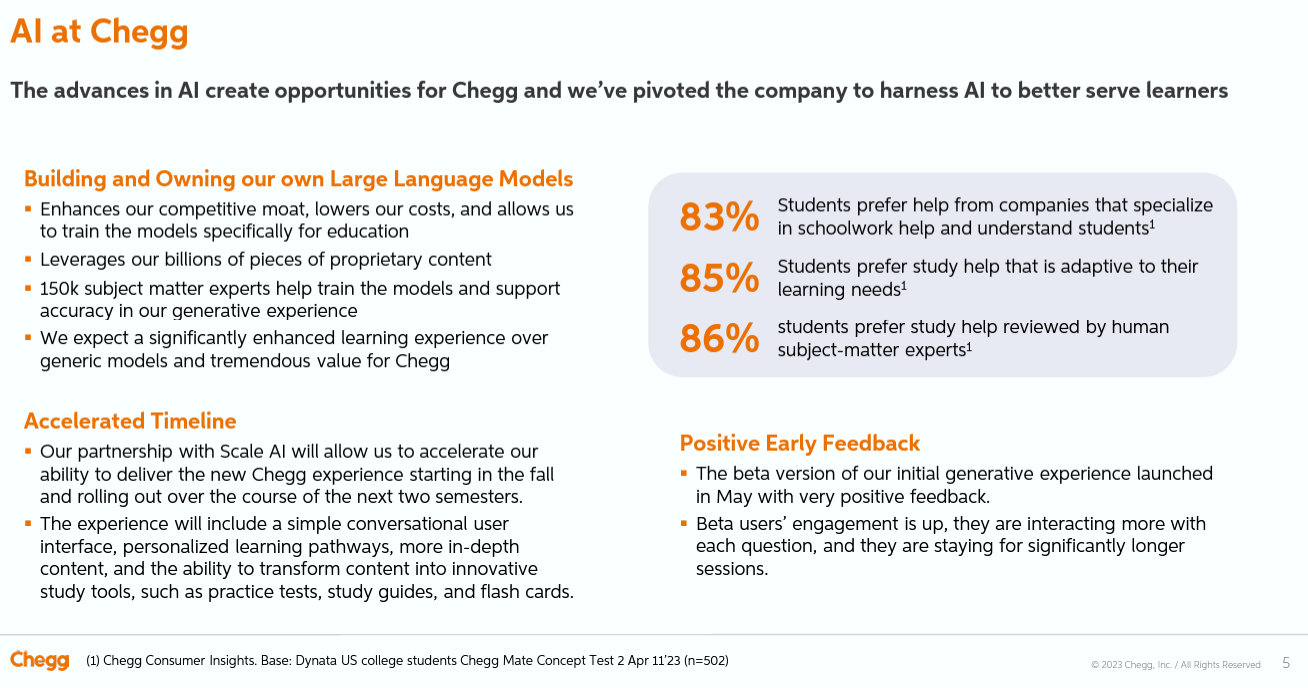

- The provenance of machine learning training data, algorithmic transparency and the pedigree of generative AI products are all excellent CC use cases.

- Intel Chief Privacy Officer Xochitl Monteon argued for protecting data through its entire lifecycle in a CC ecosystem.

- Google’s Head of Product for Computing and Encryption Nelly Porter explained how CC strengthens digital sovereignty in emerging economies.

- Opaque Systems founder Raluca Ada Popa advocated for “Privacy-preserving Generative AI” including secure enclaves to protect machine learning models in operation.

Reflections: Can all computing be Confidential Computing?

Well, perhaps not all, but Confidential Computing should be the norm for most computing in future.

However, in my opinion the label “confidential” is limiting. Of course, some things need to be kept secret but the real deal with CC is certainty about the cryptographic state of our IT. Admittedly that’s a bit of a mouthful but let’s be clear about the requirement.

Cryptography is now so critical in digital infrastructure, it has to be a given. Cryptography is ubiquitous, and it is not just encryption to keep things secret that matters; encryption for authentication is actually far more pervasive. Digital signatures, website authentication, code signing, device pedigree, version numbering and content watermarking are all part of the digital fabric. These techniques all rest on cryptographic processors operating properly without interference, and cryptographic keys being generated faithfully and distributed to the proper holders.

Yet as Hushmesh founder Manu Fontaine observes, “Cryptography is unforgiving but people are unreliable”.

That is, cryptography can’t be taken for granted – not yet.

If cryptography is to be a given, we must automate as much of it as possible, especially the attestation of the state of the machinery, to put certainty beyond the reach of tampering and human error.

Hushmesh has one of the most innovative applications for Confidential Computing. They have re-thought the way cryptographic relationships (usually referred to as bindings) are formed between users, devices and data, and turned to CC to automate the way these relationships are formed, so that users and data are fundamentally united instead of arbitrarily linked.

No room for error

Botnet attacks show us that the most mundane devices (all devices these days are computers) can become the locus of gravely serious vulnerabilities.

The scale of the IoT and the ubiquity of microcontrollers (MCUs) and field upgradable software mean that even light bulbs actually need what we used to call “military grade” security and reliability.

The military comparisons are obsolete. We really need to shift the expectation of consumer grade security and make serious encryption the norm everywhere.

The state of all end-points in cyberspace needs to be standardized, measurable, locked down, and verifiable. So many end-points now generate data and send messages back into the network. As this data spreads, we need to know where it’s really come from and what it means, not only to protect against harm but to maximize the value and benefits data can bring.

Privacy and data control

Remember that privacy is more to do with controlling personal data flows than confidentiality. A rich contemporary digital life requires data sharing, not data hiding.

A cornerstone of data privacy is disclosure minimization. A huge amount of extraneous information today is disclosed as circumstantial evidence collected in a vain attempt to lift confidence in business transactions, to support possible forensic activities, to try and deter criminals. Think about checking into a hotel: in many cases the clerk takes a copy of your driver licence just in case you turn out to be a fraudster.

If data flows such as payments by credit card were inherently more reliable, merchants wouldn’t need superfluous details like the card verification value (CVV).

Better reliability of core data would help stem the superfluous flow of personal information. Reliability here boils down to data signing, to mark its origin and provenance.

MyPOV: the most important primitive for security and privacy is turning out to be data signing. All important data should be signed at the origin and signed again at every important step as it flows through transaction chains, to enable us to know the pedigree of all information and all things.
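As a rough illustration of signing at the origin and again at each step, the Python sketch below chains records so that every step's signature also covers the previous step's signature. Everything named here (`sign_step`, `verify_chain`, the `sensor` and `gateway` actors and their keys) is hypothetical, and HMAC with per-actor shared keys is only a stand-in; a real deployment would more likely use asymmetric signatures with keys held in secure hardware.

```python
import hashlib
import hmac
import json


def sign_step(payload: dict, actor: str, key: bytes, prev_sig: str = "") -> dict:
    """Sign a record that covers the payload and the previous step's signature."""
    body = {"actor": actor, "payload": payload, "prev_sig": prev_sig}
    message = json.dumps(body, sort_keys=True).encode()
    body["sig"] = hmac.new(key, message, hashlib.sha256).hexdigest()
    return body


def verify_chain(chain: list, keys: dict) -> bool:
    """Check every signature and that each record links to the one before it."""
    prev_sig = ""
    for record in chain:
        key = keys.get(record["actor"])
        if key is None or record["prev_sig"] != prev_sig:
            return False
        body = {k: record[k] for k in ("actor", "payload", "prev_sig")}
        message = json.dumps(body, sort_keys=True).encode()
        expected = hmac.new(key, message, hashlib.sha256).hexdigest()
        if not hmac.compare_digest(record["sig"], expected):
            return False
        prev_sig = record["sig"]
    return True


# ASSUMPTION: illustrative actors and keys only.
keys = {"sensor": b"s" * 32, "gateway": b"g" * 32}
origin = sign_step({"temp_c": 21.5}, "sensor", keys["sensor"])
relayed = sign_step({"temp_c": 21.5, "site": "A"}, "gateway",
                    keys["gateway"], origin["sig"])
print(verify_chain([origin, relayed], keys))
```

Because each signature covers the one before it, altering or dropping an early record breaks verification of everything downstream, which is what lets a recipient trust the pedigree of the data rather than just its latest handler.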
