Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for the Fox School of Business's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…...


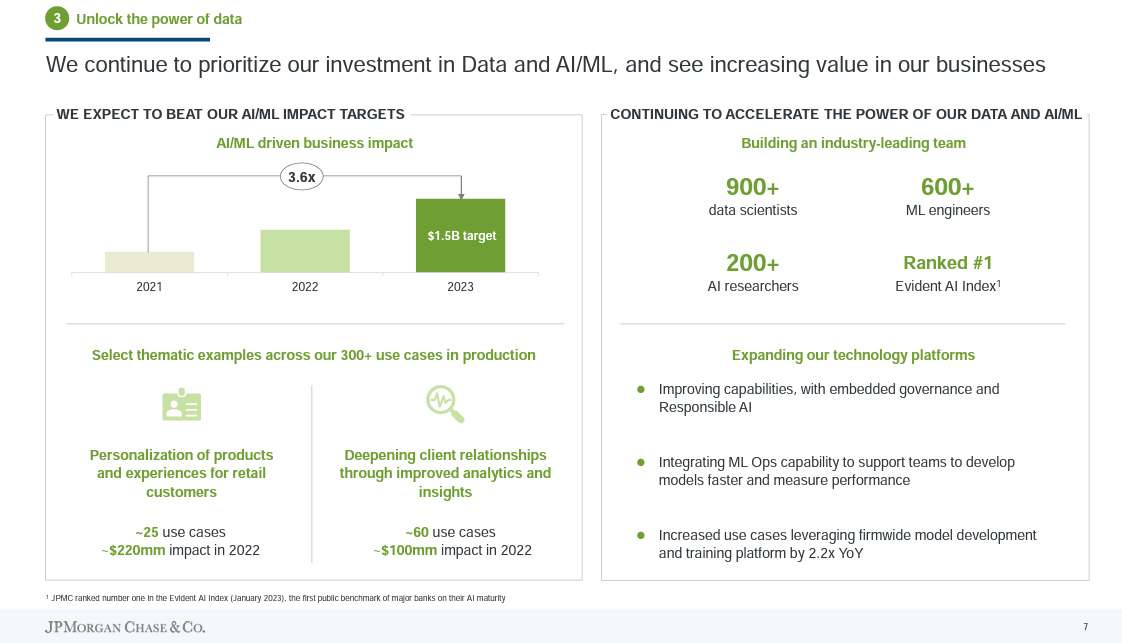

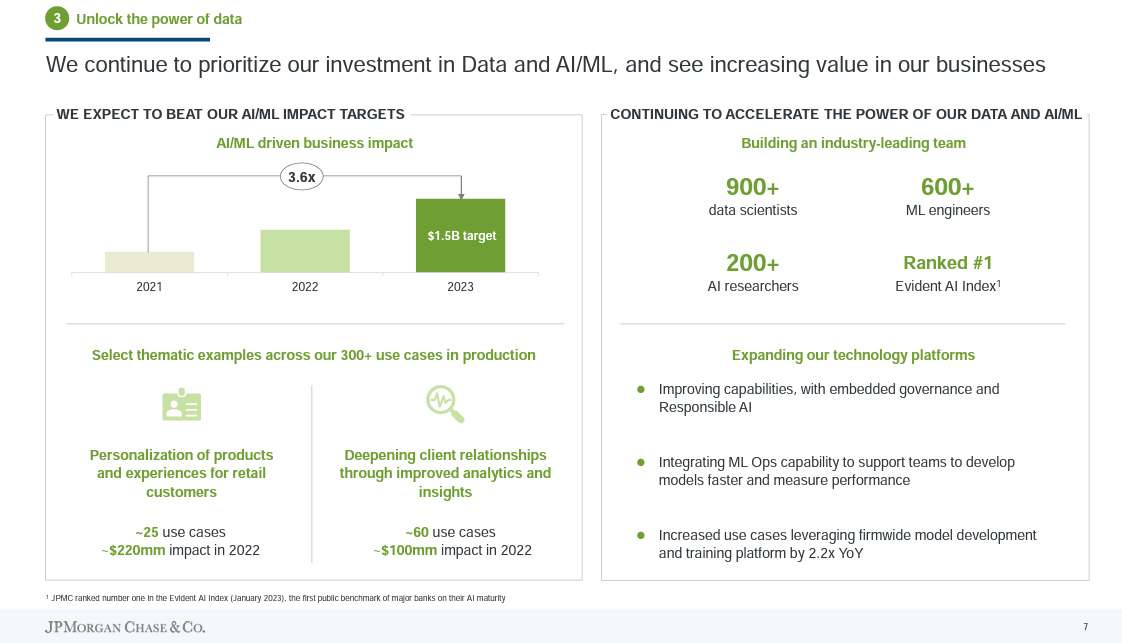

JPMorgan Chase will deliver more than $1.5 billion in business value from artificial intelligence and machine learning efforts in 2023 as it leverages its 500 petabytes of data across 300 use cases in production.

"We've always been a data-driven company," said Larry Feinsmith, Managing Director and Head of Technology Strategy, Innovation, & Partnerships at JPMorgan Chase. Speaking with Databricks CEO Ali Ghodsi during a keynote at Databricks' Data + AI Summit, Feinsmith said JPMorgan Chase has been continually investing in data, AI, business intelligence tools and dashboards.

Indeed, JPMorgan Chase said it will spend $15.3 billion on technology investments in 2023. JPMorgan Chase's technology budget has grown at a 7% compound annual growth rate over the last four years.
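To show what a 7% compound annual growth rate means in dollar terms, the standard CAGR formula is given below and rearranged to solve for the starting budget. This is an illustrative calculation only. It assumes the four-year window ends with the 2023 figure of $15.3 billion. The article does not give the starting budget, so the result of roughly $11.7 billion is an implied figure, not a reported one. Amounts are in billions of dollars, and $n = 4$ years.

```latex
\[
\text{CAGR} = \left(\frac{B_{\text{end}}}{B_{\text{start}}}\right)^{1/n} - 1
\quad\Longrightarrow\quad
B_{\text{start}} = \frac{B_{\text{end}}}{(1+\text{CAGR})^{n}}
= \frac{15.3}{1.07^{4}} \approx \frac{15.3}{1.311} \approx 11.7
\]
```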

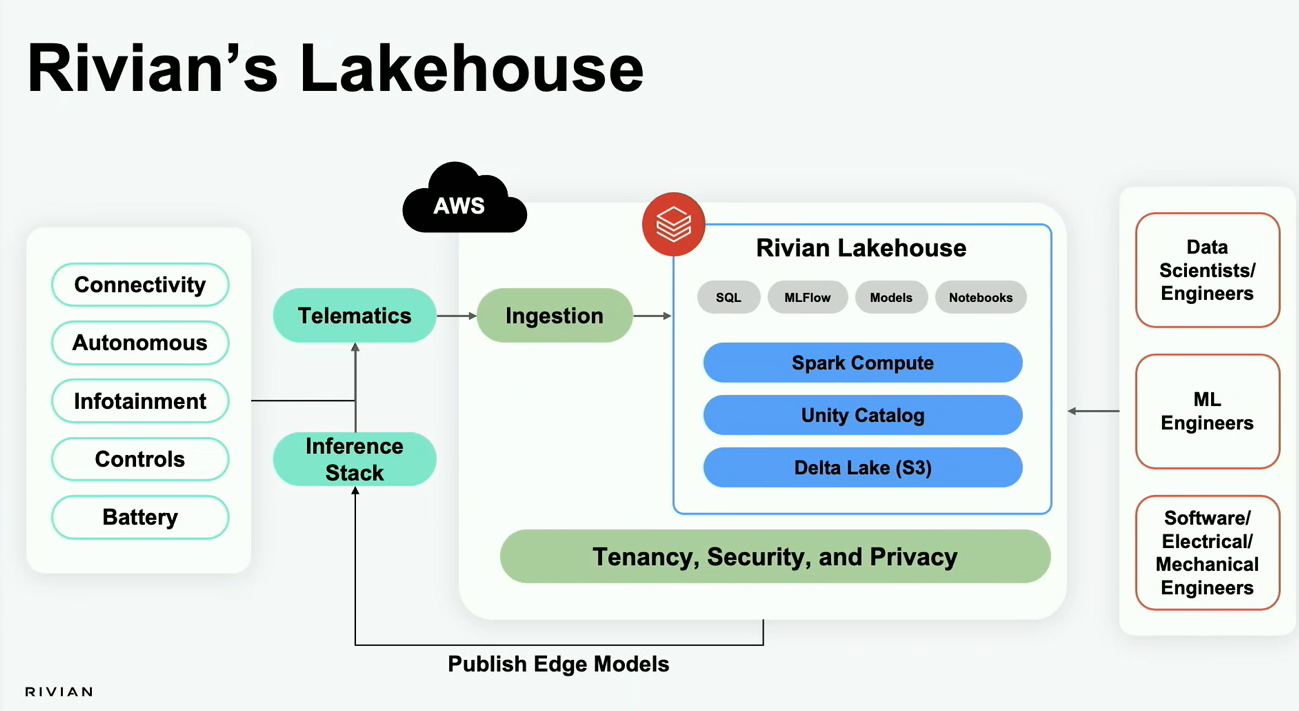

Feinsmith said the bank's AI/ML strategy is one of the big reasons JPMorgan Chase migrated to the public cloud. "If you look at our size and scale, the only way to deploy at scale is to do it through platforms," said Feinsmith. "Everyone has an opinion on data platforms, but you can efficiently move the data once and manage. Once you start moving data around it's highly inefficient and breaks the lineage."

JPMorgan Chase, a customer of Databricks, Snowflake and MongoDB, has multiple platforms, according to Feinsmith. It has an internal platform for moving and managing data, JADE (JPMorgan Chase Advanced Data Ecosystem), and one for data scientists called Infinite AI. "Equally as important as the data is the capabilities that surround that data," said Feinsmith, adding that data discovery, data lineage, governance, compliance and model lifecycle are critical.

According to Feinsmith, JPMorgan Chase's AI efforts start with a business focus, with data scientists and AI/ML experts embedded in each business.

Feinsmith said JPMorgan Chase is leveraging streaming data. He said he is a fan of Databricks' Lakehouse architecture and its new AI features because it's easier to move and process data in one environment than across two architectures: a data warehouse for business intelligence and a data lake for AI. JPMorgan Chase runs a central but federated data strategy, and interoperability between data platforms is important to it. "Data has to be interoperable," Feinsmith told Ghodsi. "Not all of our data will wind up in Databricks. Interoperability is very important."

That comment rhymes with what other enterprise technology buyers have said. There has been a lot of talk about vendor consolidation, mostly from vendors looking to gain share, but enterprise buyers want to keep their options open. How JPMorgan Chase has approached its tech stack is instructive.

The digital transformation behind the AI

At JPMorgan Chase's Investor Day in May, Lori Beer, Global CIO at the bank, gave an overview of the bank's technology strategy. In 2022, JPMorgan Chase launched a plan to deliver leading technology at scale with its technology team of 57,000 employees.

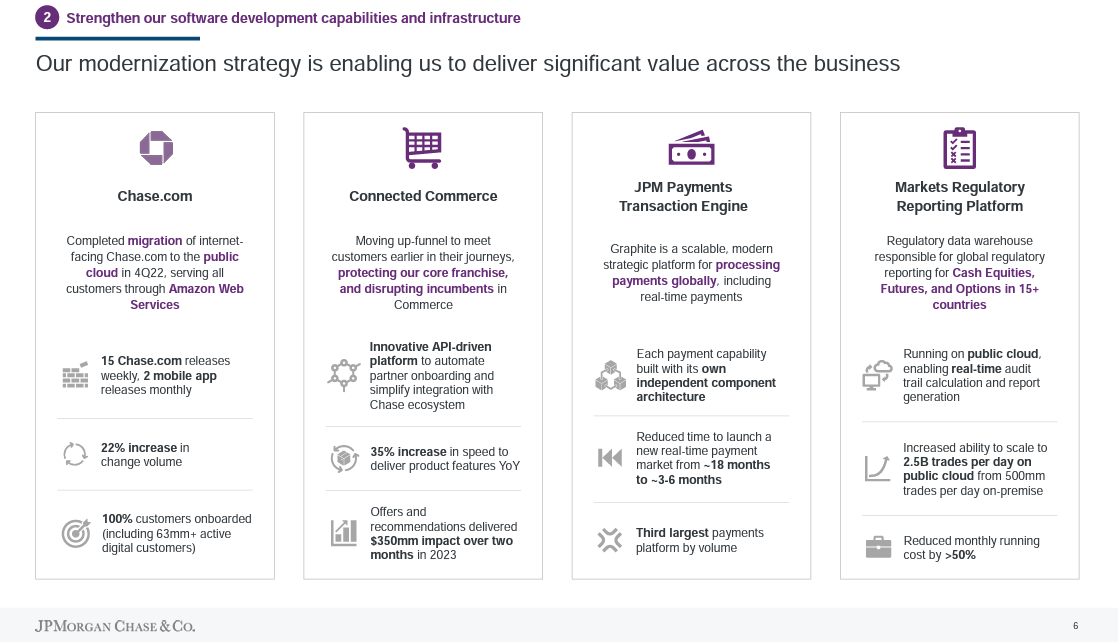

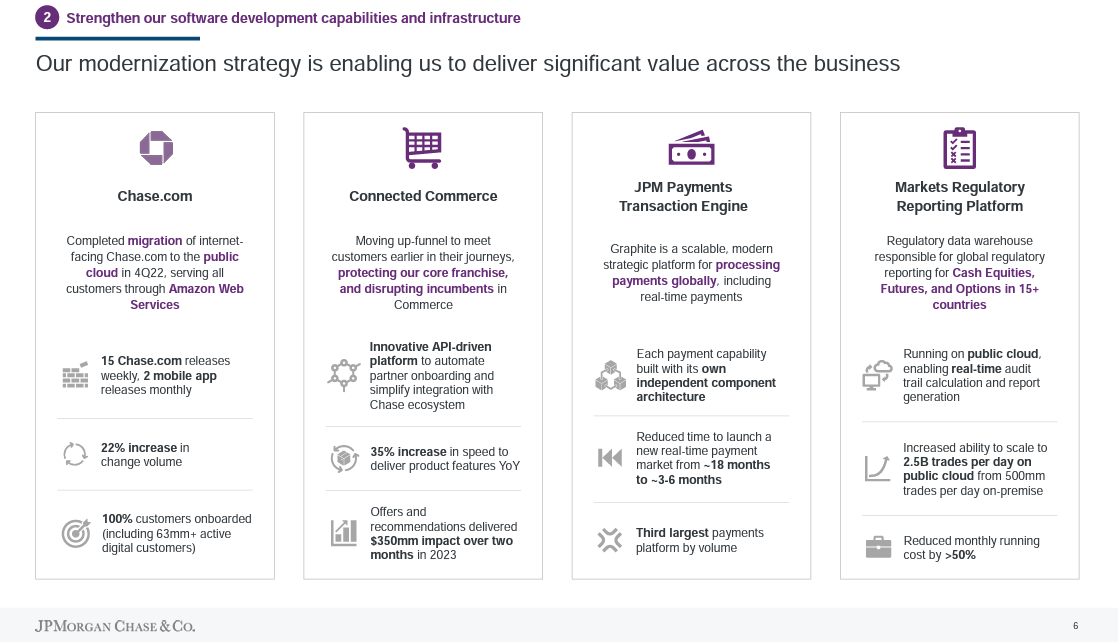

"Products and platforms need a strong foundation to be successful, and ours are underpinned by our mission to modernize our technology and practices," explained Beer. "We are already delivering product features 20% faster than last year, and we continue to modernize our applications, leverage software as a service and retire legacy applications."

JPMorgan Chase is moving to a multi-vendor public cloud approach while optimizing its owned data centers. The company is also embedding data and insights throughout the organization, said Beer. Those efforts will pave the way for large language models (LLMs) and other advances in the future.

"We have driven $300 million in efficiency through modern engineering practices and labor productivity, and we have developed a framework that enables us to identify further opportunities in the future. Our infrastructure modernization efforts have yielded an additional $200 million in productivity, driven by improved utilization and vendor rationalization," said Beer.

Here's a look at the key pillars of JPMorgan Chase's digital transformation.

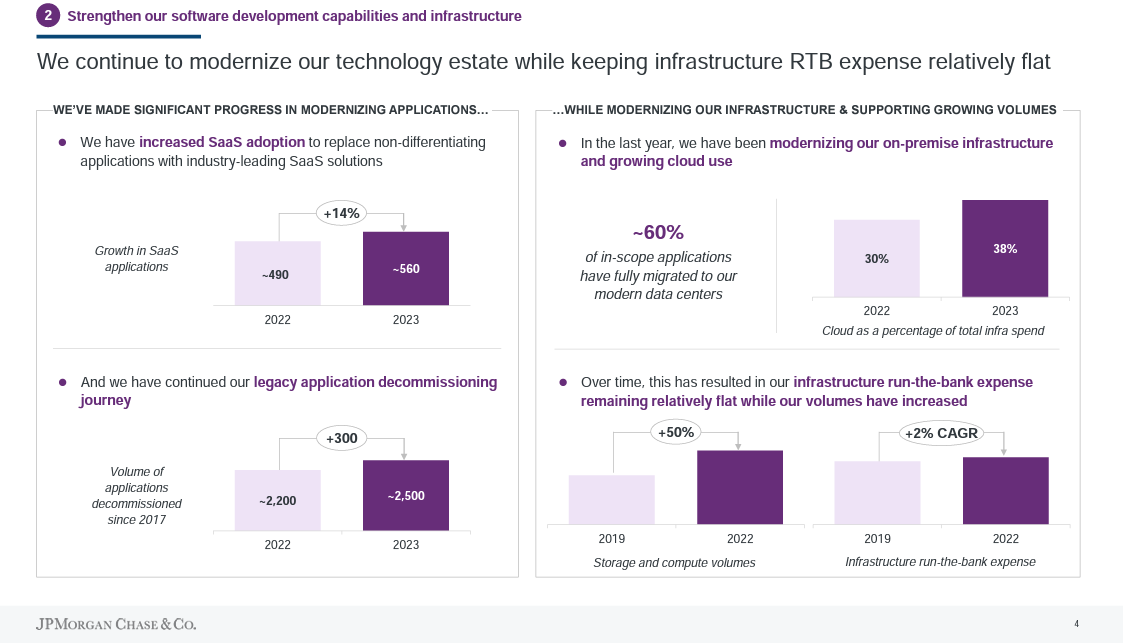

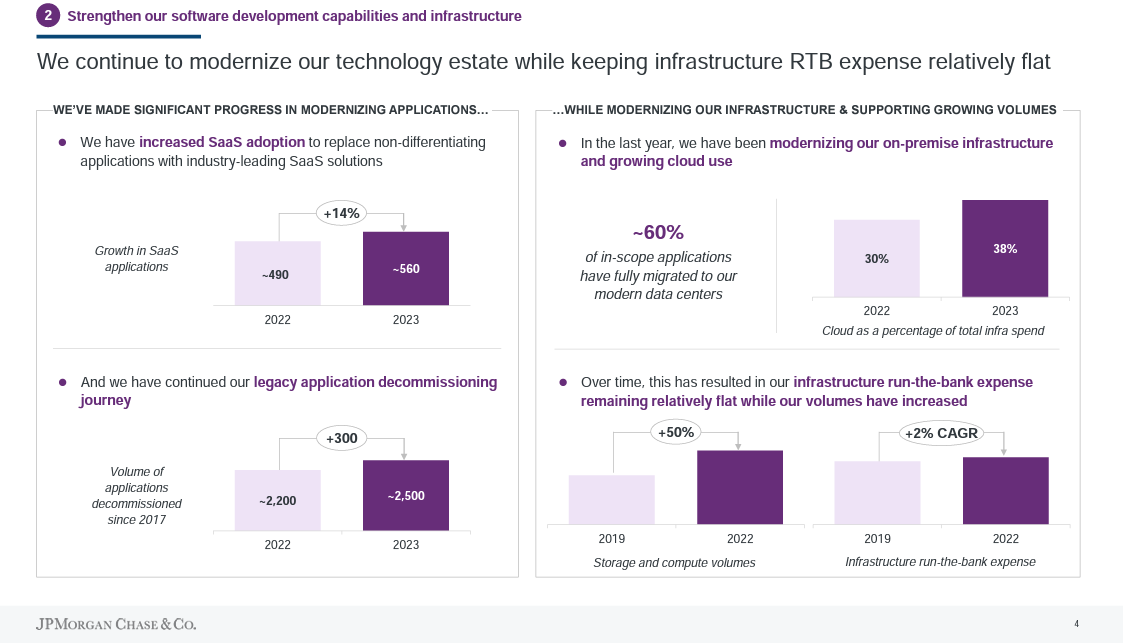

Applications. Beer said the bank has decommissioned more than 2,500 legacy applications since 2017 and is focusing on modernizing software to deliver products faster. The bank has more than 560 SaaS applications, up 14% from 2022. Beer said using industry-leading SaaS applications will make it easier to scale new products to more than 290,000 employees.

Infrastructure modernization. Beer said:

"To date, we have moved about 60% of our in-scope applications to new data centers, which are 30% more efficient, and this translates to 16,000 fewer hardware assets. We are also migrating applications to utilize the benefit of public and private cloud. 38% of our infrastructure is now in the cloud, which is up 8 percentage points year-over-year. In total, 56% of our infrastructure spend is modern. Over the next three years, we have line of sight to have nearly 80% on modern infrastructure. Of the remainder, half are mainframes, which are highly efficient and already run in our new data centers."

Beer said JPMorgan Chase has kept infrastructure expenses flat even though compute and storage volumes have increased 50% since 2019. For example, Chase.com is now served through AWS and averages 15 releases a week.

Engineering. Beer said JPMorgan Chase is equipping its 43,000 engineers with modern tools to boost productivity. The bank has adopted a framework that uses agile development practices to speed the move from backlog to production.

Data and AI. Beer said:

"We have made tremendous progress building what we believe is a competitive advantage for JPMorgan Chase. We have over 900 data scientists, 600 machine learning engineers and about 1,000 people involved in data management. We also have a 200-person top notch AI research team looking at the hardest problems in the new frontiers of finance."

Specifically, Beer said AI is helping JPMorgan Chase deliver more personalized products and experiences to customers, generating $220 million in benefits over the last year. At JPMorgan Chase's Commercial Bank, AI provided growth signals and product suggestions for bankers, which delivered $100 million in benefits, said Beer.

The data mesh

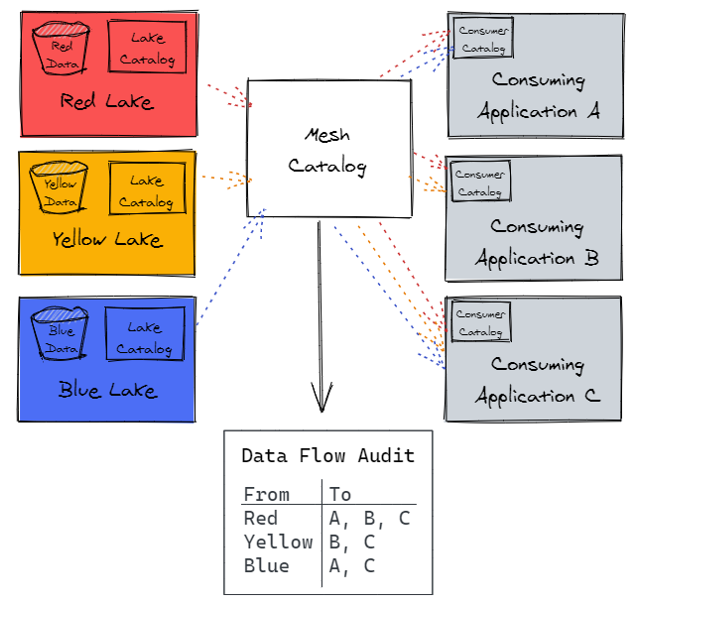

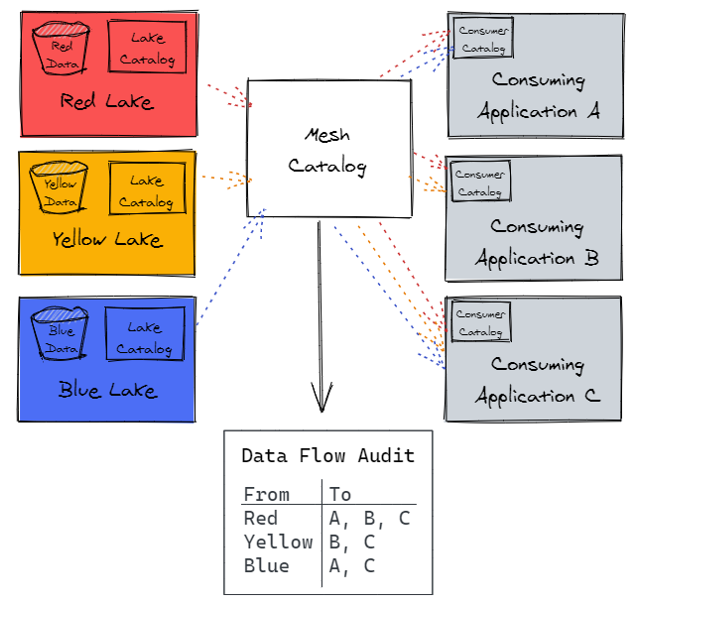

To capitalize on AI, JPMorgan Chase created a data mesh architecture that is designed to ensure data is shareable across the enterprise in a secure and compliant way. The bank outlined its data mesh architecture at a 2021 Data Mesh Learning meetup.

JPMorgan Chase said its data approach is to define data products that are curated by people who understand the data and its management requirements. A data product is a group of data from systems that support the business. Each data product is stored in its own product-specific data lake, and each data lake is separated by its own cloud-based storage layer. JPMorgan Chase uses technologies like AWS S3 for storage and AWS Glue to catalog the data in each lake.

Data is then consumed by applications that are separated from each other and from the data lakes. JPMorgan Chase said it makes each data lake visible to data users so they can query it.

At a high level, JPMorgan Chase said its approach will empower data product owners to manage and use data for decisions, share data without copying it and provide visibility into data sharing and lineage.

Here's how the architecture looks on a slide.

According to JPMorgan Chase, its architecture keeps data storage bills down and ensures accuracy. Since data doesn't physically leave the data lake, JPMorgan Chase said it's easier to enforce decisions product owners make about their data and ensure proper access controls.

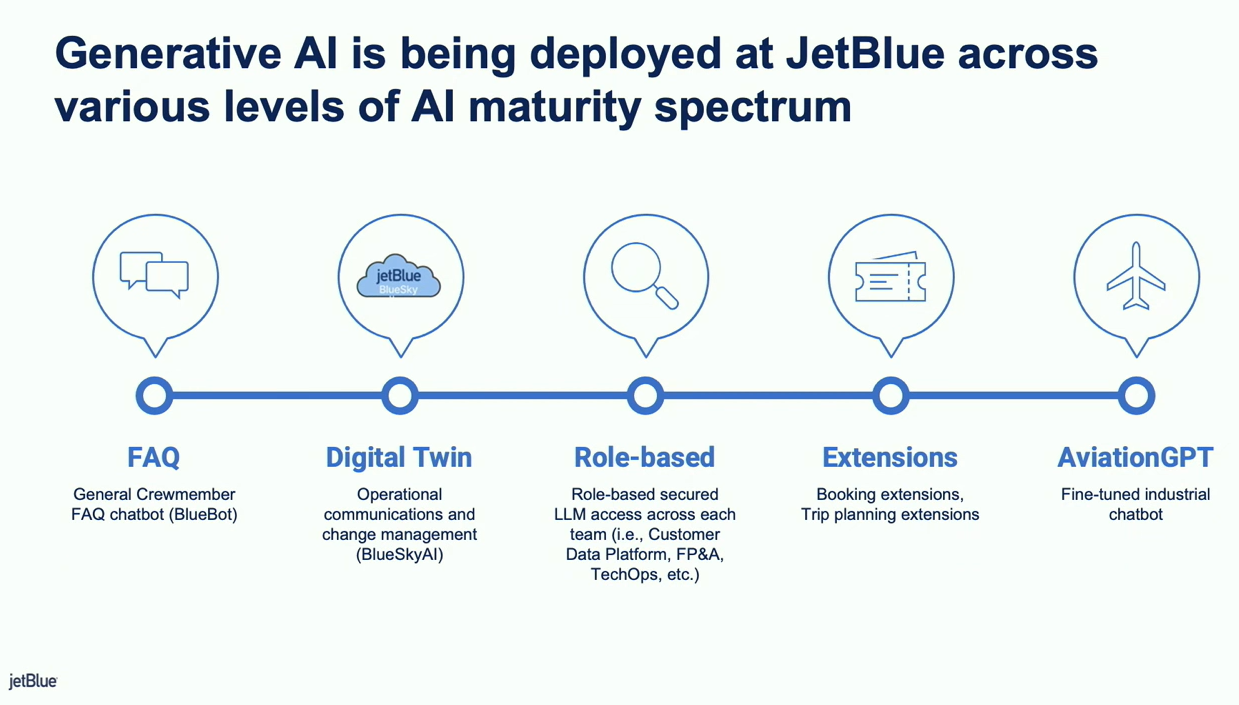
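The data mesh pattern described above can be sketched as a toy, in-memory Python model. This is not JPMorgan Chase's implementation. The class names (`DataProduct`, `Catalog`), the `grant` and `query` methods, the team names and the example bucket name are all hypothetical. A real deployment would use services such as AWS S3, AWS Glue and an access-control layer rather than Python dictionaries. The sketch shows three properties the bank describes. Each data product lives in its own store. Only the product owner decides who may read it. Consumers query the data where it lives, and every read is logged so the owner can see how the data is shared.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical data product: data owned by one business team, kept in its own lake."""
    name: str
    owner: str
    storage_uri: str  # illustrative per-product storage location, not a real bucket
    tables: dict[str, list[dict]] = field(default_factory=dict)
    allowed_consumers: set[str] = field(default_factory=set)

    def grant(self, requester: str, consumer: str) -> None:
        # Only the product owner may decide who can read this product's data.
        if requester != self.owner:
            raise PermissionError(f"{requester} does not own {self.name}")
        self.allowed_consumers.add(consumer)


@dataclass
class Catalog:
    """Hypothetical enterprise catalog: knows where each product lives and logs who reads it."""
    products: dict[str, DataProduct] = field(default_factory=dict)
    access_log: list[tuple[str, str, str]] = field(default_factory=list)

    def register(self, product: DataProduct) -> None:
        self.products[product.name] = product

    def query(self, consumer: str, product_name: str, table: str,
              predicate=lambda row: True) -> list[dict]:
        product = self.products[product_name]
        # Access is enforced against the owner's grants at read time.
        if consumer not in product.allowed_consumers:
            raise PermissionError(f"{consumer} has no access to {product_name}")
        # Record the read so data sharing stays visible to the owner.
        self.access_log.append((consumer, product_name, table))
        # Rows are filtered from the product's own store; no second copy of
        # the table is created in a consumer-owned store.
        return [row for row in product.tables[table] if predicate(row)]


# Usage: a hypothetical cards team shares a table with a hypothetical fraud application.
catalog = Catalog()
cards = DataProduct(
    name="card_transactions",
    owner="cards_team",
    storage_uri="s3://example-cards-lake/",  # hypothetical location
    tables={"txns": [{"id": 1, "amount": 120.0}, {"id": 2, "amount": 40.0}]},
)
catalog.register(cards)
cards.grant("cards_team", "fraud_app")
large = catalog.query("fraud_app", "card_transactions", "txns", lambda r: r["amount"] > 100)
print(large)               # [{'id': 1, 'amount': 120.0}]
print(catalog.access_log)  # [('fraud_app', 'card_transactions', 'txns')]
```

Access checks and the read log both sit in one place, the catalog. This keeps the sketch simple and mirrors the article's point: because data never leaves the product's store, owner decisions are easier to enforce.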

How JPMorgan Chase will address generative AI

Given JPMorgan Chase's data strategy and architecture, the bank can more easily leverage new technologies like generative AI. Speaking at the Databricks conference, Feinsmith said JPMorgan Chase is optimistic about generative AI but that it's very early in the game.

"There's a lot of optimism and a lot of excitement about generative AI. Businesses all know about it and generative AI will make us more productive," said Feinsmith. "But we won't roll out generative AI until we can do it in a responsible way. We won't roll it out until it's done in an entirely responsible manner. It's going to take time."

In the meantime, Feinsmith said the bank is working through the risks of generative AI. The promise for JPMorgan Chase is obvious: take 500 petabytes of data, train models on it, make it valuable and then use it to add value to open-source models.

Beer outlined the JPMorgan Chase approach during the bank's Investor Day in May.

"We couldn't discuss AI without mentioning GPT and large language models. We recognize the power and opportunity of these tools and are committed to exploring all the ways they can deliver value for the firm. We are actively configuring our environment and capabilities to enable them. In fact, we have a number of use cases leveraging GPT4 and other open-source models currently under testing and evaluation."

With Databricks, MongoDB and Snowflake all adding generative AI and LLM capabilities to the data stack, enterprises will have the tools when they're ready.

JPMorgan Chase has named Teresa Heitsenrether its chief data and analytics officer, a central role overseeing the adoption of AI across the bank. Heitsenrether oversees data use, governance and controls with the aim of harnessing AI technologies to effectively and responsibly develop new products, improve productivity and enhance risk management.

Heitsenrether is a 35-year veteran of JPMorgan Chase and was previously Global Head of Securities Services from 2015 to 2023.

Beer explained JPMorgan Chase’s approach to responsible AI:

“We take the responsible use of AI very seriously, and we have an interdisciplinary team, including ethicists, data scientists, engineers, AI researchers and risk and control professionals helping us assess the risk and build appropriate controls to prevent unintended misuse, comply with regulation, and promote trust with our customers and communities. We know the industry is making remarkably fast progress, but we have a strong view that successful AI is responsible AI."
