Data fabrics improve access to data wherever that data resides. Now Tableau wants to expose more business users to fabrics using its Tableau Prep, Tableau Catalog and data management capabilities.

Most organizations have embraced the imperative to harness as much data as possible to drive better decisions and innovative services, but that can be a challenge in an era in which data is distributed across on-premises data centers, software-as-a-service applications, multiple public clouds, and various other sources.

Proponents of older, centralized models, such as enterprise data warehouses and even data lakes, have largely acknowledged that organizations are likely to have multiple warehouses, multiple lakes and a mess of other systems and sources that will never be consolidated into a single, centralized management system.

That does not mean, however, that organizations have given up on centralized data access and governance. The data fabric is an architecture that has emerged to simplify timely access to data across an organization. Leading organizations are developing fabrics to provide fast, secure and well-governed access to data without having to move it, particularly where egress costs, data-residency requirements or simple expediency and convenience argue against moving it. Data fabrics are also built to evolve as sources and data locations change, whether because of shifting business conditions or corporate moves such as mergers and acquisitions.

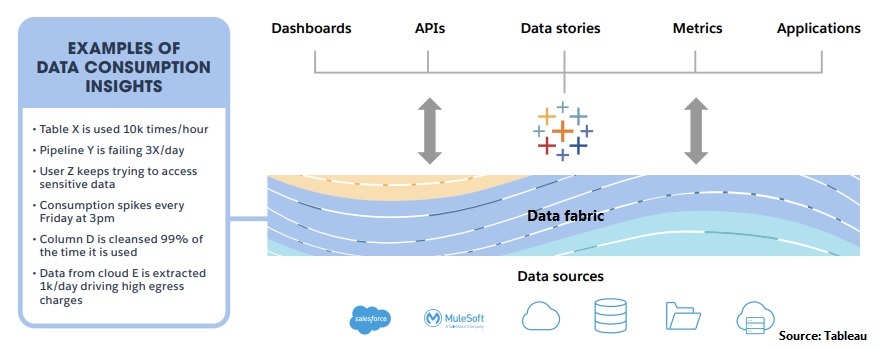

Tableau understands data fabrics to be “a combination of semantics, data integration processes, and prebuilt services optimized for delivering data to the data consumers,” says Chandrika Shankarnarayan, a vice president of product for Tableau at Salesforce. “The goal is to make it easy to deliver and share data by automating away many of the barriers that exist. We want to get to reusable, flexible analytics pipelines that can be automated for data access and sharing.”

Shankarnarayan is also co-author of a new white paper on “Building a Data Fabric for Analytics with Tableau,” which has been published just in time for next week’s Dreamforce event. “Organizations that pivot to this rapidly composable [fabric] design empower more people—developer and non-developer alike—to use data in a secure and frictionless way,” according to the white paper. “The Tableau suite of products… support and enhance data fabrics to accelerate this transformation.”

While the typical data fabric employs a “hub and spokes” model in which a central data management system determines which data can be pushed out to business units, Tableau wants to complement and extend this approach with a bottom-up methodology that “meets people where they work.” The approach is expected to enable subject matter experts to “create metadata, business rules, and reporting models to inform governance and security at the enterprise level,” says Tableau’s white paper.

Tableau intends to extend and support data fabric architectures in part by using automation and AI to enhance “last mile” consumption insights and deliver recommendations.

Needles and Thread

So just what are the capabilities that will facilitate a bottom-up approach supporting and extending existing fabrics? For starters, there’s the Tableau semantic layer, introduced in 2020, which is used to associate business-friendly metadata with raw data and to create schemas for quick analysis. Shankarnarayan also cites Tableau Prep and all of the components of Tableau Data Management, including Tableau Catalog and the data-connectivity layer.

With its visual preparation interface for combining, shaping, cleansing and applying metadata to sources, Tableau Prep is used by data professionals and data-savvy business types using Tableau Analytics. Tableau Prep also facilitates the building of automated data pipelines that make the propagation of metadata and appropriate data-access controls far easier. But if Tableau Prep and other Data Management tools are to democratize data fabrics, Shankarnarayan acknowledges that certain licensing approaches will have to evolve.

“We currently have a split experience with Tableau Prep. Most users can download Prep Builder, but the Prep Conductor piece, where flow automation happens, requires a Tableau Data Management license,” she explains. “We are looking to alleviate the friction in the future to help customers leverage Prep capabilities to the fullest extent within their Analytics experience.”

Tableau expects to take a number of steps over the next 18 months to remove some of this friction. Future enhancements will include tighter integration of Tableau Prep Web Authoring with Workbook Web Authoring, exposing Tableau Prep more broadly in the context of Tableau Analytics in the cloud.

Additionally, Tableau plans to streamline and simplify workflows between workbook creation and Tableau Prep Web Authoring.

“We also want to enable smart management between Tableau Prep and Tableau Analytics so users can click a button to go to an upstream Prep flow to publish a data source and vice versa,” says Shankarnarayan. “That's not easy today.”

Also expected over the next 18 months are more numerous and more complete integrations with third-party enterprise data catalog and data fabric vendors, because “customers are not going to make a choice between top-down versus bottom-up and just go with one,” she says. “We will see how we can partner with these vendors where they work best, regardless of which specific data fabric initiative customers use.”

Today, for example, Tableau can egress (export) metadata to partners Alation, Collibra, Data.World and Atlan, and the company plans to add the ability to ingress (import) metadata from these data catalog and governance vendors, bringing their metadata into the Tableau and Salesforce ecosystem.

The most visionary development that Tableau and Salesforce are working on is a data cockpit that will provide visibility into the relationships among the various semantics associated with metadata. The goal is to support impact analysis and, eventually, AI/ML recommendations, not just over that metadata, but also for downstream operations, whether they’re data-preparation or analytics steps. At this point the cockpit is in the crawl stage of the crawl-walk-run development cycle, says Shankarnarayan.

Doug’s Take

Not long ago, during a video panel discussion with a few analyst colleagues, one of my peers suggested that the number of data catalog users might one day surpass the number of BI/analytics users. I scoffed, instinctively feeling that end users just want to get their work done. That same instinct is why I’ve been an advocate of the growing trend toward embedding concise analytics into applications at decision points, eliminating the need to navigate to separate dashboards for insights before taking action.

I’m not maligning data catalogs, but I believe their appeal and utility are mostly among data professionals and analysts – the few who prepare data and insights for the many. Tableau’s push to make data fabrics more accessible to the many “where they work” is spot on, in my view, but Tableau still has work to do. Exposing Tableau Prep and Data Management capabilities more broadly would be a good start, but automation options and AI/ML-powered ways of applying metadata, sharing data insights and recommending sources will also be crucial to making fabrics more accessible to business users.

I’ll be attending next week’s Tableau keynote at Dreamforce and hope to hear hints of these plans. Those most interested in the data fabric topic can find more detail and roadmap plans in the company’s “Building a Data Fabric for Analytics with Tableau” white paper.
