How much generative AI model choice is too much?

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly.

With enterprises still kicking the tires on large language models (LLMs), use cases and generative AI applications, vendors are big on providing choice, bring-your-own-model options and the ability to mix and match foundation models.

How much LLM choice is too much? We'll probably find out in the months ahead, but I'd argue we're getting close. And the OpenAI kerfuffle is only going to invite more LLM startups looking to take share.

Consider the following:

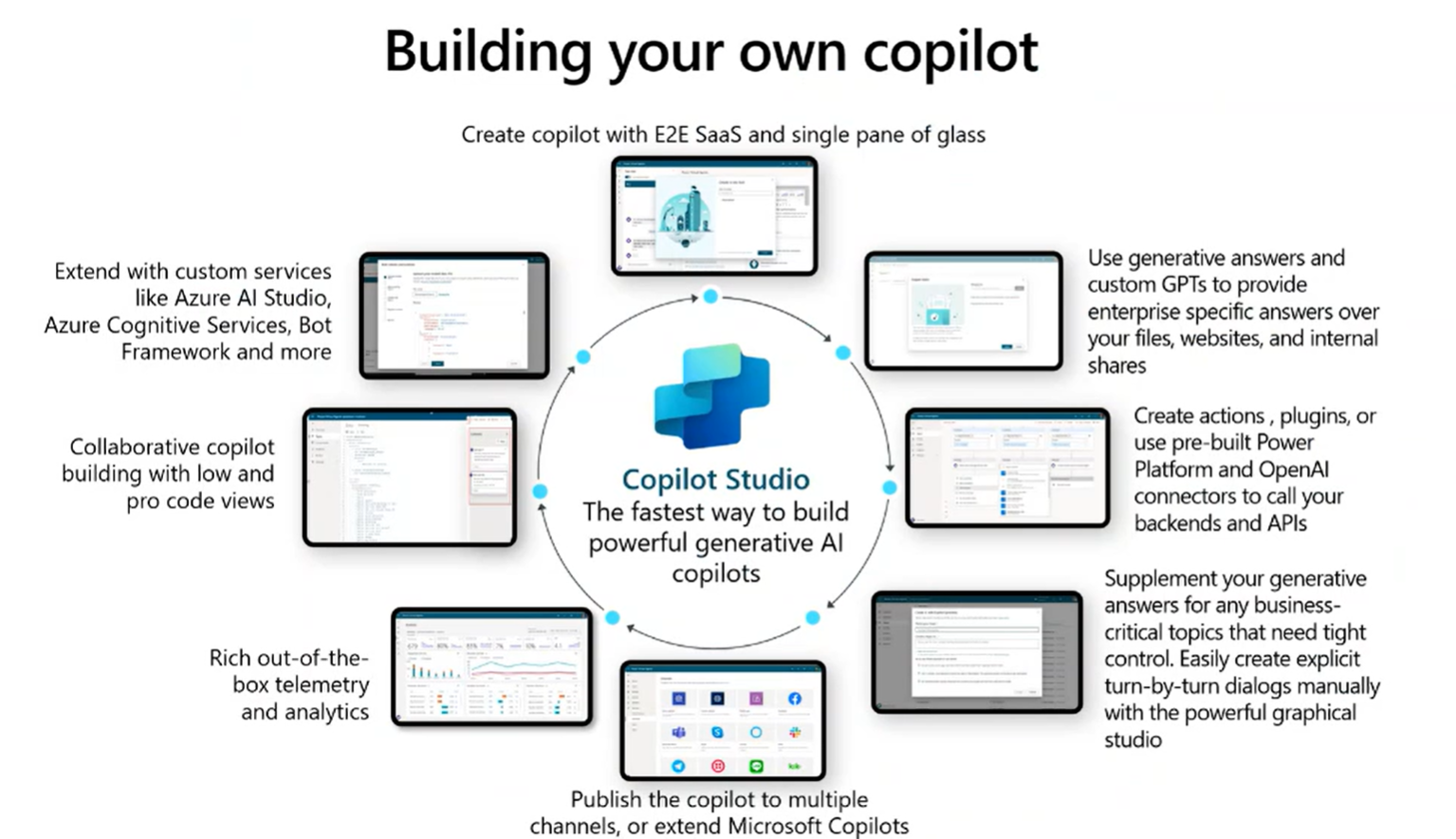

- Microsoft's Ignite conference featured Copilot Studio, a platform that will enable enterprises to use multiple models, create custom ones and extend Microsoft copilots. Microsoft will have a copilot for every experience. Meanwhile, Scott Guthrie, executive vice president of the Cloud + AI Group at Microsoft, told analysts the company is committed to model choice beyond its OpenAI partnership. Guthrie said enterprises will ultimately be able to use one model to fine-tune another and to mix models within the same interaction context.

- Dell Technologies and Hugging Face announced a partnership aimed at on-premises generative AI. The goal is to make it easier for enterprises to procure systems and tune open-source models.

- Microsoft launched Azure Models as a Service with Stable Diffusion, Llama 2 from Meta, Mistral and Jais, the world's largest Arabic language model. Command from Cohere will also be available.

- Google Cloud has its Model Garden, which features more than 100 models including foundational ones and task-specific ones.

- Amazon Web Services has Amazon Bedrock, a managed service that features models from AI21 Labs, Anthropic, Cohere, Meta, Stability AI and Amazon. I'm no psychic, but rest assured there will be a heavy dose of Bedrock at re:Invent 2023 later this month.

- OpenAI outlined plans to build out its ecosystem with custom GPTs and a marketplace to follow.

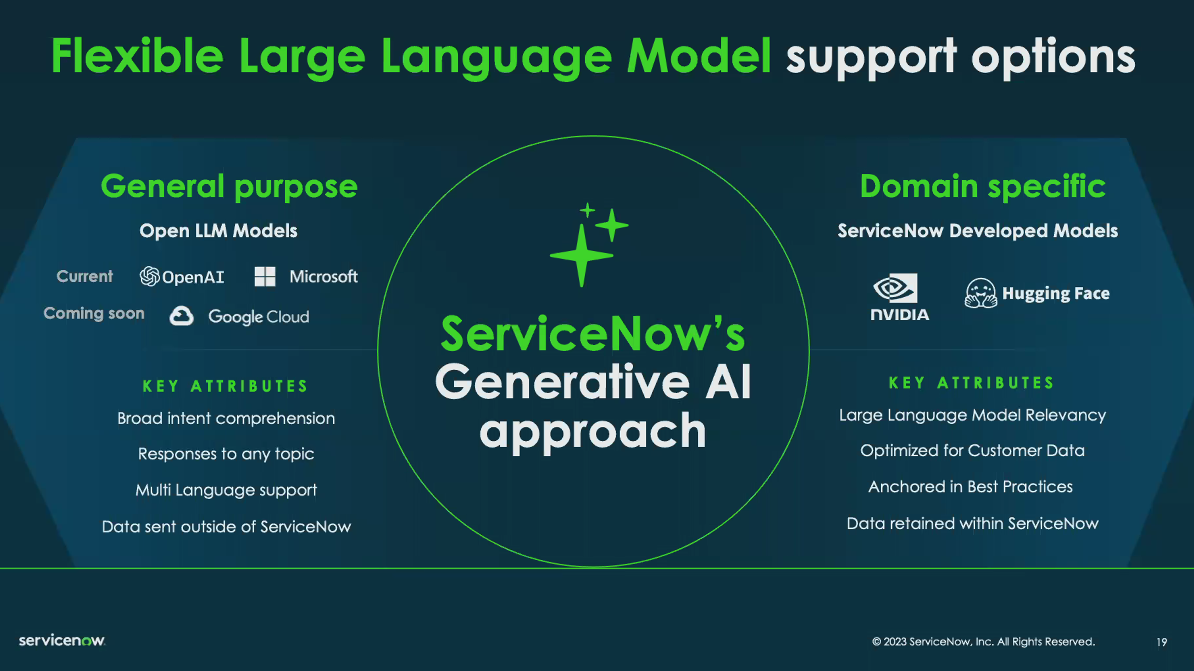

- Software vendors are also enabling bring-your-own-model arrangements, partnerships and the development of domain-specific models. ServiceNow's strategy plays both sides of the LLM equation: open LLMs as well as ServiceNow-developed models. Jon Sigler, senior vice president of the Now Platform at ServiceNow, said:

"We have a dual approach to models. We integrate general purpose models through a controller. We are also very focused on the smaller faster, more secure, less expensive models that do domain specific things. The combination of the large language models for general purpose and domain specific gives us the opportunity to do something that nobody else can do."

My hunch is that we'll reach an appropriate level of foundation model choice and then shift focus to small models aimed at specific use cases. Consider what Baker Hughes did with C3 AI for ESG materiality assessments: a specific model for a specific use case.

The current LLM landscape rhymes with the early big data buildout, when enterprises initially assumed they'd adopt open-source technology and then expand. The problem: For many enterprises, big data implementations never moved past the science experiment stage. There was no need for enterprises to run their own Hadoop/MapReduce clusters.

Will the average enterprise really know the difference between the latest GPT from OpenAI, Meta's Llama 2, Anthropic's Claude and MosaicML's MPT? Probably not. That choice just brings complexity. The real market in LLMs will be domain-specific models that drive returns. Foundation models will be commoditized quickly, and whether an enterprise chooses among 50, 100 or 1,000 models won't matter. A booming marketplace of custom models, however, will drive returns.

In the end, the massive selection of models will require an abstraction layer to make enterprise life easier.