Microsoft launches AI chips, Copilot Studio at Ignite 2023

Microsoft fleshed out its generative AI infrastructure plans with the launch of its custom processors for model training and inference along with Copilot Studio for new use cases.

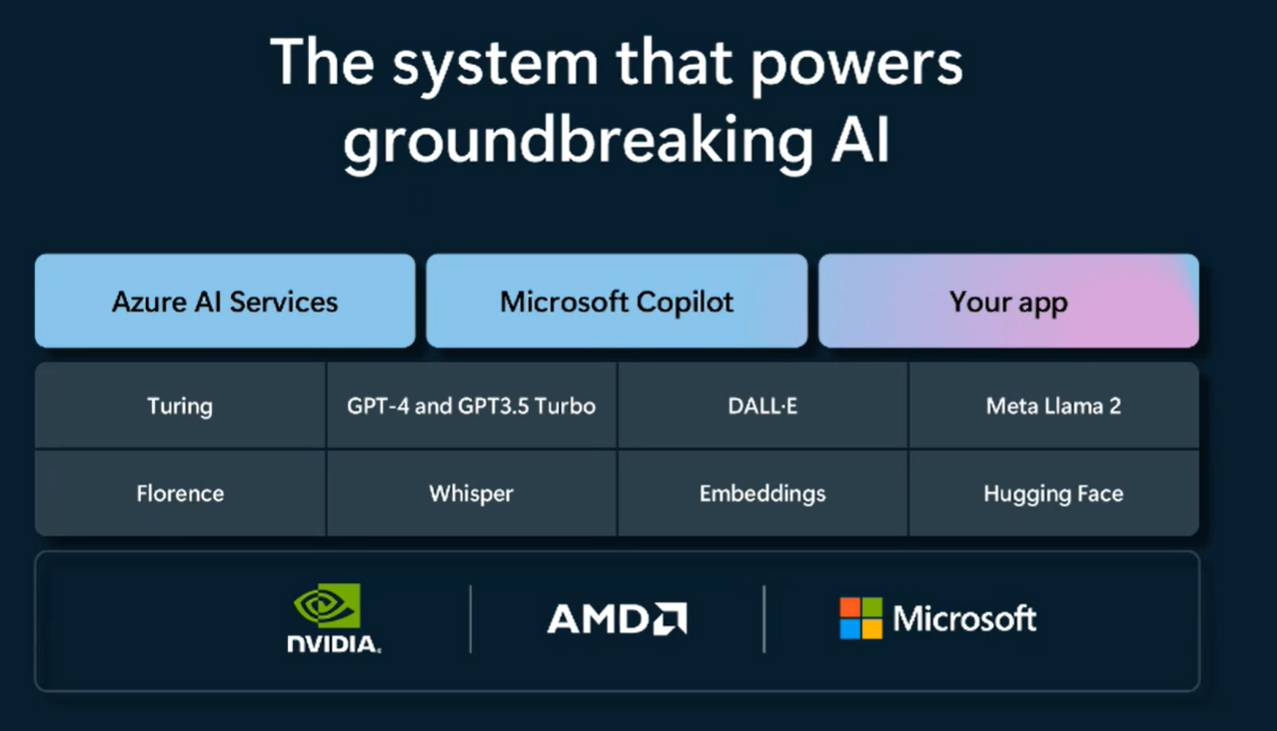

At its Ignite 2023 conference, Microsoft Azure joined its rivals in offering custom processors for model training. Nvidia has dominated the field, but it now faces competition from AMD as well as from custom processors such as Amazon Web Services' Trainium and Inferentia and Google Cloud's TPUs.

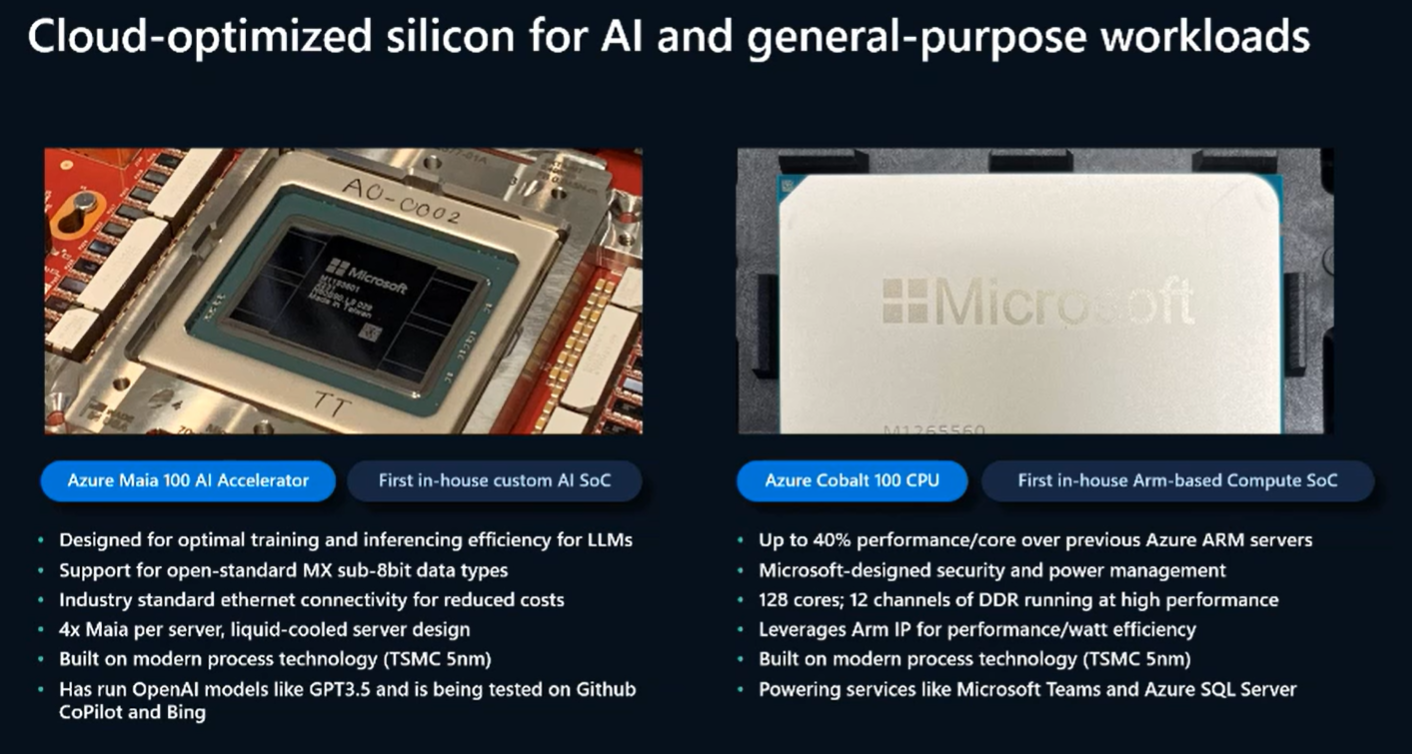

Microsoft launched the Azure Maia 100 AI Accelerator, the company's first in-house custom AI system on a chip. Azure Maia is designed to optimize training and inference efficiency for large language models (LLMs). The launch highlights how Microsoft is becoming a systems company, not just a software company. In a blog post, Microsoft reiterated that it will continue to work closely with Nvidia and will offer Nvidia's H200 GPUs as well as AMD's MI300X.

"We need to be even the world's best systems company across heterogeneous infrastructure," said Microsoft CEO Satya Nadella during his Ignite 2023 keynote. "We work closely with our partners across the industry to incorporate the best innovation. In this new age, AI will be defining everything across the fleet in the data center. As a hyperscaler, we see the workloads, we learn from them, and then get this opportunity to optimize the entirety of the stack."

According to Microsoft, Azure Maia features:

- Support for open-standard MX sub-8-bit data types.

- Industry-standard Ethernet connectivity.

- Four Maia accelerators per server with a liquid-cooled design.

- 5nm process technology from TSMC.

- The ability to run OpenAI models like GPT-3.5, with testing underway for GitHub Copilot and Bing.
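
For readers unfamiliar with the MX (microscaling) data types in the list above, the basic idea is that a small block of values shares a single power-of-two scale while each element is stored in very few bits. The Python sketch below is a simplified illustration under stated assumptions, not Microsoft's implementation or the exact MX encoding: the 32-element block size, the 4-bit signed-integer elements, and the function names `quantize_block` and `dequantize_block` are all choices made here for illustration.

```python
import math

# Assumptions for illustration only (not taken from the article):
BLOCK_SIZE = 32  # number of values intended to share one scale
ELEM_BITS = 4    # hypothetical sub-8-bit signed-integer element width


def quantize_block(values, elem_bits=ELEM_BITS):
    """Return (shared_exponent, integer_codes) for one block of floats."""
    qmax = 2 ** (elem_bits - 1) - 1  # largest code magnitude, 7 for 4 bits
    peak = max(abs(v) for v in values)
    if peak == 0:
        return 0, [0] * len(values)
    # Pick the smallest power-of-two scale that keeps peak / scale <= qmax.
    exponent = math.ceil(math.log2(peak / qmax))
    scale = 2.0 ** exponent
    # Round each value to the nearest code and clamp to the allowed range.
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return exponent, codes


def dequantize_block(exponent, codes):
    """Rebuild approximate floats from the shared exponent and the codes."""
    scale = 2.0 ** exponent
    return [c * scale for c in codes]


exponent, codes = quantize_block([0.5, -1.5, 3.5, 0.0])
print(exponent, codes)                    # -1 [1, -3, 7, 0]
print(dequantize_block(exponent, codes))  # [0.5, -1.5, 3.5, 0.0]
```

The storage saving comes from amortizing the one shared exponent across the whole block, so the per-value cost stays close to the element width. The trade-off is that a single large value in a block coarsens the resolution available to its neighbors.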

Rani Borkar, corporate vice president, Azure Hardware Systems and Infrastructure, said Microsoft is taking a systems approach to its infrastructure and optimizing for AI workloads. Azure has 60 data center regions today.

Borkar said Microsoft Azure is supporting a wide range of models from OpenAI and others. She also emphasized that Azure would continue to roll out instances based on the latest GPUs from Nvidia and AMD. Borkar said:

"To support these models, we are reimagining the entire cloud infrastructure with a top-to-bottom, end-to-end systems approach, with a deep understanding of model architecture and workload requirements. We are optimizing our systems with our custom silicon so that our customers can benefit from performance, power efficiency, and the cloud promise of trust and reliability."

Borkar said that Azure plans to optimize every layer of the AI stack to reduce costs and improve energy efficiency.

To that end, Microsoft also outlined the Azure Cobalt 100 CPU, the company's first Arm-based compute system on a chip. Microsoft said Cobalt delivers up to a 40% improvement in performance per core over previous Azure Arm servers. Features include:

- Microsoft-designed security and power management.

- 128 cores; 12 channels of DDR.

- Built on 5nm process technology.

- Powers Microsoft Teams and Azure SQL Server.

When asked whether Azure Maia delivers better price/performance, Borkar said each workload is different. The game plan is to let customers choose from a wide range of chips and ecosystems.

Constellation Research analyst Andy Thurai said:

"If Microsoft can prove that training and inferencing LLM custom models on their chipset can be more cost/power efficient than the competitors there may be an opportunity to get customers to engage. At this point, I don’t see much traction for the chips when they are released next year."

Copilot Studio

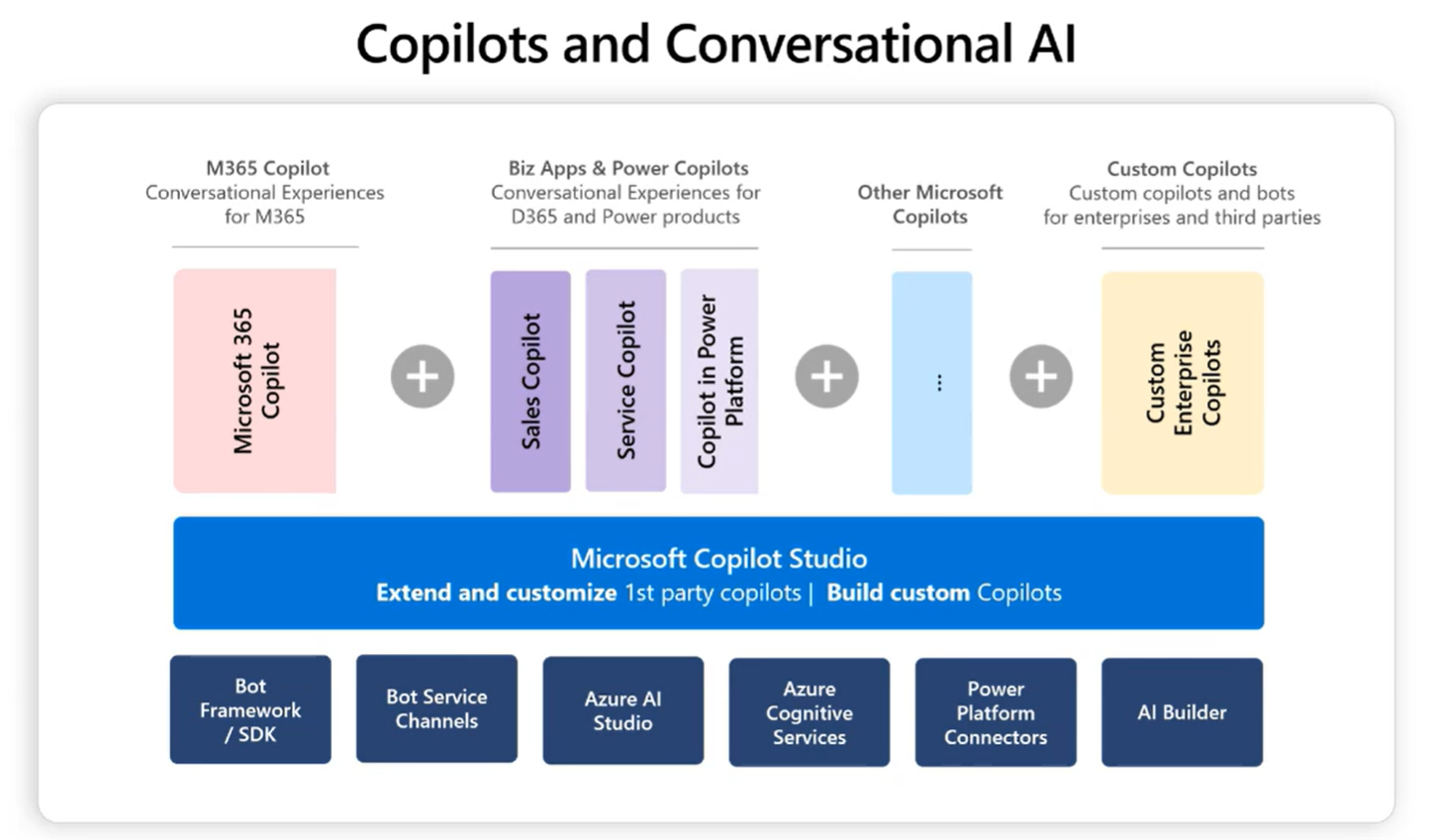

Along with Microsoft's custom processors, the company also launched Copilot Studio. Copilot Studio will be of interest to enterprises looking to customize copilots for new use cases.

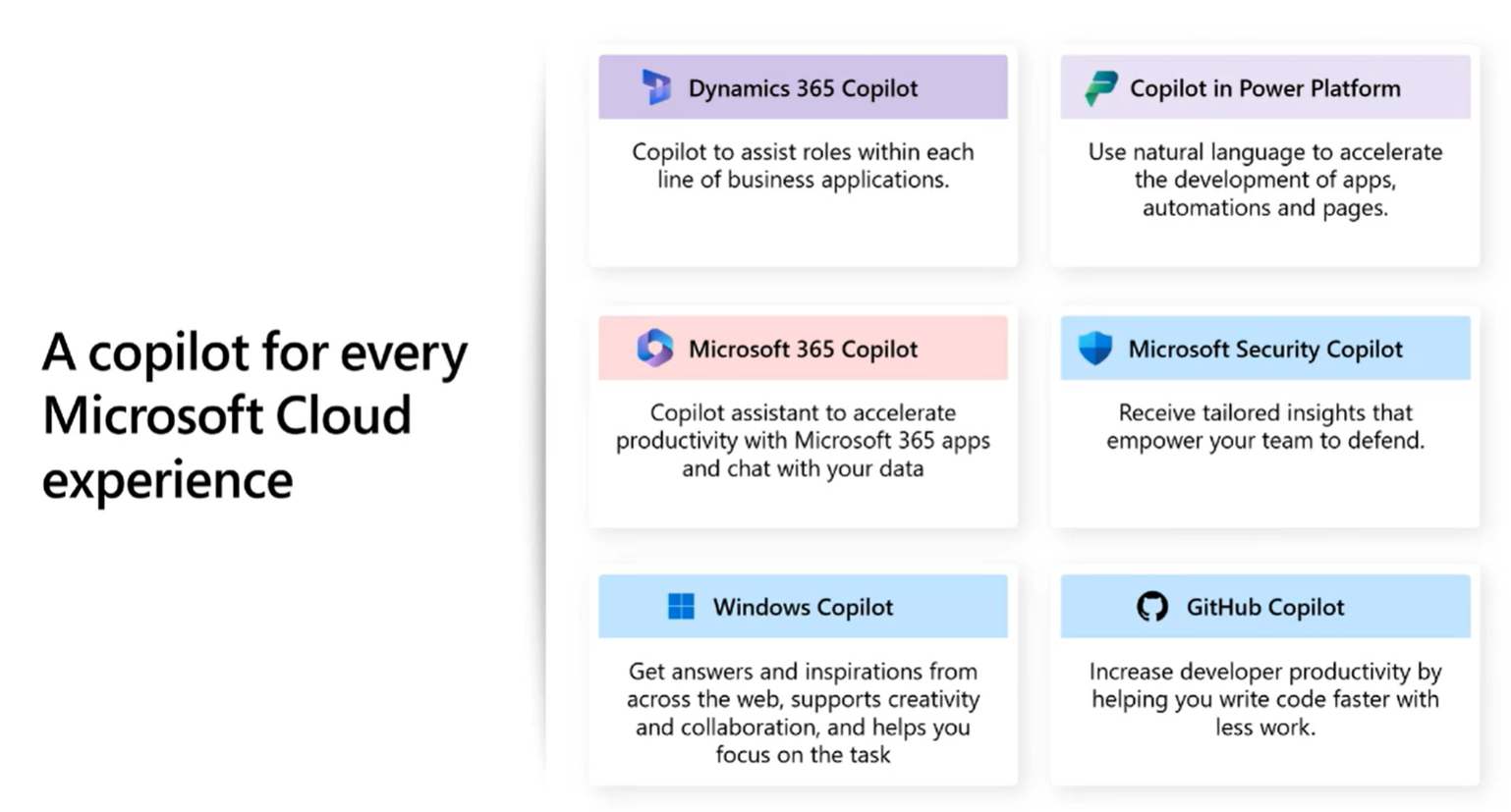

The game plan for Microsoft and Copilot Studio is straightforward: Build copilots for every Microsoft experience. Ignite features copilot hooks for everything from Teams to Power Apps to security and various business applications.

Omar Aftab, vice president of Microsoft Conversational AI, said Microsoft is building out a copilot ecosystem and Copilot Studio is the next iteration of that strategy. "Copilot Studio allows users to extend various first-party Copilots or build their own custom enterprise Copilots," said Aftab. "You essentially have a number of conversational tools at your fingertips."

Copilot Studio will also enable customers to build custom GPTs from OpenAI, and it is integrated with Microsoft Azure's various services.

Key points about Copilot Studio include:

- End-to-end lifecycle management.

- Managed components, APIs, Azure services and low-code tools and plug-ins in one place.

- Ability to supplement LLMs with business-critical topics.

- Built-in analytics and telemetry on Copilot performance.

- Access to corporate documents as well as public searches.

- Publishing on websites, SharePoint and other options.

- Ability to segment access by roles.

Ultimately, Microsoft plans to build a Copilot marketplace, but that will happen over time, said Aftab.