Enterprises Must Now Cultivate a Capable and Diverse AI Model Garden

My conversations with Chief Information Officers (CIOs) in 2024 continue to show that they remain under steady pressure from corporate boards to harness the strategic potential of Artificial Intelligence (AI) ahead of their competitors, while heading off the disruption AI is causing in their markets. This expectation places a significant burden on CIOs. They must not only understand and integrate AI into their existing IT portfolios faster than the technology is actually maturing, but also do so in a way that genuinely meets diverse business demands across departments, divisions, and geographies. The challenge is particularly daunting because no single AI model or vendor offers anything close to a one-size-fits-all solution. CIOs must therefore navigate a complex technology landscape, choosing from a fast-evolving set of AI models that can address specific needs while aligning with the enterprise's overall technology strategy.

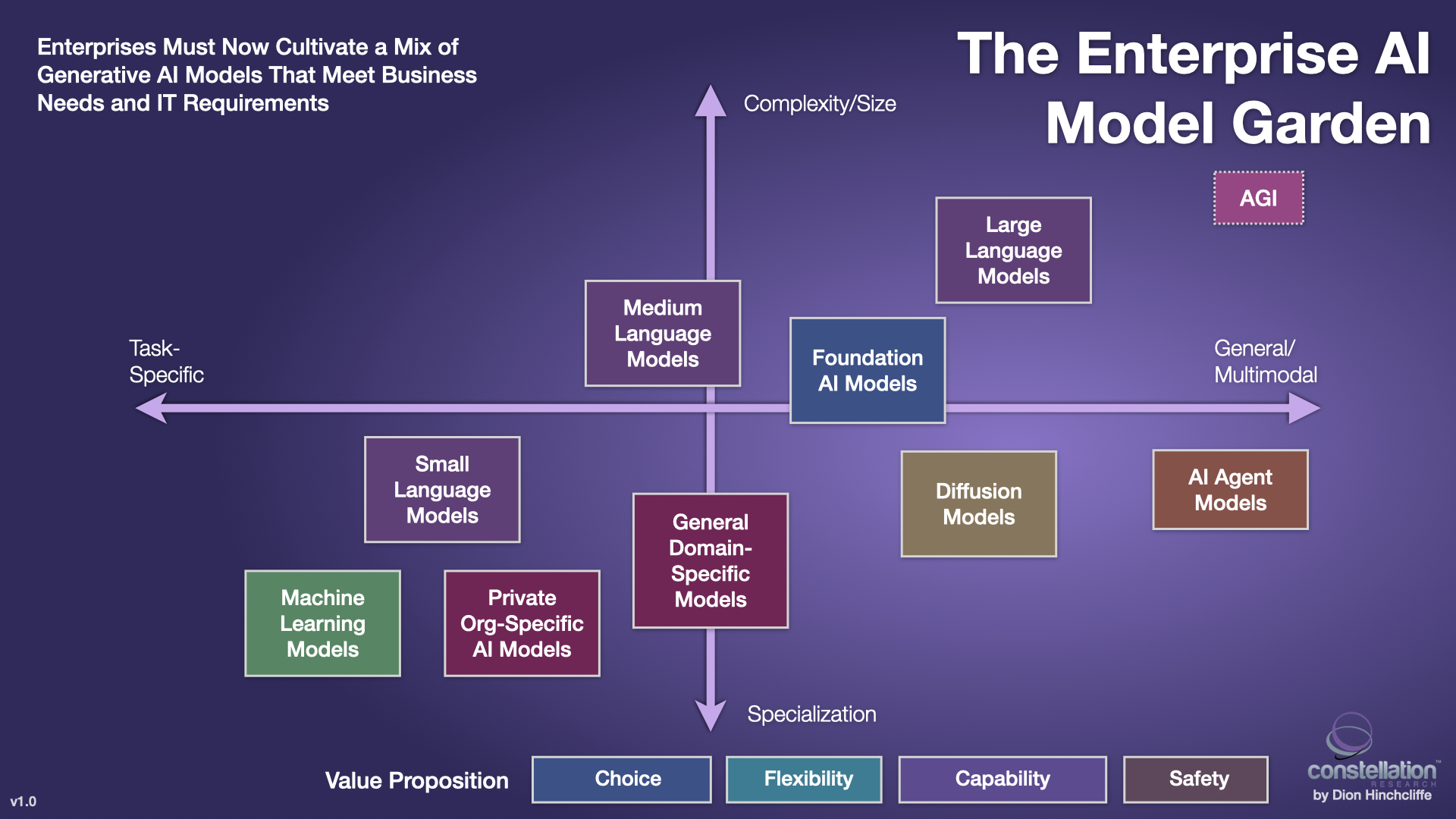

Developing a robust AI model portfolio, often visualized these days as a diverse 'garden' of AI models large and small, requires capability development, enthusiastic experimentation, thoughtful planning, and strategic foresight. AI models must not only provide good answers; they must also be cost-effective, as measured by metrics such as cost per kilo-inference (the cost of 1,000 inferences), and technologically sound enough to integrate seamlessly into the broader IT infrastructure. These models must also align with strict corporate governance standards, including centralized IT, AI, and cybersecurity policies, the safeguarding of personally identifiable information (PII), and regulatory compliance. The task requires a delicate balance of technical acumen and strategic management to ensure that each model performs efficiently and ethically within the corporate framework.
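The cost-per-kilo-inference metric is simple arithmetic: the total serving cost for a period divided by the number of inferences served in that period, scaled to 1,000. The Python sketch below assumes you can attribute a total cost (API fees, or amortized hardware, power, and operations) to each model; the function name and the monthly figures are hypothetical and chosen only to illustrate the calculation.

```python
def cost_per_kilo_inference(total_cost_usd: float, inference_count: int) -> float:
    """Return the average cost, in USD, of 1,000 inferences.

    total_cost_usd: everything attributed to serving the model over a period
    (hypothetical input: API fees or amortized hardware, power, operations).
    inference_count: number of inferences served over the same period.
    """
    if inference_count <= 0:
        raise ValueError("inference_count must be positive")
    return total_cost_usd / inference_count * 1000


# Hypothetical monthly figures for two candidate models on the same workload.
candidates = {
    "small-model": (120.0, 4_000_000),    # $120 for 4M inferences
    "large-model": (9_000.0, 3_000_000),  # $9,000 for 3M inferences
}

for name, (cost, count) in candidates.items():
    print(f"{name}: ${cost_per_kilo_inference(cost, count):.3f} per 1,000 inferences")
```

Normalizing to 1,000 inferences lets models with very different traffic volumes be compared on the same footing.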

To manage this complex integration and ensure that AI models deliver tangible business value, the CIO has emerged as virtually the only leader in the organization with the resources, insights, and ability to deliver enterprise-wide AI well. The CIO must champion the strategic deployment of AI across the enterprise, fostering a culture of innovation while navigating the technical and ethical challenges involved. By overseeing the development of a healthy and effective AI model mix, the CIO ensures that the enterprise not only keeps pace with technological advances but does so sustainably and in line with the company's long-term goals. This strategic leadership is essential for translating AI investments into competitive advantage and for meeting both the board's expectations and the company's operational needs.

Organizations Must Become Fluent in a Wide Variety of AI Models

The single largest differentiator in AI is now the specific model used to produce inferences for the business. Today the biggest discriminator between models is their size, because of the cost of training and operating them; domain-specific models are the next big frontier, especially in high-value use cases in healthcare, finance, and insurance. Organizations need enough models to meet different business requirements, but not so many that they cannot properly oversee or support them. A smart mix of models with varied capabilities, and as few gaps as possible, is therefore a top-level requirement.

| Aspect | Small Models | Medium Models | Large Models |
|---|---|---|---|
| Abilities | Handle specific tasks; limited complexity | Broader capabilities; moderate complexity | Advanced capabilities; high complexity, multimodal |
| Limitations | Specific, narrow use cases; struggles with complex tasks | Improved generalization but has limitations | Best generalization; can handle very complex reasoning tasks |
| Use Cases | Mobile apps, embedded systems, simple web services | Consumer applications, enterprise solutions, moderate analytics | Large-scale analytics, high-end services, sophisticated multimodal applications |
| Range of Parameters | Tens of thousands to a few billion | Few million to tens of billions | A hundred billion to trillions |
| Typical Hardware | CPUs, low-end GPUs | Mid-range GPUs, TPUs | High-end GPUs, TPUs, specialized AI hardware |
| Training Cost | Low | Moderate | High |
| Inference Speed | Fast | Moderate | Can be slower due to model size, depends on optimization |
| Data Efficiency | Requires less data to train, but less effective with new data | More data-efficient than small models, adaptive | Highly data-efficient, very adaptive, highest zero-shot probability |
| Flexibility | Low; often task-specific | Moderate flexibility; can adapt to various tasks | High; highly adaptable to new tasks and environments |
| Cost of 1,000 Inferences | $0.001 - $0.10 | $0.10 - $0.50 | $0.50 - $10.00 |
| Examples | | | |

Figure 1: The different sizes and capabilities of AI language models today
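One way to put a tiered mix like the one in Figure 1 into practice is to route each task to the cheapest tier that can handle it. The sketch below is a hypothetical routing rule, not a prescribed method: the three complexity levels and `pick_tier` are illustrative assumptions, and the cost ceilings are simply the upper ends of the per-1,000-inference ranges in the table.

```python
from dataclasses import dataclass
from enum import IntEnum


class Complexity(IntEnum):
    LOW = 1
    MODERATE = 2
    HIGH = 3


@dataclass(frozen=True)
class ModelTier:
    name: str
    max_complexity: Complexity  # hardest task the tier is trusted with (assumption)
    max_cost_per_1k: float      # upper end of the tier's cost range in Figure 1, USD


# Ordered from cheapest to most capable, mirroring Figure 1.
TIERS = (
    ModelTier("small", Complexity.LOW, 0.10),
    ModelTier("medium", Complexity.MODERATE, 0.50),
    ModelTier("large", Complexity.HIGH, 10.00),
)


def pick_tier(task: Complexity, budget_per_1k: float) -> ModelTier:
    """Return the cheapest tier that can handle the task within the budget."""
    for tier in TIERS:
        if tier.max_complexity >= task and tier.max_cost_per_1k <= budget_per_1k:
            return tier
    raise LookupError(f"no tier handles {task.name} tasks within ${budget_per_1k:.2f} per 1,000")


print(pick_tier(Complexity.MODERATE, budget_per_1k=1.00).name)
```

Checking the cheapest capable tier first keeps large models reserved for the tasks that genuinely need them, which is the point of cultivating a mix rather than standardizing on a single model.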

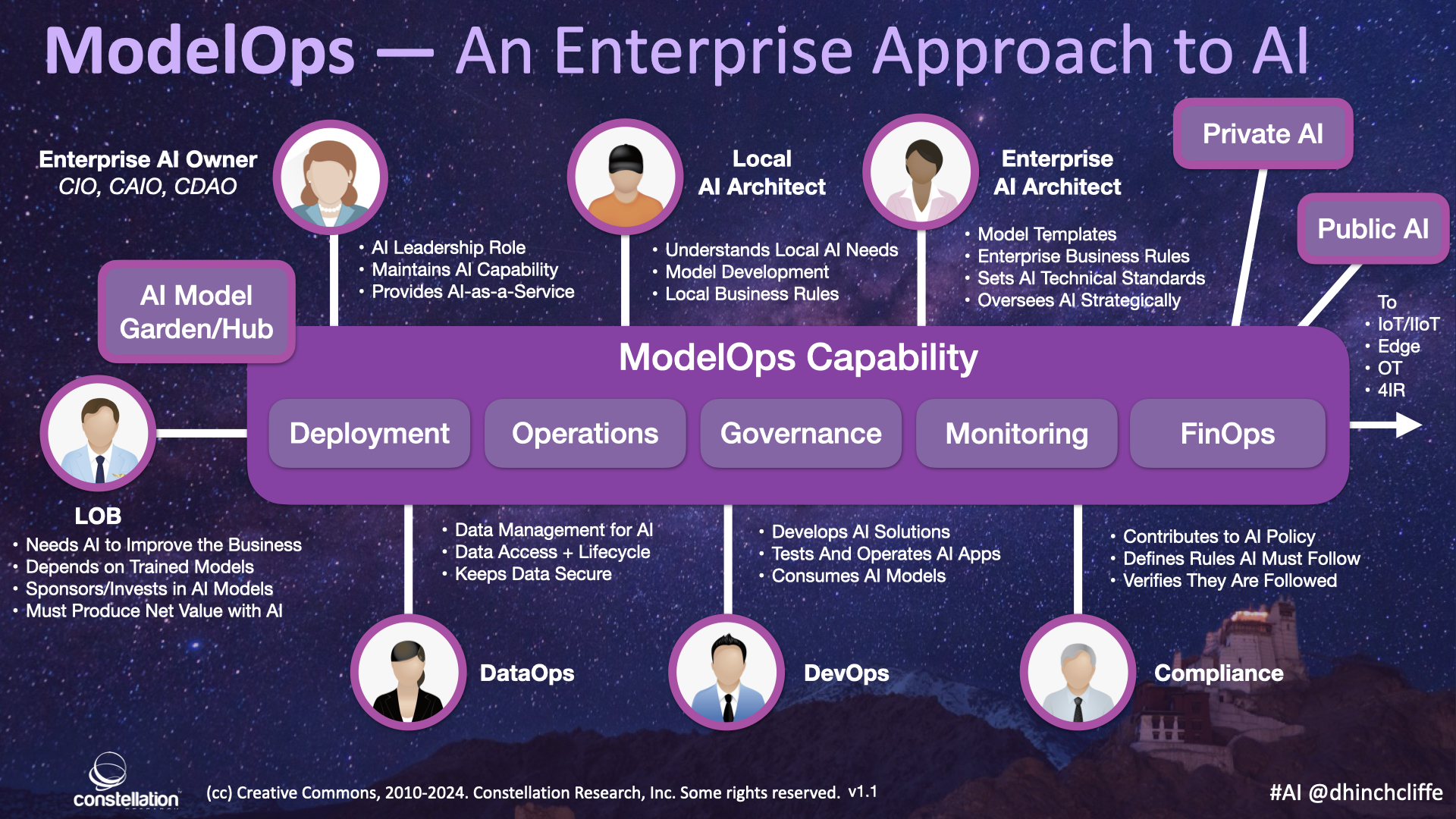

Successfully navigating the ever-changing AI landscape requires a robust supporting ModelOps strategy, along with centralized AI model gardens/hubs, which are typically overseen by IT together with the emerging Chief AI Officer or whoever is in charge of enterprise-wide AI. This centralized infrastructure is critical for ensuring that AI initiatives are implemented effectively, ethically, and in accordance with regulations. By establishing an overarching ModelOps practice and fostering a collaborative culture around AI development, CIOs can empower their organizations to unlock the transformative potential of AI and achieve significant business value, while providing a central capability to operate, monitor, measure, govern, and secure AI models.

ModelOps: The Engine Room of Enterprise AI

ModelOps encompasses the entire lifecycle of AI models, from development and testing to deployment, monitoring, and governance. A well-defined ModelOps strategy ensures that AI models are operationalized efficiently and effectively. It streamlines workflows, fosters collaboration between data scientists and IT operations teams, and promotes the responsible use of AI.
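The lifecycle described above can be modeled as a small state machine in which governance shows up as enforced transition rules plus an audit trail. This is a minimal sketch under assumptions: the stage names, the allowed transitions, and the `ModelLifecycle` class are hypothetical, and a real ModelOps platform would persist this state and attach approvals, test results, and monitoring metrics to each step.

```python
from enum import Enum


class Stage(Enum):
    DEVELOPMENT = "development"
    TESTING = "testing"
    DEPLOYED = "deployed"
    MONITORING = "monitoring"
    RETIRED = "retired"


# Hypothetical policy: which stages each stage may move to.
TRANSITIONS = {
    Stage.DEVELOPMENT: {Stage.TESTING},
    Stage.TESTING: {Stage.DEVELOPMENT, Stage.DEPLOYED},    # failed tests return to development
    Stage.DEPLOYED: {Stage.MONITORING},
    Stage.MONITORING: {Stage.DEVELOPMENT, Stage.RETIRED},  # drift prompts retraining or retirement
    Stage.RETIRED: set(),
}


class ModelLifecycle:
    """Tracks one model's stage and keeps an audit trail for governance reviews."""

    def __init__(self, model_id: str) -> None:
        self.model_id = model_id
        self.stage = Stage.DEVELOPMENT
        self.history = [Stage.DEVELOPMENT]

    def advance(self, target: Stage) -> None:
        """Move to target, refusing any transition the policy does not allow."""
        if target not in TRANSITIONS[self.stage]:
            raise ValueError(
                f"{self.model_id}: {self.stage.value} -> {target.value} is not allowed"
            )
        self.stage = target
        self.history.append(target)


lifecycle = ModelLifecycle("claims-summarizer")  # hypothetical model name
for step in (Stage.TESTING, Stage.DEPLOYED, Stage.MONITORING):
    lifecycle.advance(step)
print([s.value for s in lifecycle.history])
```

Attempting to jump straight from development to deployment raises an error, which is the kind of guardrail a ModelOps practice is meant to enforce consistently across teams.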

AI Model Gardens/Hubs: A Single Source of Truth

AI model gardens/hubs serve as centralized repositories for vetted and approved AI models, both public and, especially, private. These hubs give data scientists and business users easy access to reusable AI models, reducing redundancy and development time. AI model gardens/hubs also facilitate governance by ensuring that all deployed models adhere to organizational standards and regulatory requirements.
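At its core, a model garden/hub is a catalog keyed by model name and version that records whether each model has passed review. The sketch below is a hypothetical, in-memory illustration: `ModelGarden`, `ModelEntry`, the PII-clearance flag, and the model names are assumptions made for this example, whereas production hubs are usually built on a dedicated model registry service.

```python
from dataclasses import dataclass, field


@dataclass
class ModelEntry:
    name: str
    version: str
    source: str                                  # "public" or "private"
    tags: set[str] = field(default_factory=set)
    approved: bool = False                       # flipped only after governance review
    pii_cleared: bool = False                    # cleared to process PII (assumed policy flag)


class ModelGarden:
    """Hypothetical in-memory catalog of vetted models, keyed by (name, version)."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], ModelEntry] = {}

    def register(self, entry: ModelEntry) -> None:
        key = (entry.name, entry.version)
        if key in self._entries:
            raise ValueError(f"{entry.name}:{entry.version} is already registered")
        self._entries[key] = entry

    def approve(self, name: str, version: str) -> None:
        self._entries[(name, version)].approved = True

    def find(self, tag: str, needs_pii: bool = False) -> list[ModelEntry]:
        """Return approved models carrying the tag; PII workloads need cleared models."""
        return [
            e for e in self._entries.values()
            if e.approved and tag in e.tags and (e.pii_cleared or not needs_pii)
        ]

    def check_deployable(self, name: str, version: str) -> None:
        """Raise unless this exact model version is registered and approved."""
        entry = self._entries.get((name, version))
        if entry is None or not entry.approved:
            raise PermissionError(f"{name}:{version} is not approved in the model garden")


garden = ModelGarden()
garden.register(ModelEntry("claims-summarizer", "1.2", "private", {"summarization"}, pii_cleared=True))
garden.register(ModelEntry("open-chat", "0.9", "public", {"summarization", "chat"}))
garden.approve("claims-summarizer", "1.2")
print([e.name for e in garden.find("summarization", needs_pii=True)])
```

If deployment pipelines call `check_deployable` rather than pulling models directly, unvetted models are blocked at a single, central point, which is where the governance benefit of a hub comes from.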

The Need for a Sustainable AI Capability

The landscape of AI vendors, open-source AI projects, and their models is constantly evolving. By building a strong internal capability for ModelOps and AI model management, CIOs can avoid vendor lock-in and ensure that their organizations do not depend on any single provider. This future-proofs their AI strategy and lets them adapt to new technologies and business needs. ModelOps can also ensure that the energy consumption of AI models is understood and well managed, an issue that is rapidly gaining visibility and importance.
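Understanding energy consumption can start with a rough per-inference estimate. Assuming the serving hardware draws a roughly constant average power while sustaining a measured throughput, 1,000 inferences take 1000 / throughput seconds, and power multiplied by time gives energy. The function names and the example figures below are hypothetical; real inputs should come from the hardware's own power telemetry and measured throughput.

```python
def energy_per_kilo_inference_wh(avg_power_watts: float, inferences_per_second: float) -> float:
    """Estimate the watt-hours consumed by 1,000 inferences (constant-power assumption)."""
    if avg_power_watts < 0 or inferences_per_second <= 0:
        raise ValueError("power must be non-negative and throughput positive")
    seconds_per_kilo = 1000 / inferences_per_second
    joules = avg_power_watts * seconds_per_kilo  # watts x seconds = joules
    return joules / 3600                         # 3,600 joules = 1 watt-hour


def monthly_kwh(wh_per_kilo: float, monthly_inferences: int) -> float:
    """Scale the per-1,000 estimate to a monthly total in kilowatt-hours."""
    return wh_per_kilo * (monthly_inferences / 1000) / 1000


# Hypothetical example: a 700 W accelerator sustaining 50 inferences per second.
wh = energy_per_kilo_inference_wh(700, 50)
print(f"{wh:.2f} Wh per 1,000 inferences")
print(f"{monthly_kwh(wh, 3_000_000):.1f} kWh per month at 3M inferences")
```

Expressing energy per 1,000 inferences mirrors the cost-per-kilo-inference metric, so both can be tracked side by side for each model in the garden.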

The Strategic Imperative for AI Readiness

While the real business impact has yet to arrive, AI has clearly become a strategic technology. By taking a proactive approach to AI readiness and establishing a robust ModelOps practice, CIOs can prepare their organizations to seize the transformative potential of AI. This positions them to achieve operational efficiencies right away and significant financial gains in the medium term, and it also enables them to deliver a superior customer experience that provides a competitive edge.

Key Components of the ModelOps Enterprise Approach to AI

The visual above depicts a ModelOps enterprise approach to AI and outlines the roles and stakeholders involved. Here's a breakdown of its key components and roles:

- Enterprise AI Owner (CIO, CAIO, CDAO): Provides strategic oversight and leadership for the organization's AI initiatives.

- AI Architect: Establishes technical standards and best practices for AI development and deployment.

- Local AI Architect or SME: Understands the specific needs of different lines of business and translates them into actionable AI requirements.

- Line of Business (LOB): Represents the various business units within the organization that can benefit from AI solutions. Identifies use cases and champions AI adoption within their departments.

- Data Management for AI: Ensures that high-quality data is available to train and fuel AI models.

- Model Development: Develops, tests, and iterates on AI models to meet specific business needs.

- ModelOps Capability: Operationalizes AI models, including deployment, monitoring, and governance.

- Governance, Monitoring, and Compliance: Oversees the ethical and responsible use of AI models and ensures adherence to regulations.

- DevOps, DataOps: Integrates AI development and operations processes to streamline workflows.

- FinOps: Manages the financial costs associated with AI model development, deployment, and maintenance.
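The FinOps role often includes allocating AI spend back to the lines of business that consume it. The sketch below assumes usage is logged per line of business and per model, and that each model has an agreed cost per 1,000 inferences; `UsageRecord`, `chargeback`, the model names, and the prices are all hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class UsageRecord:
    line_of_business: str
    model: str
    inferences: int


def chargeback(records: list[UsageRecord], price_per_1k: dict[str, float]) -> dict[str, float]:
    """Sum each line of business's inference spend in USD.

    price_per_1k maps a model name to its cost per 1,000 inferences.
    A KeyError signals usage of a model with no agreed price.
    """
    totals: defaultdict[str, float] = defaultdict(float)
    for record in records:
        totals[record.line_of_business] += record.inferences / 1000 * price_per_1k[record.model]
    return dict(totals)


# Hypothetical prices and one month of usage.
prices = {"small-model": 0.25, "large-model": 4.00}
usage = [
    UsageRecord("Claims", "small-model", 2_000_000),
    UsageRecord("Claims", "large-model", 100_000),
    UsageRecord("Marketing", "large-model", 50_000),
]
for lob, cost in chargeback(usage, prices).items():
    print(f"{lob}: ${cost:,.2f}")
```

Failing loudly on an unpriced model, rather than silently charging zero, keeps untracked models from slipping past FinOps oversight.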

The allure of specific AI models and vendors remains a siren song, and there are growing worries that some organizations won't see immediate returns (which is typical of many advanced technologies). Even so, building a sustainable internal capability for ModelOps and AI model management remains crucial for the long-term health of the business. A strong foundation for data, AI models, and associated operations future-proofs the organization's AI strategy and allows it to adapt to evolving technologies and business needs. Ultimately, this maximizes the return on AI investment and keeps AI ready and strategically aligned with the organization's goals as vital opportunities arise.
