Vice President & Principal Analyst

Constellation Research

About Liz Miller:

Liz Miller is Vice President and Principal Analyst at Constellation, focused on the org-wide team sport known as customer experience. While covering CX as an enterprise strategy, Miller spends time zeroing in on the functional demands of Marketing and Service and the evolving role of the Chief Marketing Officer, the rise of the Chief Experience Officer, the evolution of customer engagement, and the rising requirement for a new security posture that accounts for the threat to brand trust in this age of AI. With over 30 years of marketing experience, Miller offers strategic guidance on the leadership, business transformation, and technology requirements to deliver on today’s CX strategies. She has worked with global marketing organizations to transform…

The voice was calm yet determined. Frank was dead. Dave remained.

“Open the pod bay doors, HAL.”

“I’m sorry, Dave. I’m afraid I can’t do that.”

The artificial intelligence aboard the Discovery One spacecraft in Stanley Kubrick’s film 2001: A Space Odyssey (inspired by Arthur C. Clarke’s short story The Sentinel) had been listening in and wasn’t having what Dave had in mind. The heuristically programmed algorithmic computer was designed to solve problems quickly…and people were the problem.

HAL 9000 is a delicious villain. In fact, HAL was named the 13th greatest movie villain of all time by the American Film Institute. The cool indifference of HAL is haunting. But sadly, HAL and other nihilistic machines like him have become the baseline of awareness about AI for FAR too many people.

While conversations about AI start with innovation and the change it can usher in, they inevitably turn to the danger of the machines taking over. From discussions around ethical AI to the capacity for sentience, there is a sense that AI, left unchecked or allowed to read lips, will try to take over and be the downfall of humanity. There is never an in-between.

But what does AI mean for the average, everyday Marketing team? In the early days of OpenAI’s ChatGPT, headline after headline declared that generative AI would eventually be “replacing marketers” because of its ability to generate ad campaign copy, slogans and email subject lines in seconds. A variation on the HAL theme to be sure, but the script still casts a sentient super-villain machine, with a touch of bloodlust, rising to rid the world of agency copywriters and marketing managers.

Before ChatGPT shows us the pod bay doors, let’s take a step back and consider whether we got our movie references all wrong. What if AI in marketing is less 2001: A Space Odyssey and more The Devil Wears Prada?

As the tale goes, the devil boss, Miranda, has a new assistant, Andy, the protagonist of the book and movie. At a glamorous charity gala, a swanky donor approaches to greet the hostess. Andy leans in and whispers the guest’s name, along with a couple of key factoids, just in time for Miranda to throw her arms up with all the warmth and recognition of an old friend.

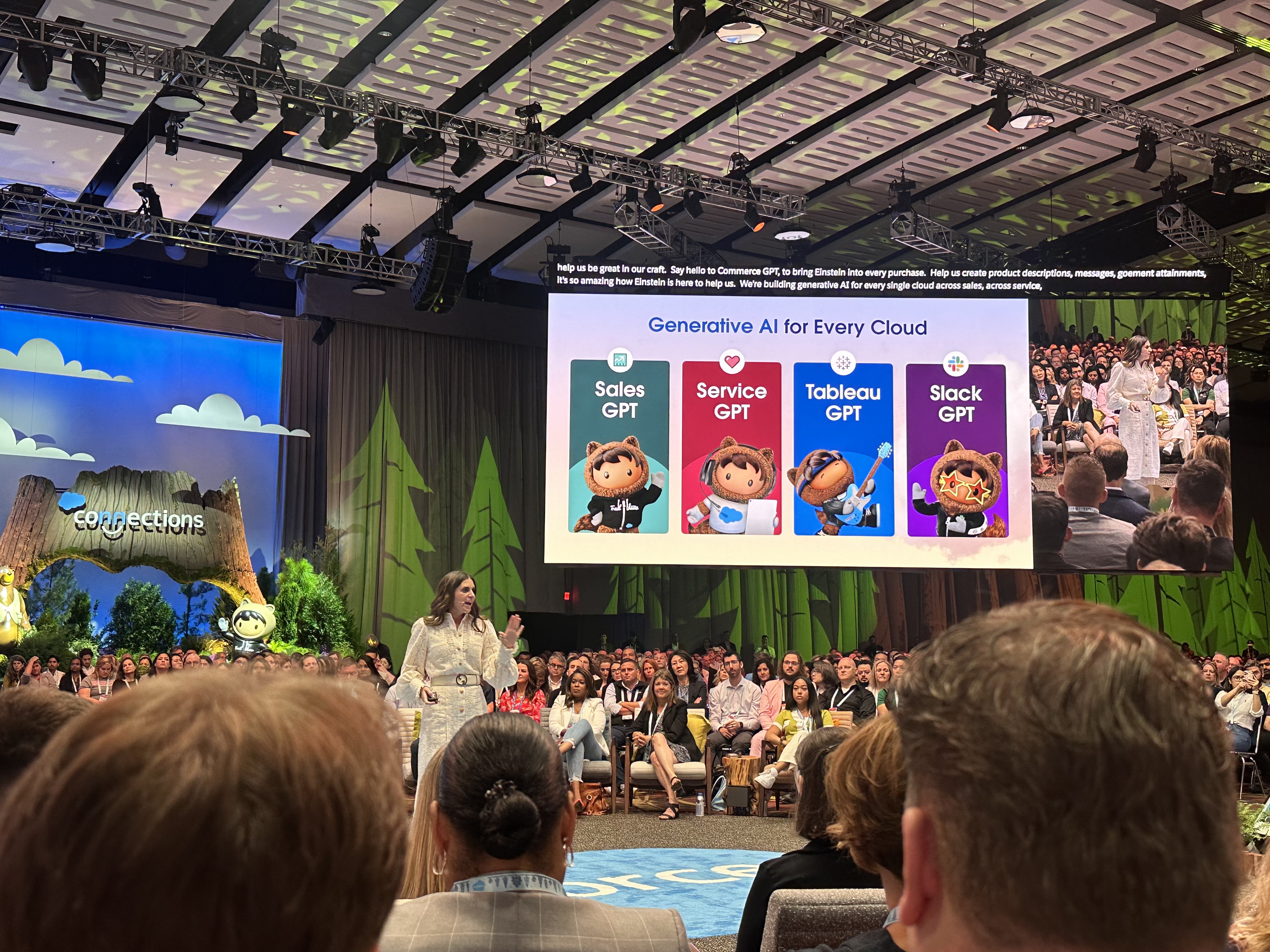

Andy, not HAL, is the AI Marketing needs. And Andy…or rather Salesforce’s version of her…is called Marketing GPT and was purpose-built to lean in and whisper exactly what a marketer needs to engage and interact in the most personal and profitable way. Trained to not just understand customers, conversations, or engagement, but trained to also understand a specific business, Marketing GPT draws intelligence from Data Cloud and relies on a new trust layer to ensure that this isn’t just a story of the right message to the right customer at the right time…but the right model to deliver the right personalization and contextualization to the right marketer.

Digging into the Marketing GPT announcements, let’s focus on a couple of highlights that stood out (at least to me):

- Segment Creation: imagine just asking your marketing tools to create a new audience segment. Marketers understand that the question is rarely the problem…instead it is all the preparation that is required to even get to the point of asking. With Segment Creation, both sides of that audience opportunity equation are addressed with AI, bringing the data together and giving marketers the opportunity to interrogate that data differently, all using natural language.

- Segment Intelligence for Data Cloud: This is where marketing’s work proves impact and real, tangible business value, by connecting the first-party data marketers rely upon for deeper engagement with revenue data and third-party paid media data. This isn’t just about ‘more metrics.’ Instead, Segment Intelligence is about obtaining a truly comprehensive view of audience engagement. Knowing how someone engaged with initiatives is great…knowing how that engagement connected to the business and revenue is even better.

There are other AI-super-powered capabilities in this initial introduction of Marketing GPT including integrating generative AI tools into everything from email content creation (with auto-generated copy recommendations that can be included in testing and engagement campaigns) to integrations with the creative upstart Typeface to create contextual visual assets that are aligned with approved brand voice, style guides and messaging.

Another announcement of note came with the Salesforce Commerce GPT introduction. While the solution is packed with AI-powered assistive tools, including Commerce Concierge for personalized, engaging shopping experiences and Dynamic Product Descriptions for automatically filling in missing catalog data for merchants, it was the inclusion of Goals-Based Commerce that had me leaning in to learn more. This is not just about delivering the capacity for growth; it is about productive and efficient growth. With the Goals-Based Commerce tool, brands can set targets and goals based on what is top of mind for the business (and let’s be honest, those details can change minute to minute, even while all still point towards profitability) and get AI-powered recommendations and even automations to help reach those goals. It connects Data Cloud, Einstein AI and Salesforce Flow to move quickly from goals to outcomes.

While AI is important to business, trusting AI is critical to us all. This was the message delivered time and again at both Connections and Salesforce AI Day. So HOW does Salesforce make Marketing GPT a safe AI solution built for the enterprise? It truly starts and stops with data.

Salesforce AI Cloud is billed as a cloud-based, end-to-end AI solution that supports multiple models, prompts and training data sets. A purpose-built suite of capabilities, AI Cloud works to deliver trusted, real-time generative experiences across all applications and workflows, with a focus on super-charging CRM. Einstein sits at the heart of AI Cloud and, according to Salesforce, now powers over 1 trillion predictions per week across Salesforce applications. With AI Cloud, organizations can tap into multiple trusted large language models in an environment that is open and extensible. Customers will be able to choose the right model for the right task, whether that is a third-party LLM, Salesforce’s proprietary LLM (developed by Salesforce AI Research) or a customer’s own custom LLM. See: Salesforce launches AI Cloud, aims to be abstraction layer between corporate data, generative AI models

Initial LLM providers include AWS, Anthropic and Cohere. Salesforce had previously announced an extensive partnership with OpenAI and access to the GPT-4 model through its APIs. Salesforce has also announced a partnership and integration with Google’s Vertex AI, adding yet another bring-your-own-model capability to the mix (alongside the previously announced ability to bring models from Amazon SageMaker), delivered directly through the newly announced Einstein GPT Trust Layer.

Why is this “trust layer” so important? It brings us back to the trust factor. Whether these models are the customer’s own (via Google Vertex AI), from Salesforce or from a third party like OpenAI, a customer’s data remains within the boundaries established as trusted BY the customer. The trust layer is intended to house the identity and governance controls, so that company data is not simply handed over to a model, as many organizations fear. Instead, once a query is run on a customer’s system, the relevant data (including data that has been aggregated and harmonized in Salesforce Data Cloud) is retrieved, masked and fed to the model via a secure gateway to generate the response. The prompt is not retained by the model, and within seconds the response is delivered back, routed through what Salesforce calls “toxicity detection,” and finally audited and logged for visibility.
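To make that sequence concrete, here is a minimal Python sketch of the retrieve, mask, generate, screen and log flow described above. It is not Salesforce’s implementation or API: `TrustLayer`, `mask`, `is_toxic`, the regex patterns and the keyword blocklist are all hypothetical stand-ins, and the "model" is a plain function standing in for a real LLM behind a gateway.

```python
import re
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable

# Hypothetical patterns for values a masking step might hide before a prompt leaves.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

# Toy blocklist standing in for a real toxicity classifier (assumption).
BLOCKLIST = {"idiot", "stupid"}


def mask(text: str) -> str:
    """Replace email addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def is_toxic(text: str) -> bool:
    """Crude keyword check; a production system would use a trained model."""
    words = re.findall(r"[a-z']+", text.lower())
    return any(w in BLOCKLIST for w in words)


@dataclass
class TrustLayer:
    model: Callable[[str], str]  # hypothetical gateway call to any LLM
    audit_log: list = field(default_factory=list)

    def run(self, query: str, records: list[str]) -> str:
        # 1. Ground the prompt in retrieved records, masking them first.
        context = "\n".join(mask(r) for r in records)
        prompt = f"{query}\n\nContext:\n{context}"
        # 2. Send only the masked prompt; this layer does not store it.
        response = self.model(prompt)
        # 3. Screen the response before it reaches the marketer.
        flagged = is_toxic(response)
        if flagged:
            response = "[response withheld by toxicity filter]"
        # 4. Log what happened for visibility, without keeping the prompt text.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "masked_prompt_chars": len(prompt),
            "flagged": flagged,
        })
        return response


# Usage: an "echo" model shows exactly what would have left the trust boundary.
layer = TrustLayer(model=lambda prompt: prompt)
print(layer.run("Draft a follow-up email.",
                ["Jane Doe, jane@example.com, +1 415 555 0100"]))
# The printed prompt contains [EMAIL] and [PHONE] instead of the raw values.
```

The design choice worth noting is ordering: masking happens before the model call and screening happens after it, so neither the outbound prompt nor the inbound response bypasses a control, and the audit entry records that the exchange happened without retaining its content.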

The promise here is that enterprises can secure, govern and orchestrate AI in a more constructive and intentional way. This is not a new concept. Trust and “enterprise-ready” offerings, tools and promises are cropping up everywhere, from Adobe (with the guardrails around its suite of generative AI models in Adobe Firefly) to Microsoft’s Azure OpenAI Service (which only addresses safety and moderation of text and image generation using OpenAI models) and Nvidia’s open-source toolkit, NeMo Guardrails, which takes aim at toxic content.

But Salesforce arguably feels a responsibility to push innovation forward and to take the lead on the tough ethics and security conversations in AI. For Salesforce, the Einstein GPT Trust Layer is a critical, if not mandatory, move.

Marketing GPT tools are quickly entering pilot this summer (as early as June), and many are expected to reach general availability (GA) by October 2023; Segment Creation and Segment Intelligence for Data Cloud, for example, are both expected to go GA by October. Other tools, such as Dynamic Product Descriptions, are expected to be GA in July 2023, with Goals-Based Commerce expected by February 2024. This is a welcome departure for Salesforce, which has earned a reputation for long aspiration-to-availability timelines.

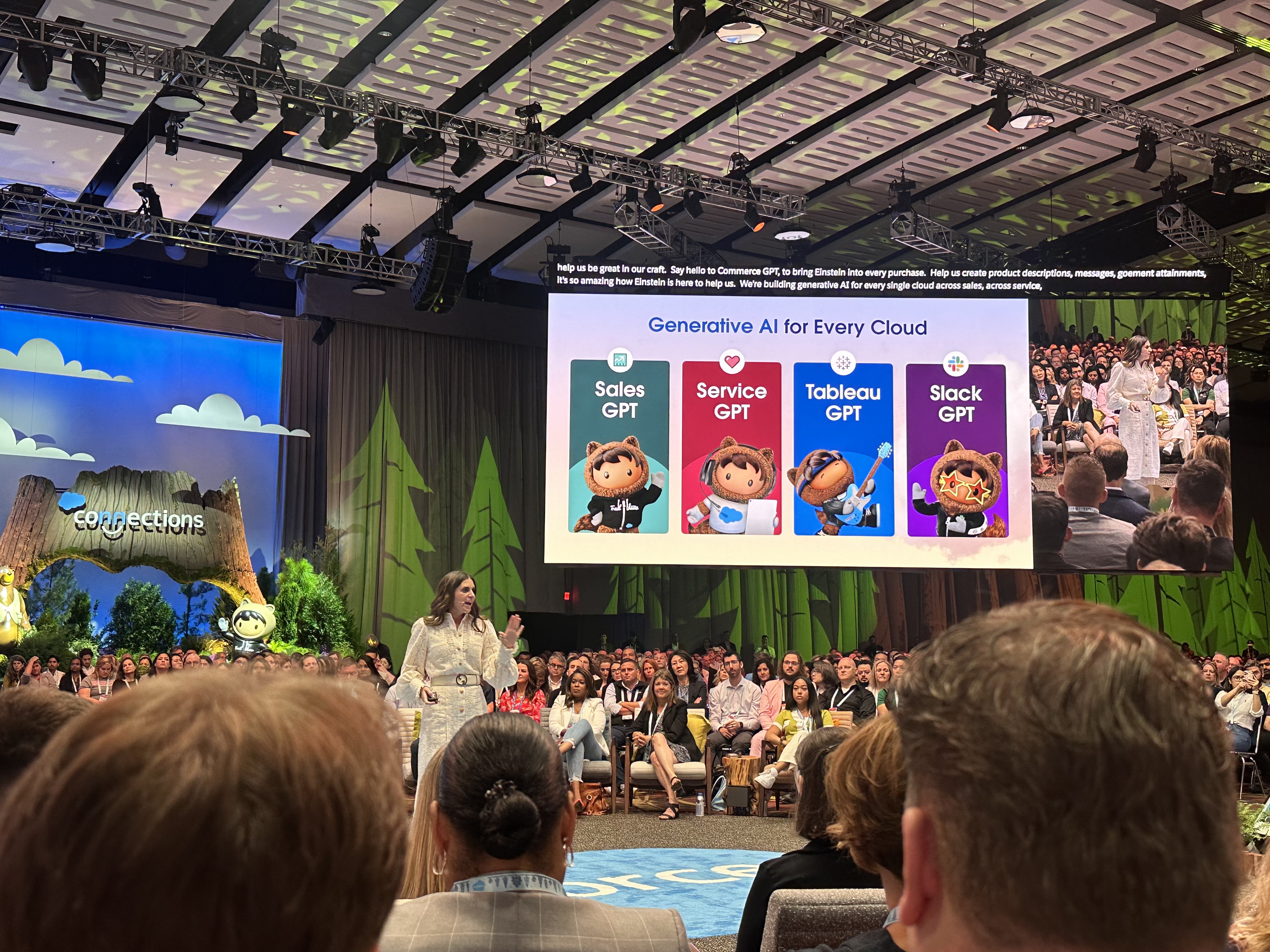

Yes…there were a TON of GPT-labeled announcements made at Salesforce Connections (prompting some of us in attendance to just add GPT to the end of every proper name available). But there was also a lot of excitement around the prospect of having a well-trained personal business assistant whispering just the right details into our ears at the exact moment we need them to make an amazing impression on customers and prospects. It is a welcome shift in narrative, from machines ready to replace marketers to a safe, purpose-built, enterprise-ready and well-trained AI that empowers and adds to a marketer’s success.

