Retailers hope business, digital transformation efforts pay off for holiday shopping 2023

With the holiday shopping season underway, retailers see uncertain consumer spending, better inventory positions, lower supply chain costs and customer experience investments they hope will deliver.

Adobe is forecasting US online holiday sales of $221.8 billion (Nov. 1 through Dec. 31) in 2023. Salesforce noted that 2023 is all about keeping loyal customers happy and focusing on the experience metrics that matter.

For our purposes, retail in 2023 matters because the industry is arguably the best lab for digital and business transformation, analytics, customer experience and a bevy of technologies.

Here's a tour of how retailers are setting up for the holidays.

Walmart

Walmart's results are the barometer for consumer health and digital transformation.

"In the U.S., we may be managing through a period of deflation in the months to come. And while that would put more unit pressure on us, we welcome it because it's better for our customers," said Walmart CEO Doug McMillon.

Those comments rattled the stock market. CFO John Rainey added that consumers are making trade-offs and weekly performance metrics were softening at the end of October.

Like other retailers, however, Walmart is focusing on what it can control across its omnichannel experience. McMillon said:

"We're making shopping easier and more convenient. Our net promoter scores for pickup and delivery in Walmart U.S. are improving and we've started using generative AI to improve our search and chat experience. We've released an improved beta version of search to some of our customers who are using our app on iOS. In the coming weeks and months, we will enhance this experience and roll it out to more customers."

Rainey said omnichannel services such as pickup and store-fulfilled delivery are driving growth. Walmart reported 24% e-commerce revenue growth in the third quarter and share gains among higher-income households.

On the digital front, McMillon said Walmart is looking to blend its core retail business with new services including membership, third-party marketplaces, and advertising. The combination of these businesses with automation in the supply chain should provide a good mix of operating margins, growth and predictability.

Retail experiences all start at the back end. To that end, Rainey said:

"During the quarter, we opened our third next-generation e-commerce fulfillment center. These 1.5 million square feet facilities are expected to more than double the storage capacity, enable 2X the number of customer orders fulfilled daily, and will expand next and two-day shipping to nearly 90% of the U.S. including marketplace items shipped by Walmart Fulfillment Services. They also unlock new opportunities for our associates to transition into higher skilled tech focused positions."

Macy's

Macy's CEO-elect Tony Spring said the company's customers at Macy's, Bloomingdale's and Bluemercury will continue "to be under pressure and discerning in how they spend in discretionary categories we offer." He said Macy's is ready to fulfill orders online, in store and through its gift guides.

Spring also argued that the company's department stores can pivot with content and merchandise that go where customers are headed.

On the digital front, Spring said that Macy's transformation is on track. The company is looking to run three distinct retailers with their own identities and a common data and technology approach. Spring said:

"We can learn from each other without becoming one another as we remove silos to optimize our collective customer insights.

"We are also balancing art and science. I like to say that this is STEAM, not STEM. We are embracing data science tools, including AI and machine learning, to drive more accurate and agile decision-making based on changes in demand. This, married with the art of human judgment, helps us become more proactive and customer influenced."

Spring said Macy's will leverage data to create better experiences and scale its growth vectors. The growth vectors include private brands, small format stores, a digital marketplace, a focus on luxury and personalized offers and communications.

Macy's CFO and COO Adrian Mitchell said the company's supply chain is working well and the retailer has a good inventory position that's down 6% from a year ago and down 17% from 2019 levels. The company is seeing lower freight expenses and a better merchandise mix to boost gross margins. Mitchell said Macy's has also improved delivery expenses due to reductions in packages per order and distance traveled.

Target

Target CEO Brian Cornell said, "consumers continue to rebalance their spending between goods and experiences and make tough choices in the face of persistent inflation."

Cornell added that Target is cautious about the near-term outlook but playing the long game by "investing in our stores, our supply chain, our team, our digital capabilities and our assortment."

Same-day services saw high-single-digit growth on a same-store basis. Cornell said customers are pressured by interest rates, loan payments and lower savings. With discretionary income down, consumers are making trade-offs and waiting for sales.

John Mulligan, Chief Operating Officer at Target, said the retailer has faced multiple challenges over the last 3.5 years including a lack of inventory, a demand boom, a downturn, inventory bloat and normalization.

Mulligan added that Target is cautious about its inventory position, but in a good place so far. Target has also benefited from lower supply chain costs. Mulligan said:

"We've seen improvements in metrics relating to backroom inventory accuracy and the percentage of new assortments set on time. In the digital channel, the percentage of orders picked and shipped on time and the average Drive-Up wait time have all improved from last year. Also, in support of the digital business, the percentage of items ordered but not found has declined from a year ago, meaning that we are fulfilling more items per order and canceling fewer, a key factor in guest satisfaction."

Target is also looking to improve its guest experience as measured by Net Promoter Scores in categories such as checkout, front-of-store interactions and digital services.

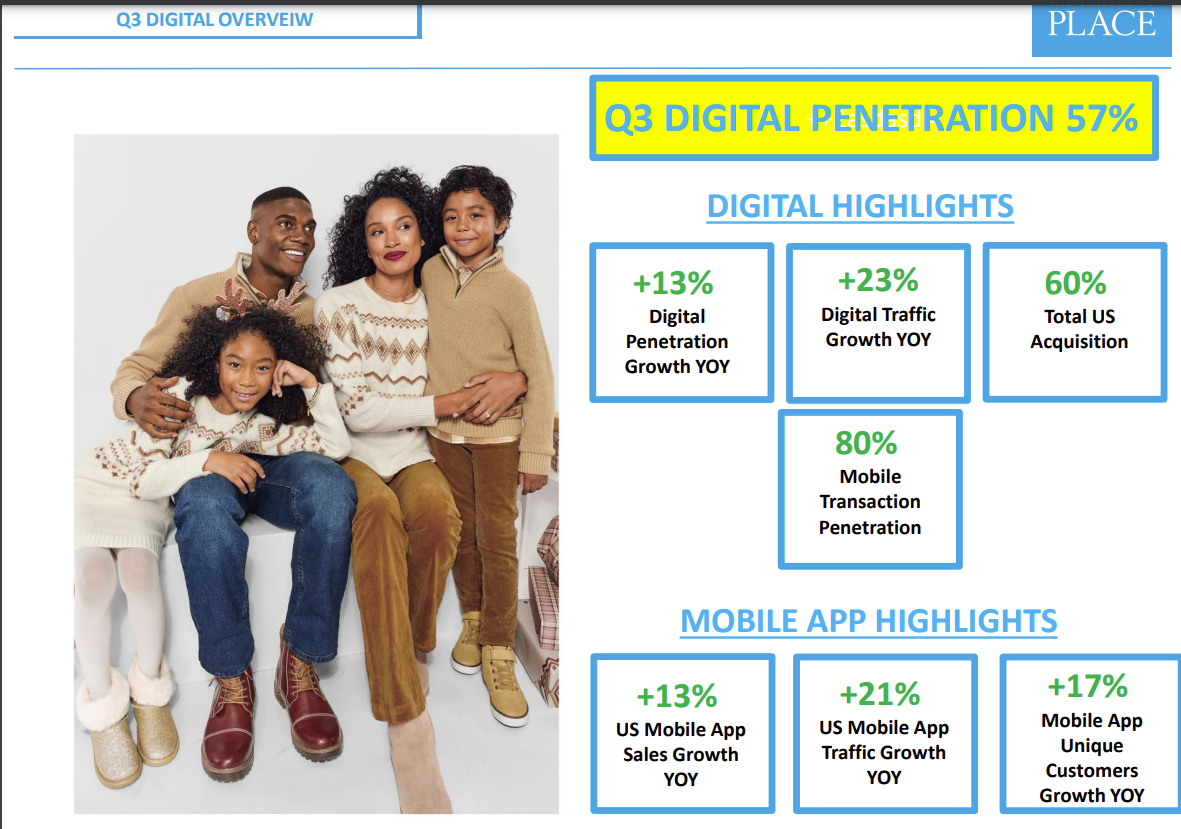

The Children's Place

Jane Elfers, CEO of The Children's Place, said the company managed inventory well in the third quarter but saw higher distribution and fulfillment costs as it pivots to more e-commerce.

The plan for The Children's Place is to hone its digital game given that's how Gen Z will buy when that generation has children.

Elfers said:

"Our digital channels are clearly where our current core millennial customer prefers to shop for her kids. And based on the data, digital is where our future Gen Z moms will overwhelmingly prefer to transact. Almost all new digital buyers will come from Gen Z. Gen Z digital buyers nationwide are expected to surge from 45 million today to over 61 million in 2027, only four short years away. The importance of the digitally native Gen Z demographic to our future business cannot be underestimated, and we remain laser-focused on ensuring that digital is at the core of everything we do."

The current generation of core customers for the retailer remains under pressure, but Elfers said The Children's Place is playing the long game with accelerated digital transformation and fleet optimization. The goal is to "operate the company with less resources, including less stores, less inventory, less people and less expense, allowing us to better service our customer online where she prefers to shop, resulting in what we believe will translate to more consistent and sustainable results over time," she added.

According to The Children's Place, the pivot to digital marketing, including a focus on social media presence, has paid off.

Gap Inc.

New Gap CEO Richard Dickson said the company is looking to strengthen its "operating platform" as it retools its core brands: Gap, Old Navy, Banana Republic and Athleta. He said:

"In some areas, we are in good shape, but we have more work to do. Our supply chain is a pillar of strength at Gap Inc., where our scale gives us unique cost leverage, but we need to accelerate innovation. Our financial strategy is driving early value, but we need to continue our focus on rigor and efficiency. In technology, we’ve made strategic investments and now it’s about optimizing those investments and driving adoption across the organization."

Dickson said the scale of Gap Inc. should be able to boost operating margins. Gap Inc. has four billion-dollar brands with 2,600 company-operated stores and 1.4 billion annual visits to the company's websites. The company also has 58 million active customers.

Williams Sonoma

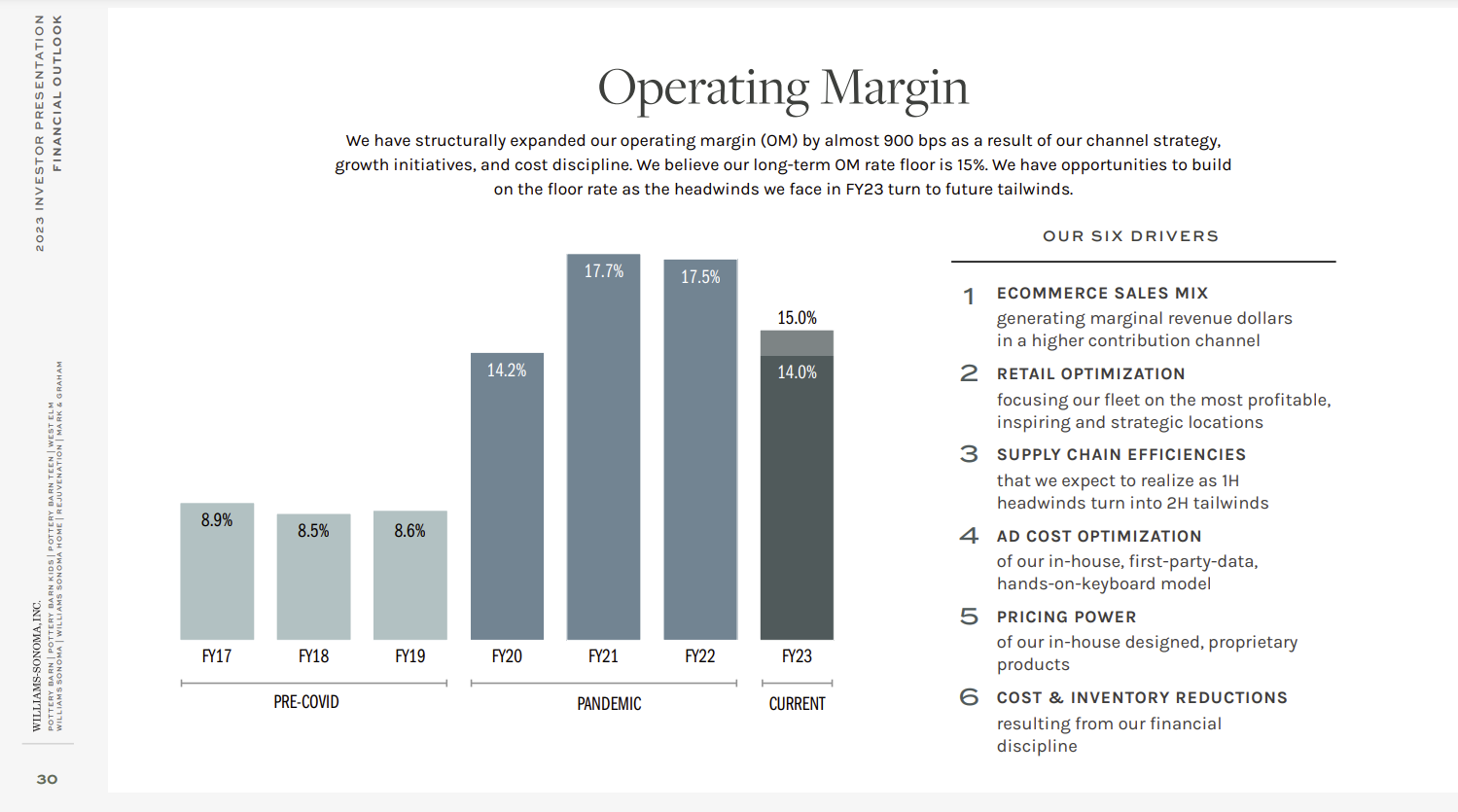

Williams Sonoma, led by CEO Laura Alber, delivered record third quarter operating margins of 17% as it benefited from customer experience improvements and lower supply chain costs.

The company projected fiscal year revenue to be down 10% to 12% but raised its operating margin outlook to 16% to 16.5%.

Alber acknowledged that consumer spending remains challenged, but a portfolio of brands has enabled the company to weather uncertainty even as same-store sales fall.

"Our in-house design capabilities and vertically integrated supply chain are also key in producing proprietary products at the best quality value relationship in the market," she said.

During the company's third quarter earnings call, Alber outlined the following strategies going into the holiday season.

- Introduce more new products at mid-tier and lower-tier price points without discounting.

- Sell through inventories with lower supply chain costs by reducing out-of-market and multiple shipments.

- Improve customer service. Alber noted that Williams Sonoma's customer service metrics have returned to pre-pandemic levels, as have on-time deliveries.

- Leverage investments in last-mile delivery to reduce customer accommodations, returns, damages and replacements.

- Continue to invest in digital experiences with content, tools for design projects and AI.

Dick's Sporting Goods

Dick's Sporting Goods CEO Lauren Hobart said the company is targeting omnichannel athletes and giving them a good digital experience.

Hobart said:

"In combination with our stores, our digital experience remains an integral part of our success, and the investments we are making in technology are strengthening our athletes’ omnichannel experience and driving increased engagement. This quarter, we added 1.6 million new athletes and are further growing our base of omnichannel athletes. Omni channel athletes make up the majority of our sales and they spend more and shop with us more frequently than single channel athletes."

Hobart also said that the sporting goods retailer is investing in data science and personalization to create one-to-one relationships with athletes.

The company said that its customers are holding up well as they prioritize a healthy and active lifestyle.

Best Buy

Best Buy CEO Corie Barry said "consumer demand has been even more uneven and difficult to predict" and the company lowered its fourth quarter revenue outlook. The technology retailer is focused on customer experiences, driving recurring revenue and offering new services.

"We continue to increase our paid membership base and now have 6.6 million members. This compares to 5.8 million at the start of the year," said Barry. My Best Buy Total is a $179.99-a-year service that includes 24/7 Geek Squad Service, AppleCare Plus and two years of product protection. My Best Buy Plus is a $49.99-a-year tier that includes access to new products, two-day shipping, a 60-day return and exchange window and exclusive pricing.

Barry said the company is looking to drive interactions too.

"We have also seen growth in sales from customers who are getting help from our virtual sales associates. These interactions, which can be via phone, chat or our virtual store, drive much higher conversion rates and average order values than our general dot.com levels. This quarter, we had 140,000 customer interactions by a video chat with associates, specifically out of our virtual store locations."

In addition, the company continues to invest in its multichannel fulfillment operations. "As a reminder, while almost one-third of our domestic sales are online, 43% of those sales were picked up in one of our stores by customers in Q3. And most customers shop us in multiple channels," said Barry.

Best Buy is also bolstering its supply chain network to optimize the company's ship-from-store-hub and shipping locations to deliver with speed. About 62% of e-commerce small packages were delivered to customers from automated distribution centers. Those operations are supplemented with a delivery partnership with DoorDash.
