How Baker Hughes used AI, LLMs for ESG materiality assessments

Baker Hughes' Marie Merle Caekebeke admits she was a bit skeptical about artificial intelligence, but she wanted a way to speed up environmental, social and governance (ESG) materiality assessments so her team could focus on the big picture and stakeholder needs at the energy technology company.

"I was actually quite pleasantly surprised," said Caekebeke, Sustainability Executive – Strategic Engagement, Baker Hughes. "I wanted the individuals on my team to take ownership of sustainability and to move the needle on progress. I felt that we could leverage a machine, but the decisions will be made by individuals."

Caekebeke, a 2023 SuperNova Award winner in the ESG category, started with a C3 AI pilot that parsed 3,500 stakeholder documents in nine weeks and trained natural language processing (NLP) models and large language models (LLMs) to identify and label paragraphs aligned to ESG topics, using more than 1,700 training labels. The project quickly went into production and saved 30,000 hours over the two-year cycle required to complete the ESG materiality assessment. Today, Baker Hughes' sustainability executives can be more proactive with stakeholders.

Baker Hughes is an energy technology company that specializes in oil field services and equipment and industrial energy technology. The company aims to be a sustainability pioneer that minimizes environmental impact and maximizes social benefits.

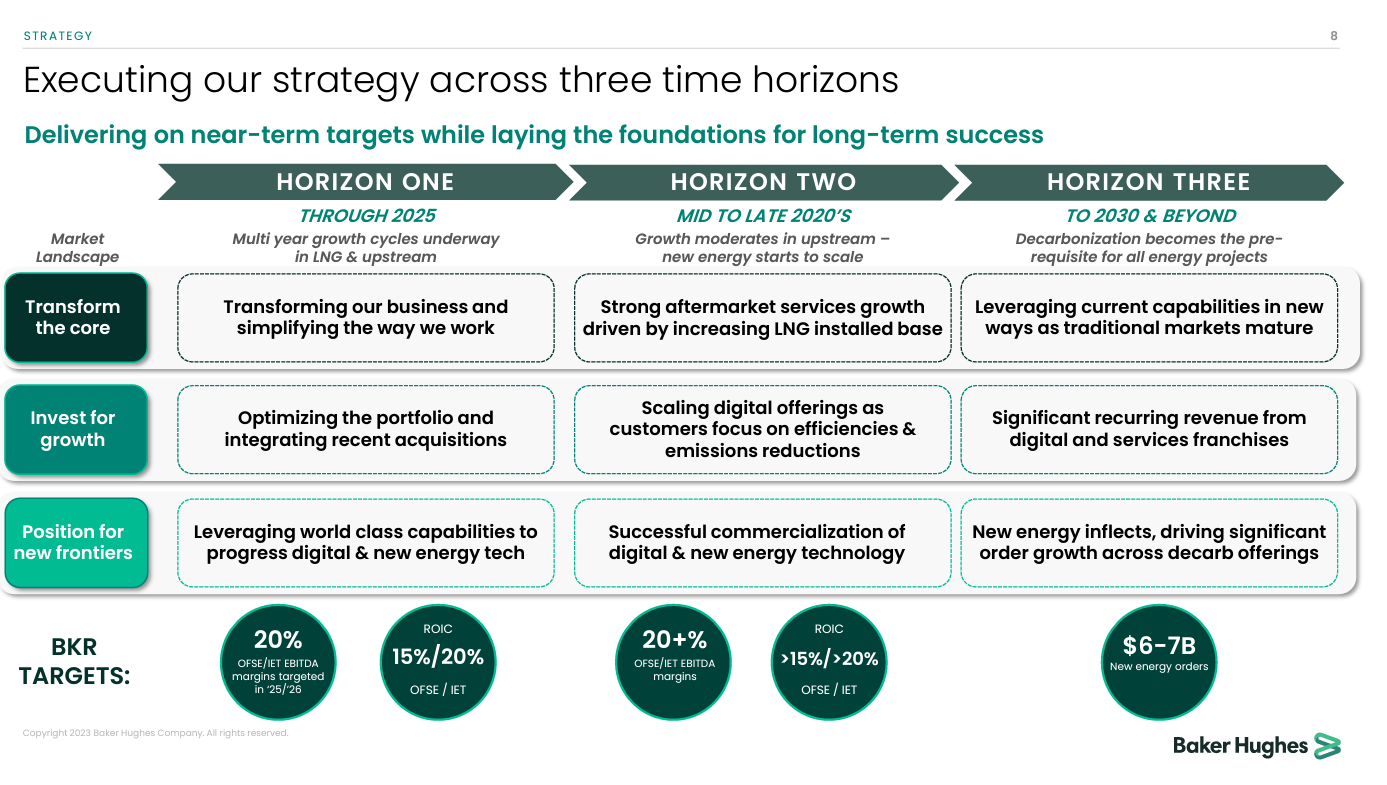

Speaking on Baker Hughes' third-quarter earnings conference call, CEO Lorenzo Simonelli said the company sees strong orders for natural gas markets and electric machinery. The company's plan revolves around delivering financial results while investing in the future, said Simonelli.

"We are focused on our strategic framework of transforming our core to strengthen our margin and returns profile, while also investing for growth and positioning for new frontiers in the energy transition," said Simonelli, who noted that the company is working through three time frames. In 2027, Baker Hughes expects to focus on investing to solidify its presence in new energy and industrial sectors, with an emphasis on decarbonization in 2030.

Simonelli added that Baker Hughes' execution over the coming years will position it to compete in carbon capture, usage and storage (CCUS), hydrogen, clean power and geothermal. "We expect decarbonization solutions to be a fundamental component, and in most cases, a prerequisite for energy projects, regardless of the end market. The need for smarter, more efficient energy solutions and emissions management will have firmly extended into the industrial sector," said Simonelli, who added that Baker Hughes will focus on industry-specific use cases.

Baker Hughes is projecting new energy orders will grow to $6 billion to $7 billion in 2030 from $600 million to $700 million in 2023.

With that backdrop, Baker Hughes' sustainability team has to keep tabs on emerging trends and topics across multiple sources and ultimately customize the insights for various stakeholders, said Caekebeke. In other words, materiality assessments for ESG will become more of a living document.

"The sustainability space is shifting so quickly that I wanted more strategic engagements with our stakeholders," she said. "We're always going to have customer conversations; we're always going to have investor conversations and speak to our employees as well. But I wanted something to supplement it and look at those topics that matter to our stakeholders, weigh information and make sense for our assessment."

The project

Baker Hughes publishes a biennial ESG materiality assessment that informs company strategy and serves as a listening exercise for internal and external stakeholders. Baker Hughes uses the assessment to align its strategic priorities and commercial strategy.

Caekebeke said the project started by weighting sources according to how trustworthy they are. For instance, filings with the Securities and Exchange Commission (SEC), sustainability reports and annual reports were weighted more heavily than sources like social media, where "everyone is a sustainability expert," she said. Reports from customers, competitors, investors and NGOs were also included.

In nine weeks, the data collection was complete, and Caekebeke's team then focused on stakeholder expectations by role and on the kinds of decisions that needed to be made. The project was less about automation than about priorities. "We have a strong sustainability team, and I had enough humans and employees," explained Caekebeke. "It wasn't about running out of sweat equity as much as it was wanting individuals on my team focusing on implementation and change rather than manual tasks."

Baker Hughes, a long-time C3 AI customer, already had a strong partnership with the vendor, along with systems and data in place. Caekebeke said C3 AI is a "progress partner" and a more strategic vendor. "We reached out to see what C3 AI had and then continued to build together a solution that would demonstrate the ROI and then create sound data to make decisions on," she added.

Previously, Baker Hughes manually collected interviews, surveys and documents on around 50 topics. For the pilot, Caekebeke's team started with a subset of those topics and a narrower list of stakeholders. Baker Hughes also collected employee insights and feedback from community resource groups within the company. Once KPIs, users and objectives were defined and the pilot proved the use case worked, the C3 AI application expanded to more topics, targeted the full list of stakeholders and went into production.

One critical project consideration was identifying topics and aligning them to roles. "I wanted a tool that would be nimble enough that if I wanted to run a report only on emissions, I could do that. If I wanted to run a report only on just transition and how environmental justice was playing a part, especially after a key event, I could do that too," said Caekebeke.

Using C3 AI as a platform, Baker Hughes was able to train LLMs to fill gaps in the ESG materiality process including:

- Parsing 3,500 stakeholder documents to produce more than 400,000 paragraphs.

- Training natural language processing (NLP) machine learning pipelines to identify paragraphs and label them according to ESG topics and training labels.

- Deploying a workflow to compute time series ESG materiality scores for source documents at the paragraph, document, stakeholder and stakeholder group levels.

- Configuring an interface to visually represent ESG scores, analysis, evidence packages and benchmarks.
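As a rough illustration of the scoring workflow in the bullets above, here is a minimal Python sketch. It is not C3 AI's implementation, which the article does not describe. It assumes a classifier has already assigned each paragraph a per-topic probability, and the source-type weights (higher for SEC filings and reports, lower for social media, echoing the weighting Caekebeke describes), the `Paragraph` fields and the weighted-mean scoring rule are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass, field

# Hypothetical source-type weights: trusted filings and reports count more
# than social media. The values are illustrative, not Baker Hughes' weights.
SOURCE_WEIGHTS = {
    "sec_filing": 1.0,
    "annual_report": 1.0,
    "sustainability_report": 1.0,
    "ngo_report": 0.7,
    "social_media": 0.2,
}
DEFAULT_WEIGHT = 0.5  # hypothetical fallback for unlisted source types


@dataclass
class Paragraph:
    doc_id: str
    stakeholder: str
    group: str        # stakeholder group, e.g. "investor" or "customer"
    source_type: str  # key into SOURCE_WEIGHTS
    period: str       # time bucket that makes the scores a time series
    topic_probs: dict[str, float] = field(default_factory=dict)  # classifier output


def materiality_scores(paragraphs, topic):
    """Source-weighted mean topic probability per (level, name, period).

    Levels are paragraph, document, stakeholder and stakeholder group.
    At the paragraph level the weight cancels, so the score is just the
    classifier probability for `topic` (0.0 if the topic was not labeled).
    """
    weighted_sums = defaultdict(float)
    weight_totals = defaultdict(float)
    for i, p in enumerate(paragraphs):
        score = p.topic_probs.get(topic, 0.0)
        w = SOURCE_WEIGHTS.get(p.source_type, DEFAULT_WEIGHT)
        for level, name in (
            ("paragraph", i),
            ("document", p.doc_id),
            ("stakeholder", p.stakeholder),
            ("group", p.group),
        ):
            key = (level, name, p.period)
            weighted_sums[key] += w * score
            weight_totals[key] += w
    return {key: weighted_sums[key] / weight_totals[key] for key in weight_totals}


# Usage: run an emissions-only report over three made-up paragraphs.
paras = [
    Paragraph("10-K", "InvestorA", "investor", "sec_filing", "2023", {"emissions": 0.9}),
    Paragraph("10-K", "InvestorA", "investor", "sec_filing", "2023", {"just_transition": 0.8}),
    Paragraph("post-1", "InvestorA", "investor", "social_media", "2023", {"emissions": 0.4}),
]
scores = materiality_scores(paras, "emissions")
print(round(scores[("stakeholder", "InvestorA", "2023")], 3))
```

Passing the topic as a parameter mirrors Caekebeke's wish to run a report on a single topic such as emissions; in this sketch the low weight on the social media post keeps it from pulling the investor's score far toward its own value.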

The returns boiled down to time. Analyzing the volume of content for the ESG materiality report would have taken a human 790 hours, while the C3 AI ESG application took less than an hour and was able to focus on the 10% of content that was relevant. Without AI, the manual process required nearly 30,000 hours and a two-year cycle time to complete the ESG assessment.

What's next

Caekebeke said the AI-driven ESG materiality process will enable Baker Hughes to keep better tabs on new topics, impacts of events, legislation and policy around the world. "We work in over 120 countries. We have 55,000 employees so we have a broad reach. And so, it is important to really look across the world at what's happening," she said.

Going forward, the plan is to use more AI to drive decisions faster with more data transparency. Caekebeke said using AI is also likely to curb unconscious bias in ESG materiality assessments.

"When we were looking at the way that we did it manually, you will have some stakeholders that answer the surveys and then ones that don't. If you make an analysis, you read through their information and then essentially translate that into what you think you're hearing. But there's that kind of unconscious bias that we all have as we're reading through it," said Caekebeke. "An engine doesn't really have that bias."

Caekebeke is also betting that the C3 AI ESG application will connect the dots between environmental impacts and social issues.

"Where communities are marginalized, they are feeling the deepest impact of climate change. Those areas are also where you have human rights violations and people that are not making a fair wage," said Caekebeke. "It's about looking at ESG holistically and leveraging AI to look at it so you could draw some parallels."

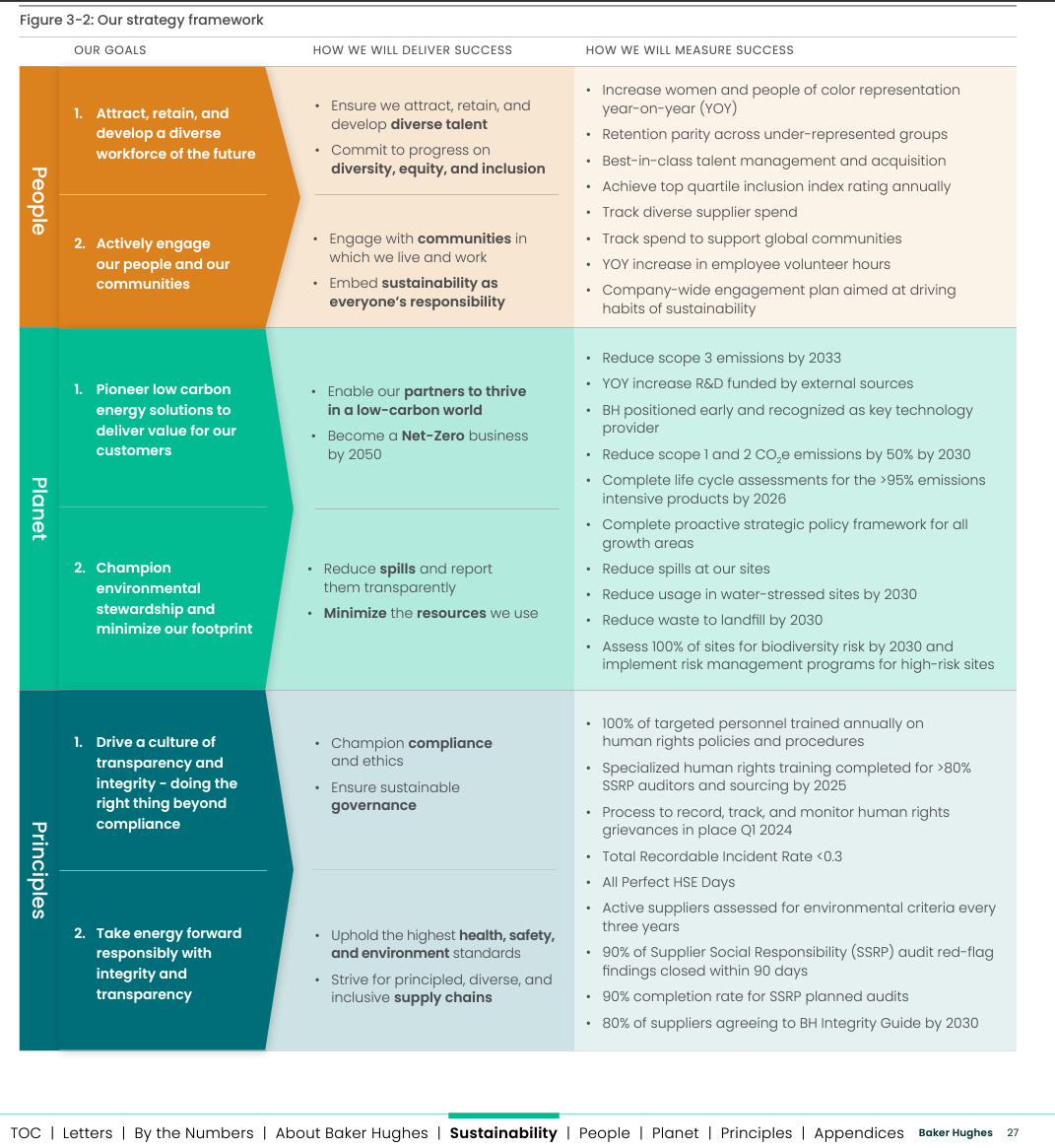

Baker Hughes released its sustainability framework in April and the goal is to use the lessons from the C3 AI tool to deploy the strategy across the organization. "Moving forward in 2024 is about making sure that the deployment of our sustainability strategy is well understood and that initiatives are pushed all the way to the deepest level," said Caekebeke. "My vision is that I want everyone to have that same focus on sustainability, understand the value and understand our environmental and social footprint. For 2024, it will be a deeper engagement with employees all from the top to the bottom, across regions where we work and also across the functions."

Lessons learned

Caekebeke said the project surfaced a few lessons learned about the intersection of ESG and AI. Here's the breakdown.

Get high-level support from executives. Baker Hughes leadership supported the effort, and that helped overcome concerns about using AI. "There's a lot of skepticism around AI. Some people love it. Some people are nervous. There should be a bit of both," she said.

Governance is critical. Caekebeke said governance should be laid out in advance of pilots and deployment.

Have a strong partner. Caekebeke said that C3 AI worked closely with her team to customize the application and produce something that works with transparency. Training models requires collaboration and back-and-forth between customer and vendor teams.

Time is a core metric. "We are mindful of the fact that as sustainability requirements are increasing, people have less time," she said.

Start small. There are so many metrics to follow in ESG, but it's critical to narrow them down to the ones that are risks to your enterprise. "It's easier actually to build that up than to go the other way around. A lot of the times we want to please every stakeholder you know, and it's important to listen, but then you have to prioritize," said Caekebeke.

Efficiency and optimization are also sustainability. Internal stakeholders need to realize that "when you make something efficient, you're also making it more sustainable," said Caekebeke.

Keep iterating. "I was an AI skeptic. And I was really surprised to see the efficiency of the tool to the point where we're now in production phase, and we're working on the next iteration," said Caekebeke. "Pick the three or four things you want to do this year and then the next phase, so you have measurable projects from year to year. Just incremental steps in the right direction will really help the company move forward."
