Tableau Conference 2017: What’s New, What’s Coming, What’s Missing

Tableau unveils high-scale Hyper engine, previews self-service data-prep and ‘smart’ capabilities. Here’s the cloud agenda for next year's event.

Tableau Software is the Apple of the analytics market, with a huge fan base and enthusiastic customers who are willing to stand in long lines for a glimpse at what’s next. Last week’s Tableau Conference in Las Vegas proved that once again with record attendance of more than 14,000.

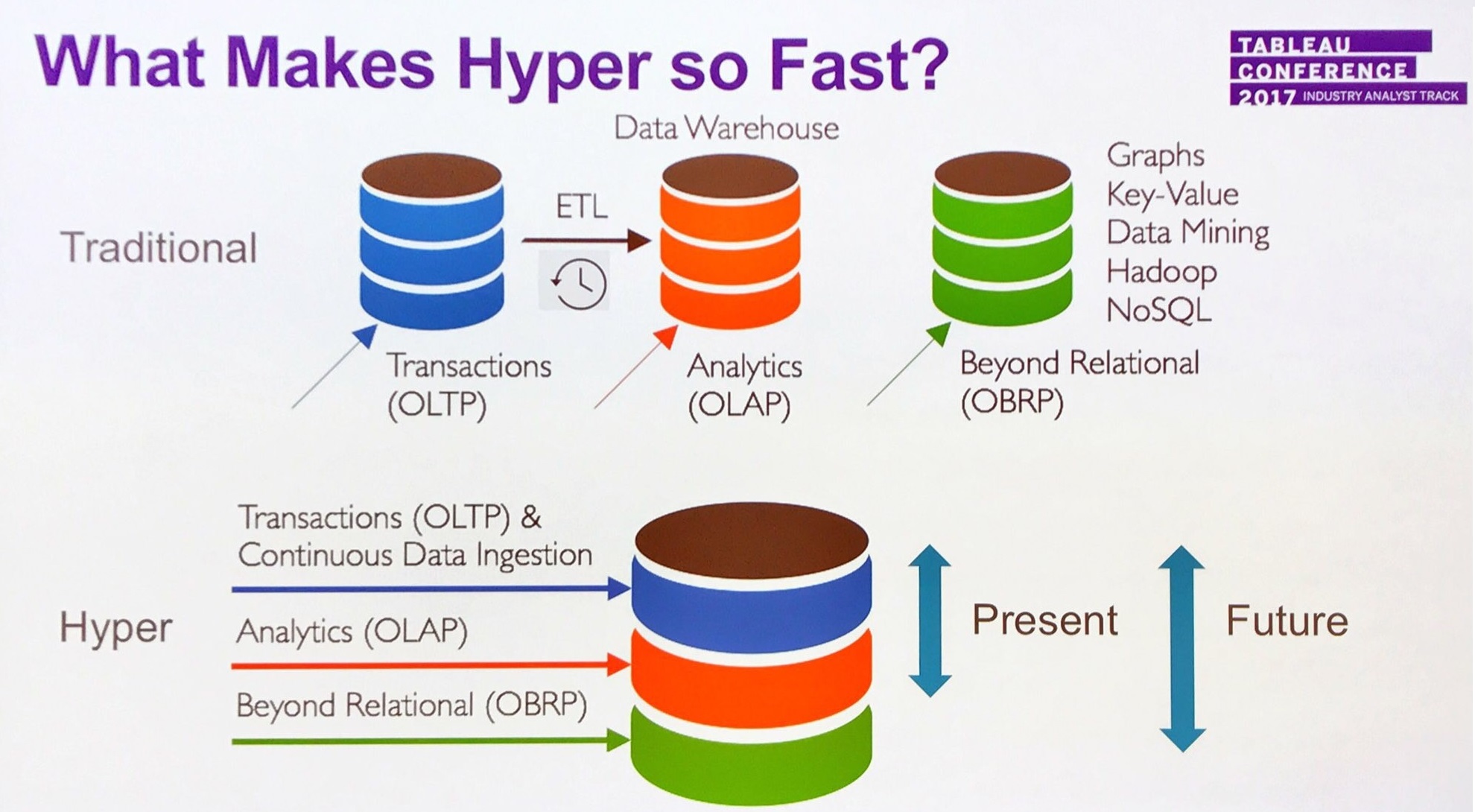

The Tableau fan boys and fan girls were not disappointed, as the company detailed plenty of new capabilities. The highly anticipated Hyper engine, for example, is now in beta release 10.5 and is sure to be generally available by early next year. Hyper solves Tableau performance problems when dealing with high-scale data extracts. The columnar, in-memory technology speeds the creation of data extracts, makes it possible to deal with larger-scale extracts and better supports scalability for enterprise-scale deployments.

Also in 10.5 are a slew of upgrades including nested projects, for more granular administrative control, mapping and Web authoring improvements, and a “Viz in Tooltip” feature that provides deeper, sparkline visualizations when you hover over a data point. A new Extensions API will enable developers to bring third-party application functionality into Tableau. For example, natural-language interpretations from Automated Insights can be embedded into Tableau Dashboards to help explain the data visualizations. Or users looking for data sources to explore could be exposed to suggestions from Alation, the third-party data catalog.

For now Tableau's new Hyper engine meets scale and performance demands tied to handling structured data extracts. In future it will address NoSQL and graph workloads.

A bit farther over the horizon, Tableau offered a preview of its Project Maestro self-service data-prep option. Tableau executives said there would still be a place for the deeper self-service data-prep functionality offered by partners, but it looks like Maestro will deliver intuitive, visual tools that will enable many business users to combine, clean and transform data. (Thus, data-prep partners like Alteryx and Trifacta are moving to provide more advanced capabilities, such as prediction and machine learning.)

Maestro is expected to be in beta release by year end. General availability typically follows beta release within a quarter, but Tableau execs weren’t ready to discuss packaging or pricing of what will be an optional module that’s integrated with, but separate from, Tableau Desktop and Tableau Server.

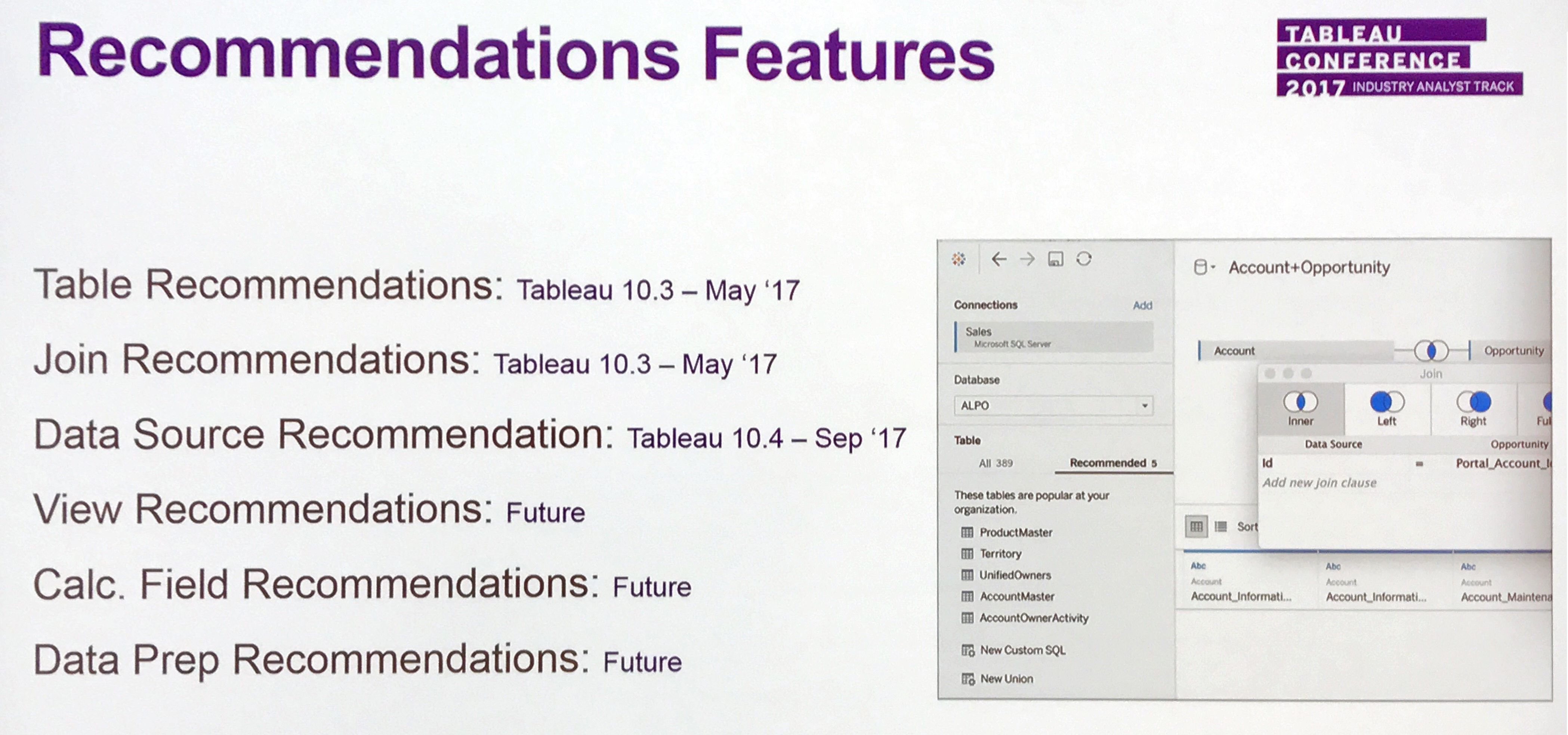

Even farther over the horizon, Tableau outlined plans for more “smart” capabilities powered by machine learning and natural language query. Tableau already recommends data sources based on historical behavior by user, group, role and access privileges, but more discovery and analysis recommendations are in the works. Having recently acquired ClearGraph, Tableau is also working on natural language query capabilities that will enable users to have more of a dialogue with the software. Using a technique called query pragmatics, ClearGraph’s technology can retain the context of a previous query to drill down to deeper insight. The queries can be typed in or, with third-party voice-to-text capabilities, spoken into mobile devices.

Tableau plans to extend machine-learning-powered recommendations from data sources into the areas of suggested visualizations and suggested data-prep steps.

What Tableau didn’t talk about so much were next steps for cloud deployment. Yes, the 10.5 release introduced Linux support for Tableau Server, which is clearly a boon for cloud deployment, where Linux is usually the default operating system. The release also made strides in Tableau’s long-running goal to bring functional parity between Tableau Desktop and the Tableau Web client. Finally, Tableau has also made strides in supporting deployments on Microsoft Azure. But we didn’t hear much at all about what’s coming in the next generation of Tableau Online, which is the vendor’s software-as-a-service offering of Tableau Server. Big enterprise customers more typically deploy Tableau Server themselves on AWS, Azure or the Google Cloud Platform, but here, too, there wasn’t much said about making such cloud deployments easier.

My Take on Tableau Conference 2017

The Tableau Conference grew yet again this year, surpassing the scale of most conferences I attend – and certainly any analytics vendor conference. That’s a testament to the level of customer enthusiasm, which was once again palpable. The love of the product grows from the grassroots level, with Tableau Desktop (as well as the mobile and web clients), but the company was careful to put huge, enterprise-scale deployments at the forefront of this year’s event. In the opening keynote, for example, Sherri Benzelock, VP of Business Analytics at Honeywell, explained how the company achieved a “balance between data access, trust and governance” in a “viral” deployment that reached 20,000 employees within two years.

Another take on creating a well-governed but democratized self-service experience was shared by Steven Hittle and Jason Mack of JP Morgan Chase. Hittle, VP of BI Innovation and a member of the IT team, said Tableau has enabled people to “build in hours what took weeks or months in other tools.” That flexibility has led to broad adoption since 2011, with more than 20,000 users across JP Morgan Chase. It was Hittle’s job to convince risk officers that they could trust people with direct access to data. Governance is largely achieved at the data layer, with robust permissions and access controls. Another safeguard is monitoring all data extracts to enforce the rule that no personally identifiable information is brought into Tableau Server.
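To give a sense of what extract monitoring of this kind might involve, here is a minimal Python sketch. It is not JP Morgan Chase's process and it does not use any Tableau API; the pattern list, the `flag_pii_columns` function and the sample data are all hypothetical, and a real deployment would rely on its own data-classification rules.

```python
import re

# Hypothetical column-name hints; a real rule set would be far more thorough.
PII_NAME_HINTS = ("ssn", "email", "phone", "birth")

# Hypothetical value check: strings shaped like a US Social Security number.
SSN_VALUE = re.compile(r"^\d{3}-\d{2}-\d{4}$")


def flag_pii_columns(columns, sample_rows):
    """Return the sorted names of columns that look like they hold PII.

    A column is flagged if its name contains one of the hints above, or if
    any sampled string value in it matches the SSN pattern.
    """
    flagged = set()
    for col in columns:
        name = col.lower()
        if any(hint in name for hint in PII_NAME_HINTS):
            flagged.add(col)
    for row in sample_rows:
        for col, value in row.items():
            if isinstance(value, str) and SSN_VALUE.match(value):
                flagged.add(col)
    return sorted(flagged)


if __name__ == "__main__":
    columns = ["region", "customer_email", "notes"]
    rows = [{"region": "West", "customer_email": "n/a", "notes": "123-45-6789"}]
    print(flag_pii_columns(columns, rows))
```

A check like this would only catch the obvious cases; the point is that the rule is enforced by inspecting extracts rather than by trusting authors to remember it.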

Tableau is deployed on premises at JP Morgan Chase, with 15 Tableau Server instances around the world, but Hittle’s advice to fellow enterprise-scale customers speaks to what should be next on the company’s cloud agenda. For starters, managing Tableau Servers is not dead easy, and as deployments scale up, cost-management becomes a concern. It helps that Tableau has introduced subscription-based pricing over the last year, which JP Morgan Chase and many other customers have adopted, but the banking giant has done a lot of work to mine performance and usage statistics and to come up with a shared allocation module – by users as well as by CPU, disk, and network utilization – to guide judicious purchasing of Tableau Server capacity. Hittle said that JP Morgan Chase has also had to work on automating server migrations and version control.
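To make the shared-allocation idea concrete, here is a minimal Python sketch of splitting a server bill across teams by a weighted blend of user counts and CPU, disk and network utilization. This is an illustration under my own assumptions, not the bank's actual method: the `TeamUsage` fields, the equal default weights and the `allocate_cost` function are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TeamUsage:
    users: int
    cpu_hours: float
    disk_gb: float
    network_gb: float


# Assumed weighting: each metric counts equally toward a team's share.
DEFAULT_WEIGHTS = {"users": 0.25, "cpu_hours": 0.25, "disk_gb": 0.25, "network_gb": 0.25}


def allocate_cost(total_cost, usage_by_team, weights=None):
    """Split total_cost across teams in proportion to weighted usage shares."""
    weights = weights or DEFAULT_WEIGHTS
    # Total of each metric across all teams.
    totals = {m: sum(getattr(u, m) for u in usage_by_team.values()) for m in weights}
    # Ignore metrics nobody used, then renormalize the remaining weights.
    active = {m: w for m, w in weights.items() if totals[m] > 0}
    weight_sum = sum(active.values())
    if weight_sum == 0:
        raise ValueError("no usage recorded for any weighted metric")
    allocation = {}
    for team, usage in usage_by_team.items():
        share = sum(
            (w / weight_sum) * (getattr(usage, m) / totals[m])
            for m, w in active.items()
        )
        allocation[team] = total_cost * share
    return allocation


if __name__ == "__main__":
    usage = {
        "risk": TeamUsage(users=30, cpu_hours=100, disk_gb=50, network_gb=10),
        "retail": TeamUsage(users=10, cpu_hours=300, disk_gb=50, network_gb=30),
    }
    for team, cost in allocate_cost(1000.0, usage).items():
        print(team, round(cost, 2))
```

Blending headcount with resource metrics means a small team running heavy workloads pays more than its user count alone would suggest, which is presumably the kind of signal that guides capacity purchasing.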

The buzz from big cloud providers, meanwhile, is all about serverless computing, automation and “autonomous” capabilities, as discussed at the recent Oracle Open World event. Tableau obviously has to learn how to make the most of automation through its own Tableau Online cloud service, but that SaaS offering is not suitable for every customer. At the Google Next event this spring, for example, an executive from a major university told me that he couldn’t use Tableau Online because it’s not HIPAA compliant. The university uses Google BigQuery and other managed services wherever possible, but it has to run Tableau Server on virtual machines on Google Cloud Platform infrastructure, which he described as “the most expensive and complicated thing that we do.”

I came away from the Tableau Conference impressed by the company’s growth, its many new features and by current and planned smart capabilities powered by machine learning and natural language processing. But the bar continues to rise on cloud expectations. At Tableau Conference 2018 I hope to hear about the maturation of Tableau Online for enterprise needs. And for those who choose to deploy Tableau Server, whether on public cloud infrastructure or on premises, I hope to hear more about automation options and streamlined deployment and management capabilities geared to a hybrid, multi-cloud world.

Related Reading:

Oracle Open World 2017: 9 Announcements to Follow From Autonomous to AI

Microsoft Stresses Choice, From SQL Server 2017 to Azure Machine Learning

Qlik Plots Course to Big Data, Cloud and 'AI' Innovation