Snowflake launches Arctic LLM, to release details under Apache 2.0 license

Snowflake launched Arctic, an open-source large language model (LLM) optimized for enterprise workloads and efficiency. The move highlights how data platform vendors are increasingly launching their own LLMs to pair with their platforms.

For Snowflake, Arctic is also among the first launches under new CEO Sridhar Ramaswamy's tenure. Arctic will be part of a larger LLM family built by Snowflake.

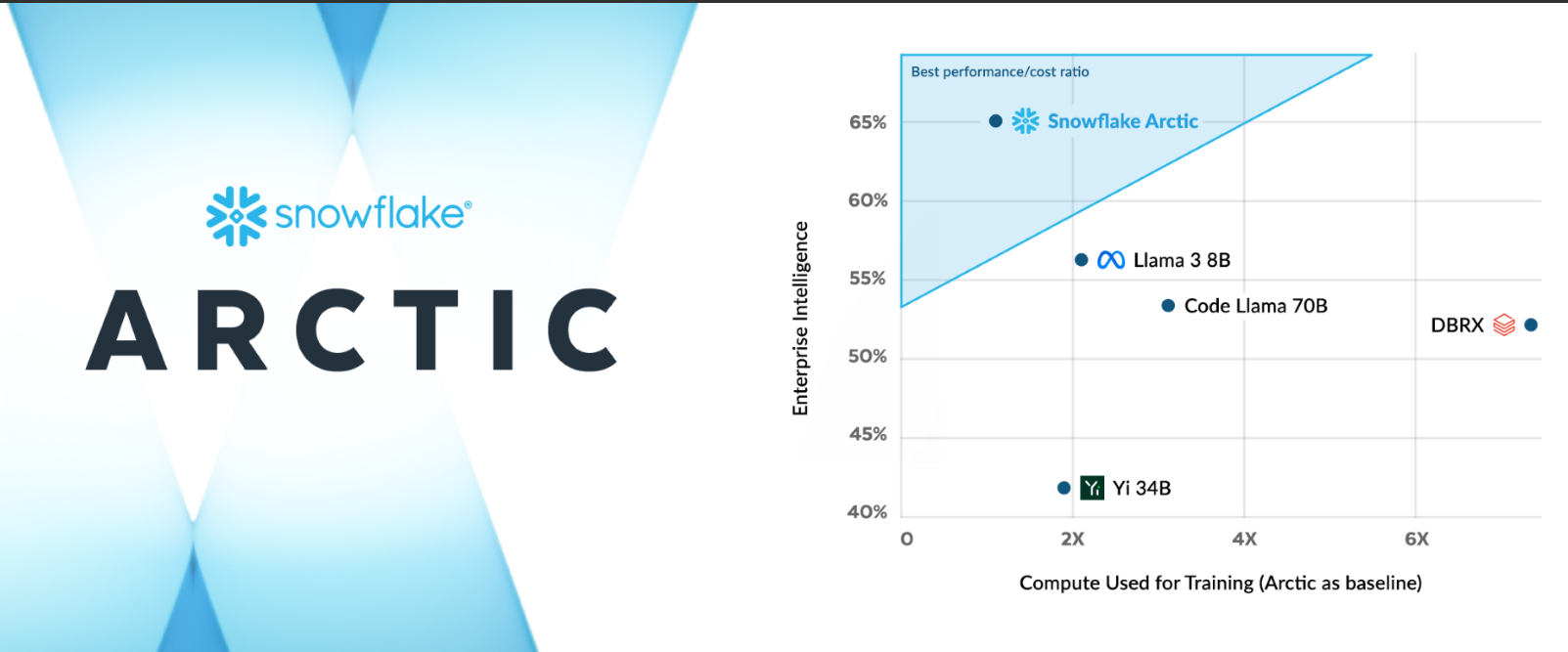

The Arctic launch lands shortly after Databricks launched DBRX, an LLM that has been well received. For good measure, Meta launched its Llama 3 LLM last week. Ramaswamy said Arctic represents a "watershed moment for Snowflake" and highlights what open-source AI can do. His mission is to speed up Snowflake's product cycles and innovation.

- Snowflake CEO Ramaswamy on product cycles, AI, scaling

- Snowflake CFO Scarpelli: Don't pigeonhole new CEO Ramaswamy as technologist

- Snowflake's Slootman steps down as CEO, technologist Ramaswamy takes over

Snowflake said it will release Arctic's weights under an Apache 2.0 license and detail how the LLM was trained. Snowflake is pitching Arctic as an LLM that can balance intelligence and compute. Snowflake's plan is clear: Scale Arctic usage to the 9,400 companies on its data platform. These companies will then consume more of Snowflake's platform.

Key points to note about Snowflake Arctic:

- Snowflake is ensuring it has open-source credibility. Snowflake said it will provide code templates, flexible inference and training options and the ability to customize Arctic via multiple frameworks.

- Frameworks for Arctic will include Nvidia NIM with Nvidia TensorRT-LLM, vLLM, and Hugging Face.

- Arctic will be available for serverless inference in Snowflake Cortex, which offers machine learning and AI in the Data Cloud along with model gardens and catalogs from Nvidia, Hugging Face, Lamini, Microsoft Azure and Together.

- Snowflake said Arctic's mixture of experts (MoE) architecture is designed to activate 17 billion of its 480 billion parameters at a time for token efficiency. Snowflake claims it activates roughly 50% fewer parameters than DBRX.
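The MoE figures above are easier to judge as ratios. The following is a rough back-of-the-envelope sketch in Python, not anything Snowflake published. The Arctic numbers come from the article; the DBRX active-parameter count of 36 billion is an assumption taken from Databricks' public DBRX announcement and does not appear in this article. The function and constant names are purely illustrative.

```python
def active_fraction(active_billions: float, total_billions: float) -> float:
    """Share of a model's parameters that are active for any one token."""
    return active_billions / total_billions


# Figures cited in the article for Arctic (in billions of parameters).
ARCTIC_ACTIVE_B = 17
ARCTIC_TOTAL_B = 480

# Assumption: DBRX active parameters per Databricks' public announcement,
# not a figure stated in this article.
DBRX_ACTIVE_B = 36

arctic_share = active_fraction(ARCTIC_ACTIVE_B, ARCTIC_TOTAL_B)
reduction_vs_dbrx = 1 - ARCTIC_ACTIVE_B / DBRX_ACTIVE_B

print(f"Arctic active share: {arctic_share:.1%}")               # 3.5%
print(f"Fewer active params than DBRX: {reduction_vs_dbrx:.0%}")  # 53%
```

Under that assumption, only about 3.5% of Arctic's parameters are active per token, and the gap to DBRX works out to a little over half, which is consistent with the "roughly 50% fewer" claim.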

Although Snowflake launched Arctic, the company said it will still give access to multiple LLMs in its Data Cloud.

Constellation Research's take

Constellation Research analyst Doug Henschen said:

"It's good to see Snowflake moving quickly, under new CEO Sridhar Ramaswamy, to catch up in the GenAI race. Snowflake rival Databricks started introducing LLMs last year with its release of Dolly and it followed up this March with the release of DBRX, an open-source model that seems to be getting lots of traction. Snowflake is clearly responding to the competitive threat, given the press release's comparisons between the new Arctic LLM and DBRX. I'd like to know more about the breadth of intended use cases. Snowflake says Arctic outperforms DBRX, Llama 2 70B and Mixtral-8x7B on coding and SQL generation while providing 'leading performance' on general language understanding. I'd like to see independent tests, but the breadth of customer adoption will be the ultimate gauge of success. It's important to note that Snowflake Cortex, the vendor's platform for AI, ML and GenAI development, is still in preview at this point. As a customer I would want to look beyond the performance claims and know more about vendor indemnification and risks when using LLMs in conjunction with RAG techniques."

Constellation Research analyst Andy Thurai said:

The massive war between open-source and closed-source LLMs is heating up with multiple competitors. Massive LLMs are available for free and allow enterprise users to fine-tune the models with their enterprise data. On that note, a few items from this release are notable:

- This is licensed under Apache 2.0 which permits ungated personal, research, and commercial use. This is different than many other open-source LLM providers such as Meta's Llama series, which allows use for personal and research purposes with limitations on commercial use.

- Databricks, which is gaining market share and momentum fast, had a massive leg up with its acquisition of MosaicML in knowledge, skilled resources, stockpile of GPUs, and expertise to train massive LLMs. Every big cloud and data vendor is going after this market by announcing their own variations including Google, AWS, Databricks, Microsoft, IBM, Anthropic, Cohere, Salesforce, Twitter/X, and now Snowflake.

- Snowflake aims to make this process easier by providing code templates and flexible inference and training options to deploy in existing AI and ML frameworks.

- Snowflake provides options for serverless inference, which could help with expanding massively distributed inference networks to operate on demand.

- The company is trying to go after two specific markets--search and code generation.

- Snowflake has an advantage over other LLM providers from a data lake standpoint. If Snowflake can convince users to keep the data in their data lakes to train their custom models, or to fine-tune models or use RAG with that data, it can compete easily. That said, Snowflake is late, as many enterprises are already experimenting with many LLM providers. By providing hosting options for many other open-source LLMs in Cortex alongside Arctic, Snowflake is hoping to catch up.