Databricks launches DBRX LLM for easier enterprise customization

Databricks launched DBRX, an open-source large language model that aims to enable enterprises to customize models with their own data.

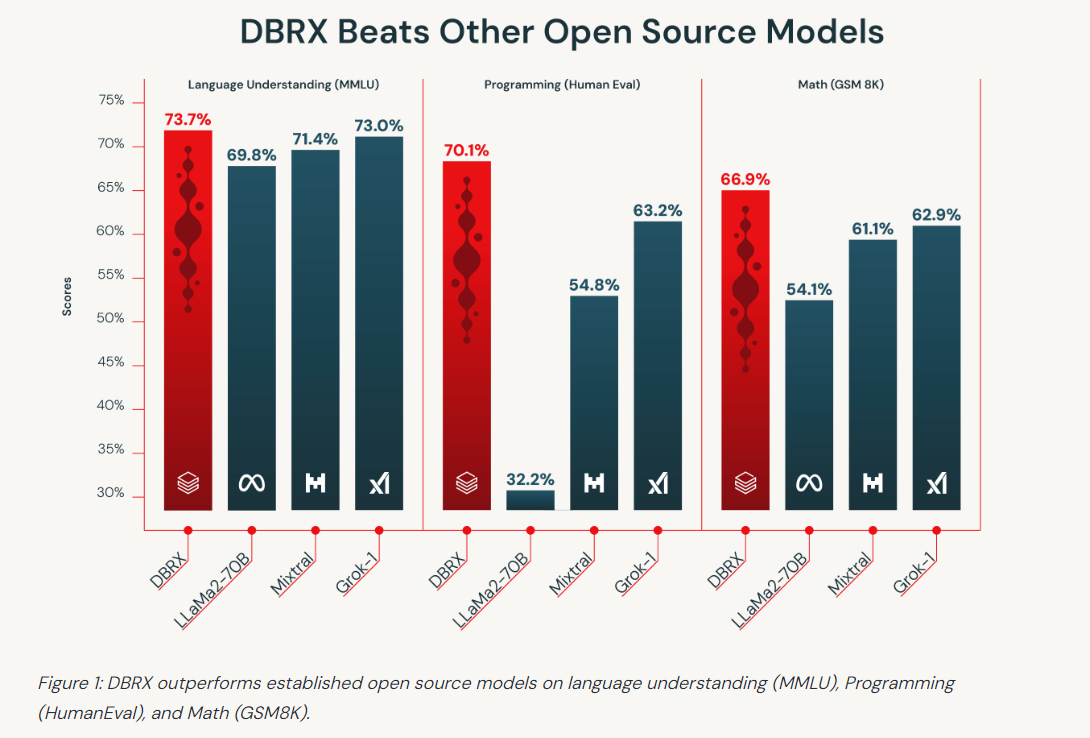

DBRX, available on GitHub, Hugging Face, Databricks and cloud providers, is a general-purpose model that outperforms other open-source models on standard benchmarks. For Databricks, which acquired MosaicML, the DBRX LLM rides shotgun with its popular data platform. DBRX was developed by the Mosaic team that previously built the MPT model family.

Ali Ghodsi, CEO of Databricks, said DBRX is designed to help enterprises "understand and use their private data to build their own AI systems." Using private data to tailor LLMs securely has been a recurring theme in recent months from multiple vendors. The most cost-effective way to customize models is to use smaller language models and leverage open-source models.

Nvidia GTC highlighted how the software building blocks for generative AI are in place. The company launched Blackwell GPUs, but Nvidia Inference Microservices (NIMs) will ultimately be just as important. NIMs are pre-trained AI models packaged and optimized to run across the CUDA installed base.

Doug Henschen, analyst at Constellation Research, said the Databricks move with DBRX fills a need to enable enterprises to customize open-source models. Henschen said:

"With its DBRX launch, Databricks is a step ahead of rivals in helping customers to use their private data to build their own AI systems. Yes, having another open LLM to choose from is great, but the point is accelerating the move to custom models built on the customer’s own data."

As for the technical details, here are some key points about DBRX:

- DBRX was trained on 3072 NVIDIA H100s connected by 3.2Tbps InfiniBand. Databricks built DBRX with the same suite of tools its customers use.

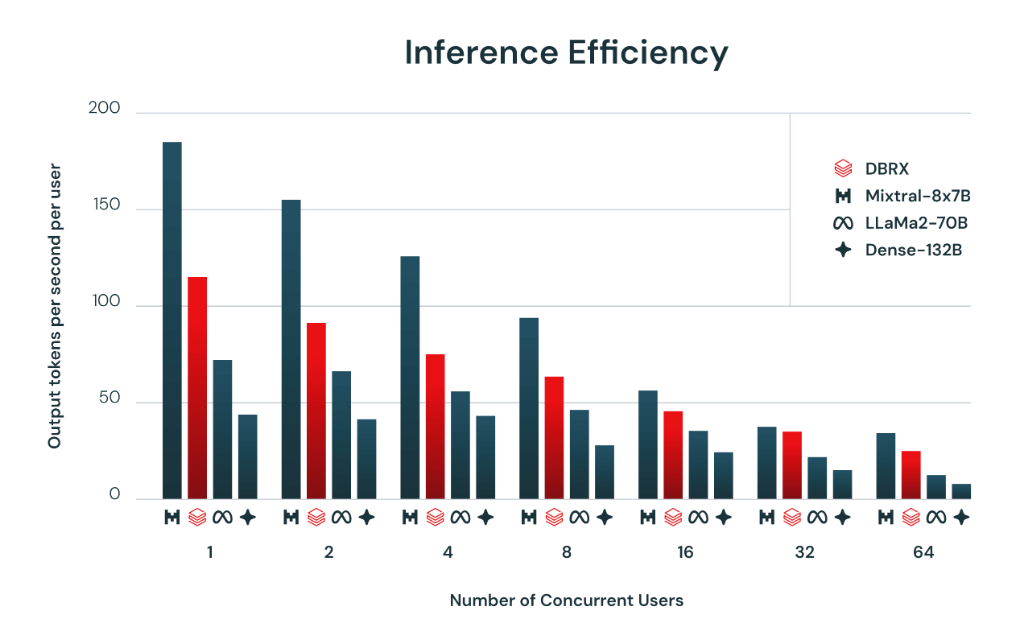

- The LLM uses a mixture-of-experts architecture, which is cost-effective and efficient in terms of tokens per second. That makes DBRX efficient for inference tasks.

- DBRX is a transformer-based, decoder-only LLM with 132B total parameters, of which 36B are active on any input.

- DBRX was pretrained on 12T tokens.

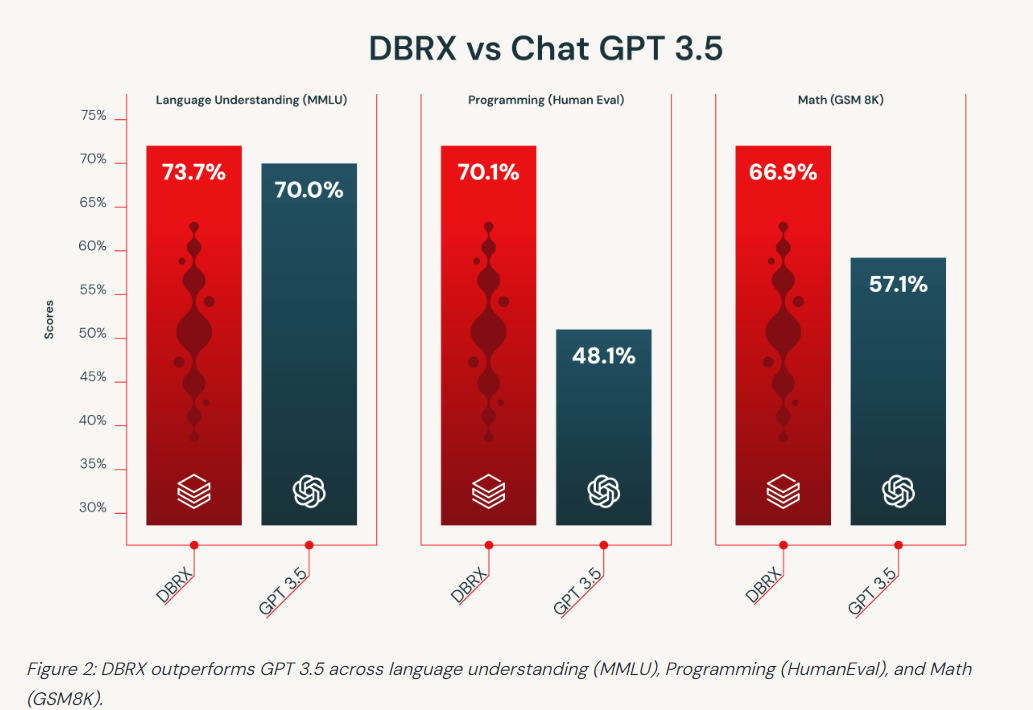

- DBRX is being integrated into Databricks' GenAI products and has surpassed GPT-3.5 Turbo in applications like SQL and retrieval-augmented generation (RAG) tasks.

- Early customers and partners include Accenture, Allen Institute for AI, Block, Nasdaq and Zoom.
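
To make the mixture-of-experts point concrete, here is a minimal Python sketch of top-k expert routing, the general idea behind having only part of a model active for any given input. It is an illustration under stated assumptions, not Databricks' implementation: the function names (`route_top_k`, `moe_forward`, `active_fraction`), the toy scalar "experts" and the choice of four experts with two selected are all hypothetical. Only the 132B and 36B parameter counts come from the figures above.

```python
import math


def softmax(scores):
    """Turn raw router scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(router_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights.

    Returns a list of (expert_index, weight) pairs, best expert first.
    """
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(x, experts, router_scores, k):
    """Run only the selected experts on x and blend their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_scores, k))


def active_fraction(total_params_b, active_params_b):
    """Share of parameters used per input (both counts in billions)."""
    return active_params_b / total_params_b


if __name__ == "__main__":
    # Hypothetical toy experts: each one just scales its input.
    experts = [lambda x, s=s: s * x for s in range(4)]
    scores = [0.1, 2.0, -1.0, 1.5]  # made-up router scores for one token

    print(route_top_k(scores, k=2))
    print(moe_forward(1.0, experts, scores, k=2))
    # Parameter counts reported for DBRX: 132B total, 36B active.
    print(f"{active_fraction(132, 36):.1%} of parameters active per input")
```

Because the unselected experts are never evaluated, compute per token tracks the active parameter count rather than the total, which is why a model of this kind can be cheaper to serve than a dense model of the same overall size.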

Databricks Platform customers can leverage DBRX for RAG and to build custom models. DBRX is also available on AWS and Google Cloud, and on Microsoft Azure via Azure Databricks. DBRX will also be available through Nvidia's API Catalog and supported on Nvidia's NIM inference microservice.