Meta launches Llama 3, wide availability with more versions on deck

Meta launched its Llama 3 open source large language model and said it will be available on AWS, Databricks, Google Cloud, Microsoft Azure, Snowflake, IBM WatsonX and Nvidia NIM, with support from enterprise hardware platforms.

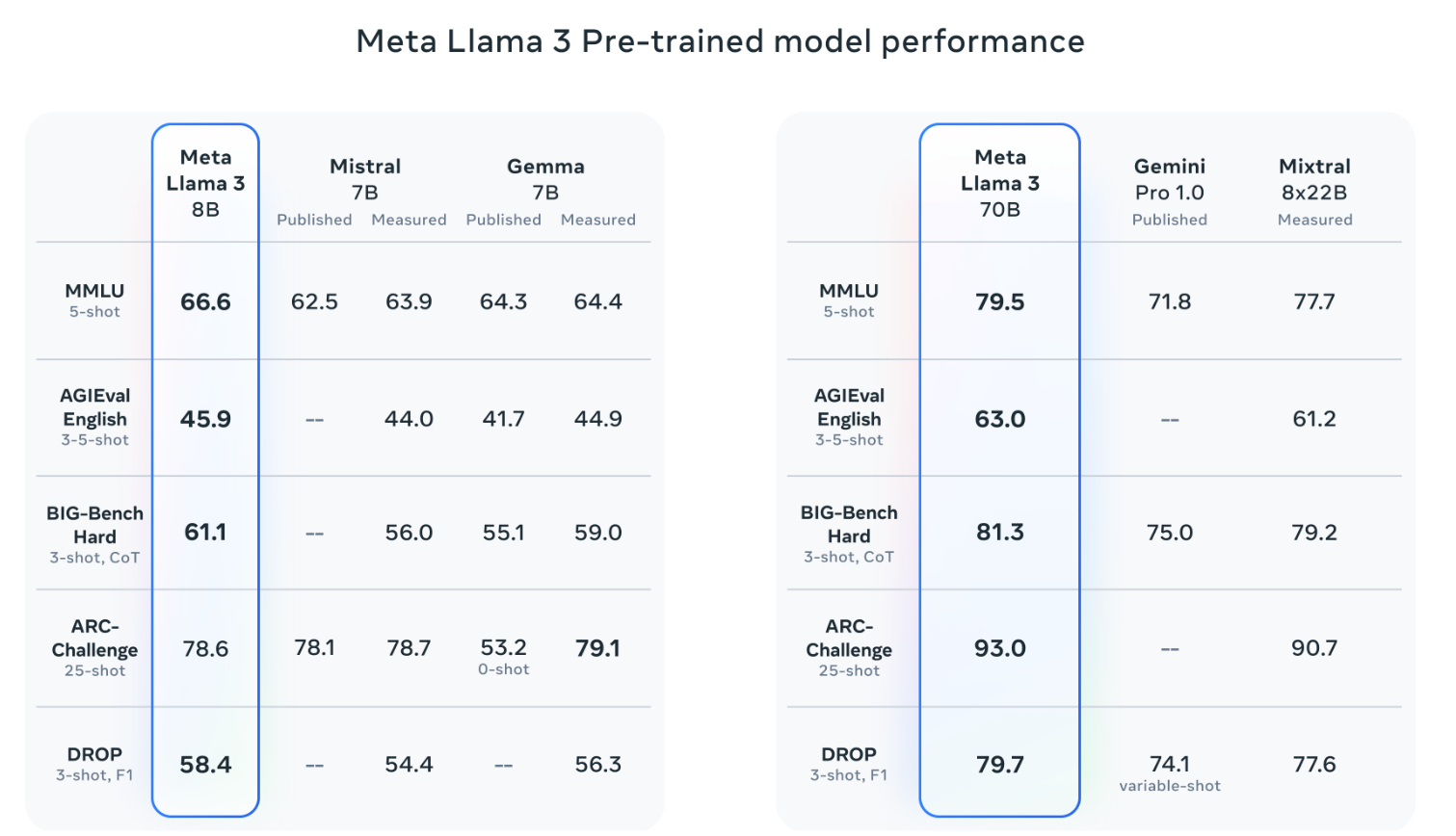

Yes, we're in the age of weekly LLMs that leapfrog each other, but Llama 3, which will initially come in 8B and 70B parameter versions with more on deck, is of interest to enterprises.

Why? Companies are likely to turn to capable open source LLMs and fine-tune them with enterprise data. Llama 3 represents a backdoor enterprise play for Meta.

In a blog post, Meta outlined the Llama 3 effort, which it positions as a big leap over Llama 2. "Improvements in our post-training procedures substantially reduced false refusal rates, improved alignment, and increased diversity in model responses. We also saw greatly improved capabilities like reasoning, code generation, and instruction following making Llama 3 more steerable," said Meta.

Meta added that it evaluated Llama 3 on prompts covering 12 use cases, including brainstorming, advice, coding, creative writing, extraction and summarization. In that evaluation, Llama 3 70B outperformed Claude Sonnet, Mistral Medium and Llama 2.

According to Meta, Llama 3 is being deployed across its applications, including Facebook, Instagram and WhatsApp. Llama 3 is also available to try on the web.

Looking ahead, Meta said a 400B-parameter model is still in training and that it will "release multiple models with new capabilities including multimodality, the ability to converse in multiple languages, a much longer context window, and stronger overall capabilities."