AWS re:Invent 2022: Perspectives for the CIO | Live Blog

I'm here at The Wynn hotel in Las Vegas as the huge AWS re:Invent 2022 confab kicks off today. The event catches the cloud services giant at a historic inflection point, as the cloud hyperscaler industry enters early maturity and the epic capital bets Amazon has made over the last decade and a half are showing their wisdom (or not). The wildcard? This is happening right as the global economy hits significant speed bumps for just the second time in AWS's history. Chief Information Officers (CIOs) are closely scrutinizing their cloud portfolios this year and next to ensure they get the most value from them. What they learn at re:Invent this week will certainly have an impact on their cloud trajectories.

Through the course of this next week, I'll be covering the main re:Invent track as live as I can here and on Twitter, as well as the separate but conjoined AWS Analyst Summit. I'll be providing real-time analysis and coverage, mostly from a CIO perspective but also from an enterprise architecture point of view. Refresh this blog to pull the latest.

Analyst Summit - Monday, November 28th, 2022

8:15am: First up, I'm heading downstairs at The Wynn for the first session of the AWS Analyst Summit which is a public cloud roundtable. This session is off the record in terms of details but I'll provide oblique thoughts as it progresses.

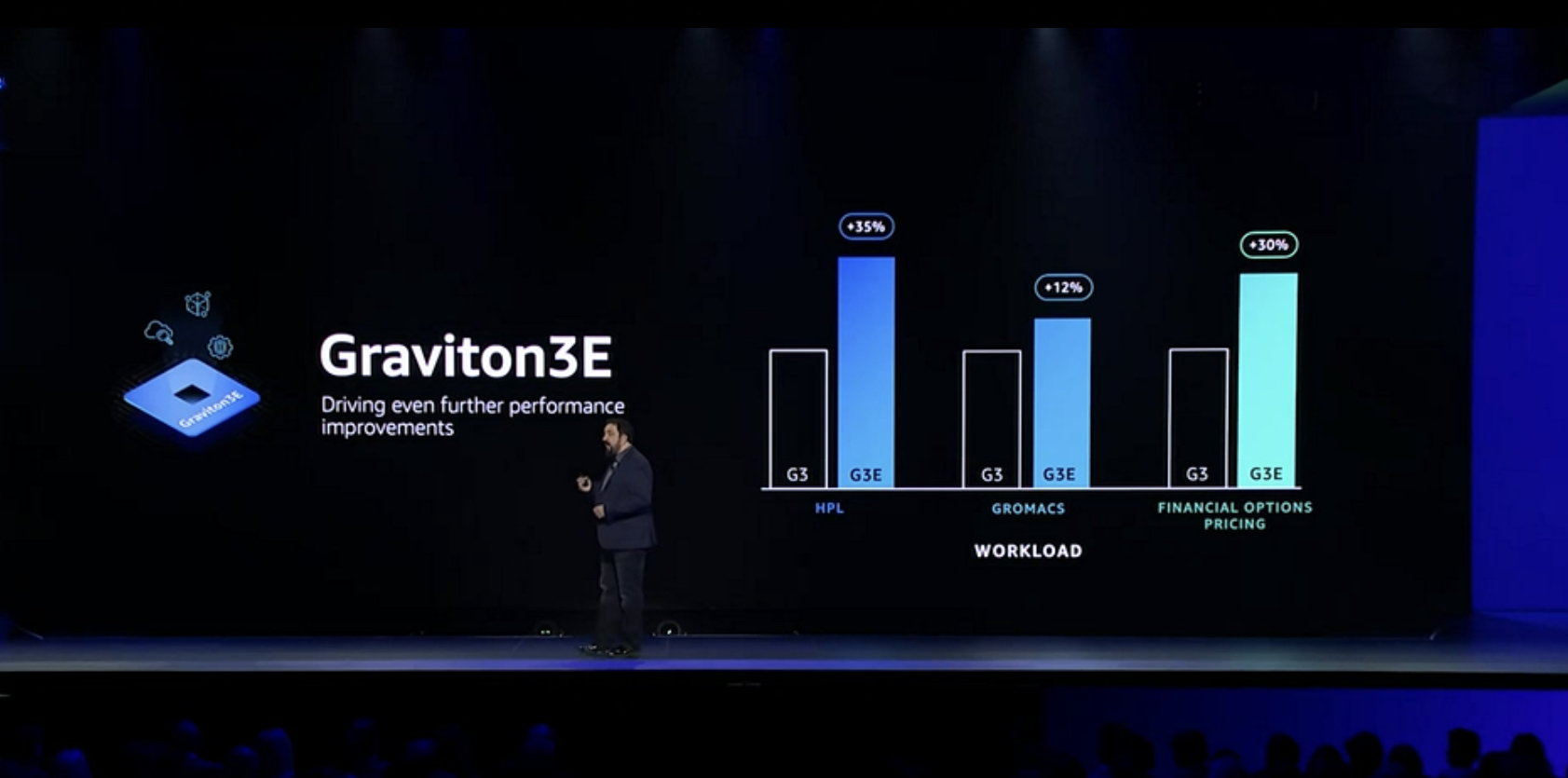

8:23am: It's clear that Amazon is very proud of its Graviton 3 custom silicon. It's 60% more energy efficient than x86. Custom silicon has become a prime differentiator as the power-hungry machine learning and AI workloads that businesses want to run consume vast horsepower in order to provide competitive advantage. Accelerating the performance of databases is also a major use case. Large language models that support intelligent conversations are another, given the training requirements of vast models and the need to drive performance and reduce the cost of using today's very large models. Graviton first emerged in 2018, and the latest iteration provides some real price/performance advantages over general purpose CPUs.

For the CIO, I anticipate that the increasing convergence of overarching ModelOps strategy with cloud strategy will begin to significantly emphasize putting custom silicon at the center of enterprise-wide ML and AI operations.

8:30am: Networking is also proving to be a vital capability, and the hyperscalers are driving cutting-edge advances in cloud networking. Expect to see a number of announcements around this during re:Invent this week.

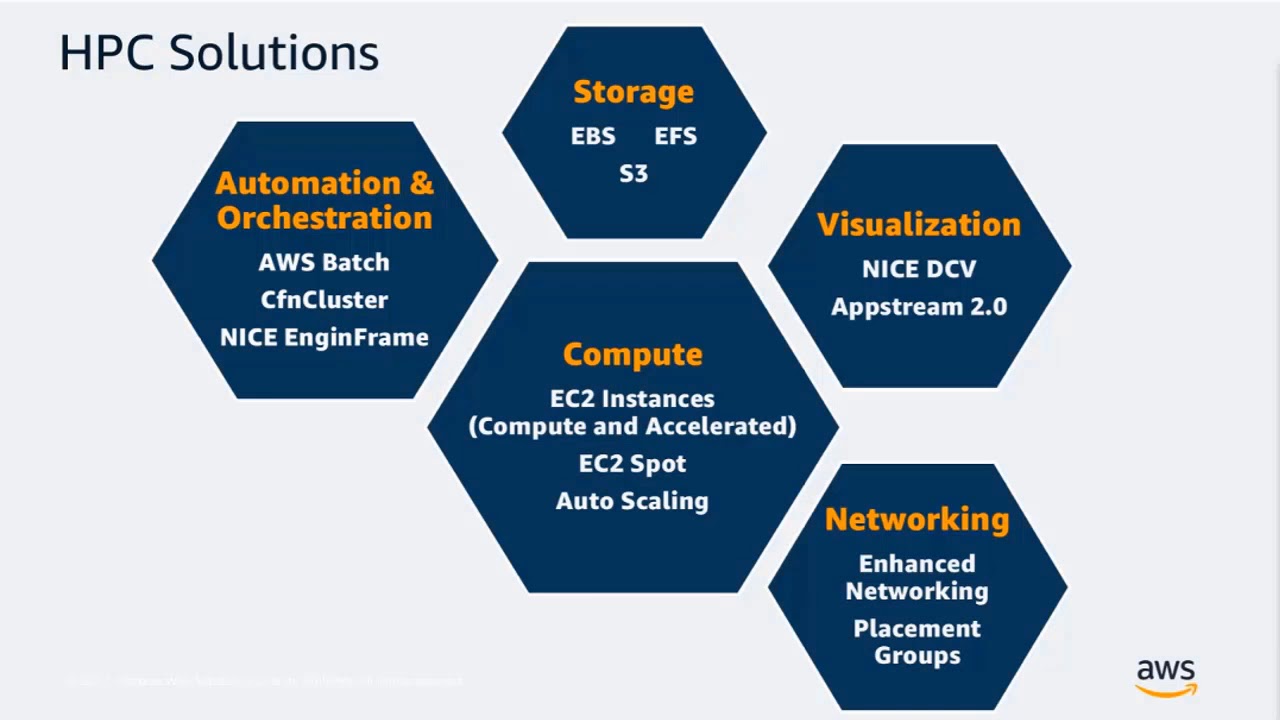

8:37am: High performance computing (HPC) is also a hot topic this week and is clearly an area where Amazon feels like it can compete at a very high level of competency. It's also likely where significant margin is. HPC instances are real investments (they aren't cheap on an hourly basis), but they do bring the elasticity and scale of cloud to the most difficult compute problems, which are likely to be at the forefront of strategic investments in IT and digital capabilities in Amazon's customer base.

8:41am: "Over-provisioning" is another phrase coming up a lot here at re:Invent. Downsizing to different, smaller instances or processor types in a seamless and automatic way is going to be a very interesting topic for the CIO and cloud operations teams. AWS's Trusted Advisor is seen as not having enough granularity to really help richer, hybrid, or Outposts environments. Lots of interest in cutting costs while also managing sustainability, such as moving workloads to the cheapest carbon neutral instance type.
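
To make the downsizing idea concrete, here is a minimal sketch of what automatic rightsizing could look like: pick the cheapest instance type that still covers observed peak usage plus some headroom. The catalog, its names and prices, and the `rightsize` helper are all hypothetical and purely illustrative; this is not how Trusted Advisor or any AWS service actually works.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: int
    memory_gib: float
    hourly_usd: float


# Hypothetical catalog: names and prices are illustrative, not real AWS pricing.
CATALOG = [
    InstanceType("small", 2, 4.0, 0.04),
    InstanceType("medium", 4, 8.0, 0.08),
    InstanceType("large", 8, 16.0, 0.16),
]


def rightsize(peak_vcpus: float, peak_memory_gib: float,
              headroom: float = 0.2) -> Optional[InstanceType]:
    """Return the cheapest catalog entry that covers peak usage plus headroom."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    fits = [t for t in CATALOG
            if t.vcpus >= need_cpu and t.memory_gib >= need_mem]
    # None signals that nothing in the catalog is big enough.
    return min(fits, key=lambda t: t.hourly_usd) if fits else None
```

A workload peaking at 3 vCPUs and 5 GiB would land on the hypothetical "medium" type here. A real tool would also need to weigh things like processor architecture, network needs, and carbon footprint.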

8:46am: Bringing the entire AWS platform -- and any other hybrid cloud resources -- into one single virtual private cloud (VPC) is seen as a 'holy grail' of contemporary cloud. But there is a concern that AWS tools are emerging more to fix point barriers to achieving this, rather than providing a well-designed, overarching VPC capability with minimum complexity and optimal efficiency/manageability. Orgs continue to be hungry for capable multicloud/cross-cloud tools to manage their cloud estates. Developers are now being forced to understand cloud networking, cybersecurity, and provisioning to a far greater level of detail than they ever wanted to. I expect announcements at both the tactical and strategic level for this during re:Invent this week (I don't have any specific knowledge of this).

8:51am: Running very large workloads is another issue at the forefront of hyperscaler services. Instance sizes within AWS are getting both smaller as well as much larger, in order to fit large models that have hundreds of millions or even billions of parameters in a single instance. Local Zones are continuing to see expansion to bring instances closer to large populations. At the same time, there are questions about whether AWS is going to consolidate its sprawl of zones and services, but it is a very challenging topic, even as many customers would like to see more simplicity in AWS services design and consumption.

9:00am: Will there be a return to specialization in cloud services? One-size-fits-all is a pernicious result of large hyperscaler services. This applies to skills as well as to architecture and service design. DevSecOps is the logical conclusion of trying to get everyone to do everything. Whether AWS sees this as a major challenge or opportunity will hopefully emerge at re:Invent this week. Enterprises want to be able to start small, but also do their cloud development and operations right and with leading practices from the beginning, without requiring an army of specialists or experts, or making everyone an expert in everything. A tough maturity topic that should be a major discussion this week.

9:05am: Simplicity, or something I refer to as complexity management, in managing AWS resources in aggregate, especially instance types, keeps coming up. Marshalling everything required to run an HPC app can be a tall order. How AWS can streamline application use cases and dynamically bring capabilities together -- and shift between them -- more easily is another overarching desire by organizations. Limiting the surface area of these challenges is a key way to start slicing cloud complexity into more bite-sized pieces.

9:10am: Data sharing of real-time applications for analytics or a real-time data model is a key area of interest as well. For example, using SageMaker to run ML models on real-time data to modify digital experience on the fly is the world AWS is trying to bring to its customers. End-to-end integration is needed that is also highly secure and compliant with privacy, security, and trust requirements.

9:21am: Cloud sovereignty rears its ugly head. Governments are busy writing specs that will have major impacts. For AWS, Local Zones are likely not the solution, though they are probably part of it. Outposts as well.

Transform with AWS Cloud Operations from Vision to Reality

12:454pm: Kicking off the first full session at the re:Invent Analyst Summit, Steven Armstrong, Vice President of Analyst Relations at AWS, talks about the week first. Highlights include AWS's Digital Sovereignty Pledge. As nations around the world introduce new laws that govern how and where businesses can keep data about their local users, the public clouds either have to offer appealing solutions or face the risk of having their customers move to local clouds that comply with rapidly emerging new geographic and operating constraints. The intent behind this pledge is to inform both lawmakers and customers that AWS is committed to rapidly developing its set of sovereignty controls and associated features across its offerings.

12:56pm: Now Nandini Ramani, VP of Observability, and Kurt Kufeld, VP of Platform at AWS, are onstage talking about making cloud operations as simple as possible, even in the face of errors. AWS is aiming for a fully self-healing, self-remediating operating environment that triggers an alarm when errors occur, but heals itself automatically if possible and provides a report in the morning.

1:04pm: Nandini is going over the planks of cloud operations: 1) Create the right environment, 2) Simplify operations, and 3) Deliver the experience. While this may seem basic, it's the second step, streamlining operations, that is the most difficult task in cloud operations centers right now. In fact, accumulating complexity is the enemy of public cloud as it continues to expand, evolve, and mature.

1:11pm: Now Kufeld is going over the details of using AWS Control Tower, an increasingly essential tool for setting up and securely governing a multi-account AWS environment. Kurt says AWS customers report that it takes anywhere from 12 to 18 months in highly regulated industries to completely assess and approve new AWS services for production. These delays limit and slow down cloud migrations and IT modernization efforts. Control Tower will gain increasingly comprehensive controls management. If this works as AWS is hoping, it will significantly reduce the time it takes AWS customers to define, map, and manage the controls that enterprises require to meet their common governance and control objectives.

1:19pm: Nandini is back on stage talking about how, as AWS and Amazon have grown and scaled over the years, so have their own observability needs. They build many features for themselves and then offer them to customers, and she says they benefit from customers who often push the boundaries and submit lots of feature requests. She reports that fully 90% of the AWS roadmap is from customer requests. CloudWatch is one of their key offerings for observability within AWS. While CloudWatch has been helpful in enabling operational excellence for many organizations, it also creates enormous quantities of data, further stretching AWS's capabilities. CloudWatch currently ingests five exabytes of log data per month from nine quadrillion event observations each month.

1:28pm: Customers are asking for cross-account visibility as an urgent capability, says Nandini. They want streamlined contextualizing of cloud logs across accounts so that customers don't have to "spend months and weeks poring over spreadsheets trying to figure out how to correlate this data across all of these accounts." It's clear that observability is providing a lot of value to customers, but in practical environments it has to be easy to use across a large number of AWS accounts as needed. Data protection is also vital, so that personally identifiable information (PII) is not exposed in logs. This is critical to enable mission critical cloud apps that fall under healthcare rules (HIPAA), GDPR, FedRAMP, etc.
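
As a rough illustration of what keeping PII out of logs means in practice, the sketch below masks two common patterns in a log line before it is stored. The patterns, the placeholder tokens, and the `mask_pii` helper are assumptions made for this example; it is not the CloudWatch feature itself, and simple regular expressions like these will miss many real-world PII formats.

```python
import re

# Illustrative patterns only: real PII detection needs far broader coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
US_SSN = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def mask_pii(line: str) -> str:
    """Replace email addresses and US SSN-shaped numbers with placeholders."""
    line = EMAIL.sub("[EMAIL]", line)
    return US_SSN.sub("[SSN]", line)


print(mask_pii("login ok for alice@example.com"))
```

The point for operations teams is that masking has to happen before logs fan out across accounts, otherwise every downstream consumer inherits the compliance problem.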

1:37pm: Nandini is paying key lip service to hybrid cloud, which is essential to function in today's modern enterprise environments. Also making passing reference to their open source investments in observability. The key is that AWS is clearly responding to the needs of operations teams to reduce complexity, to automate as much as possible, and to surface the data needed to detect what's broken, then fix it quickly. It's clear that the ethos of cloud operations for AWS is shifting to put more of the operational burden on the cloud stack, and preserve human intervention for only the situations where it's absolutely required. This is wise, and many of the announcements this week will continue to make this more of a reality. My take: The question will remain for a while how well this ethos actually works in practice in true hybrid cloud applications that use the AWS stack.

Peter DeSantis Monday Night Keynote at 7:30pm

This evening Peter DeSantis, Senior Vice President of AWS Utility Computing, is expected to provide a closer look at the latest work from AWS. He is widely expected to give a deep dive into how AWS crafts brand new market solutions that combine chips, networking, storage, and compute.

7:30pm: DeSantis is up on stage talking about the return to the Monday night format. Going to take "a look under the hood" to understand how AWS is built, and to learn about the "way we deliver some of the most important attributes of the cloud: security, elasticity, performance, cost, availability, sustainability. These are not simply features that you can launch and check off the box."

Notes that at AWS, they seek to never compromise on security and are laser focused on cost. "So how do we improve performance while also improving security and lowering cost? Well, there's no better example of this than Nitro." AWS Nitro is a key reason that AWS began building custom chips and remains "one of the most important reasons why EC2 provides differentiated performance and security." Continues a theme seen all day today that AWS is positioning itself as a performance leader in the cloud.

7:38pm: Now introducing Nitro v5 with much improved performance. "Now this new Nitro chip continues the tradition of delivering significantly improved performance." Nitro v5 has about twice the transistors of the previous generation Nitro chip, which gives it about twice the computational power. It also has 50% more memory bandwidth, and a PCIe adapter that provides about twice the bandwidth. DeSantis cites weather forecasting, life sciences, and industrial engineering as use cases needing the fastest processors, so they have paired their new Nitro v5 chip with a processor that was specifically designed to cater to high performance computing workloads.

7:42pm: Announcements coming fast and thick now. Includes new EC2 instances for Nitro. A new specially optimized Graviton 3E processor intended for HPC workloads. "There's a dance between hardware and software to deliver the peak performance that we look for. And Nitro has some really good dance partners." Going deep into the network stack and talking about their Elastic Fabric Adapter, Scalable Reliable Datagram (SRD), and multipathing of network loads. AWS has clearly gone very deep into network optimization and high performance cloud computing. "We built SRD to serve a very specific purpose."

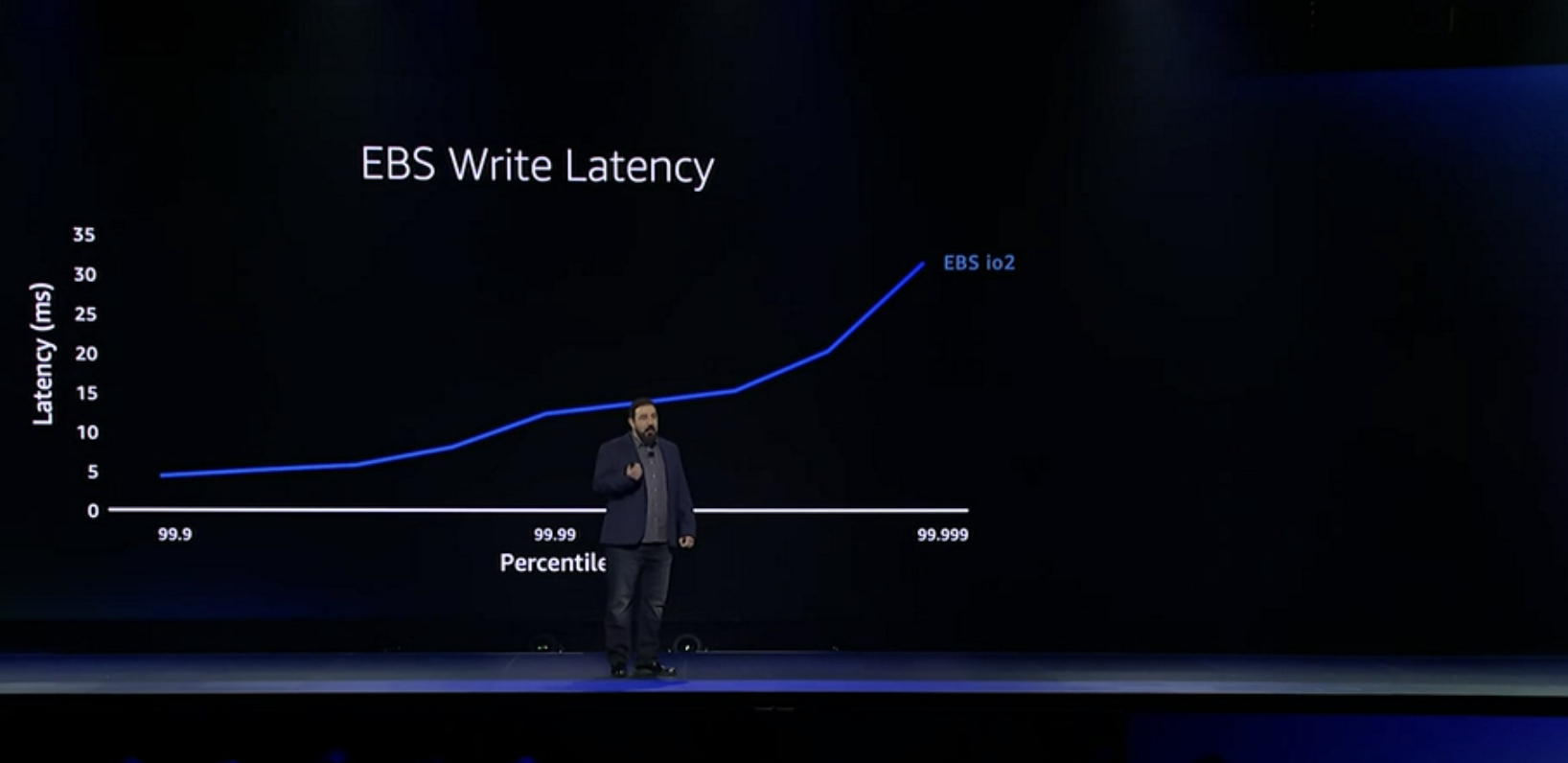

7:48pm: Says SRD has become a foundational capability that can be used in so many ways. "But what we created the ability to better utilize our massive data center network promise to help us reinvent and help customers in so many ways." Talking about write latency in the Elastic Block Store now.

7:52pm: DeSantis says one of the places where EBS is most sensitive to network latency is writes. That's because a successful write involves writing the data to multiple places to ensure durability. Each of those replications is an opportunity for a dropped packet or a delayed packet. Announces that starting early next year, all new EBS volumes will be running with SRD. SRD is clearly going to be a competitive advantage for making customer workloads run faster without compromising security, durability, or requiring "any changes to your code."
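
The replication point can be worked through with simple arithmetic. This is a minimal sketch, assuming each replica write is independently delayed with the same probability; the function name and the 1% figure are hypothetical and chosen only for illustration, not taken from the keynote.

```python
def prob_write_delayed(p_delay: float, replicas: int) -> float:
    """Probability that at least one of `replicas` independent copies is delayed.

    A replicated write only completes when every copy has landed, so a single
    slow replica slows the whole write.
    """
    return 1.0 - (1.0 - p_delay) ** replicas


# Hypothetical numbers: a 1% chance of delay per replica, three replicas.
print(round(prob_write_delayed(0.01, 3), 6))
```

Under these assumptions, a 1% per-replica delay rate becomes roughly a 3% chance that the write as a whole is delayed, which is why tail latency in the network matters so much for replicated storage.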

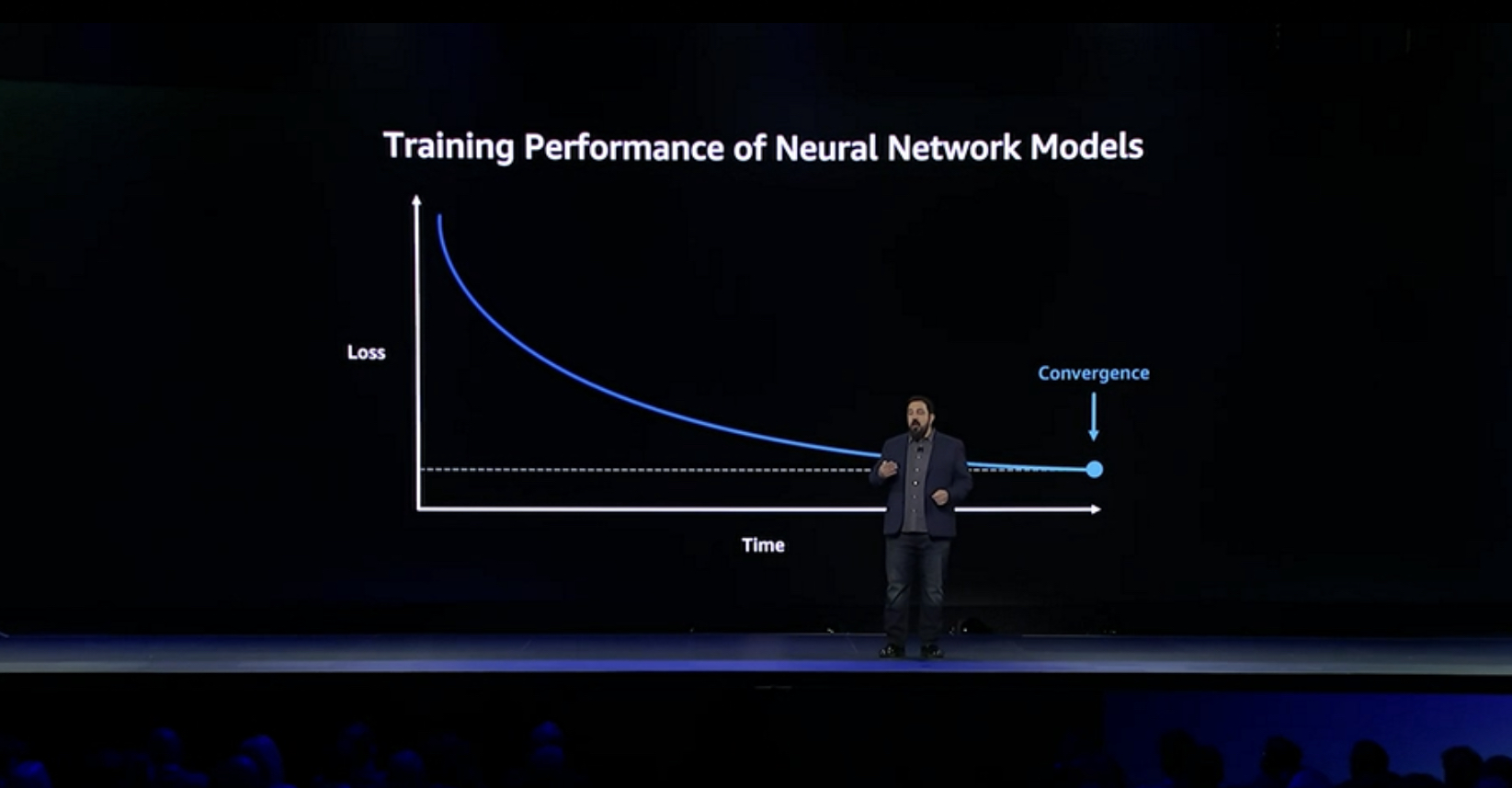

7:55pm: Switching from SRD, which will be "in everything" to machine learning. DeSantis says foundational models have emerged that can generate natural language, images, and 3D models. The capabilities of these models are closely related to the number of variables that are contained in the model. "While we see large models today being trained with over 1 trillion variables. It won't be long before 1 trillion variables are common. It won't be long until we see the next order of magnitude in complexity and scale, and capabilities."

8:00pm: DeSantis says the interesting thing about ML training workloads is that there are so many potential bottlenecks. Each iteration of a model is computationally intensive. This requires a great deal of number crunching, and specialized hardware to do that number crunching efficiently. But the most valuable ML models are getting really, really big, which means servers with large amounts of memory. Enterprises often find themselves needing to scale across multiple servers. But ML models are huge graphs of interconnected parameters, so when models must be divided across multiple servers, ML apps end up needing to communicate frequently. And this communication itself over the network can become a bottleneck. Looks at how AWS is working hard to remove all of these bottlenecks with purpose-built machine learning hardware. Cites the special purpose-driven Trn1 instance using the Trainium processor.

8:05pm: DeSantis beats the performance drum in machine learning applications. Scaling linearly whenever possible, especially without creating fast rising costs. "Let's look at what happens when we scale our training across multiple chips and multiple servers. The name of the game here is efficiency. The more time we can spend training our model and the less time we can spend communicating and sharing results, the more efficient we'll be and higher efficiency results in lower costs." ML apps are an arms race that requires enterprises to figure out how to most cost effectively deliver compelling results more quickly and cheaply than their competitors.

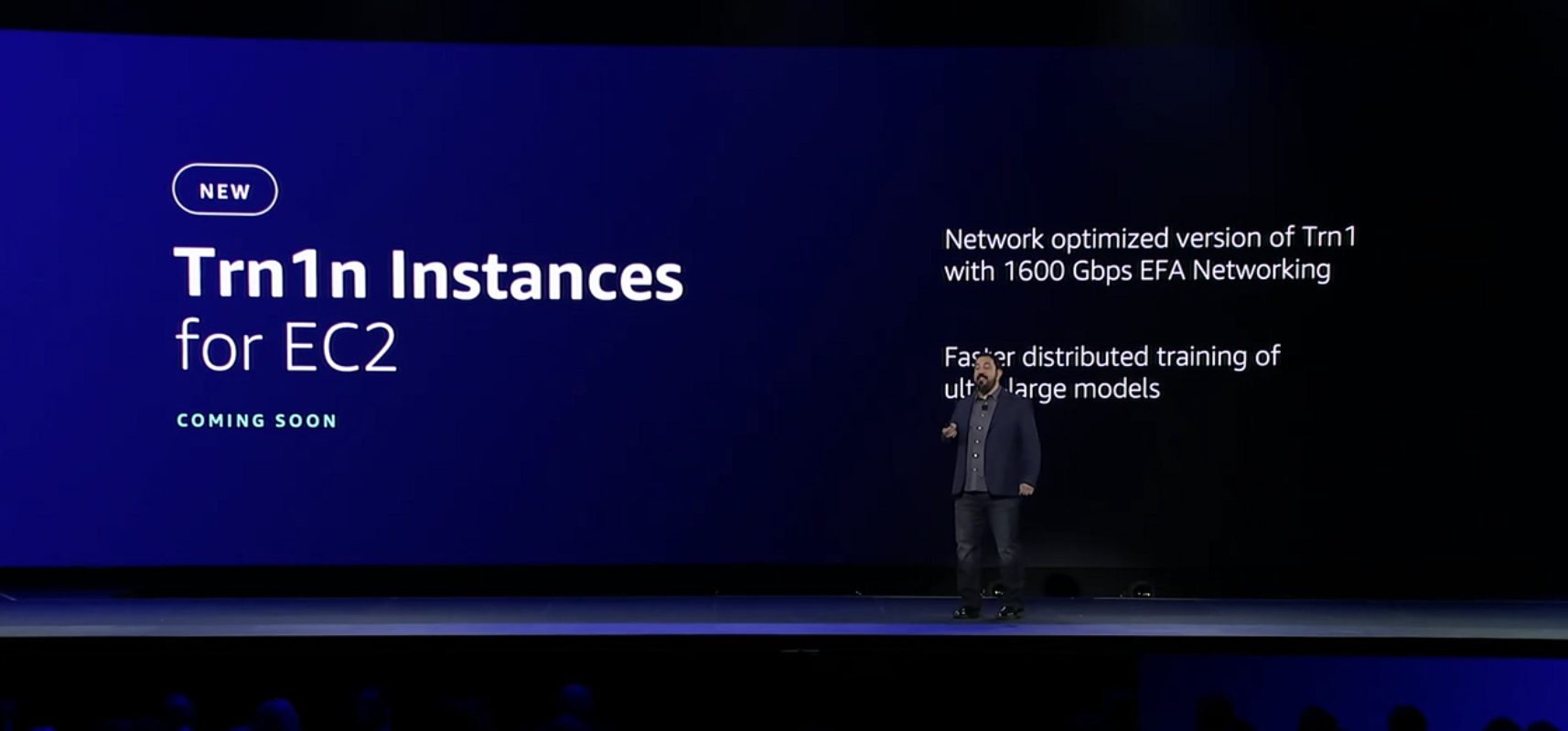

8:10pm: Now exploring detailed scenarios on scaling up machine learning applications. "In our example of about 128 processors, every new processor makes the process take longer. And this is because the process becomes latency dominated, rather than throughput." Working on sophisticated algorithms and approaches to create "rings of rings" to turn linear algorithms into logarithmic algorithms. Demonstrating their deep competency in scaling ML algorithms. While CIOs won't need to understand the details of this, the keynote is a proof point that AWS is thinking profoundly and executing in-depth on delivering on the current frontier of computing, breakthrough ML/AI use cases that require extremely large models. Introduces the new Trn1n instance that will enable extremely large machine learning models.
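
To see why nesting rings helps when latency dominates, the sketch below counts communication steps under a textbook cost model for ring all-reduce. It is an illustration only: the keynote did not spell out the actual algorithm, and the function names and the choice of ring size are assumptions.

```python
def ring_steps(n: int) -> int:
    """Steps for a flat ring all-reduce over n workers:
    (n - 1) reduce-scatter steps plus (n - 1) all-gather steps."""
    return 2 * (n - 1)


def ring_of_rings_steps(n: int, ring_size: int) -> int:
    """Steps when workers are grouped into nested rings of `ring_size`.

    Each nesting level costs one small ring all-reduce, and the number of
    levels grows with the logarithm of n (rounded up).
    """
    levels, covered = 0, 1
    while covered < n:
        covered *= ring_size
        levels += 1
    return levels * 2 * (ring_size - 1)


for n in (8, 128, 1024):
    print(n, ring_steps(n), ring_of_rings_steps(n, ring_size=2))
```

In this model a flat ring over 128 workers needs 254 sequential steps, while rings of two nested seven levels deep need 14. Each step pays a fixed network latency, so the step count is what dominates once the per-step payload becomes small.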

8:15pm: Bringing Ferrari out to talk about Formula 1 race cars. A popular trope but an industry that just about every vendor talks about. "But racing is not an exact science in striving to understand every aspect of performance. We gather data, lots of data, huge amounts of data. Let's make the most responsive, reactive performance car we possibly can [using data.]" Using customized driver-specific ML models that can help each driver uniquely "find their peak of performance, their pinnacle where the 1000s of performance differentiators come together."

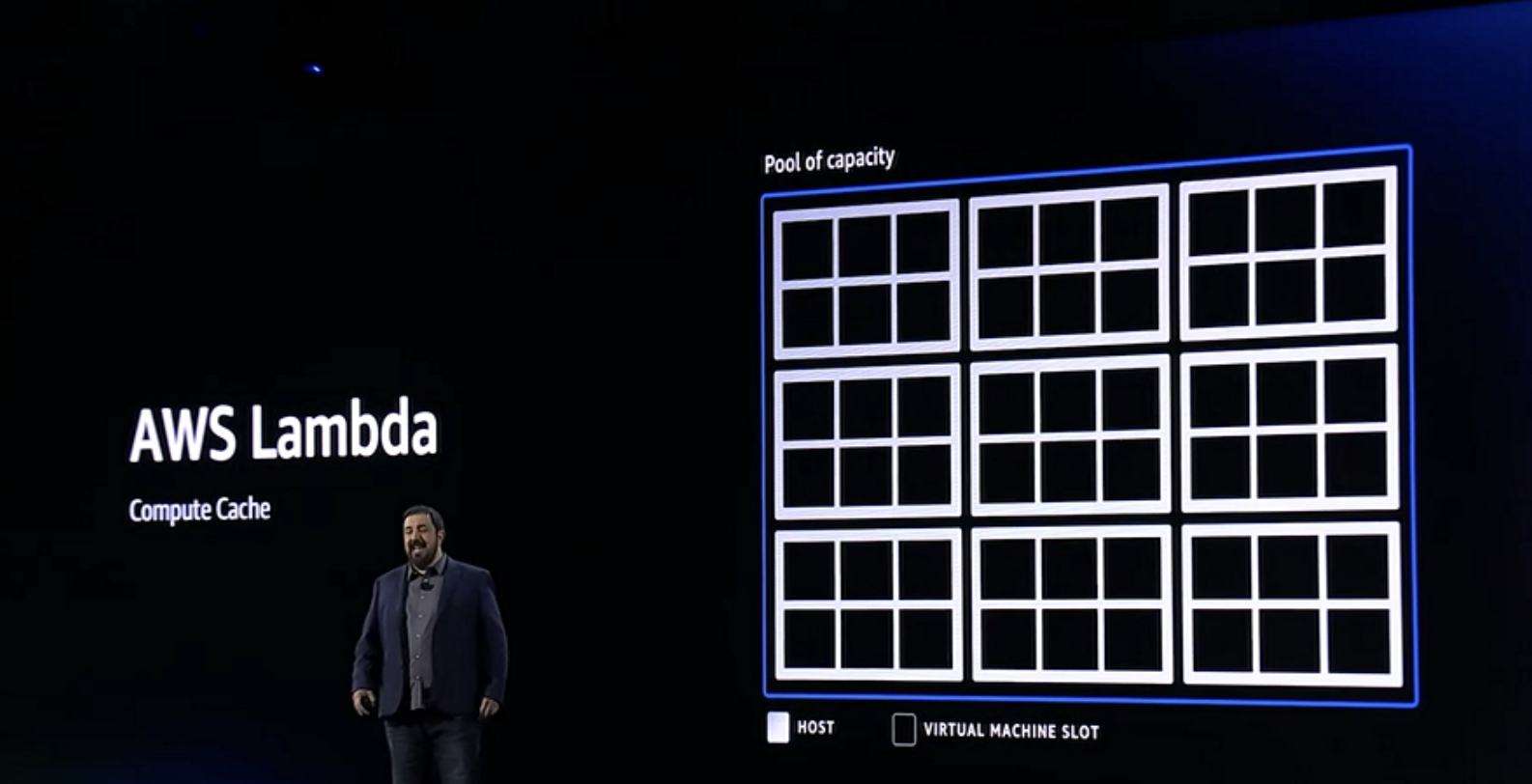

8:26pm: DeSantis back out on stage. Tonight's theme of performance continues. Talking about serverless now, one of the most important long-term trends in cloud that I find most neglect in their cloud strategies. "With serverless computing, you just focus on the code that provides differentiated capabilities." Talking about how to make multitenancy work in order to provide the lowest possible cost. The languages supported by AWS Lambda aren't designed for multitenancy, and tenants could read/write each other's memory. Process isolation is not enough. Virtual machine level isolation is required, but has many performance implications.

8:37pm: Talking about new micro-VMs called Firecracker, which "combines the security and workload isolation of a traditional VM with the efficiency that generally only comes from process isolation." Can start new instances in a fraction of a second, instead of the seconds a traditional virtual instance takes. Introduces Lambda SnapStart, which significantly reduces cold start times.

Tonight's keynote was a demonstration of AWS's laser-like focus on high performance cloud computing and machine learning in general. While serverless might seem more of an afterthought in tonight's discussion, it's almost as important as the whole ML/AI conversation, as it pushes most provisioning into policy and allows moving quickly across the entire DevSecOps chain. But for IT leaders, the big takeaway here is that AWS is committed to bringing customers highly differentiated performance while still preserving full security capabilities, and to doing it cost effectively. AWS seeks to be the definitive go-to provider of hyperscaled performance-based solutions, even in very challenging application spaces.

Adam Selipsky Tuesday Morning Keynote at 8:30am

8:30am: After a high-energy opening, Adam Selipsky welcomes everyone to the 11th annual re:Invent. Now citing customer stories of BMW and Nasdaq. "AWS is part of the foundation of today's capital markets." Commits to being powered by 100% renewable energy by 2025. Says they are the largest corporate purchaser of renewable energy in the world.

8:38am: Makes the case for the cost effectiveness of AWS services, which can be used for cost savings yet be ready for innovation and growth when opportunities arise.

8:46am: Exploring the latest developments in space, Selipsky cites the need to use different tools to account for the scale and variety of the data. Provides examples at scale of major customers like Expedia, Samsung, and Pinterest.

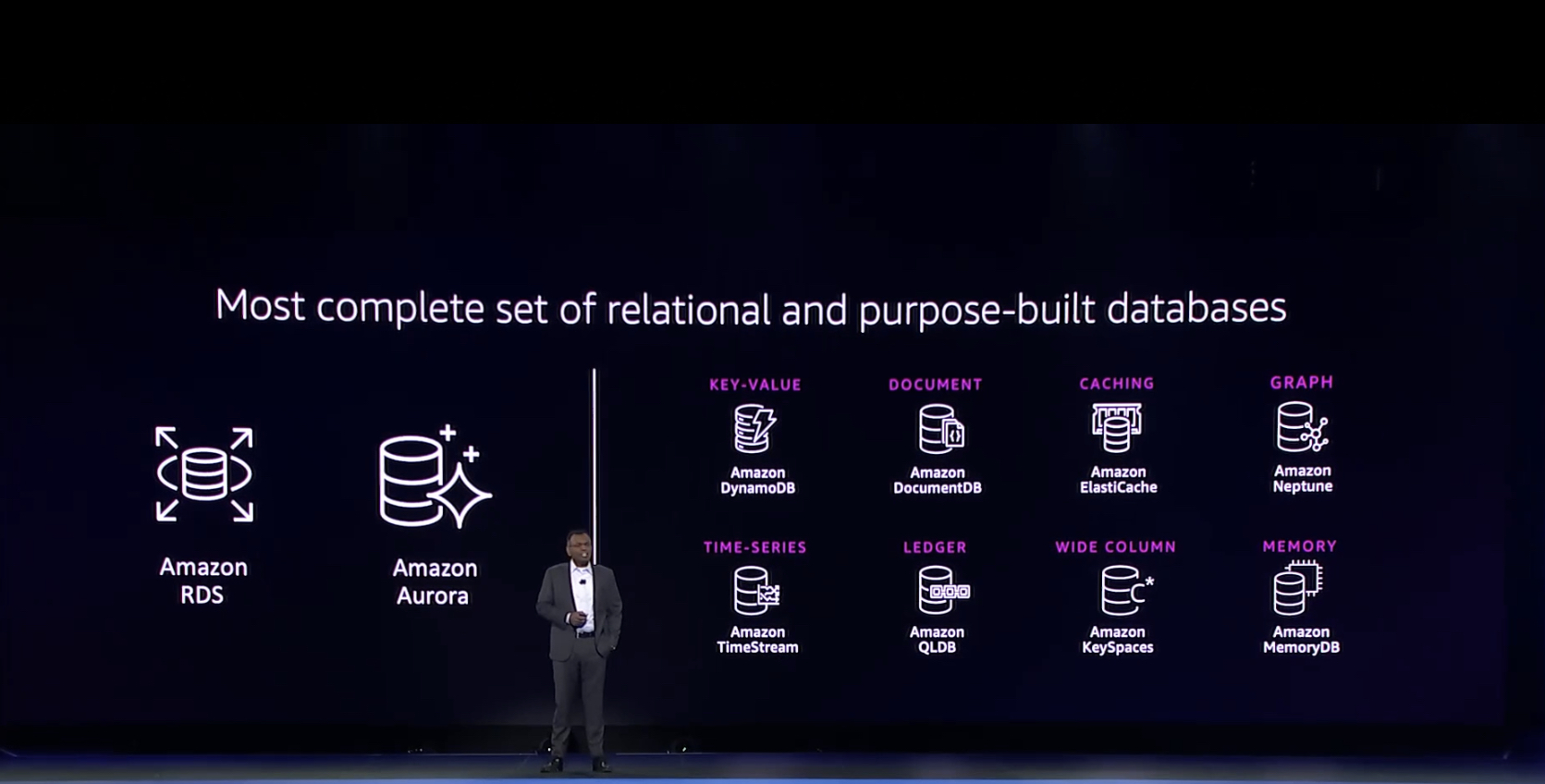

8:51am: Addressing the concerns about so many different services that do the same thing (the space telescope analogy was to help explain this), Selipsky is showing how each database solution they have meets a specific need. Announces the serverless version of OpenSearch. Says there are now serverless versions of all their database services. Provides clear visuals that show how each cloud database solution has a right place in the portfolio.

8:53am: Talking about Engie, a French company that uses data, analytics, and machine learning to optimize how they produce renewable energy. The message here is that data and digital tools play a key role in energy transformation. Most renewable energy sources are intermittent. To optimize the harvesting of this energy, Engie uses predictive analytics, machine learning, and the Internet of Things (IoT). They also use blockchain. Today, they have a common data hub where they store more than one petabyte of data that they use across more than 1,000 projects. The data hub is built on S3, and they use Redshift and SageMaker as well. Net result: 15% reduction in energy consumption. 60% savings on runtime in the cloud. By far the most in-depth customer story so far.

9:03am: As customer stories dominate much of the Selipsky keynote, it's clear AWS is getting feedback that customers want to hear what other customers are doing. Selipsky back on stage. Now showing "The right tools", a long list of AWS services for data. Making the case that the vast data realm requires the 1) right tools, 2) integration, 3) governance, and 4) insights. Now talking about ETL (extract, transform, and load), citing a customer that says maintaining an ETL pipeline is a thankless job. Says integration across Redshift, Athena, AWS Data Exchange, and SageMaker can help. Says zero-ETL must be the goal and the future. Now announcing an Amazon Aurora zero-ETL integration for Redshift and Apache Spark.

9:10am: Now talking about ways to make data accessible yet also compliant and controlled. The balance between too much control and too much accessibility is hard to achieve. "We believe that people are naturally curious and smart." Establishing the right governance gives people trust and confidence. It encourages innovation, rather than restricting it. Yet notes that establishing enterprise-wide governance across all teams and functions is a tall order. It's also critical. Announcing Amazon DataZone, a data management service to catalog, discover, share, and govern data. Fine-grained controls. Data catalog populated by ML and easy to search using business terms.

9:16am: Exploring the new ML-powered forecasting with Q, now generally available to explore the vast realm of data. "You need all the tools to handle the vast and expanding volumes of data." When you have all of this, you have a complete end-to-end data strategy. Says AWS is the best place to do this as they heavily invest across the "entire data journey." Goal: To unlock the value of data. In my analysis, this is a response to the criticism last year about continually announcing so many data stores and database solutions. The story is definitely clearer and more holistic now: organizations simply must have a wide variety of tools to handle the many types of data, business requirements, and use cases that enterprises routinely face.

9:20am: Now moving from the analogy of space to exploring underwater. (Clearly, there is a desire to better explain the great depth and breadth of the AWS cloud portfolio.) Like the space analogy, Selipsky is really running with the undersea analogy. That there is so much left to learn, with the right protections, we can explore with confidence and security. "Same with modern IT." Makes case that a strong core of protection combined with ability to still see what is around you, will enable the digital transformation that organizations must undergo today.

9:26am: Talking about containers now. Announces container runtime threat detection for GuardDuty. Detects threats running inside your containers. Identifies attempts to access the host node. Integrated with EKS. Now back to security. Thousands of 3rd party security solutions. Now talking about the new Amazon Security Lake for all the different security standards. Already supports the new Open Cybersecurity Schema Framework (OCSF) standard. Draws on many AWS sources and partner sources to bring all security logs under one umbrella for the entire security data life cycle, and handles retention. This is a big win and long term will be a serious boon for fighting bad actors and creating a more manageable cybersecurity operations lifecycle.

9:33am: The exploration theme has moved over to Antarctica. Tells the story of Amundsen, and the many techniques and approaches his team used in extreme conditions. Notes that Amundsen won the race to the South Pole. Makes the case that "good enough" simply isn't good enough in extreme environments. Says the same is true of AWS customers, and is why they offer such an array of tools to deal with the difficult operating environments of today. Cites reduced simulation time for Formula 1 by 70%. Says Nielsen uses AWS to support hundreds of billions of events per day. Reports that AWS now has over 600 instance types to meet just about any type of need or use case, many based on chips not available anywhere else.

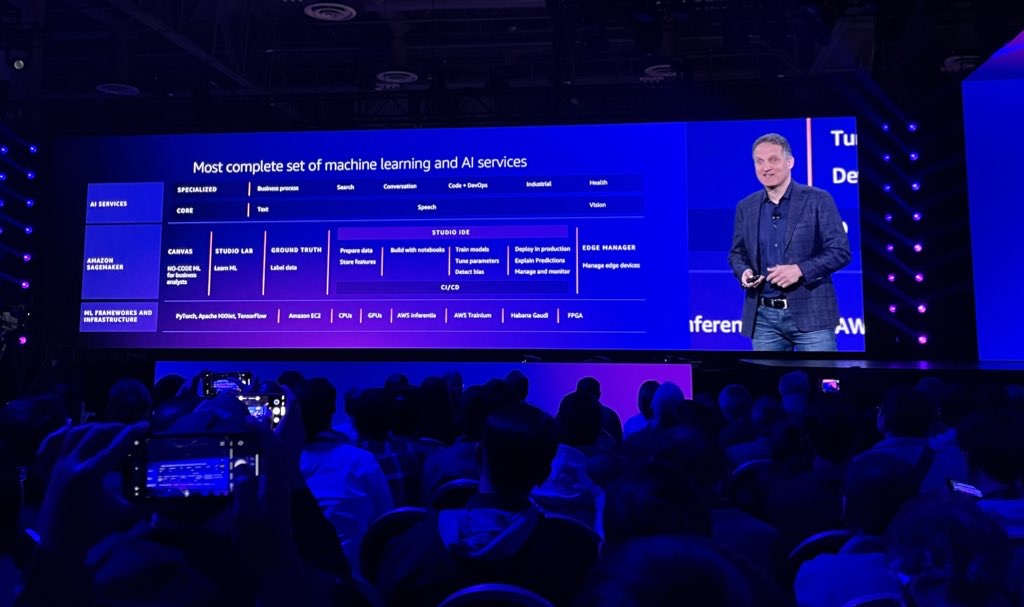

9:42am: Today's machine learning models have grown to use hundreds of billions of parameters, a hundredfold increase in just a few years. This is dramatically driving up the cost of training machine learning models. The Trn1 instance can lower training costs by 50%, says Selipsky, and he claims it offers the best price performance in the industry. The Inf1 instance for EC2 provides 70% lower inference costs. It's clear cost and performance are the big message at re:Invent this year, along with a better explanation of tool proliferation and solution density in the AWS portfolio. "You can choose the solution that is the best fit for your needs," says Selipsky. Announces Inf2 instances for EC2, with 4x higher throughput and just 1/10th the latency.

9:49am: Calling Siemens up on stage to explore their use of AWS services to design, test, and optimize a spacecraft. Used to take weeks, but now can be done in eight hours. Talks about Spaceperspective.com. The head of industrial business notes that 24 out of 25 manufacturers use their software. Cites their Dream It, Make It approach. AWS has helped move their industrial platform to the cloud and make it an as-a-service offering. Unleashes the data within factories as well. Talks about using the Mendix low code solution in the AWS Marketplace. Allows anyone to build any application on any service, 10x faster, Siemens says (and low code is a hot topic in IT circles right now). Industry Revolution 4.0 is being enabled by the partnership between AWS and Siemens by a) taking their software into the cloud, b) solving business problems, c) unleashing the value of data, and d) enabling builders to scale with new ideas. A good case example.

10:01am: Selipsky returns to stage. Talking about AWS SimSpace Weaver, to run massive spatial simulations without managing infrastructure. Supports Unreal Engine and Unity. "All your extremes" is the message here. Graviton for cheaper training. Or performant, easy-to-use tools to manage simulations. Switching quickly to enabling imagination. Talking about J.M. Barrie's and Tolkien's imagination. Citing the Rings of Power Amazon series. "Imagination is a collaborative effort. Creativity flourishes in the company of other explorers." Gives three steps: 1) Removing constraints, 2) Combining disparate ideas, and 3) Collaboration (and hopefully execution).

10:08am: Talking now about Amazon Connect. Announcing new capabilities: New ML-driven forecasting, capacity planning, and scheduling. "Today tens of 1000s of customers use Connect to support more than 10 million interactions per day, usable, deployed connected, just three weeks. Convoy improved the percentage of escalation calls that are answered in less than one minute by 50%." Priceline enabled more than 1,000 agents to work from home using Connect.

10:15am: Announcing AWS Supply Chain. A lot of positive noise from the audience about this. The long awaited supply chain/ERP solution from Amazon. And potentially the productizing of their crown jewels: "Many AWS customers have asked us whether we would take Amazon supply chain technology and AWS infrastructure and machine learning to help them with their supply chain." Now announcing AWS Clean Rooms for safe collaboration on shared data. Maintains privacy for everyone.

10:20am: Bringing Lyell up on stage to talk about cell therapy to fight cancer. Takes human cells from its patients and has a sophisticated supply chain process that must comply with regulations, with the result being a specific cell therapy. AWS was "the obvious choice" for a strategic cloud provider. Implemented cloud tech to create a next-gen cell therapy facility using advanced analytics. Good example of healthcare and life sciences.

10:28am: As Selipsky returns to stage, he talks about the fields of "omics", which must deal with millions of biological samples. Cost, scale, special tools, and privacy are the top four factors. Safe, secure, and compliant, while enabling collaboration. Announcing the general availability of Amazon Omics. Now moving to retail and talking about Amazon's Just Walk Out technology. Amazon One tech to pay with your palm, so you can just walk out. Don't need wallet or phone. "We've seen the example the Seattle Mariners that reported that transaction at the Mariners store went up over three times after installing just walk out technology."

10:35am: Wrapping up the keynote with the repeat of the big themes: "What it takes to thrive in uncharted territories and seize the opportunities. This is the real power of the cloud, how we can help to instill that mindset exploration allowing you to transform, increase how fast you can innovate, by creating environments where people with ideas to try them out and then iterate quickly." And most of all, "The power of the cloud." That's a wrap.

AWS Vertical Industry Leadership and Strategy, Tuesday

2:30pm: WW Director of Business and Corporate Development, Josh Hofmann, is up exploring how AWS solves the "biggest industry problems." They do this by meeting customers where they are, working backwards (a popular and well-known internal AWS approach), from their specific challenges, opportunities, and needs. It requires a bottoms-up approach focused on individual roles, says Hofmann.

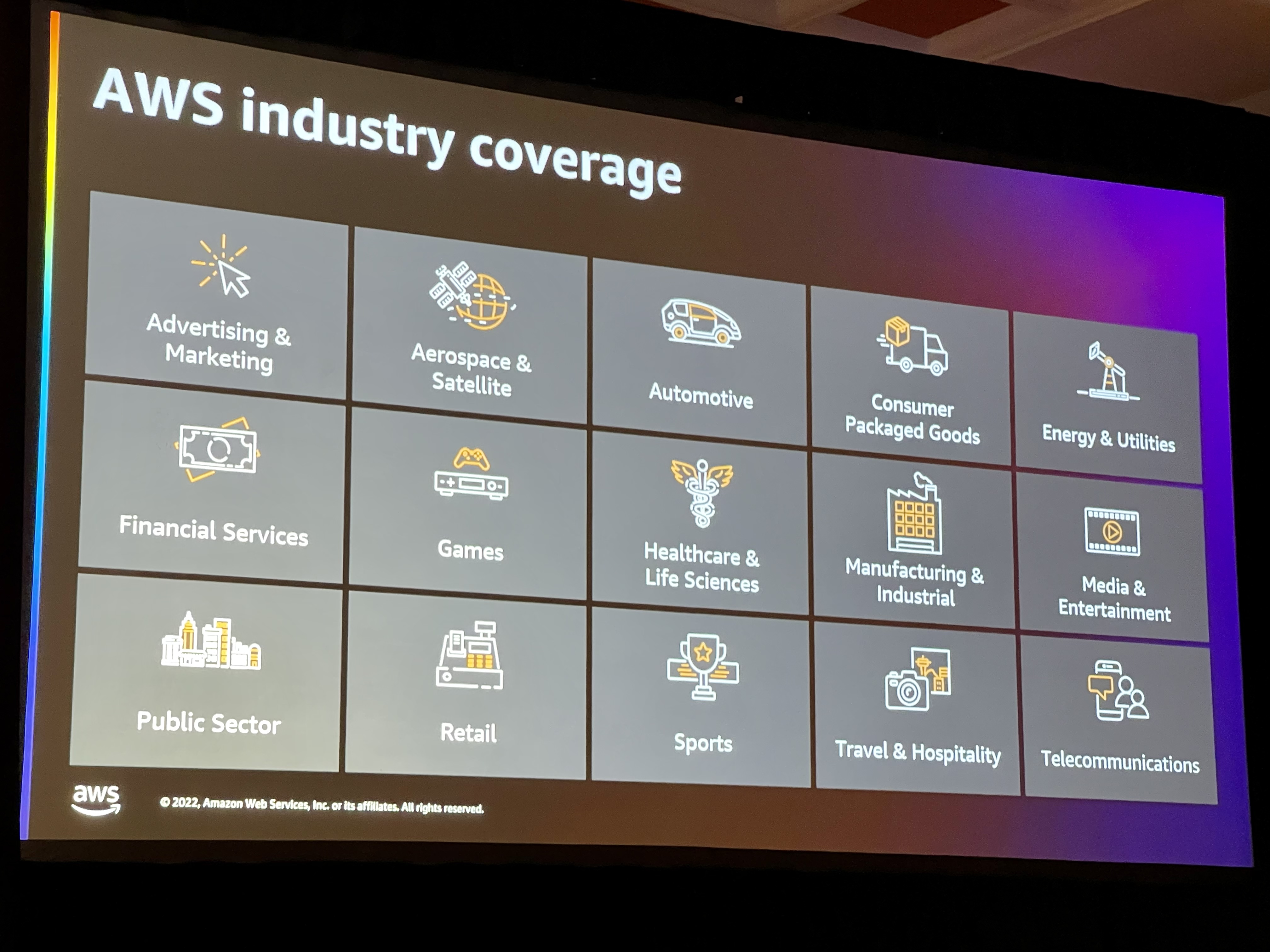

2:37pm: Industry development at AWS involves four planks: 1) Industry expertise and experience 2) Industry-specific purpose-built services and solutions 3) Industry-focused partners and 4) Industry-centered customer engagement.

2:41pm: Bill notes that "they focus on big groundbreaking use cases that we have the opportunity to learn from. And it's really how we get hyper focused on the use cases that allow transformative types of outcomes for our customers. So in some cases, we productize these capabilities as we're learning from them." Something worth carefully noting as CIOs must be careful not to have their unique capabilities productized into their cloud providers' platforms.

2:44pm: In total, AWS is currently focused on 18 different industries where they have dedicated coverage, meaning that AWS has very specific roles for each industry that they hire for around the world. They also have partner competencies and other things that are all centered around the different industries where they have dedicated focus. Looking at the different roles that AWS has dedicated to each of the industries, the first and one of the most important is what they call industry specialists. They also have dedicated professional services: out of the 18 industries that they cover, 10 have professional services practices dedicated to them. That means each practice is developing templates, patterns, and repeatable assets for its industry, with dedicated teams of people in AWS's professional services.

2:50pm: AWS also has solution architects that they hire from each of the industries, who provide guidance on how to implement many of the industry solutions that they have. They also have regulatory and compliance experts. In highly regulated industries like financial services, healthcare, and energy, AWS has experts around the world who help shape policy, as well as provide specific tools and other methods to help customers understand how they become compliant.

2:55pm: AWS has hired hundreds of industry specialists from around the world. They have hired experts who are former PhDs who have actually run clinical trials or who have built clinical trial infrastructures for some of the world's largest pharmaceutical companies. They've hired former trading executives who have built the technology infrastructure that powers many of the world's largest capital markets. They've also hired former factory leaders who actually worked on the shop floor and rolled out solutions to workers on the shop floor in ways that let them understand how to use this technology. So in every industry, AWS has hired these types of leaders to help them deeply understand what their customers are trying to do and what use cases they can enable. AWS also has a sophisticated process for separating purpose-specific IP from partner-specific IP.

3:04pm: Over 100,000 AWS partners currently exist from 150+ countries. They don't use the giant logo page approach. Instead, AWS has a Partner Competency Program that makes sure partners have demonstrable customer success, are AWS well-architected, and are deeply familiar with the industry. They have 13 industry-specific partner competency measures so far. This is something that few companies short of AWS's size can achieve while still maintaining partner numbers this large. Cites many industry partner examples including Deloitte, onscale, and Goldman Sachs.

3:08pm: The last piece of their industry strategy is how to engage and communicate it to customers. Customers want to be able to easily discover, assess, deploy, and run new industry-specific partner capabilities. AWS uses industry-specific channels to achieve this, such as key industry events/conferences and leading industry publications (American Banker is an example cited in financial services). They also hold innovation days and industry symposiums that are regionalized in many different countries.

In short, in my analysis AWS is about as well-organized and structured to tackle industry-specific solutions as is currently state-of-the-art in the industry. If they fall short in the vision, it is because they are hampered by the fact that many of their industry customers just want to move to cloud more directly first, and engage less in cloud transformation until later. That said, this can be addressed over time as their customers speed up cloud adoption and transformation. It's also clear that most organizations are not well-prepared to directly engage in transformation using the cloud primitives that AWS provides (compute, storage, networking, etc.). Instead, AWS's industry approach is much more outcome focused and aligned much more closely with the actual businesses of its customers. CIOs are advised to almost always include industry-specific solutions from AWS and its partners in their cloud evaluation and adoption strategies.

Swami Sivasubramanian AI and ML Keynote, Wednesday

8:30am: Swami is up on stage talking about the process that scientists use to come up with new ideas. Aha moments are actually the result of thousands of hours of previous inputs. The effort behind individual innovations is itself the result of a scientific process. This narrative is part of a real attempt to explain the complex cloud stack at AWS as something arrived at after nearly two decades of innovation.

8:39am: "I strongly believe data is the genesis for modern invention." Makes the case that organizations need to build a dynamic data strategy that leads to new customer experiences as its final output. Underscores how today's organizations have the right structures and technology in place that allows new ideas to "form and flourish."

8:47am: Swami makes the case that AWS supports organizations' data journey with "the most comprehensive set of data services from a cloud provider." These services support data workloads for applications with a set of relational databases such as Aurora and purpose-built databases like DynamoDB. AWS also offers a comprehensive set of services for analytics workloads, like SQL analytics with Redshift, big data analytics with EMR, business intelligence with QuickSight, and interactive log analytics with OpenSearch. Shows the whole inventory of relevant Amazon services.

8:51am: Swami now explores how AWS provides a broad set of capabilities for today's increasingly important machine learning models, with deep learning frameworks like PyTorch and TensorFlow running on optimized instances, and services that make it easier for organizations to build, train, and deploy models end to end. Cites AI services with built-in machine learning capabilities, such as Amazon Transcribe. Claims that all of these services together can form an end-to-end data strategy, which enables enterprises to store and query data in databases, data lakes, and live data streams. Importantly for enterprises, Swami makes a key note on governance: "You can on your data with analytics, PII and machine learning and catalog and govern your data with services that provide you with centralized access controls and services like Lake Formation and Amazon DataLake to dive into later on."

8:54am: Announces Amazon Athena for Apache Spark. "Apache Spark is one of the most popular open source frameworks for complex data processing, like regression testing or time series forecasting. Our customers regularly use Spark to build distributed applications with expressive languages like Python." Swami reports that customers told them they want to build interactive applications and perform complex data analysis using Apache Spark, but do not want to face the infrastructure setup and maintenance required to keep it operational.

8:57am: Beating the performance drum that has been a consistent theme this week, Swami says that an organization's data foundation should perform at scale across all data sets, databases and data lakes. Cites some performance metrics at scale: "Today Amazon Aurora auto scales up to 128 terabytes per instance at up to 1/10th the cost of other legacy enterprise databases. DynamoDB was able to handle more than 100 million requests a second across trillions of API calls on Amazon Prime Day this year." Then announces the general availability of Amazon DocumentDB Elastic Clusters, a fully managed solution for document workloads of "virtually any size and scale."

9:00am: Brings Expedia on stage to describe how they've been using AWS to drive data-driven personalization. Connects to over 160 million loyalty members and over 50,000 B2B partners, with over 3 million properties and 500 airlines and cruise lines. Notes they are a technology company. Have gathered decades' worth of data on travel behaviors, booking patterns, and preferences.

9:08am: Swami back on stage talking about how AWS removes the "heavy lifting" from creating a world-class data foundation. Says they are always looking for ways to tackle customer pain points by reducing manual tasks through automation and machine learning. Gives examples: "For instance, DevOps Guru uses machine learning to automatically detect and remediate database issues before they even impact customers, while also saving database administrators time and effort to debug issues. Amazon S3 Intelligent Tiering reduces ongoing maintenance by automatically placing infrequently accessed data into lower cost storage classes, saving users up to $750 million to date."

9:12am: Notes that 80% of all new enterprise data is now unstructured or semi-structured, including assets like images and handwritten notes. This makes preparing and labeling unstructured data for analysis difficult, complex, and labor intensive. These data sets are also typically massive, which means time-consuming data preparation before organizations can even start writing a single line of code to build ML models. Claims tools for analyzing and visualizing data are really limited, making it harder to uncover relationships within data. Talking geospatial data now. Announces Amazon SageMaker with geospatial ML capabilities.

9:18am: Reports that customers' analytics applications on Redshift have now become mission critical. Customers told AWS they wanted analytics with the same level of reliability that they have with their databases like Aurora and DynamoDB. Announcing Amazon Redshift Multi-AZ, a new configuration that delivers the highest levels of reliability.

9:22am: New announcements are coming very quickly, including Trusted Language Extensions for PostgreSQL. Allows developers to securely use extensions on RDS and Aurora. Makes highly capable, convenient-to-develop cloud apps far more secure and reliable. Next up is Amazon GuardDuty RDS Protection, which enables intelligent threat detection "in just one click."

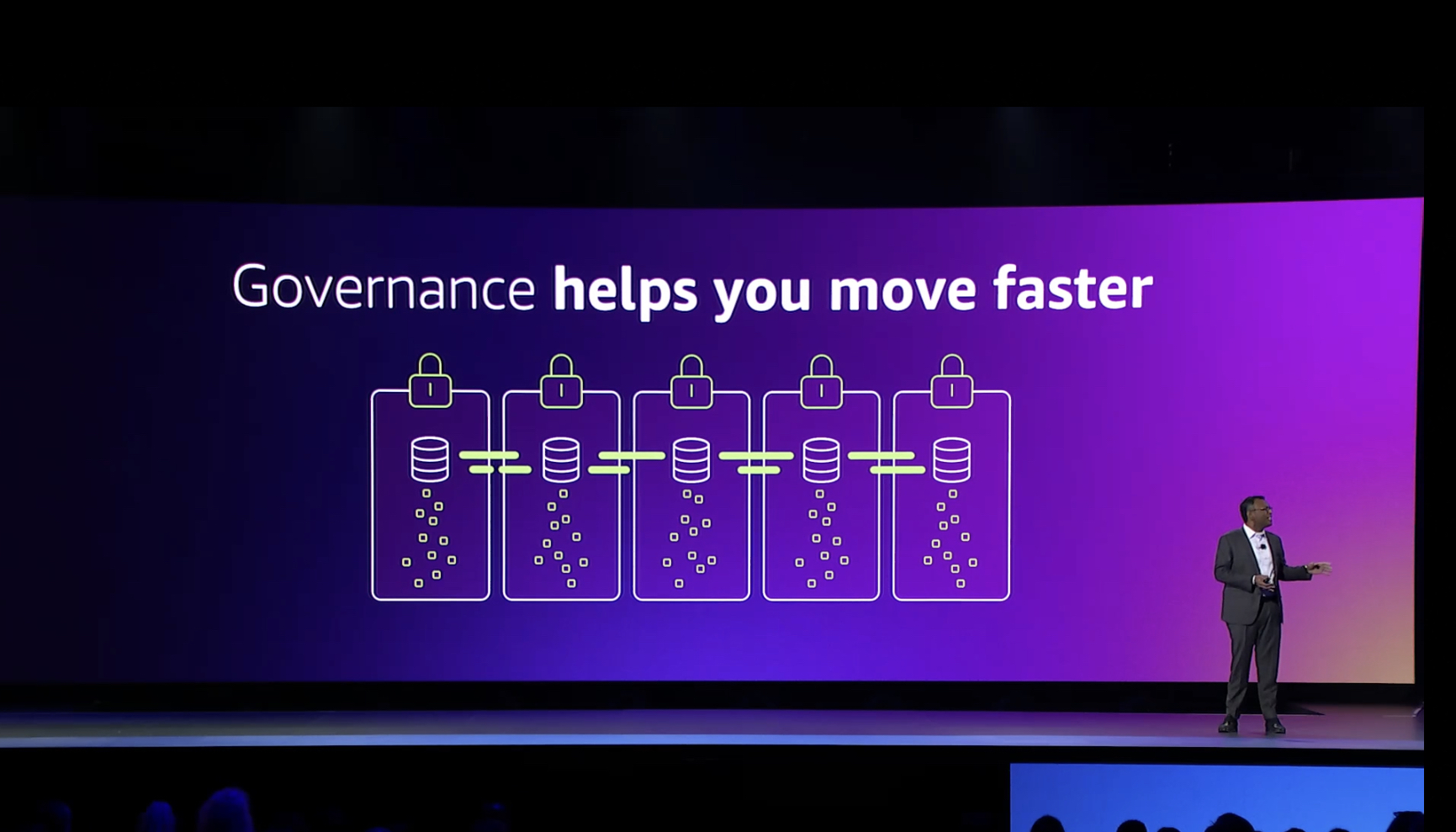

9:31am: Now getting to material that is interesting to CIOs: governance that helps organizations move faster. (Good, effective governance, that is.) But enterprises need to address data access and privileges across more use cases, and figuring out which data consumers in the organization have access to what data can itself be time consuming. "The challenges range from manually investigating data clusters to see who has access to designating user roles with custom code that is really simply too much heavy lifting involved and failure to create these types of safety mechanisms." A series of new AWS data governance announcements are no doubt about to be made.

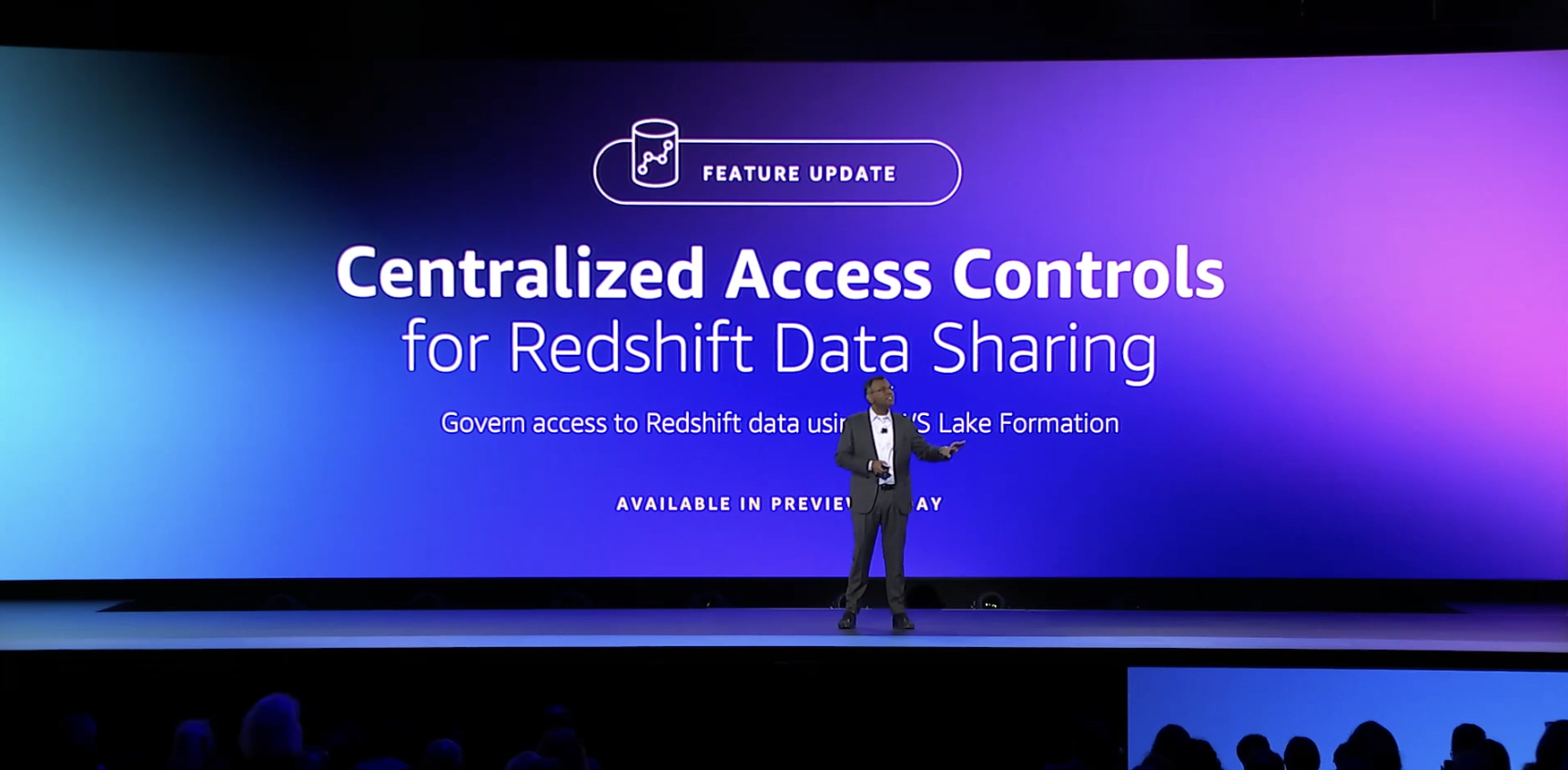

9:37am: And indeed, a rapid series of data governance announcements were made for AWS. Going beyond row/cell governance added last year, unveils new Centralized Access Controls for Redshift Data Sharing using AWS Lake Formation. Announces Amazon SageMaker ML Governance. Has new Role Manager, Model Cards, and Model Dashboard features to enable governance and auditability for end-to-end lifecycle ML development. And finally, cites the Amazon DataZone announcement from earlier in the week.

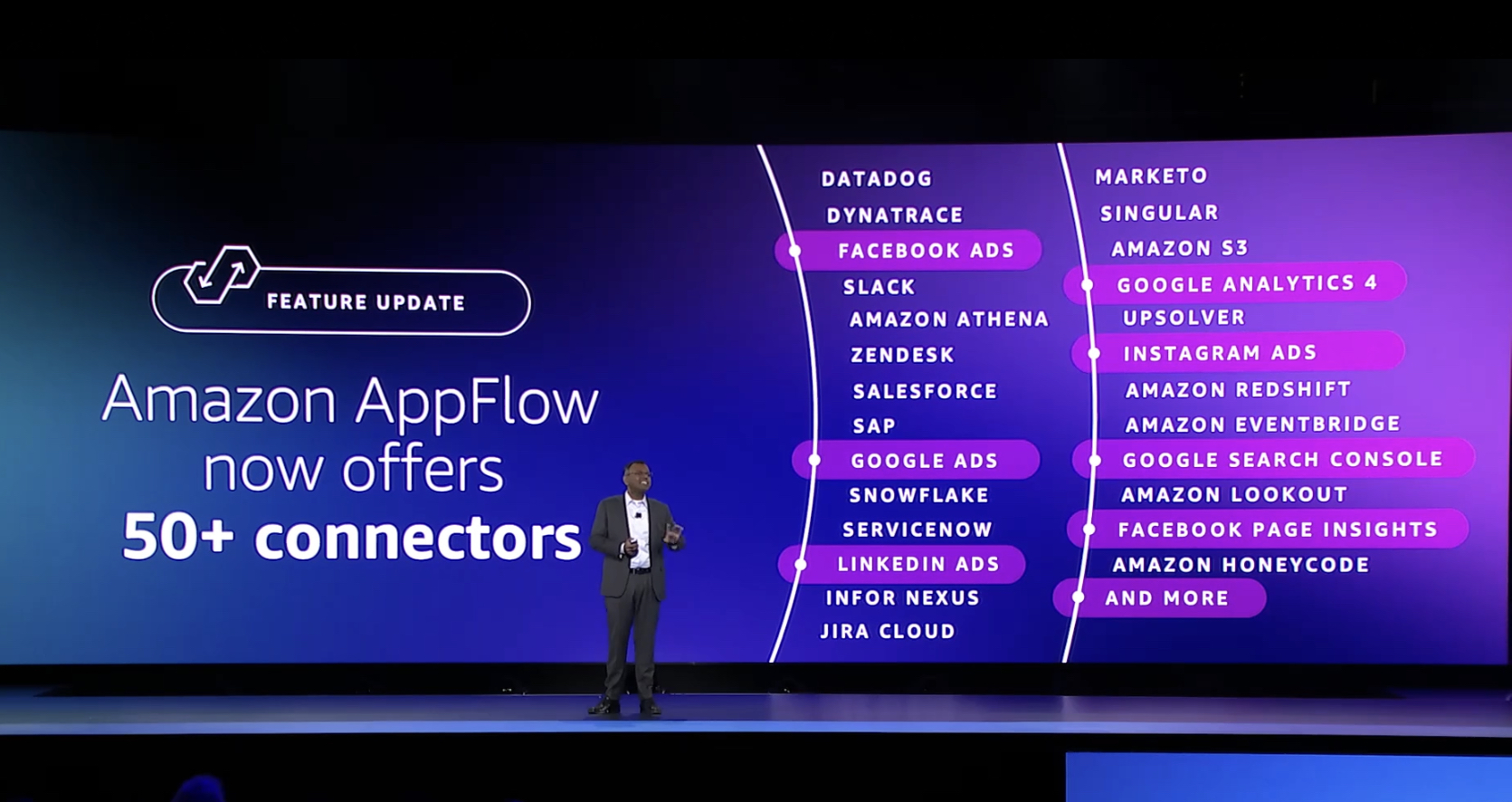

9:49am: Talking about data sprawl and the need to bring all the data together. Amazon AppFlow is a core tool to bring in SaaS data and synchronize it with internal data stores such as data lakes, data warehouses, and systems of record. Announces 50 major new connectors for AppFlow including Facebook Ads, Google Analytics, and LinkedIn. In addition to offering new connectors in AppFlow, AWS is also doing the same for Data Wrangler in SageMaker. Note that SageMaker already supports popular data sources like Databricks and Snowflake.

9:58am: AstraZeneca up on stage sharing some remarkable stories about how fast they can run hundreds of concurrent data science and ML projects to turn insights into science. "The incredible pace that the scientists can unlock patterns by democratizing ML into an organization using Amazon SageMaker. We use the AWS Service Catalog to stand up templated and turnkey environments in minutes." Talks about easy remote data collection through data, AI, and ML.

10:04am: Swami back on stage to talk about the third and final plank of a strong data foundation: democratization. "Since I joined Amazon 17 years ago, I have seen how data can spur innovation at all levels, right from being an intern, to a product manager, to a business analyst with no technical expertise. One way is if you enable more employees to understand and make sense of data, take care to forge a workforce that is trained to organize, analyze, visualize and derive insights from your data, and cast a wider net for your innovation."

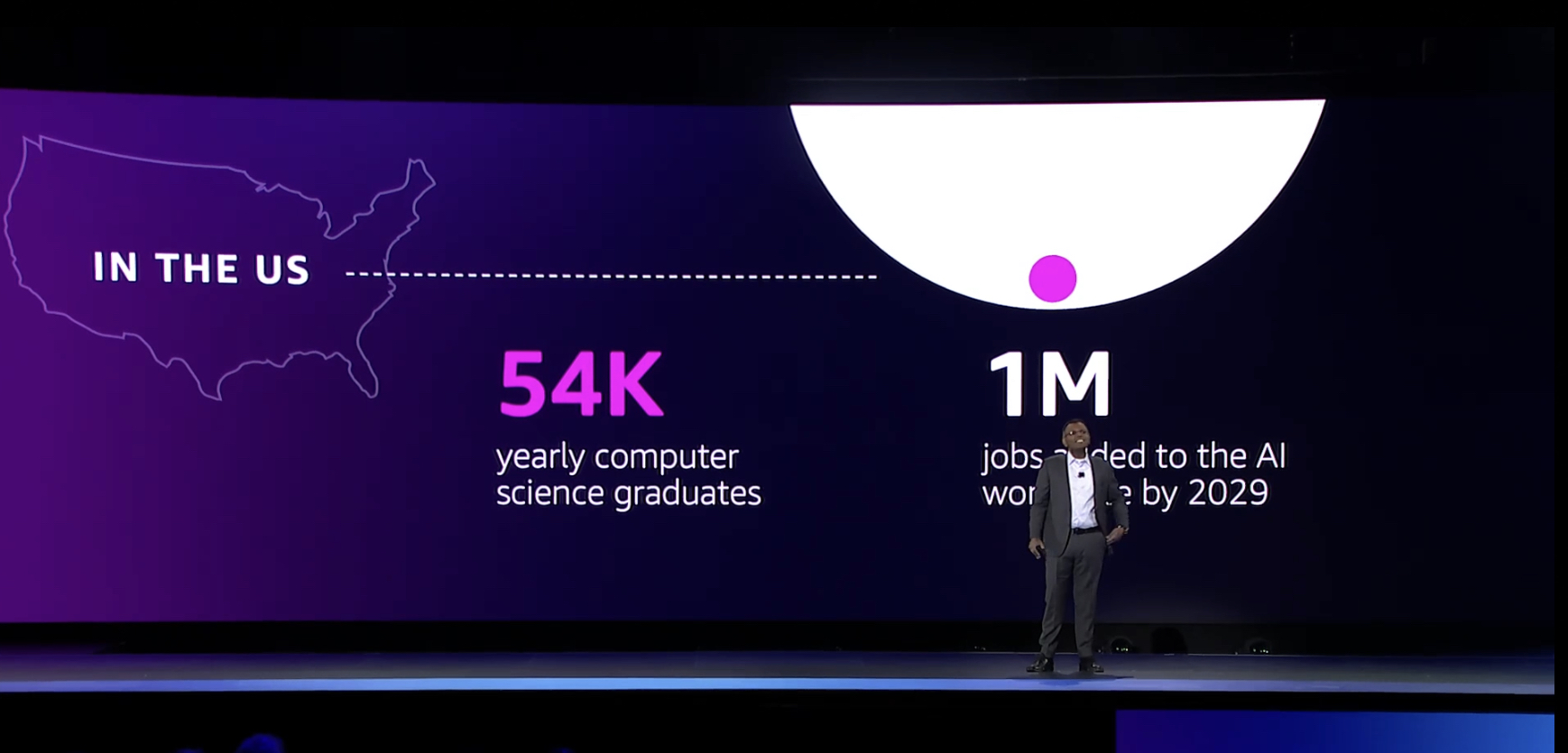

10:06am: To accomplish this, the world will need access to educated talent to fill the growing number of data and ML roles. There is a need for 1 million AI experts, says Swami. However, there are only 56 thousand computer science graduates a year. That is a huge gap. "The faculty members with limited resources they simply cannot keep up with the necessary skills to teach data management, AI and ML. If we want to educate the next generation of data developers, then we [need to make it] easy for educators to do their jobs. We need to train the trainers. That's why today I'm personally very proud to announce a new educator program for community colleges and emphasize through AWS."

For CIOs, today's data governance announcements in particular -- but also important new solutions to address the epic data sprawl issues that the cloud is creating -- will be of prime interest. Combined with AWS's increased cost/performance focus, this is a significant improvement in the way enterprise data can be managed in the cloud, especially in today's highly sophisticated machine learning and AI operations. While in my analysis these announcements are not sufficient to address all the enterprise data issues that cloud (especially public cloud and SaaS) introduces, it's a big step in the right direction.

Now it's vital for CIOs to make sure all these new capabilities have a clear place and effective realization within their ongoing cloud strategies. Democratization received good air time, but there is still much more to be done to achieve it. Many of the practical results will have to be realized in low code platforms and data orchestration tools that AWS does not offer, though AppFlow is increasingly promising. But the "future proof data foundation" message is an important one, and I think their argument holds water that AWS can largely represent this today, and will likely take organizations there in the near future.