Google Cloud's Ironwood ready for general availability

Google Cloud said its seventh-generation Tensor Processing Unit (TPU), known as Ironwood, will be generally available soon. The company also outlined new Arm-based Axion instances.

The announcement highlights how hyperscalers, primarily Google Cloud and Amazon Web Services, are deploying custom chips for AI workloads to diversify away from Nvidia and improve price-performance. Ironwood was announced at Google Cloud Next earlier this year.

AWS fired up its massive Project Rainier complex for Anthropic and then landed OpenAI as a customer, which is immediately procuring GPUs from AWS. AWS will announce Trainium3, which will feature a big performance boost, at re:Invent 2025 in December.

Against that backdrop, Google Cloud, which already holds a lead in custom processors, made its move with Ironwood. In a blog post, Google Cloud said its latest TPUs are designed for what it calls "the age of inference." In Google Cloud's view, the adoption of AI agents will require optimized infrastructure and strong price-performance.

Google Cloud, which counts OpenAI and Anthropic as customers, announced the following:

- Ironwood general availability. Ironwood delivers 10x the peak performance of TPU v5p and 4x the per-chip performance for both training and inference of TPU v6e, also known as Trillium.

- Anthropic will use Ironwood instances.

- Axion instances. Google Cloud announced that N4A, a cost-effective virtual machine, is now in preview. N4A offers 2x better price-performance than current-generation x86 virtual machines. Axion is based on Arm's Neoverse CPUs.

- C4A metal, Google Cloud's first Arm-based bare-metal instance, will be in preview soon.

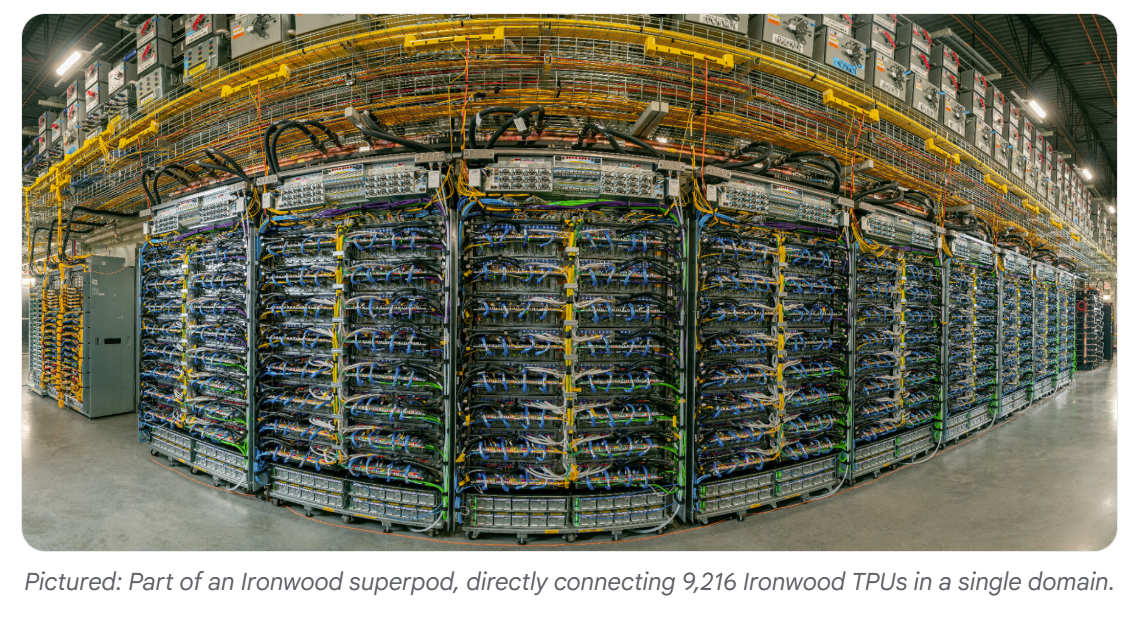

- Google Cloud is using Ironwood TPUs as a key layer of its AI Hypercomputer. Ironwood scales up to 9,216 chips in a single superpod.

The upshot is that the AI inference market is going to be much more competitive than the training market, which Nvidia dominates. Custom silicon from the hyperscalers, along with chips from AMD, Intel and Qualcomm, will all be in the mix.