The enterprise LLM questions you should be asking

Large language models are at an interesting juncture. LLM breakthroughs have slowed, and there are questions about whether they will lead to artificial general intelligence. Coupled with concerns about an AI infrastructure bubble, LLMs are going to be closely watched, especially since they're the key ingredient of agentic AI.

In the end, LLMs don't necessarily have to lead to some superintelligence to have a big impact on enterprises. Enterprise AI and the AI market that fascinates venture capitalists and Wall Street investors are two different markets. Enterprises can find plenty of AI returns even if LLMs stagnate for the next year.

With that backdrop and this week’s headlines, it's worth pondering the key LLM questions.

- Google launches Gemini 3, Google Antigravity, generative UI features

- Anthropic, Microsoft Azure, Nvidia ink $30 billion compute pact

- Microsoft launches Agent 365, a parade of AI agents at Ignite 2025

- Anthropic: Claude eyes real world impact, industrial use cases

Note: I don't have the answers, but I usually know the questions to ask. Here's a look at the key LLM questions as 2025 comes to a close.

Will LLMs, and the AI agents they power, upend software as a service? This debate has been bubbling up throughout the year. The LLMs vs. SaaS debate is far from settled, but it's no surprise that enterprise software vendors are scrambling to present themselves as platforms. No more cross-selling clouds. No more functional silos. Today, the masters of the cross-sell are talking platforms. Salesforce, Microsoft, Workday, ServiceNow and a cast of hundreds are creating AI agents that work across their applications. Meanwhile, OpenAI and Anthropic are looking to break the SaaS margin profile to woo enterprises. The idea is simple: Relegate systems of record to plumbing, where ChatGPT or Claude is the interface and workflow engine. It's early in this LLM vs. SaaS debate, but the SaaS crowd has plenty of disgruntled customers looking for alternatives. The running joke is that SaaS and healthcare are the two enterprise costs always guaranteed to go up. LLMs could be a SaaS replacement or just a nice negotiation tool.

- Among CxOs, SaaS platform fatigue setting in

- The big AI, SaaS, transformation themes to watch in 2025’s home stretch

- LLM giants need to build apps, ecosystems to go with the models

- Pondering the future of enterprise software

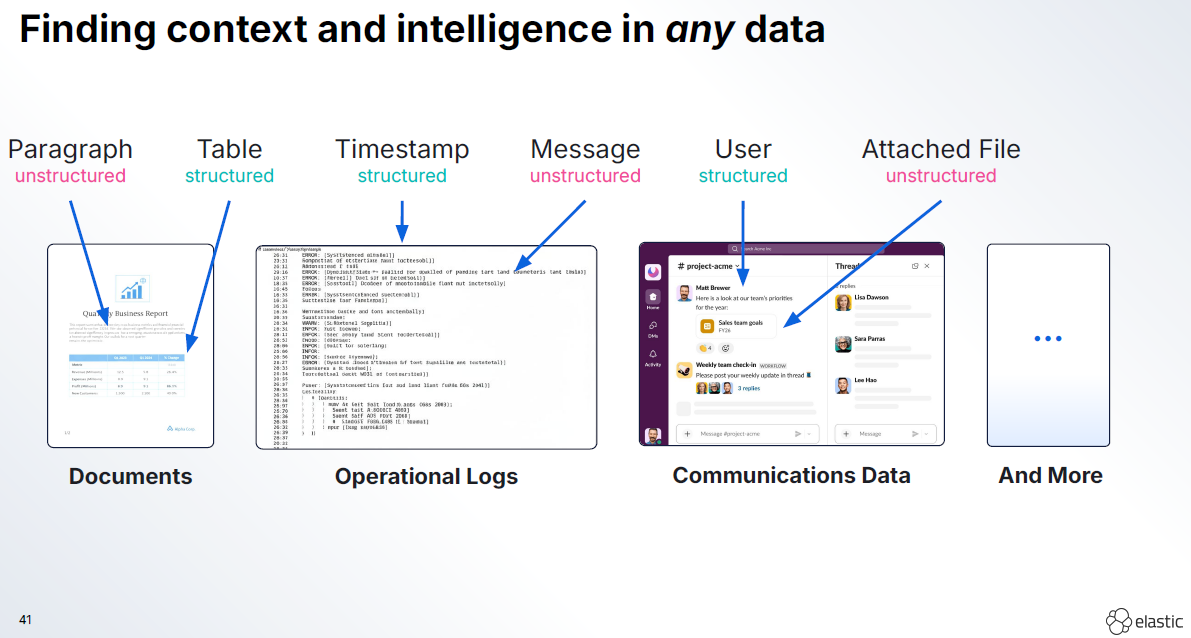

Five years from now, will we all say, 'those LLMs turned out to be kick-ass enterprise search'? The more I use LLMs, the more I think their greatest contribution is perusing structured and unstructured data and surfacing it easily. LLMs clearly collapse the time spent conducting searches and doing superficial research. Yes, LLMs will make stuff up, but they're a great starting point. When combined with enterprise data and repositories that have been useless for years, LLMs are revamping the search game for companies. Suddenly, context engineering is a thing.

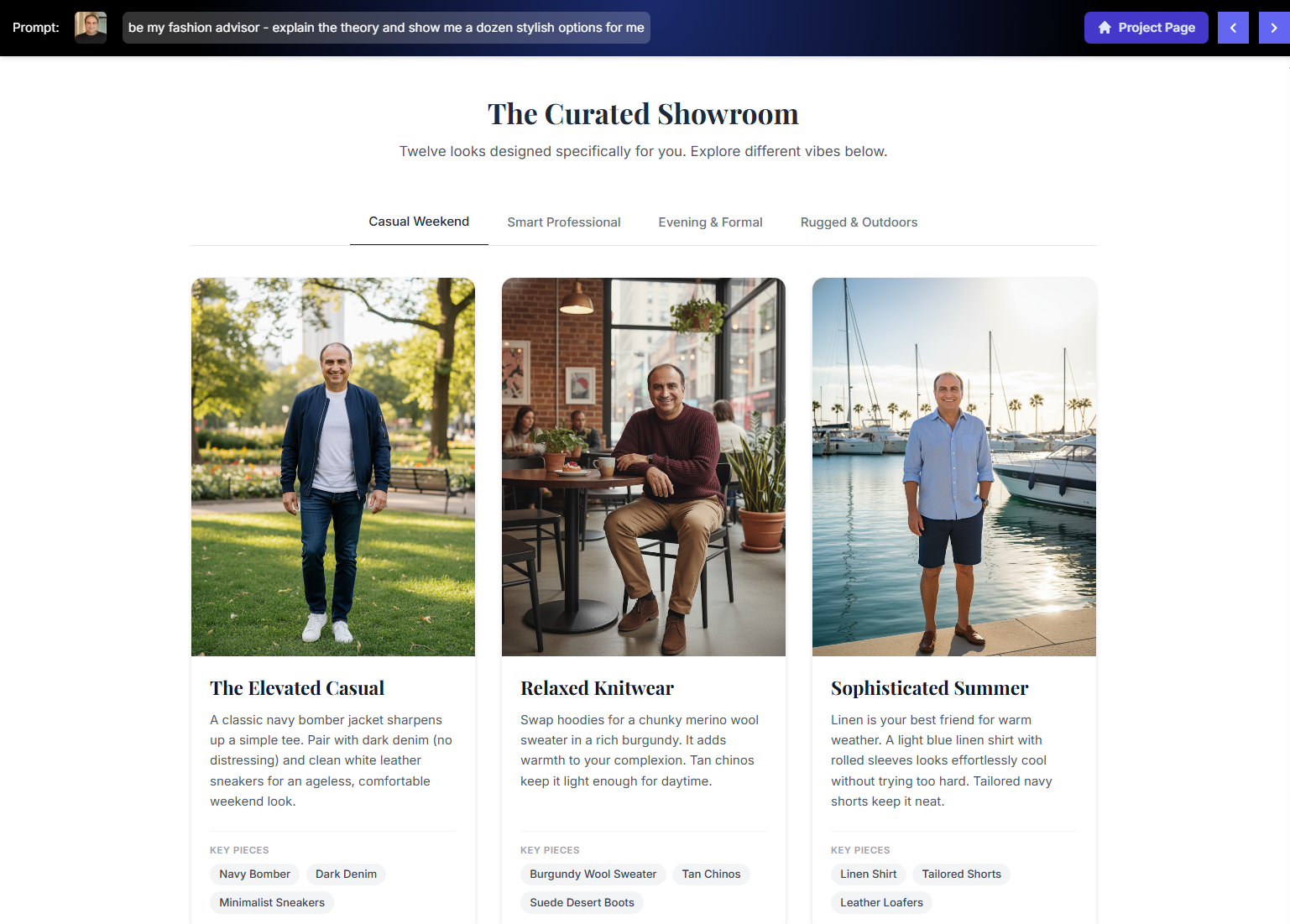

Is the future of UI generative? For years, enterprises have paid SaaS providers to serve as data stores, workflow models and user interfaces (that may or may not be swell). If the UI layer collapses, what exactly are you buying? Sure, SaaS providers are talking about how they could be headless platforms, but that'll likely mean lower prices. The idea that LLMs could spin up relevant user interfaces on the fly has been appealing, if a bit theoretical. However, Google's Gemini 3 launch features a lot of interface goodies where widgets and layout themes are presented on the fly. Suddenly, a search query can return an answer in a magazine format. Answers can include code for functions like mortgage calculators. According to Google, Gemini 3 knows good design principles. Watch these UI developments closely, because they will likely have a big impact on enterprise software.

- A look at the intersection of AI and customer experience

- Agentic AI: Is it really just about UX disruption for now?

- Target cuts deal with OpenAI as it plans customer experience overhaul

- Intuit, OpenAI forge integration pact: Why it could be a $100 million win win

Google published a generative UI paper to go along with the Gemini 3 launch. The company said: "Our evaluations indicate that, when ignoring generation speed, the interfaces from our generative UI implementations are strongly preferred by human raters compared to standard LLM outputs. This work represents a first step toward fully AI-generated user experiences, where users automatically get dynamic interfaces tailored to their needs, rather than having to select from an existing catalog of applications."

Will AI agents be implemented without forward deployed engineers? The most popular technology job today is the "forward deployed engineer." These folks are technical experts who can also consult and co-innovate with customers. Forward deployed engineers swoop in, surface use cases, get the data models in shape and then help you implement AI agents and your digital workforce. The big idea is that forward deployed engineers can get you from pilot to production faster. A cynic would say software vendors are starting to look like consultants, which is fine since consultants are also offering software. Palantir made forward deployed engineers and its data ontology popular, and now enterprise vendors are all over it. Forward deployed engineers are swell, but they likely inflate the price tag for enterprises. The big question is when AI agents will be able to do a lot of this work.

- CCE 2025: AI agents: Dreams, reality and what's next

- Enterprise AI: It's all about the proprietary data

- AI agents, automation, process mining starting to converge

ServiceNow is investing in forward deployed engineers as well as automating as much of the implementation as possible. Amit Zavery, ServiceNow's President, Chief Product Officer & COO, said: "We have 100-plus prepackaged workflows with Agentic built in. So, you don't have to do a lot of handholding to get going. Of course, there are going to be co-innovation required. There might be something specific for our customers. That's why we're investing in FD kind of a model with forward deployed engineers who are really AI black belt who can work very closely with customers on the AI expertise required for some of those use cases."

Are LLMs a dead end in the pursuit of artificial general intelligence? Call it AGI. Call it superintelligence. The working theory is that LLMs will lead to AGI. All the cool kids think so. Here's the recipe for AGI: Advance LLMs with a ridiculous amount of GPUs, data centers, land and power and, bam, here's superintelligence. That simplified recipe is behind big valuations, lots of debt and monetization schemes that may or may not work out. If you're interested in a contrarian view, it's worth checking out this Wall Street Journal profile of Yann LeCun, who headed Meta's Fundamental AI Research group. LeCun argues that world models, which are trained on visual information instead of text, are the more promising path. The punchline of the WSJ story: "If you are a Ph.D. student in AI, you should absolutely not work on LLMs."