Why CxOs, enterprises need to follow OpenAI’s GDPval LLM benchmark

OpenAI has launched a new benchmark that grades large language models (LLMs) on real-world work tasks, and enterprises should take note as they weigh AI agents.

OpenAI unveiled GDPval, a system that grades LLMs on tasks that humans currently do. Yes, we know (since vendors tell us repeatedly) that AI collaborates with humans and doesn't replace them. But if you were to look at AI as a replacement for human labor, OpenAI's GDPval is likely to be handy.

In a blog post, OpenAI noted that GDPval is "a new evaluation designed to help us track how well our models and others perform on economically valuable, real-world tasks."

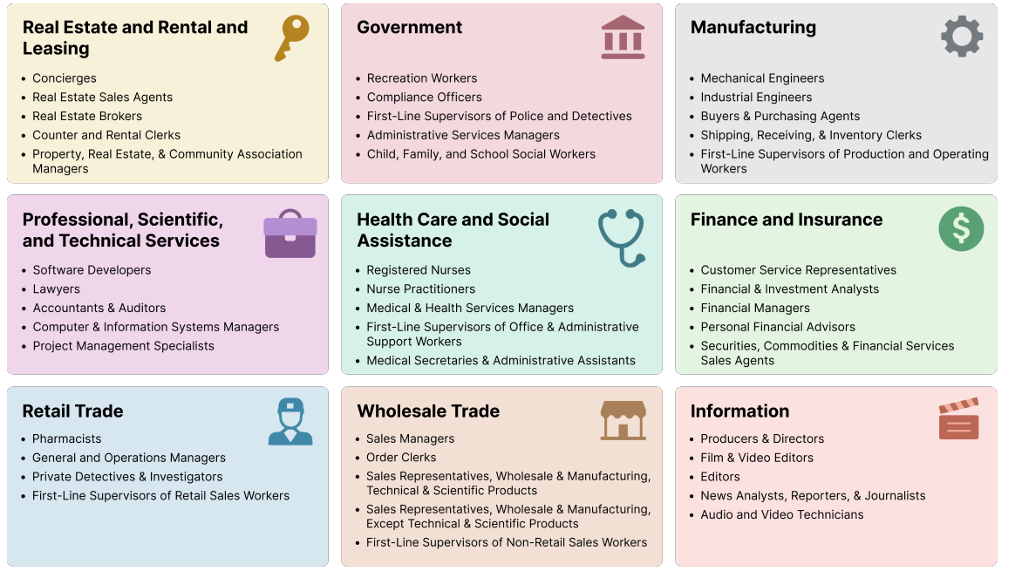

Why GDPval? OpenAI started with Gross Domestic Product (GDP) as an economic indicator and took tasks from the occupations and sectors that contributed the most to GDP.

Here's a look at the occupations and tasks in GDPval.

The win for enterprises is that CxOs can use GDPval to better match models to use cases. The win for the rest of us is that we can now compare models on real-world tasks instead of math exams and other benchmarks that feel abstract to most people.

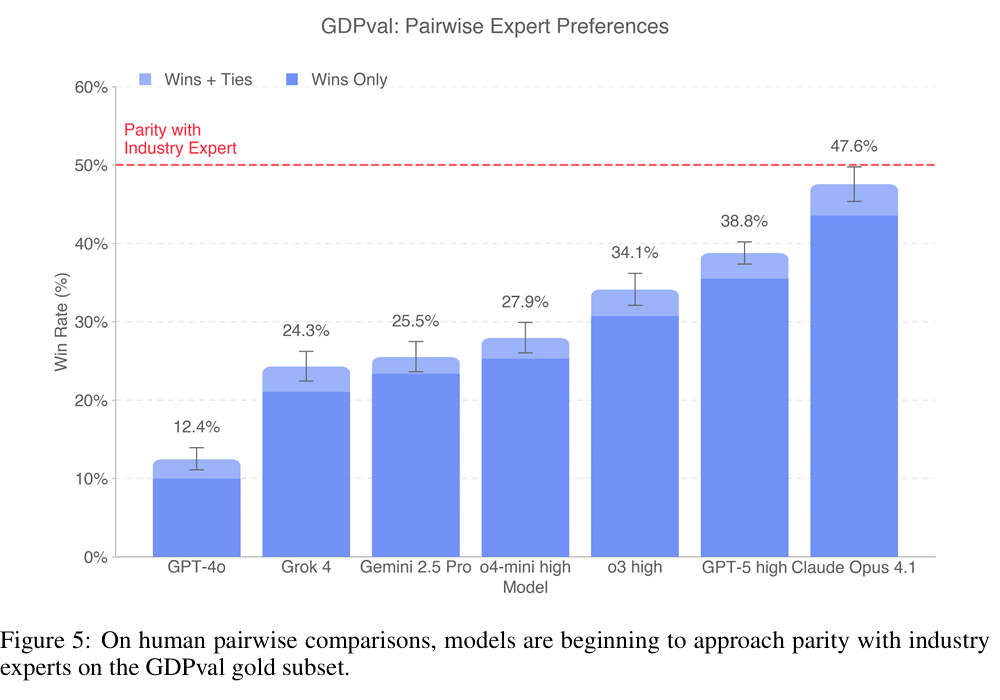

Based on GDPval's topline figures, Anthropic's Claude Opus 4.1 is the leader for work tasks, followed by GPT-5.

OpenAI said:

"People often speculate about AI’s broader impact on society, but the clearest way to understand its potential is by looking at what models are already capable of doing. History shows that major technologies—from the internet to smartphones—took more than a decade to go from invention to widespread adoption. Evaluations like GDPval help ground conversations about future AI improvements in evidence rather than guesswork and can help us track model improvement over time."

As for returns, OpenAI also noted:

"We found that frontier models can complete GDPval tasks roughly 100x faster and 100x cheaper than industry experts. However, these figures reflect pure model inference time and API billing rates, and therefore do not capture the human oversight, iteration, and integration steps required in real workplace settings to use our models. Still, especially on the subset of tasks where models are particularly strong, we expect that giving a task to a model before trying it with a human would save time and money."

A few thoughts on how CxOs may approach GDPval:

- GDPval can make it easier to compare digital and human labor costs. For instance, a model that delivers good work in one shot is more valuable than one that requires a lot of back-and-forth, since every extra round drives compute costs up.

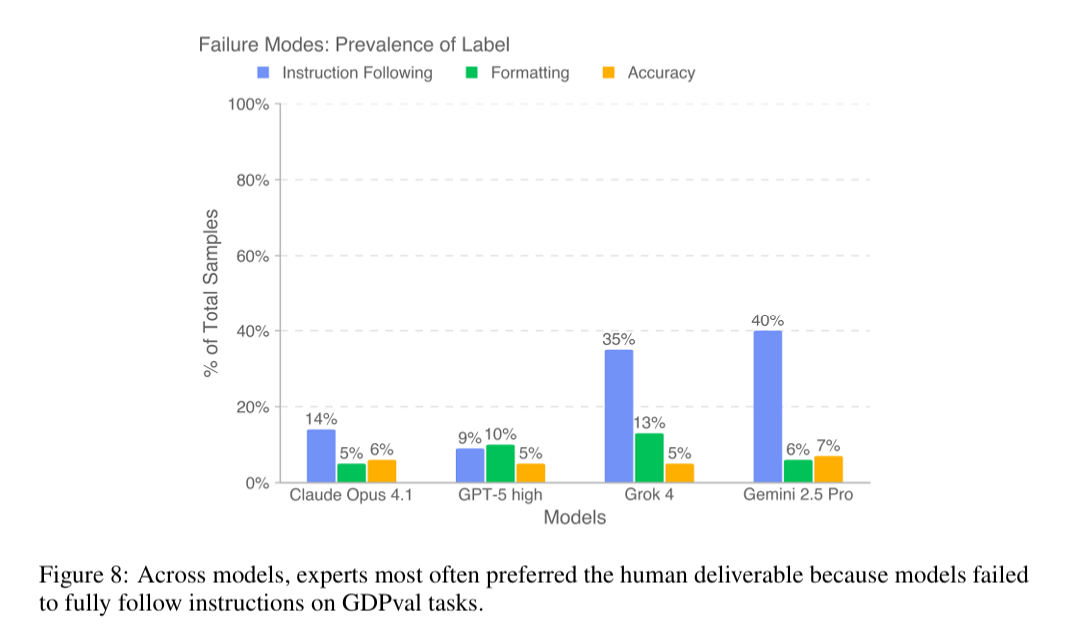

- OpenAI's GDPval paper also documents failure modes and the reasons behind them. Failure rates are going to be critical for proper evaluation.

- The benchmark also provides an opportunity to think about workflows and processes before humans work on the task. OpenAI's point about using AI to get a task partly to the finish line is valid. However, it's also worth noting what Harvard Business Review just reported on sloppy AI work.

- Where to keep humans in the loop is probably the most important decision to make when using AI to automate processes. GDPval gives you a jumping-off point for that discussion.
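
The cost comparison in the first point above, together with OpenAI's caveat that its 100x figures exclude human oversight, can be made concrete with a rough sketch. Everything here is an assumption for illustration: the `TaskCosts` fields, the two helper functions, and every number are hypothetical and do not come from GDPval or OpenAI's paper.

```python
from dataclasses import dataclass


@dataclass
class TaskCosts:
    """Hypothetical inputs for a single task (not GDPval data)."""
    expert_hours: float              # hours an expert needs to do the task unaided
    expert_hourly_rate: float        # fully loaded cost of one expert hour
    model_cost_per_attempt: float    # API spend for one model attempt
    attempts: int                    # rounds of prompting until the output is usable
    review_hours_per_attempt: float  # human oversight time spent on each attempt


def human_only_cost(c: TaskCosts) -> float:
    """Cost of handing the whole task to a human expert."""
    return c.expert_hours * c.expert_hourly_rate


def model_assisted_cost(c: TaskCosts) -> float:
    """Inference spend plus the human review that each attempt requires."""
    inference = c.attempts * c.model_cost_per_attempt
    oversight = c.attempts * c.review_hours_per_attempt * c.expert_hourly_rate
    return inference + oversight


# Same task, same rates; only the number of rounds differs.
one_shot = TaskCosts(4.0, 100.0, 1.0, attempts=1, review_hours_per_attempt=0.5)
many_rounds = TaskCosts(4.0, 100.0, 1.0, attempts=10, review_hours_per_attempt=0.5)

for label, costs in (("one shot", one_shot), ("ten rounds", many_rounds)):
    print(f"{label}: model-assisted {model_assisted_cost(costs):.2f} "
          f"vs human-only {human_only_cost(costs):.2f}")
```

With these made-up inputs, the one-shot case costs 51 against 400 for the human-only route, while ten rounds of back-and-forth costs 510, more than doing the task by hand. In this toy model the API bill is a rounding error; the review time attached to each attempt is what moves the total.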
