Hitachi Digital Services: A deep dive on what it does, IT, OT, AI strategy

Hitachi Digital Services is looking to leverage its ability to integrate operations technology (OT) and information technology (IT) combined with industry domain knowledge, data and artificial intelligence (AI) know-how, and cloud expertise to drive growth.

In many ways, Hitachi Digital Services (HDS) is reintroducing itself to the technology industry following its late-2023 spin-off into an independent subsidiary of Hitachi. Here’s a look at HDS and everything you need to know from its first annual US Analyst & Advisor Day, May 20–21, 2025, in Frisco, Texas.

Background

HDS sits within the Japanese conglomerate Hitachi, which was founded in 1910 and has a long history in IT, R&D, and mission-critical systems across multiple industries. HDS was previously part of Hitachi Vantara before being spun off into a separate entity in November 2023. HDS focuses on cloud, data, Internet of Things (IoT), AI, and integration of OT and IT. Hitachi Vantara is focused on storage systems.

For the parent company, HDS is part of Hitachi’s Digital Systems & Services group. Toshiaki Tokunaga, CEO of Hitachi, is betting on a new management plan called Inspire 2027 that will drive growth for the conglomerate.

In April, on Hitachi’s fourth-quarter earnings call, Tokunaga said the company can leverage its ability to offer IT, OT, and products together to “demonstrate our unwavering commitment to transforming Hitachi into a digital-centric company.” Hitachi’s strategy is to remain decentralized but leverage a digital core to create a more unified company across its Energy, Mobility, Connective Industries, and Digital Systems and Services units. Digital Systems and Services accounts for 28% of Hitachi’s revenue.

Hitachi’s operating model, called Lumada, has been in place since 2016 and has gone through several versions. Lumada 3.0 aims to combine Hitachi’s domain knowledge and capabilities and turbocharge them with AI.

Understanding where HDS sits in the company shows how it can draw on R&D assets and capabilities across the parent to deliver cutting-edge systems. That said, HDS isn’t exactly a household name in North America, which represents a small portion of Hitachi’s overall $65B revenue.

Even so, Hitachi’s technology is ubiquitous in global railways, hospitals, financial services firms, and other places. HDS CEO Roger Lvin boils down the company’s mission: “If I distill our mission: We build, integrate, and operate mission-critical applications.”

“These mission-critical applications, often infused with what we refer to as the Japanese quality and Japanese process things, cannot go down and have real-life implications when they do not work,” says Lvin.

AI strategy

HDS offers multiple services such as advisory on processes, cloud migrations, and transformation roadmaps; smart enterprise technology for manufacturing, IoT, AI, and connected digital solutions; transformation services such as consolidation, migrations, process optimization, system integration, and automation; and managed services for applications, cloud, incident management, and other areas.

But the vendor conversation today starts with the AI strategy. HDS executives briefed analysts on multiple parts of their business and on key topics including IoT, Industry 5.0, and ERP, but AI is the connective tissue across the company as well as its parent, Hitachi.

HDS’s AI strategy revolves around architecture and bringing proofs of concept to production for mission-critical applications. The strategy also aims to weave AI into operations throughout the Hitachi conglomerate.

What sticks out about HDS’s AI strategy is that it is decidedly practical and potentially future-proof, given its emphasis on architecture. AI isn’t about buzzwords but, rather, new tools for mission-critical applications.

Prem Balasubramanian, CTO of HDS, said the company didn’t want to set up a separate AI team, because it wanted to go with a holistic approach. “We wanted to establish a team that works with every facet of this company, integrating AI into the workforce and integrating AI into what we build,” he said.

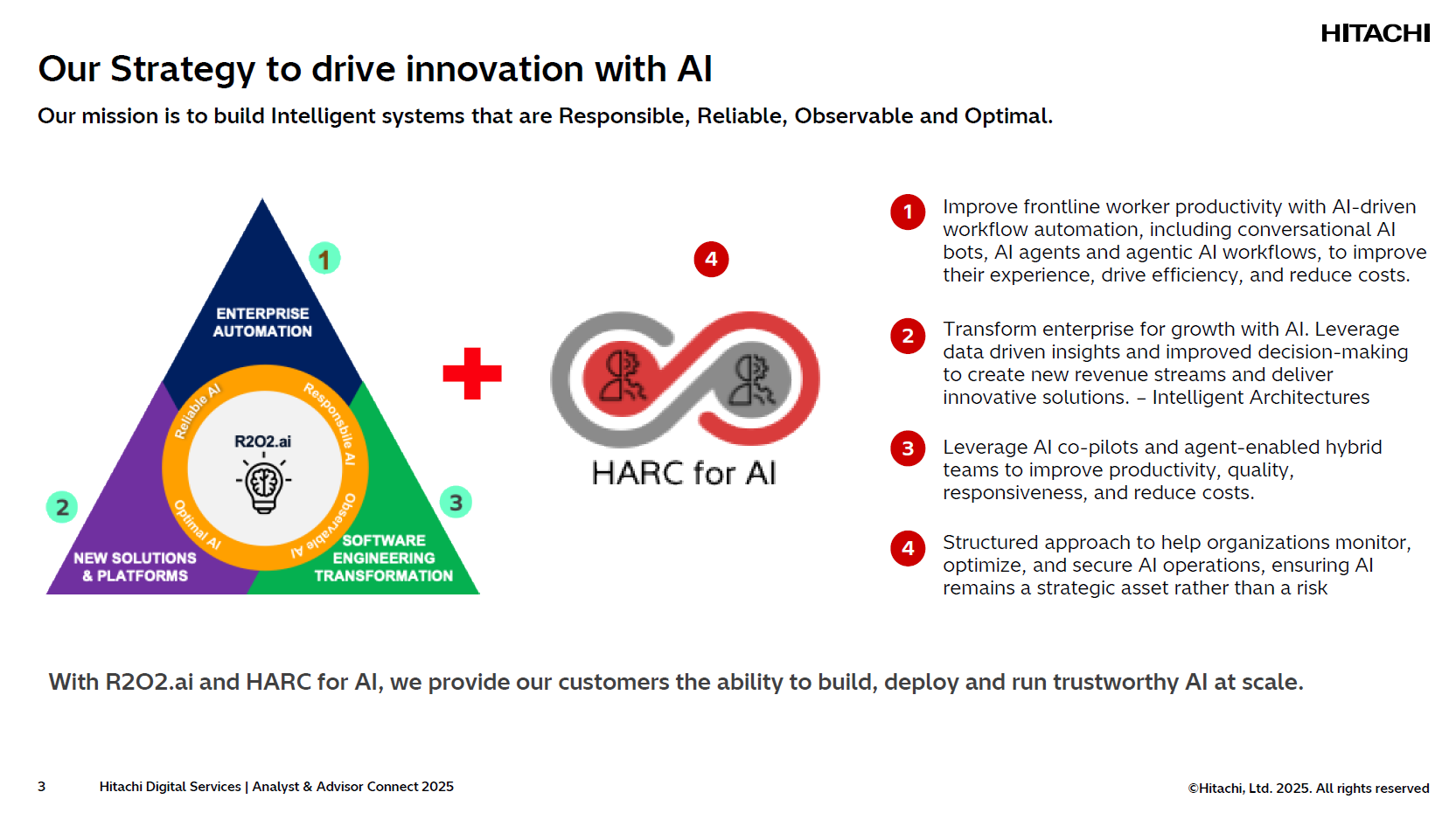

Focused on taking proofs of concept into production, Balasubramanian said, HDS didn’t want to chase frameworks for technologies that would be commoditized, such as retrieval-augmented generation (RAG) or even models. HDS’s strategy revolves around R2O2.ai, a set of prebuilt and reusable AI libraries and blueprints; responsible AI tenets; and HARC for AI, an end-to-end observability, security, and governance platform.

Hitachi Application Reliability Centers (HARC) for AI was announced in April as a service to help enterprises run AI and generative AI (GenAI) applications more reliably and efficiently. HARC for AI is designed to keep costs in check, limit performance degradation, and provide oversight of models.

Reliable, Responsible, Observable and Optimal AI, or R2O2.ai, was launched in late 2024 as a framework to bridge the gap between proof-of-concept projects and scalable AI deployments.

“We firmly believe that when you take an AI workload from a proof of concept and you really want to productionize it in an enterprise, you need to ensure that it’s responsible. You need to ensure it’s reliable. That the answers are correct consistently. You need to ensure your explainability and auditability, which is observability, and you need to ensure you’re spending the right amount of money on this,” Balasubramanian said.
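To make the four criteria in that quote concrete, here is a minimal Python sketch of a production-readiness gate. It is an illustration only, not how R2O2.ai or HARC for AI actually work: the `EvalRun` and `Thresholds` types, every field name, and all threshold values are hypothetical and chosen purely for the example.

```python
from dataclasses import dataclass


@dataclass
class EvalRun:
    """Hypothetical summary of one evaluation run of an AI workload."""
    answers_correct: int      # answers judged correct in the evaluation set
    answers_total: int        # total answers in the evaluation set
    policy_violations: int    # responsible-AI policy violations found
    traced_requests: int      # requests that carry a full audit trace
    requests_total: int       # total requests observed
    monthly_cost_usd: float   # observed or projected monthly spend


@dataclass
class Thresholds:
    """Illustrative limits; real values would be set per workload."""
    min_accuracy: float = 0.95
    max_policy_violations: int = 0
    min_trace_coverage: float = 0.99
    monthly_budget_usd: float = 10_000.0


def readiness_report(run: EvalRun, limits: Thresholds) -> dict:
    """Map each of the four criteria to a pass/fail flag."""
    accuracy = run.answers_correct / run.answers_total if run.answers_total else 0.0
    coverage = run.traced_requests / run.requests_total if run.requests_total else 0.0
    return {
        "reliable": accuracy >= limits.min_accuracy,
        "responsible": run.policy_violations <= limits.max_policy_violations,
        "observable": coverage >= limits.min_trace_coverage,
        "optimal": run.monthly_cost_usd <= limits.monthly_budget_usd,
    }


def ready_for_production(run: EvalRun, limits: Thresholds) -> bool:
    """A workload passes the gate only if all four criteria hold."""
    return all(readiness_report(run, limits).values())
```

In this sketch, a proof of concept that answers accurately but exceeds its budget would fail only the "optimal" check, which is the kind of gap between pilot and production that the quote describes.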

Architecture also becomes essential as enterprises adopt AI agents. Balasubramanian argued that agents aren’t a separate category as much as a connector to existing systems. “The bulk of agentic AI is existing systems, and you have to integrate agents and AI into them to get more revenue, acquire customers, and retain them,” said Balasubramanian. “Our view of agents is that it’s just about technology. We will use all the technology available, but we think of it in domains. One is industrial, vertical use cases. Another is applications and how they interact with the real world. Architecture is going to be the future.”

Balasubramanian said business value will be driven by delivery of applications that use AI agents seamlessly.

Chetan Gupta, a research and development leader at Hitachi, said the company’s research priorities revolve around simulation, reinforcement learning, and industrial and enterprise transformation.

“We believe AI essentially is a tool to transform enterprise operations and industrial operations, and that’s what we will enable,” said Gupta. “So the way we do things today will be different from the way things will be done tomorrow.”

The ultimate challenge is moving from proof of concept to production. For that, HDS is focused on training industrial models for specific use cases and reliability.

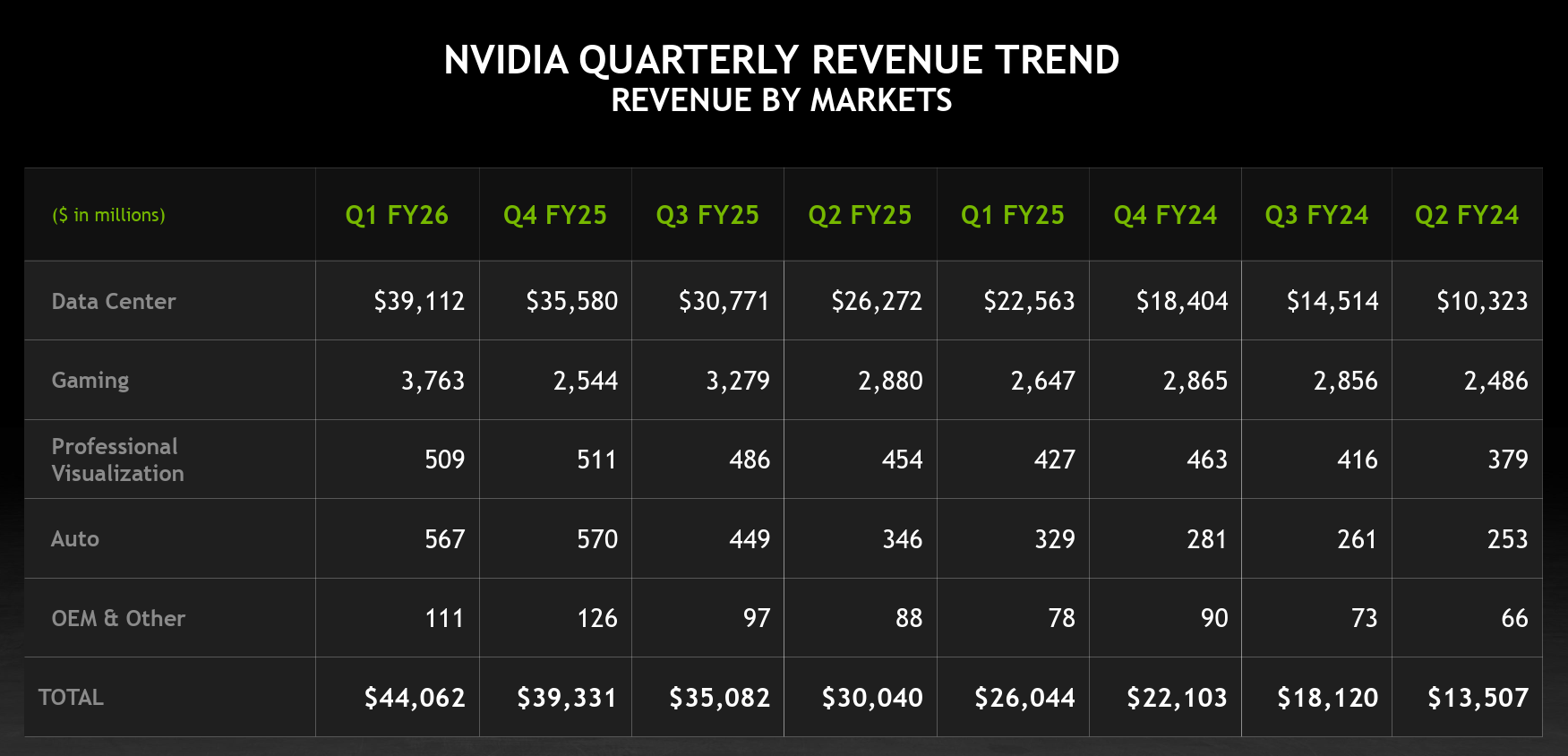

As for partnerships, HDS is vendor-neutral and has worked with Nvidia on multiple use cases across logistics, manufacturing, energy, and transportation.

The cloud imperative

Balasubramanian walked analysts through the company’s cloud partnerships with hyperscale giants such as Amazon Web Services (AWS), Google Cloud, and Microsoft Azure; case studies; and use cases.

“Every use case that we’ve touched upon, we’ve had cloud data in it,” said Balasubramanian, who noted that HDS is running cloud services for multiple enterprises. HDS was able to save more than $20 million a year optimizing a large pharmaceutical company’s cloud infrastructure.

HDS will run cloud infrastructure, optimize it, and often add value with custom applications, said Balasubramanian. HDS has also moved multiple state and federal government agencies onto AWS FedRAMP cloud. In addition, HDS operates in the background for vendors servicing government customers.

Balasubramanian’s big takeaway is that cloud migrations are continuing. “We’ve got what we call a sprint to clouds. It’s essentially a way for us to quickly assess and help migrate to the cloud. We use some accelerators and some products that we work with,” said Balasubramanian. However, there are fewer lift-and-shift projects and more modernization projects that move applications to the cloud to take advantage of AI agents.

Chris Ansert, executive manager of North American Quality Systems and Technology Platforms at Toyota North America, walked through the automobile manufacturer’s Quality1 program, which is a platform to ingest data about product quality issues and resolve them.

The project, which uses HDS services and AWS cloud infrastructure to modernize legacy systems, connects R&D, production engineering, purchasing, production, sales, and service functions in North America to create a feedback loop for quality issues.

Industry use cases

HDS’s secret sauce is integrating OT, IT, and industry use cases. With its domain expertise, HDS can apply AI and the latest technologies to manufacturing, supply chains, and transportation networks.

Ganesh Bukka, global head of HDS’s Industry 4.0 business, outlined the company’s thinking on industry use cases and why many failed to scale. Bukka’s talk revolved around whether Industry 4.0 was a brilliant failure that set up the next evolution of technology.

“For the last decade or so, we talked about Industry 4.0 and every manufacturer was going to revolutionize assets and manufacturing. And this is not just manufacturing. It could be anything in the asset-heavy or even some cases in the asset-light industry. A lot of these initiatives did not scale beyond the pilots,” said Bukka.

Why? Siloed processes proliferated due to digital initiatives, interoperability was challenging, and data and AI skills weren’t developed. Security and culture were other big issues, added Bukka.

“Industry 4.0 was all about technology, but the problem is that IT teams built OT and refused to acknowledge what OT teams wanted. IT built great dashboards, which could give you intelligence, but it could not act autonomously from that intelligence and put it into actions,” explained Bukka.

Bukka argued that HDS is in a unique position for Industry 5.0 applications, given that it’s a system integrator with expertise in melding OT, IT, and complex engineering.

For the next generation of industrial applications, Bukka said there are five success factors:

- Human collaboration. Human/AI collaboration will be an important element, given that so many AI agents will come online.

- Industrial AI. Hyperpersonalization driven by AI will be critical for creating digital operators.

- Industrial edge computing.

- Industrial metaverse via digital twins, data, and AI.

- Sustainability.

Hitachi launched a digital factory in Hagerstown, Maryland, to build railcars. The institutional and process knowledge from other plants was incorporated into GenAI models, robotic models, and other models. The factory, built via a collaboration between Hitachi Rail, HDS, and Hitachi R&D, will be a showcase for the latest manufacturing technology and innovation.

Customers

HDS held multiple customer panels over the two days as well as breakout sessions for automotive, IT operations, cybersecurity, and enterprise applications. Some high-level takeaways:

- Penske Transportation Solutions outlined a project with HDS to create a proactive diagnostics model that predicts vehicle failures. The project reduced downtime for a fleet of more than 400,000 vehicles.

- Toyota Motor North America cited a collaboration on building a quality knowledge center using vector databases and AI models. The platform supports technical assistance for difficult repairs, reduced cycle time, and improved customer service.

- HDS and Verizon Business have a collaboration on healthcare IT and applications, with plans to move across all industries.

Here’s a look at HDS’s reach across the automotive industry, followed by healthcare/life sciences.

Takeaways

- HDS’s expertise in mission-critical applications and IT/OT convergence could give the company a competitive edge as AI evolves from horizontal use cases to vertical ones.

- As AI strategy and implementation become a boardroom issue in manufacturing, healthcare, life sciences, and energy, HDS’s approach could be valuable.

- What remains to be seen is whether HDS can leverage its conglomerate roots seamlessly while remaining focused on its core mission to bring unique value to enterprises.