A tour of enterprise tech inflection points

Technology executives are tossing around the term inflection point a good bit when it comes to agentic AI, quantum computing and any other not-quite-ready-for-primetime technology.

With that in mind, here's a tour of tech inflection points to watch. The issue with inflection points is that they don't come with time frames. Where relevant, I've attached a time frame to my believability scores.

Quantum computing accelerates

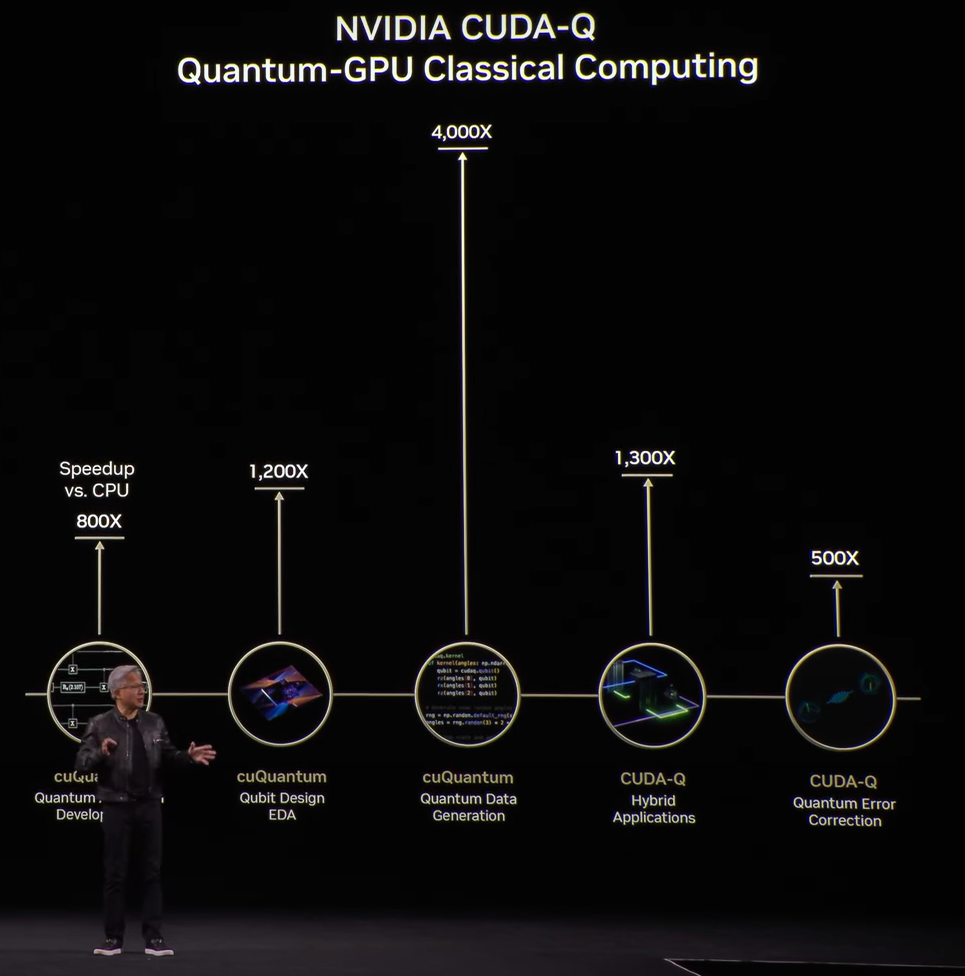

In a week where IBM outlined its quantum computing roadmap to a fault-tolerant quantum system by 2029 and IonQ bought Oxford Ionics for more than $1 billion, Nvidia CEO Jensen Huang said the technology is at an inflection point.

"Quantum computing is reaching an inflection point. We've been working with quantum computing companies all over the world in several different ways, but here in Europe, there's a large community," said Huang. "It is clear now we're within reach of being able to apply quantum computing and classical computing in areas that can solve some interesting problems in the coming years."

That’s quite a walk back from comments made in January, but ok.

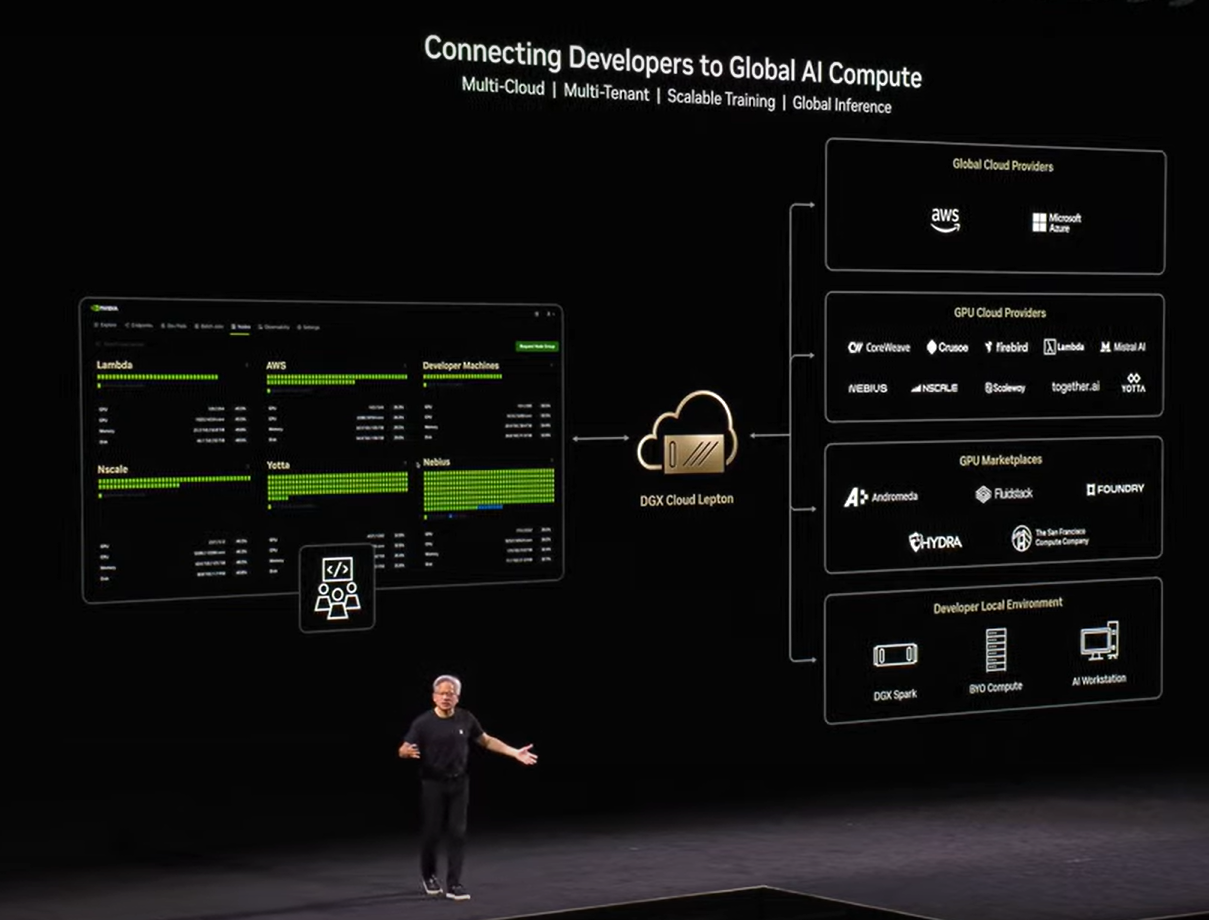

Huang said every next-generation supercomputer will have a quantum processor connected to GPUs. Nvidia has made its libraries available to quantum systems.

Both IonQ and IBM have big plans to scale quantum computers and network them together.

IBM CEO Arvind Krishna said the company is leaning into its R&D to scale out quantum computing for multiple use cases including drug development, materials discovery, chemistry, and optimization.

At Constellation Research we have a watercooler thread, and the debate over this quantum inflection point heated up. In one corner was Holger Mueller, who has argued it's the year of quantum computing (for the last three years). Mueller said CxOs need to think through quantum computing as part of long-term planning.

Esteban Kolsky, an analyst at Constellation Research and our chief distiller, said there are more real-world technologies to figure out and quantum is a lot of hype.

Mueller vs. Kolsky will be a fun quantum great debate. My take is that there will be a quantum inflection point and it’s closer than you think. Predicting the time frame is another matter entirely.

My inflection point believability on a scale of 1 to 10 with a three-year time horizon: 7.

Data platforms and AI converge

The takeaway from Snowflake Summit, Databricks Data + AI Summit and Salesforce's Informatica acquisition is that data platforms and AI have to converge if agents are going to get work done.

If you've been watching broad AI agent efforts from the likes of AWS, Microsoft Azure and Google Cloud, all of them are tethered to data stores and data fabrics.

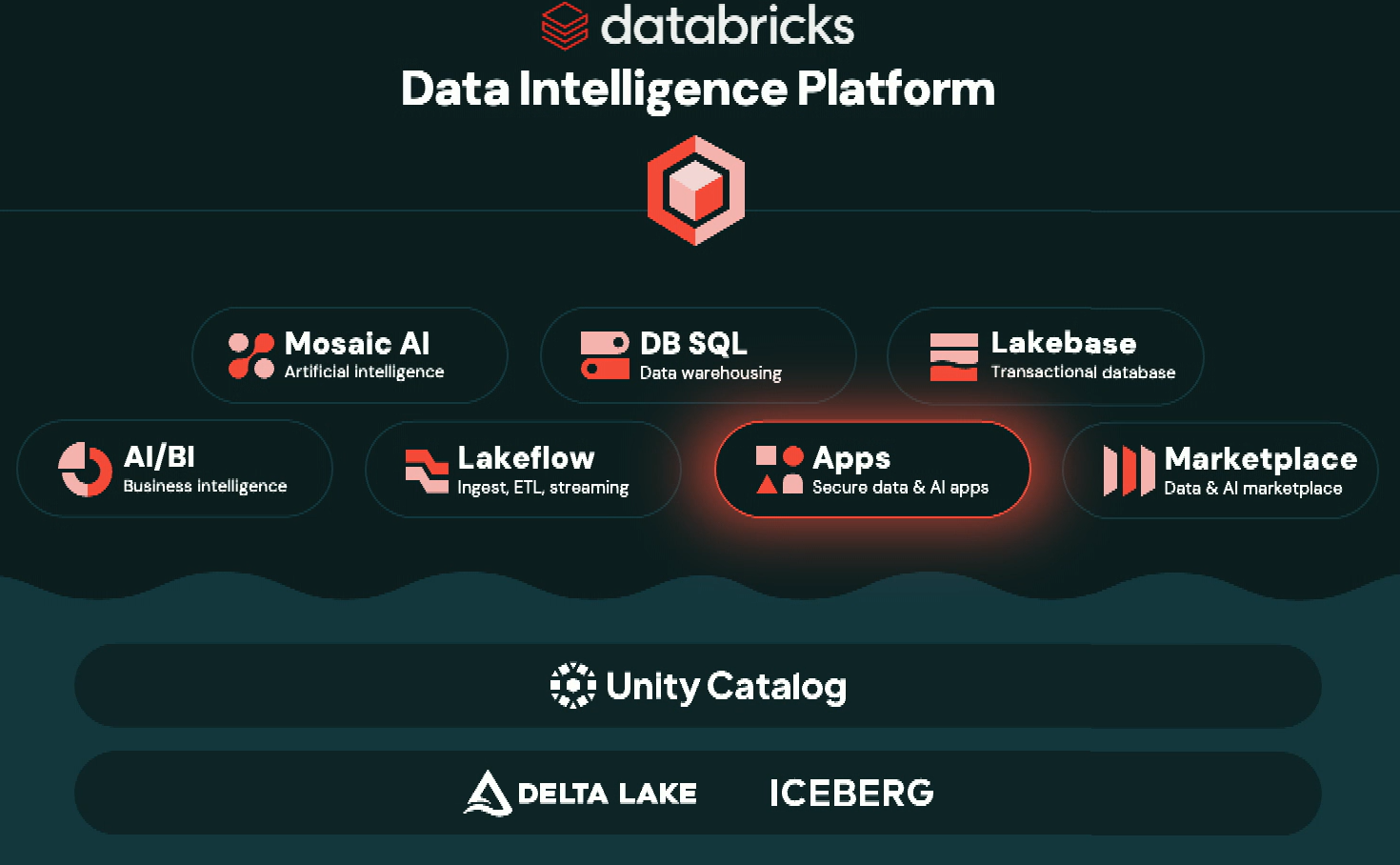

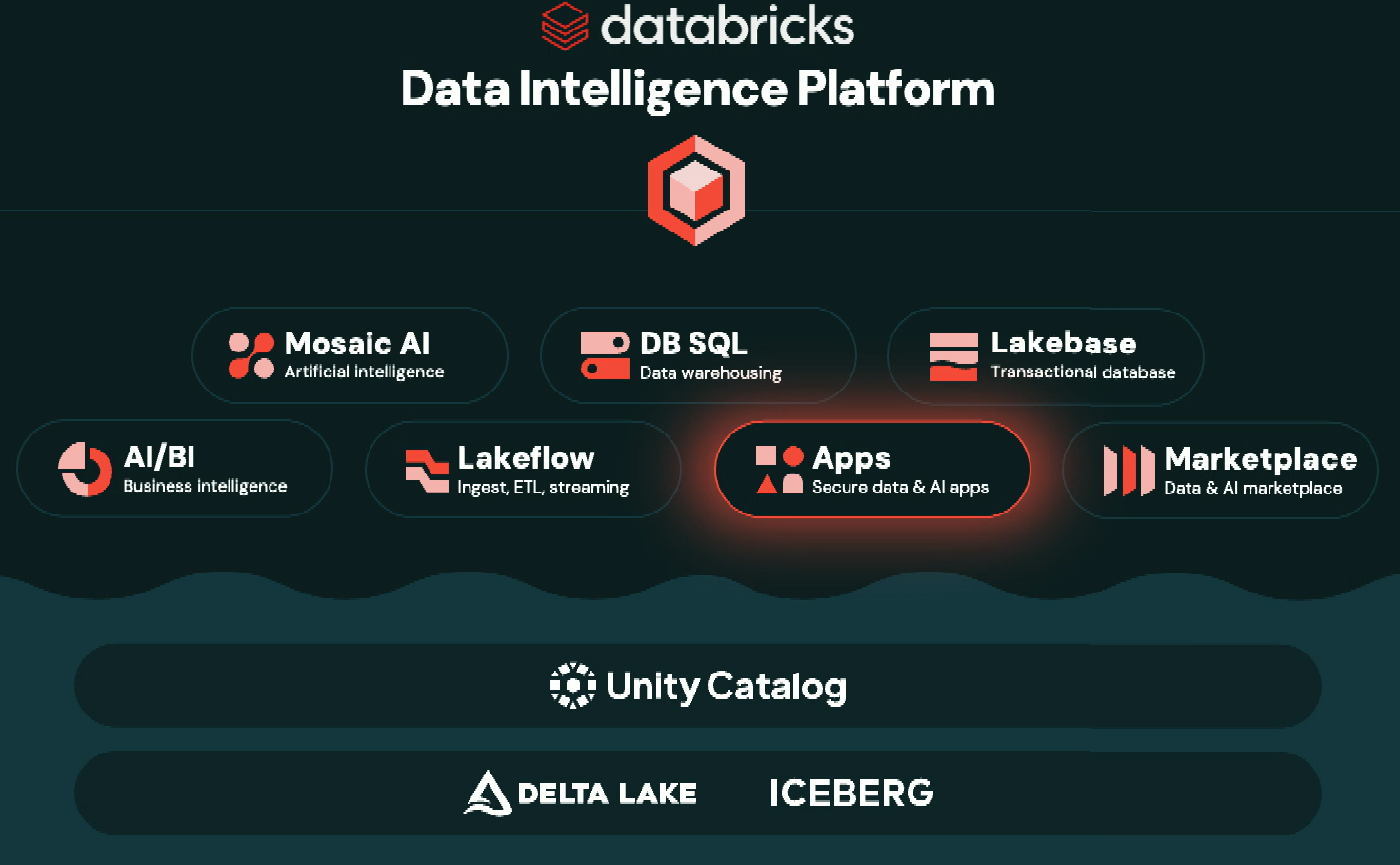

Given that backdrop, it's no surprise that Snowflake and Databricks are leaning the same way. Databricks appears to be more aggressive.

Constellation Research analyst Michael Ni said: "We’re entering a new era where data clouds and hyperscalers are racing to establish themselves as the dominant platform for AI-driven decision-making in their respective markets. The competition is no longer about warehouse performance—it’s about who owns the semantic layer, who governs the agent lifecycle, and who enables the next-gen data app ecosystem. With Lakebase, Agent Bricks, and Unity Catalog metrics, Databricks is asserting that ownership more broadly than ever before."

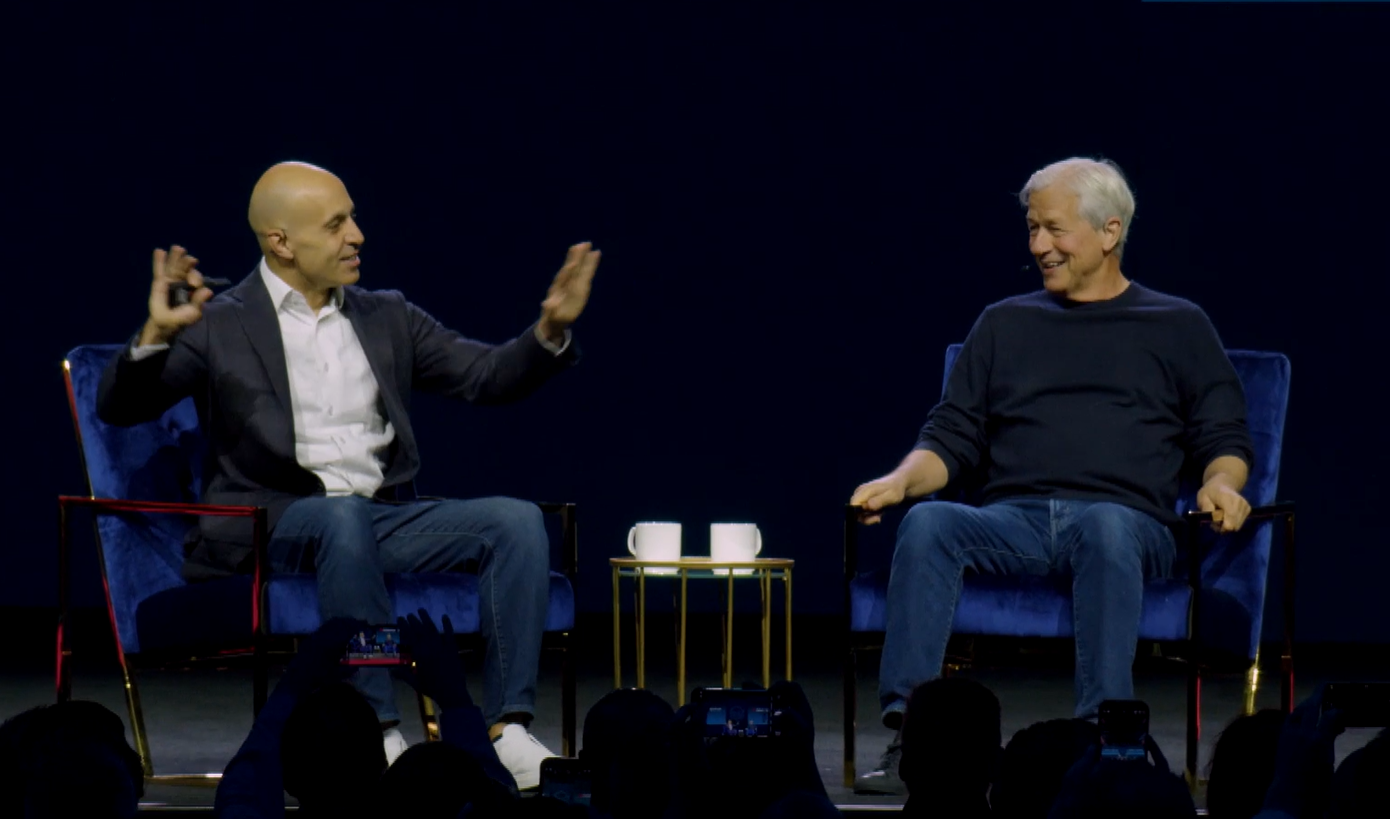

It's worth noting that JPMorgan Chase CEO Jamie Dimon said that data is still way harder than delivering on AI. It stands to reason that we're at an inflection point where agentic AI is really an extension of the data platform.

My inflection point believability on a scale of 1 to 10: 9.

Agentic AI

"We're just starting to use agents," Dimon said at the Databricks conference. See: JPMorgan Chase's Dimon on AI, data, cybersecurity and managing tech shifts

If JPMorgan Chase, which has an $18 billion technology budget, is just starting with AI agents, where do you think the rest of the enterprises sit?

To be sure, we have multiple inflection points with agentic AI. Consider:

- We're at an inflection point of vendor marketing about AI agents.

- We're at an inflection point for AI agent standards and for solving how these automated workers can share data and collaborate.

- We're at an inflection point where enterprises are going to swallow consumption models from SaaS vendors.

- And we're at an inflection point where every board wants an AI agent strategy to automate work.

Anthropic CEO Dario Amodei laid out the agentic AI dream. Speaking during Databricks Data + AI Summit, he said humans will go from conversing and collaborating with AI agents to developing fleets.

"An agent fleet is where a number of agents do things for you, and you are essentially the manager of the agents. It'll go from agent fleets to agent swarms, just when each agent in the fleet itself employs something. And so the human engineer is sitting the top hierarchy, like they're managing an organization or a company, and they still need to intervene. They still need to set direction," said Amodei.

Mainstream adoption? Not yet, but think 2026 for some scale. The biggest issue now is getting these agents to work well, and everything we’re hearing says there’s a lot to do before going into production. We've covered this topic plenty so let's move on.

- Agentic AI: Is it really just about UX disruption for now?

- RPA and those older technologies aren’t dead yet

- Lessons from early AI agent efforts so far

- Every vendor wants to be your AI agent orchestrator: Here's how you pick

- Agentic AI: Everything that’s still missing to scale

- BT150 zeitgeist: Agentic AI has to be more than an 'API wearing an agent t-shirt'

My inflection point believability on a scale of 1 to 10: 7.

Superintelligence

If you read OpenAI CEO Sam Altman's missive this week, you have a good feel for the vision even though it's a little murky.

We're at an inflection point for bold statements about AI.

"In the 2030s, intelligence and energy—ideas, and the ability to make ideas happen—are going to become wildly abundant. These two have been the fundamental limiters on human progress for a long time; with abundant intelligence and energy (and good governance), we can theoretically have anything else," said Altman.

This superintelligence thing will be great for humanity—or not. "The rate of technological progress will keep accelerating, and it will continue to be the case that people are capable of adapting to almost anything. There will be very hard parts like whole classes of jobs going away, but on the other hand the world will be getting so much richer so quickly that we’ll be able to seriously entertain new policy ideas we never could before," said Altman. "We probably won’t adopt a new social contract all at once, but when we look back in a few decades, the gradual changes will have amounted to something big."

All we have to do is get alignment on what society wants from AI systems and democratize the access.

My inflection point believability on a scale of 1 to 10: 2. Why? Society is in no place to reach consensus on something like AI superintelligence. Silicon Valley will deliver superintelligence and apologize later.

Apple is missing the AI revolution and peak Apple has passed

Given the ho-hum reaction to Apple's WWDC 2025 and lack of Apple Intelligence progress, it appears that Apple is treading water before a downswing. Like many large tech vendors, Apple appears to be missing the AI curve.

Craig Federighi, SVP of Software Engineering at Apple, said during the WWDC keynote that "we're continuing our work to deliver the features that make Siri even more personal. This work needed more time to reach our high quality bar, and we look forward to sharing more about it in the coming year."

And with that confession at the altar of AI, Federighi and his band of executives spent the rest of the keynote talking about Liquid Glass, the redesign of Apple's operating systems across devices.

It remains to be seen whether Apple can catch up in AI and become more than a mere vessel for other innovators. Ben Thompson at Stratechery said Apple retreated into the familiar. Others complained that Apple just recreated Windows Vista. VisionOS 26 was interesting though.

Either way, Apple's WWDC and product cycles have lost the buzz, but I'd hold off on the obit. One thing is clear: Apple has the resources to miss the AI curve to some degree and milk services revenue until the company figures it out. In its latest quarter, Apple generated $24 billion in operating cash flow, returned $29 billion to shareholders and had more than $28 billion in cash.

My inflection point believability on a scale of 1 to 10: 9. The problem for Apple is the curve is headed in the wrong direction. We've passed peak Apple.

JPMorgan Chase has an $18 billion IT budget and Dimon added that data is everything. "We buy and sell 3 trillion of securities every day," said Dimon.