RPA and those older technologies aren’t dead yet

Robotic process automation (RPA) isn't dead and arguably deserves more attention in your enterprise automation portfolio. Simply put, RPA still has a role in an overarching AI strategy and in many cases can be good enough. The same goes for old-school AI, machine learning, process automation and even hybrid cloud.

We’ll stick to RPA for now, but the general theme holds: automation and returns are what matter. And you don’t have to rely solely on compute-heavy reasoning large language models to get there.

Rest assured that most vendors aren't going to preach the benefits of RPA, but orchestration is about more than AI agents. Process, automation and workflow matter. Use the right tool for the job. If a rule-based approach like RPA works, use it to deliver returns. Ditto for workflow engines, traditional AI and any other non-agentic technology. For things you already know about, there’s no reason to force an LLM to reason repeatedly.

This theme has been bubbling up in recent months amid the agentic AI marketing barrage. CxOs in Constellation Research's BT150 have been noting that RPA is part of the generative AI mix, and some have acknowledged that the technology may be good enough for many use cases. In fact, older technologies such as traditional AI and machine learning are good enough to deliver significant value.

Marianne Lake, CEO of JPMorgan Chase's Consumer & Community Banking unit, set the scene during the bank’s annual investor day: "Despite the step change in productivity we expect from new AI capabilities over the next five years, we have been delivering significant value with more traditional models. Not every opportunity requires Gen AI to deliver it."

Vendors that play to value instead of the buzzwords seem to be faring well.

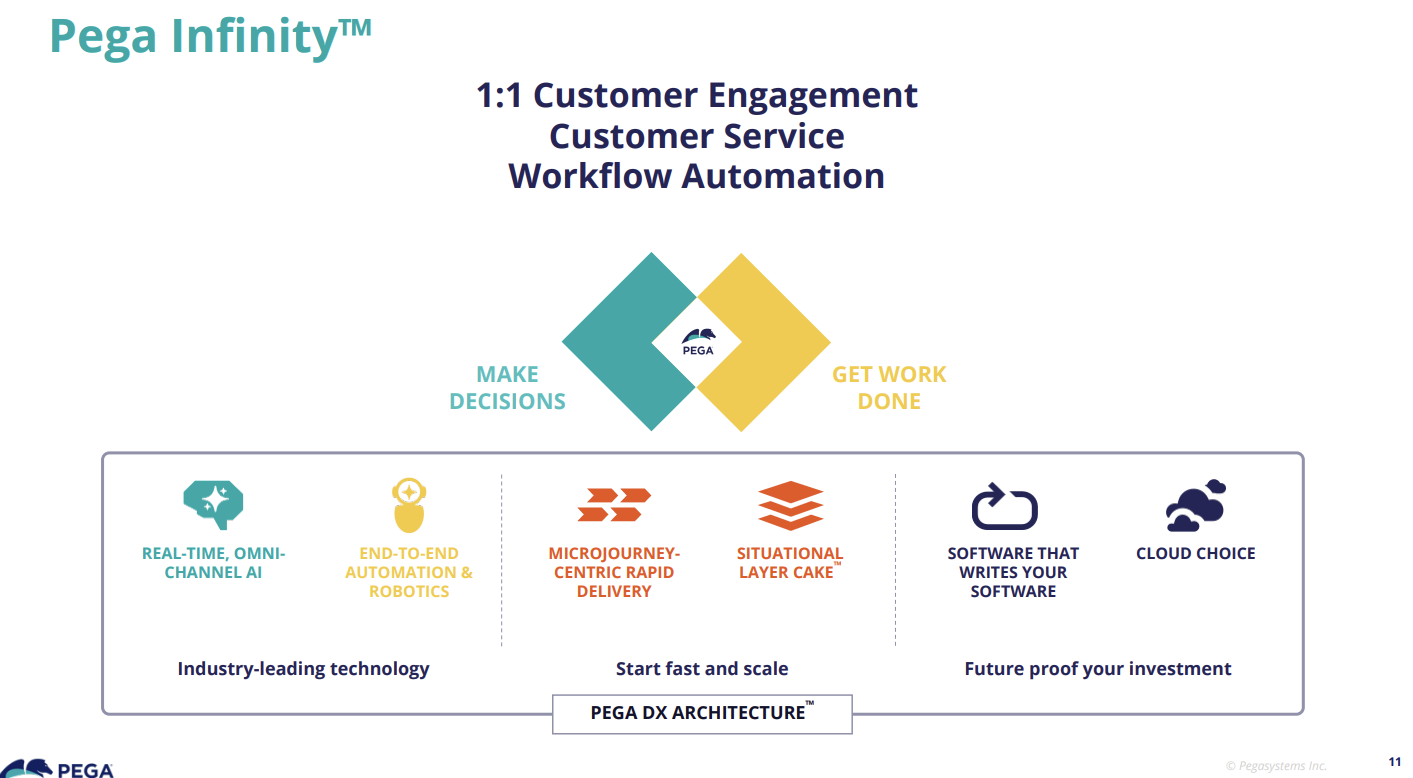

During his PegaWorld keynote, Pegasystems CEO Alan Trefler said orchestrating automation, workflows and AI is about finding the right tool for the task at hand. Yes, Pegasystems has RPA, but the company didn't mention it during PegaWorld or on recent earnings calls. Pegasystems has been riding its GenAI Blueprints for revenue growth and launched the Pega Agentic Process Fabric as well as a blueprint that’ll help you ditch legacy infrastructure.

Overall, Pegasystems' broad platform includes decisioning, workflow automation and low-code development.

Trefler said agentic AI is being talked about extensively due to "magical marketing." He predicted that thousands of AI agents will be running around enterprises, leading to sprawl with no orchestration.

"The right AI for the right purpose is absolutely critical and candidly forgotten by the pundits that just want to dump things in and hope everything goes right. Large language models aren't everything. Language models are great for some stuff, but for other things you want to use other forms of AI," said Trefler.

Trefler, like Philipp Herzig, Chief AI Officer and Chief Technology Officer at SAP, argues that prompt engineering is dead. Why? Semantic approaches leave too much uncertainty for processes that need to be followed repeatedly. And you don’t need agentic AI to do everything, because using it everywhere will make your costs balloon on energy consumption alone.

"If you go down the philosophy of using the GPU to do the creation of the workflow and a workflow engine to execute the workflow, the workflow engine takes a 200th of the electricity because it's not reasoning. It's all this reasoning. You don't have to reason on things you already know about," said Trefler.

The upshot is that old-school AI, machine learning and rule-based RPA all have a place in a comprehensive automation strategy. For work that is already well understood, AI agents and genAI simply think (reason) too much.

Francis Castro, head of digital and technology customer operations at Unilever, said at PegaWorld: "I'm a technologist 25 years in the company, driving technology. Sometimes you fall in love choosing the right tool and the right technology. Sometimes we fall in love with the technology, or we fall in love with the problem, but we forget about what we want to achieve."

The takeaway from Pegasystems is that workflows, automation, agentic AI, process and RPA all go together. It's one continuum. ServiceNow sings from the same hymn book, but sure does talk a lot about AI agents today. In the end, RPA is good enough to automate specific, repetitive and rule-based tasks.

"While the market has been talking about Agentic AI and overloading buyers with a laundry list of AI agents, bots and orchestrators, Pega has been focused on the underlying processes and workflows that have long been their bread and butter," said Liz Miller, analyst at Constellation Research.

Systems integrators are busy building AI agents and have shown they're better at it than vendors. But the big picture for these integrators is process automation, both for customers and internally for business process management.

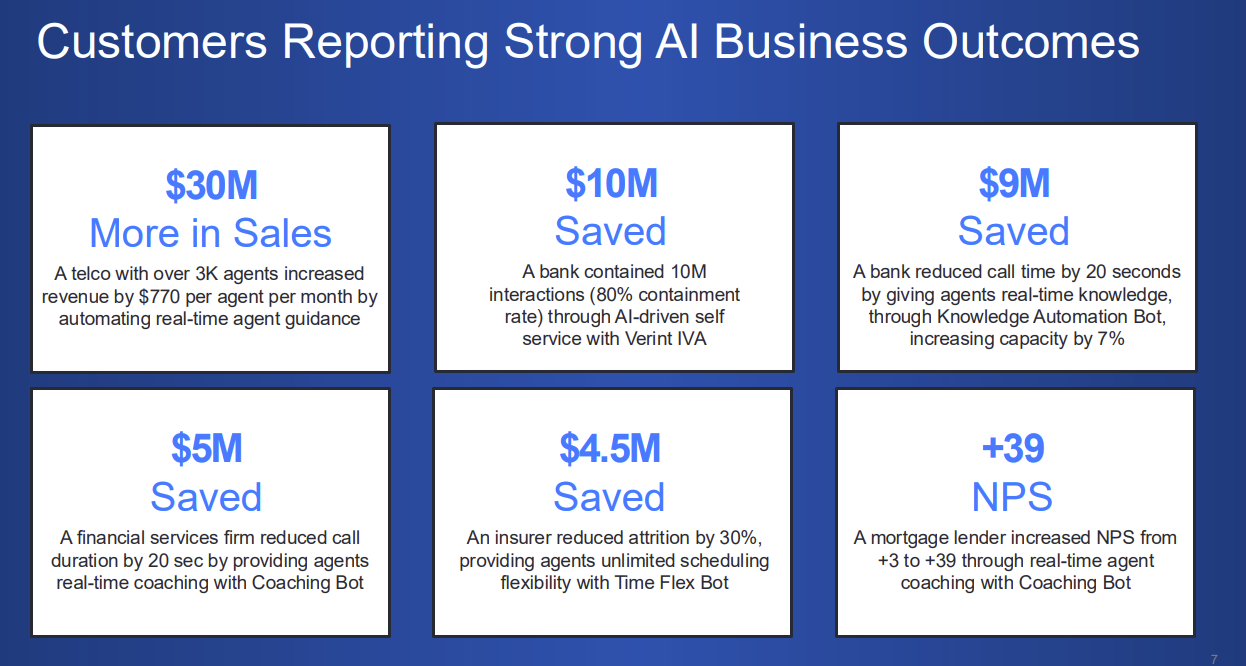

Verint is another vendor playing the value game. The company's first quarter results were better than expected as customers used its AI bots to automate specific processes without the need to change infrastructure or platforms.

Verint CEO Dan Bodner said: "First, more and more brands are fatigued by the AI noise and are looking for vendors that can deliver proven, tangible and strong AI business outcomes now. And second, brands are looking for vendors with hybrid cloud that can deploy AI solutions with no disruption and with a show me first approach."

The CX automation company's secret sauce is delivering value over talk about orchestration, LLMs and agentic AI protocols.

Here's a look at a Verint slide on returns for use cases:

In fact, Bodner mentioned LLMs just twice, at the very end of Verint's earnings call, while bots came up 24 times. Verint is agnostic about the LLMs its bots use. The game for Verint is to automate "micro workflow" in various processes to deliver returns. Verint bots run on Verint's Da Vinci AI and are designed to automate specific tasks and workflows rather than be general purpose.

“Verint’s bet that simple is better paid off,” said Miller. “While everyone was ramping up the hype machine around AI, Verint dug into the idea that all of these innovations were great, but outcomes were better. So their simplified preset bot approach, where you start with the intended outcome and then connect the automation dots with automation, skills and workflows, works.”

Like Pegasystems, Verint doesn’t talk about RPA anymore. Back in 2019, Verint talked a lot about RPA, and plenty of its documentation on the technology is still lying around.

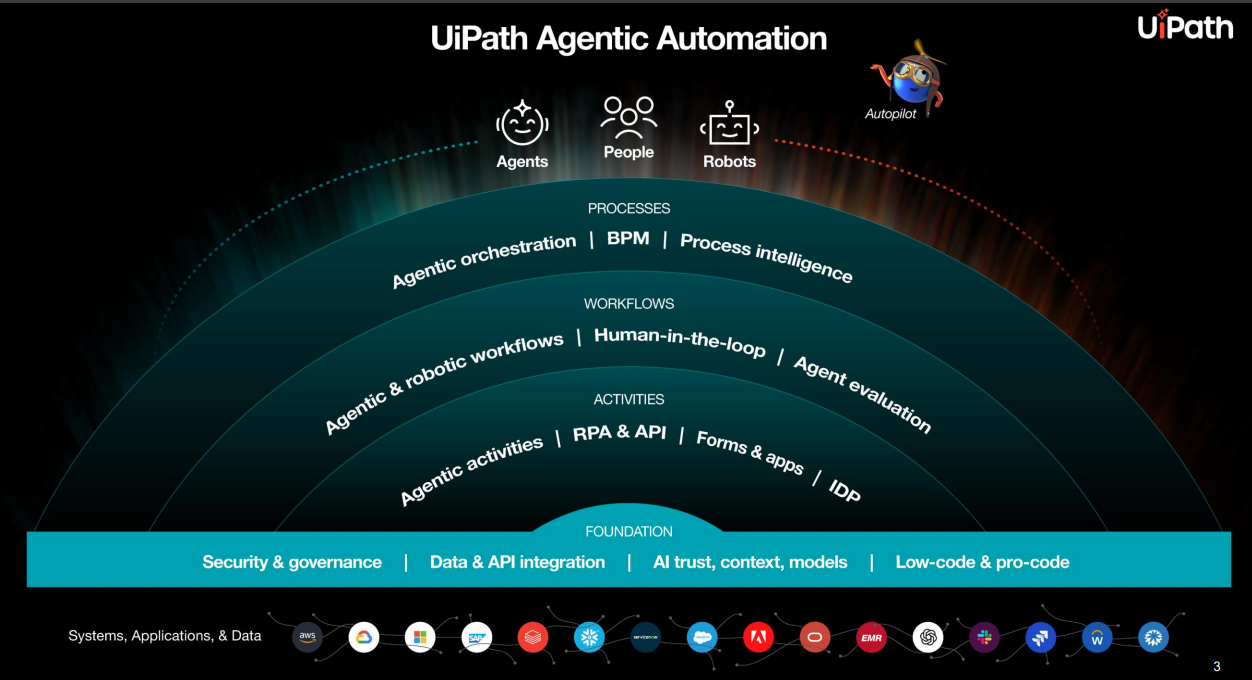

For UiPath CEO Daniel Dines, the company's RPA heritage is an advantage and he hasn’t banned the acronym yet. "Our extensive installed base of robots and AI capabilities already operating autonomously across more than 10,000 customers gives us unparalleled insight into real enterprise processes and workflows where agents are a natural extension," said Dines on UiPath's fiscal first quarter earnings call. "We uniquely bridge deterministic automation or RPA and probabilistic automation or agentic, allowing customers to extend automation into more complex adaptive workflows."

UiPath has been building out its automation platform and launched UiPath Maestro, which aims to orchestrate processes across AI agents, RPA robots and humans. UiPath’s first quarter results were better than expected due to traction for its agentic automation platform.

"There is a tremendous benefit of combining AI agents with robots. And when you go and decide on an agentic automation platform, it's a natural way to think maybe we should bring the robots into the same platform," said Dines. "Again, the benefits from the security and governance perspective and having agents and robots and managing humans also in the same platform are tremendous."

Naturally, Dines is going to say RPA has a role in automation, given that UiPath has a significant legacy business tied to the technology. However, I don't think he's off base. AI agents aren't needed for everything when a robot will do fine. And none of these agents are going to work without process intelligence.

CxOs need to deliver value, and chasing agentic AI when other tools provide returns faster isn't a great blueprint. Is RPA going to see a renaissance? Probably not. But RPA is definitely worth keeping in the automation toolbox. A lot of those older, less buzzworthy technologies should stick around too.
