Google I/O 2025: Google aims for a universal AI assistant

Google's vision is to leverage its data, properties and services to create a universal AI assistant that is agentic, understands your personal context and carries out tasks. Google sees Gemini 2.5 Pro becoming a world model that can make plans, create new experiences and simulate the world.

Last year, Google was among the first to introduce agentic AI and the role of agents at Google Cloud Next and Google I/O. This year's Google I/O is about more than new models, although they play a big part. It is also about the agentic experiences those models can provide in multiple environments, including Android, Google Meet and search.

"We are shipping faster than ever since last I/O. We have announced over a dozen foundation models, multiple research breakthroughs, and released over 20 major AI products and features, and it's only a slice of the innovation that's happening across the company, from search to cloud to YouTube and subscriptions and more," said Alphabet CEO Sundar Pichai, who added in a briefing that Google is leveraging its full hardware stack to roll out new models.

Google is processing 480 trillion tokens per month across its products and APIs, up from 9.7 trillion per month a year ago. That tally is likely to increase as Google rolls out its AI mode search experience with its latest Gemini models.
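To put that jump in perspective, here is a quick back-of-the-envelope calculation using only the two figures quoted above. The variable names are illustrative, and the result is simple arithmetic rather than a number Google reported.

```python
# Monthly token volumes as quoted in the article.
tokens_now = 480e12       # 480 trillion tokens per month
tokens_year_ago = 9.7e12  # 9.7 trillion tokens per month

# How many times larger the current volume is than last year's.
growth_factor = tokens_now / tokens_year_ago

print(f"Growth factor: {growth_factor:.1f}x")  # Growth factor: 49.5x
```

In other words, the monthly token volume grew roughly 50-fold in a year.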

"It's a total reimagining of search with more advanced reasoning. You can ask longer and more complex queries, like query you see there. In fact, early testers have been responding very positively," said Pichai. "They've been asking queries two to three times, sometimes as long as five times the length of traditional searches. And you can go further with follow up questions. All of this is available as a new tab right in search."

Search is the most obvious area for AI, and a critical one since it's Alphabet's profit engine, but Google is rolling out AI in multiple contexts. One interesting development is Google Beam, an AI-first video communication platform that will be rolled out with HP. Google Beam, which emerged from a project outlined at Google I/O a few years ago, combines six cameras with an AI model that creates a realistic 3D experience from the video streams.

Google Beam aims to bring 3D, AI to video conferencing with HP

Google also highlighted real-time language translation in Google Meet between two coworkers, one speaking Spanish and one English.

Select Google I/O news includes:

- Project Mariner capabilities in search and Gemini API.

- Gemini 2.5 Pro and Flash generally available soon.

- Gemini 2.5 Flash updates.

- Gemini 2.5 Pro Deep Think reasoning mode.

- Veo 3 and Imagen 4 models.

- AI Overviews and AI Mode using Gemini 2.5.

- Deep Search in AI Mode.

- Search Live.

- Gemini is coming to Chrome.

- Try it on, a shopping feature where you can upload a full-body picture and Google will use AI to show how an item of clothing would look on you.

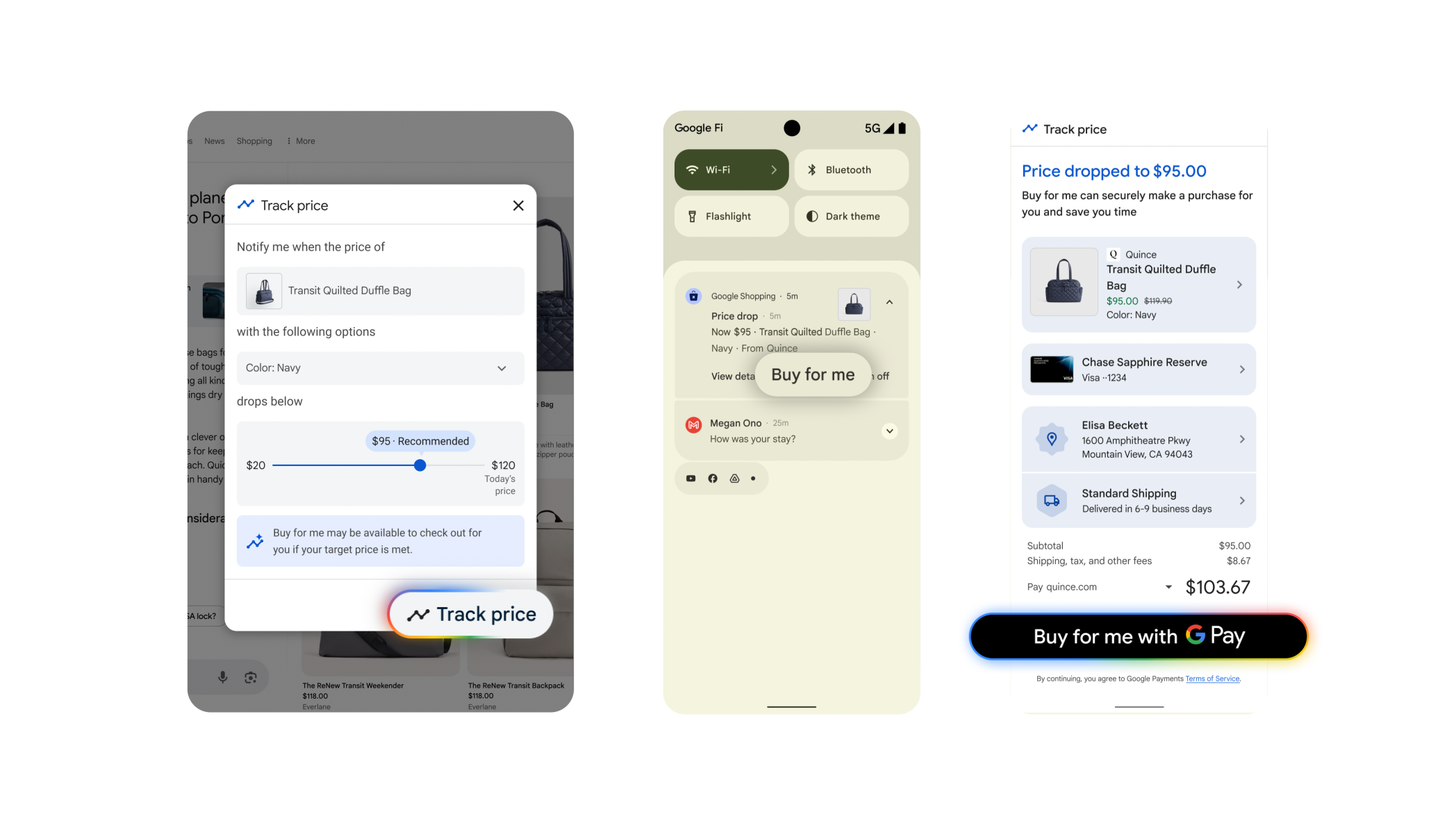

- Agentic checkout, a tool to monitor prices and have an AI agent complete the purchase automatically.

- Gemini app is getting Gemini Live with camera and screen sharing. Google apps will also come to Gemini Live.

- Gemini software development kit will be compatible with Model Context Protocol (MCP).

- Multiple AI-driven features in the latest Android.

- Jules, an agentic coding assistant, is in public beta.

- Google has partnered with Gentle Monster and Warby Parker to create glasses powered by Android XR that people will actually want to wear.

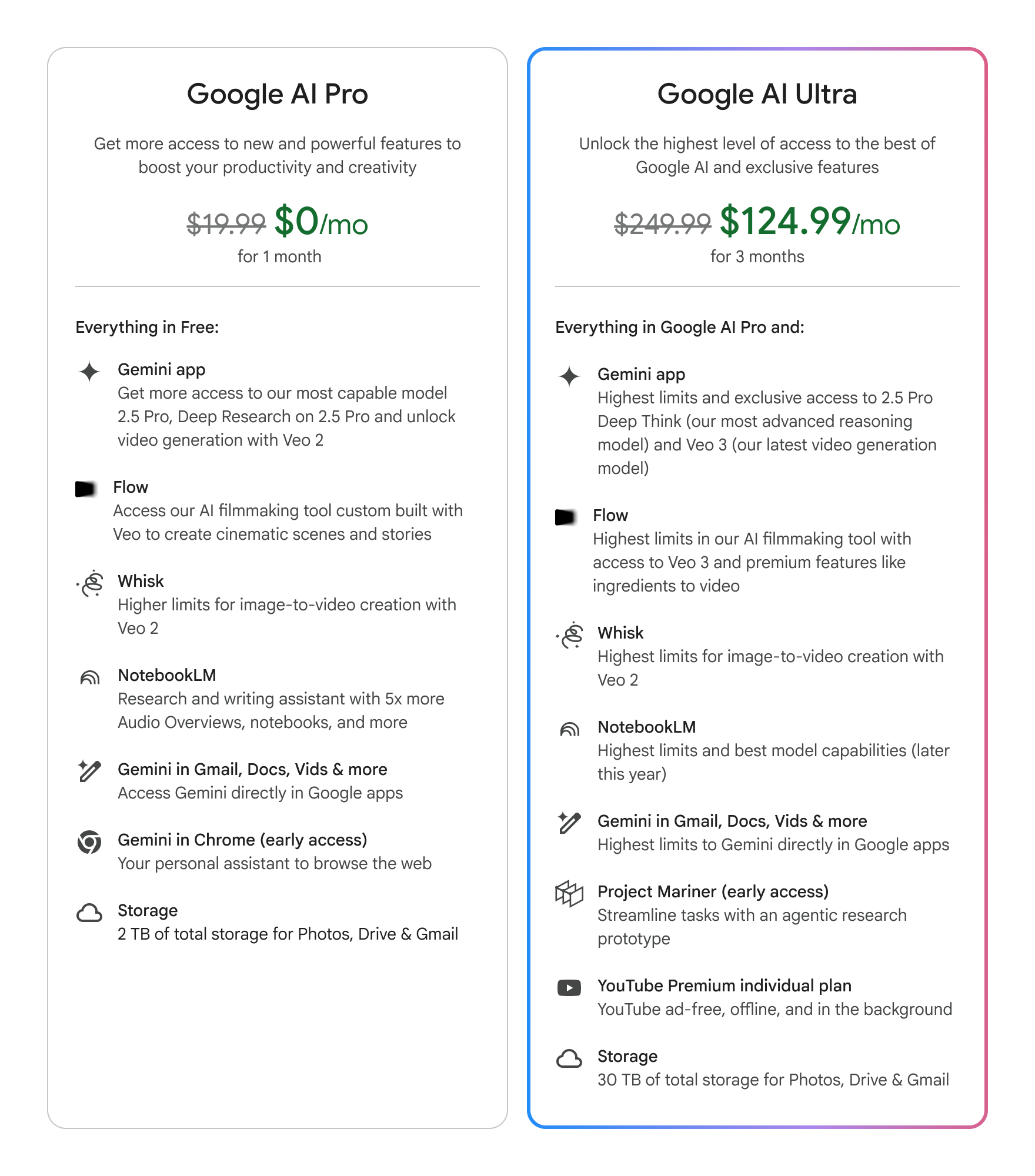

The latest tools will be bundled into a new Google AI Ultra subscription tier that will run $249.99 a month. That plan includes a Gemini app with 2.5 Pro Deep Think and Veo 3, highest limits with NotebookLM, Gemini in Gmail, Docs and other Google apps, Project Mariner, YouTube Premium and 30TB of storage.

The big picture: An AI experience engine

Although the news out of Google I/O is extensive, and becomes enterprise grade once these innovations are included in Google Cloud, the big picture here is where the company is headed with its models and agentic AI approach.

Think of Google's AI as its experience engine. Demis Hassabis, CEO of Google DeepMind, said the company is updating Gemini 2.5 Flash, which is popular with developers due to speed and low cost. The Flash update will land in June with a Pro update soon after.

Hassabis said:

"Our recent updates to Gemini are critical steps towards unlocking our vision for a universal AI assistant, one that's helpful in your everyday life, that's intelligent and understands the context you're in, and that can plan and take actions on your behalf across any device. This is our ultimate goal for the Gemini app, an AI that's personal, proactive and powerful."

For instance, Google's Project Astra highlights how the Gemini app can find documents, read your email with permission and carry out tasks like phoning a company and making an appointment.

The demo focused on a bicycle repair and related questions, and the data all lived on Google properties (Search, YouTube and Gmail), but you can see where the company is heading. At some point, agents will be able to realistically traverse third-party data stores and services.

"The universal AI assistant will perform everyday tasks for us. It'll take care of our mundane admin and surface delightful new recommendations, making us more productive and enriching our lives," said Hassabis. "We're gathering feedback about these capabilities now from trusted testers and working to bring them to Gemini Live, new experiences in search, the Live API for developers, as well as new form factors like Android XR glasses. Another important way to demonstrate understanding of the world is to be able to generate aspects of it accurately."

Here's how Hassabis sees this universal AI assistant theme playing out in the short term:

- Gemini 2.5 Pro will be able to use world knowledge and reasoning to simulate natural environments in conjunction with models like Veo.

- Making Gemini a world model is a step toward a universal AI assistant.

- The Gemini app will be transformed into a universal AI assistant. Capabilities outlined in Project Astra last year will turn up in the Gemini app.

- For users, agentic AI embedded into the Gemini app will enable them to multitask better.

Liz Reid, Head of Google Search, outlined how AI Overviews will change, and Vidhya Srinivasan, General Manager of Google Ads and Commerce, highlighted how AI will change shopping, answer follow-up questions and help consumers buy products throughout the customer journey. Ultimately, this AI assistant theme will run through all of Google's services.

Reid said the search improvements are designed to move from information to intelligence. "There are a lot of times where you come to search and you're just trying to find something when you really need a recommendation. We're going to be enabling you, starting with your own searches, but also giving you an option to opt in to connect your Google Apps, things like Gmail, so that you can just get much better recommendations fit for you," she said.

Srinivasan walked through a shopping experience where Google's AI agent watched prices and options and then, because it already had the billing information, could complete the checkout. The consumer would have to approve the transaction, but the theme is the same. Google is moving from agents that provide information to ones that do things. "This is really the start of a search that goes beyond information to intelligence," said Srinivasan.
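The flow Srinivasan described (watch prices, wait for consumer approval, then check out) can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Google's implementation: `Offer`, `agentic_checkout`, `target_price` and the `approve` callback are all hypothetical names, and returning the offer stands in for a real payment step.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Offer:
    """A hypothetical price observation for one item."""
    item: str
    price: float


def agentic_checkout(
    price_feed: list[Offer],
    target_price: float,
    approve: Callable[[Offer], bool],
) -> Optional[Offer]:
    """Return the purchased offer, or None if nothing was bought."""
    for offer in price_feed:        # the agent "watches" prices over time
        if offer.price > target_price:
            continue                # still too expensive, keep watching
        if approve(offer):          # the consumer must approve the purchase
            return offer            # stand-in for completing the checkout
    return None


feed = [Offer("bag", 120.0), Offer("bag", 99.0), Offer("bag", 89.0)]
bought = agentic_checkout(feed, target_price=100.0, approve=lambda o: True)
print(bought)  # Offer(item='bag', price=99.0)
```

The key design point is that the approval callback sits between the price trigger and the purchase, so the agent never buys without the consumer's sign-off.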

What's clear is that agentic AI is going to be the new user interface. That impact may happen well before agents start executing on tasks and all the mundane work in day-to-day life.
