Vice President & Principal Analyst

Constellation Research

About Liz Miller:

Liz Miller is Vice President and Principal Analyst at Constellation, focused on the org-wide team sport known as customer experience. While covering CX as an enterprise strategy, Miller spends time zeroing in on the functional demands of Marketing and Service and the evolving role of the Chief Marketing Officer, the rise of the Chief Experience Officer, the evolution of customer engagement and the rising requirement for a new security posture that accounts for the threat to brand trust in this age of AI. With over 30 years of marketing, Miller offers strategic guidance on the leadership, business transformation and technology requirements to deliver on today’s CX strategies. She has worked with global marketing organizations to transform…


A contact center at a crossroads is nothing new. It seems that every time there is a technological evolution, the contact center faces disruption. That is especially true in this age of artificial intelligence, which is transforming experiences for customers and employees alike. AI has redefined efficiency, encouraging a streamlining of technology estates and data stores and forever shifting where and how engagement, work and collaboration happen.

The clarity of the high-fidelity signal gathered directly by the contact center from the customer can now be harnessed, analyzed and shared across the enterprise, in large part, thanks to AI. The responses, reactions and skills demonstrated by service representatives that add to the durability of customer relationships can be captured and added to an organization’s knowledge repository. For the contact center, AI has delivered operational efficiencies long dreamed about. As quickly as AI has reinforced the strategic importance of the contact center, it has also invited questions from technology leaders looking to streamline communications technology stacks and avoid overly complicated and customized investments.

This intersection requires a decision and has elevated key questions around need, infrastructure and intention. Why are the “as a service” offerings around communications—contact center as a service (CCaaS), unified communications as a service (UCaaS), marketing automation, sales engagement, customer service management, et al.—so segmented? Why don’t organizations think of customer communications as a form of customer collaboration? Why does it take five platforms, three swivel chairs and endless patience all in the name of customer relationships? Why is this entire vision so complicated, costly and inefficient?

Pressure is mounting not just to justify the costs of doing business, but to rein in the total cost of technology ownership. As AI experimentation gives way to scaled AI implementation, there is an expectation for AI infusion into every aspect of business. Thinking of AI in the organization, let alone the contact center, as an add-on or accessory misunderstands the true power of AI. The expectation is for AI to be woven into the very fabric of work. This demands new strategies around data, workflows and infrastructure…and this demand turns into pressure that is both top down (as C-suite leaders and boards ask about AI progress) and bottom up (as employees wonder why AI tools are not as readily available at work as they are in their personal lives).

The unintended outcome of this surge of efficiency is a reexamination of communications stacks and structures, pushing markets once segmented by dialers, by inbound versus outbound activity and by where calls happened to consolidate into more elegant, cloud-native and fully connected systems.

The ask has become to focus on how the people who engage most directly and immediately with customers work, as opposed to where they work. Instead of debating in-office versus remote work, the contact center has recentered on people over places. This new strategy for efficiency looks at how work is done and how that work can be enhanced and decisions accelerated thanks to automation and AI. While service reps are being empowered with seamless automated support for their work, customers are being encouraged to engage at will, in the channels of their choosing. The goal of the contact center should be to streamline the work of the service rep in order to intentionally take the work out of being a customer.

Advancing the Contact Center With AI

While technology convergence is inevitable, the contact center won’t be destroyed. Convergence has, however, made choosing a path forward an imperative. So where can contact center leaders start down the right path?

Rethink from the outside in. Despite its absolute and critical role in customer engagement and experience delivery, the contact center is often developed as an inside-out strategy, focusing on operational goals that traditionally put the business at the center and work outward to mold the customer's experience around those goals. This is where a legacy mindset of shorter call times, call deflection and other organization-first goals and business outcomes has won. But now, thanks to AI, these same operational efficiency goals can be achieved while putting the customer at the center of decision making and strategy.

No customer wants to spend MORE time on the phone with a service representative. Speed and efficiency in managing simple concerns and requests are just as important to the customer as they are to the service team. With generative AI, these fast decisions and engagements can happen in real time, in the customer's context and fully attuned to the customer's journey. The partnership between service reps and their Copilots is immersive and conversational, with a capacity to deeply understand the customer, the business and the individual service rep to boost productivity while simultaneously boosting engagement and customer service.

Modern examples of this include Microsoft's Copilot, particularly within Microsoft's AI-native portfolio for Service. The Copilot capabilities embed across Dynamics 365 Customer Service and Dynamics 365 Contact Center, integrating with CRM and enterprise data resources to assist service reps and streamline workflows. Rather than disrupting work because of a customer, these workflows carry the customer's needs, expectations, history and voice into the organization and turn them into powerful assistance and agentic processes.

Turn customer obsession into a passion for value. There is often a mantra that everyone in business should be "obsessed" with the customer. But as in life, obsession can go horribly wrong when it presents as fixation or delusion. Instead, the contact center has a unique opportunity to leverage its keen understanding of the customer and their context to establish workflows and automations that focus on how the organization can deliver value both proactively and reactively.

Autonomous service works best when the business outcome and the customer value exchange are aligned. Call deflections hold value to the business, shifting customers into more cost-effective self-service digital experiences. But they are only a valued experience if the customer achieves their goals in the manner they expect. Architecting value-first autonomous workflows means thinking from the customer's point of view and tracing their engagements back to the contact center, understanding where, how, when and why the customer is engaging. Being obsessed with a customer could mean knowing everything about that person, but it does not guarantee the ability or capacity to act. Value delivery is rich with empathy, but it also compels the service rep and the business to help change the customer's status quo.

Become the center point for market and customer knowledge. Knowing more about the customer and their definition of value shouldn’t be locked away in a contact center solution. Thanks to AI’s capacity to ingest, curate and contextualize customer conversations to better understand the meaning, intention and reality of a customer’s connection, contact centers have become exceptionally comfortable and confident in their ability to synthesize customer voice into a real-time intelligence asset.

Automating time-consuming tasks like call summaries and follow-up emails is the performance-driving starting point. This is the opportunity to establish an enterprise-wide strategy where AI can surface unified intelligence across the entire organization: just as the service rep should have access to shipping and supply chain information that impacts the customer, supply chain and shipping teams should have access to information about potential points of customer friction and customer expectations. The sharing of contact center-driven intelligence should be a bi-directional exchange across the modern enterprise.

Shifting the Conversation from Convergence to AI Acceleration

The future of the contact center deserves to be easier, with less heavy lifting and less wear and tear on the people brought in to power experience strategies. AI has the capacity to help lift that load and deliver the efficiency and productivity the contact center has always expected and craved. But that is just the first stage of AI maturity and value! There is much more to be achieved, especially as the technology paths and platforms continue to converge. As the walls come down between internal and external communications systems, this concept of collaboration as an experience can be achieved. Shared intelligence, shared understanding and shared experience delivery don't need to be trapped in an individual system or dependent on a single interface or presentation layer. Insight, intelligence and the manifestation of the customer hosted in the form of data can and should easily intersect with the knowledge an organization curates about the business and about the products being consumed.

Thanks to AI, the current technology convergence can be an opportunity for simplification rather than an assured fate of collapse or pressure-induced failure. Collaboration and communication can intertwine and accelerate the value all parties realize. The real beauty of AI is that, thanks to its capacity to ingest, analyze and normalize complex data sources and types, it can extrapolate far beyond human capacity. So let the convergence begin! May it not kickstart an era of rip-and-replace, or the stagnation and fear that some past cloud migrations and infrastructure modernizations revealed. Instead, let this vision of communications, collaboration, AI and the customer help make this adventure called the work of service a bit easier, more valuable and more seamlessly connected.


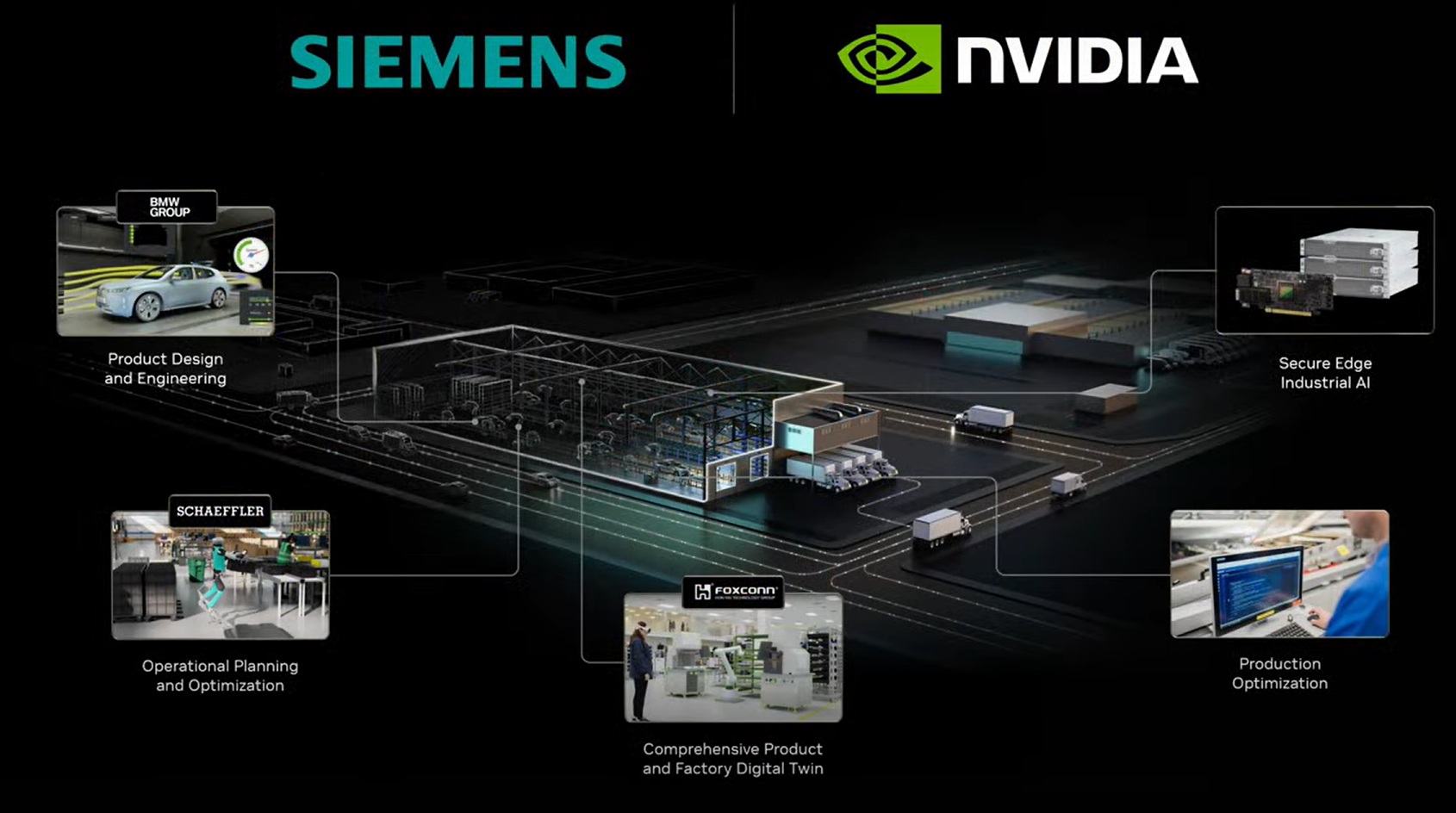

has more than 1.5 million developers. Nvidia also announced that European enterprises are adopting agentic AI including Novo Nordisk, Siemens, Shell, BT Group, SAP, Nestle, L'Oreal and BNP Paribas. The company also touted adoption of its Nvidia Drive autonomous vehicle platform at Volvo, Mercedes Benz and Jaguar as well as quantum computing efforts in the region.
