HPE launches GreenLake for LLMs, aims to democratize Cray supercomputing for AI training

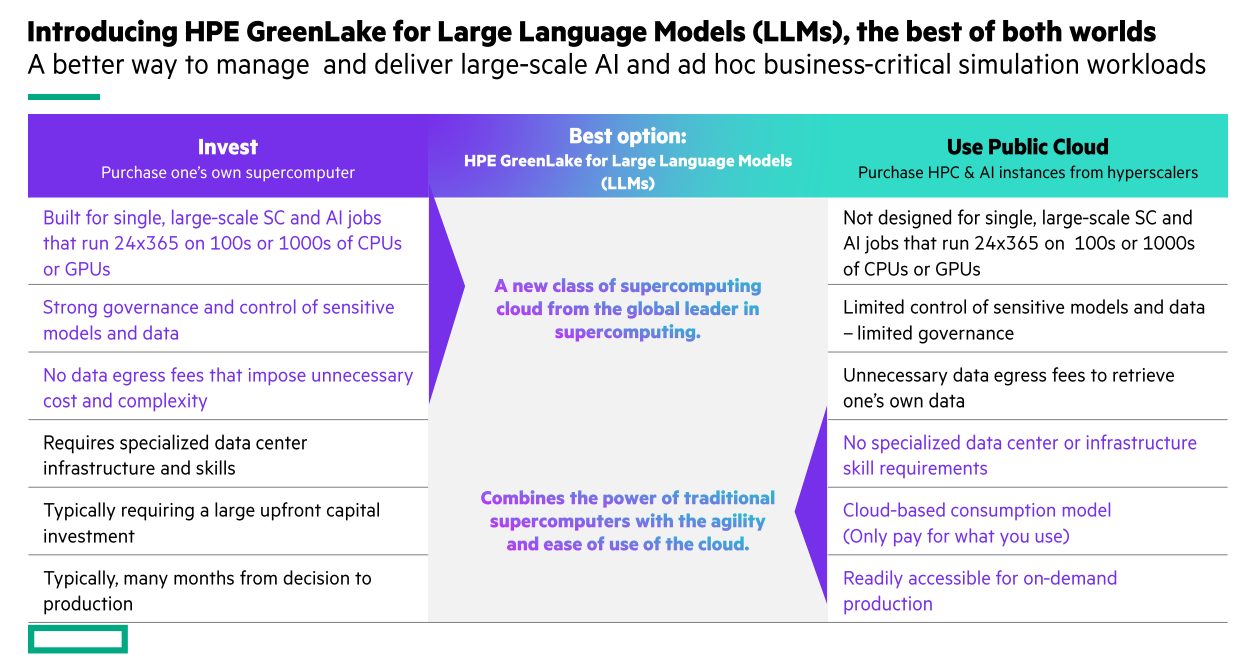

Hewlett Packard Enterprise is mobilizing its Cray supercomputing knowledge to launch HPE GreenLake for Large Language Models (LLMs) as enterprises look for options to privately train, tune and deploy AI.

At HPE Discover, HPE outlined its plans for HPE GreenLake for LLMs, which is expected to be generally available by the end of the year. The move is designed to address enterprise concerns about security, data privacy and compliance for generative AI deployments.

Vendors in recent weeks have moved to address corporate data concerns as Salesforce launched an AI trust layer and Oracle said it will offer a cloud service to keep corporate data protected.

HPE is taking a hybrid and private cloud approach to deploying AI with a focus on industries including healthcare and life sciences, financial services, manufacturing and transportation. HPE GreenLake for LLMs is also running on infrastructure powered by nearly 100% renewable energy, which could be critical given enterprises are increasingly tracking carbon footprints.

HPE GreenLake for LLMs is well timed since Constellation Research analyst Dion Hinchcliffe recently published a report outlining how CXOs are moving to private cloud models for cost savings.

According to HPE CEO Antonio Neri, HPE GreenLake for LLMs is the first of a series of AI applications planned. "HPE is making AI, once the domain of well-funded government labs and the global cloud giants, accessible to all by delivering a range of AI applications, starting with large language models," he said.

"We are experiencing an exponential growth of data everywhere, but only 50% of it is used for decisions. The reality is we have been data rich and insight poor. AI and generative AI has accelerated and we now have the ability to harness the power of our data...You don't have to spend millions to acquire supercomputing infrastructure."

HPE expands GreenLake services amid private cloud renaissance

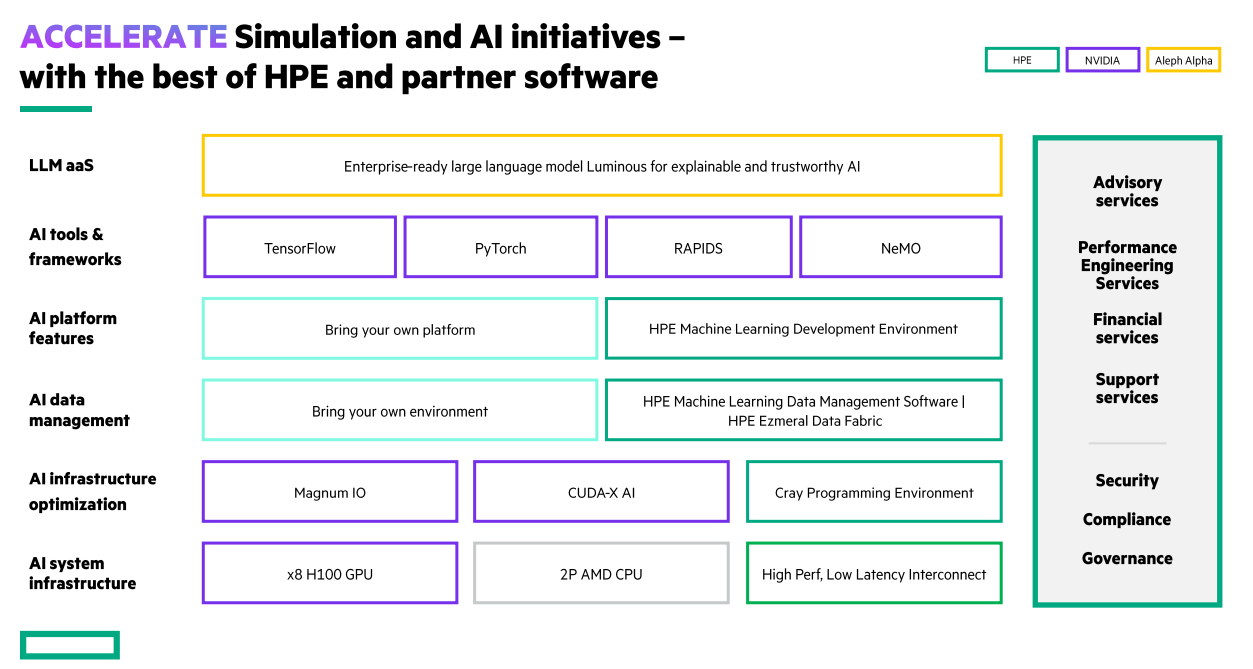

HPE GreenLake for LLMs will be delivered with Aleph Alpha, a German AI startup that will provide a proven LLM for use cases requiring text and image processing and analysis.

With the launch of HPE GreenLake for LLMs, HPE is looking to expand its market beyond HPC users to R&D innovators, Chief AI Officers and CXOs who are looking to develop models faster and more efficiently. HPE GreenLake for LLMs will be browser-based with role-specific tooling.

HPE noted that it is accepting orders now for HPE GreenLake for LLMs with availability at the end of 2023 in North America. Europe will follow in early 2024.

Mobilizing Cray

HPE acquired supercomputing giant Cray in 2019 in a move that propelled the company to the top of the supercomputer rankings.

With that purchase of Cray, HPE has been able to scale AI training and simulation workloads across CPUs and GPUs at once.

Now that generative AI is poised to spread across the enterprise, HPE GreenLake for LLMs can draw on that Cray infrastructure.

HPE GreenLake for LLMs will be available on demand and powered by HPE Cray XD supercomputers as well as the HPE Cray Programming Environment, a software suite for optimizing HPC and AI applications that includes tools for developing, porting, debugging and tuning code.

According to HPE, Luminous, the pre-trained LLM from Aleph Alpha, was tuned for multiple use cases for banks, hospitals and law firms on HPE GreenLake for LLMs.

HPE GreenLake for LLMs will also use HPE's AI software, including HPE Machine Learning Development Environment and HPE Machine Learning Data Management Software.

In addition, HPE GreenLake for LLMs will run on supercomputers initially hosted in QScale’s Quebec colocation facility that provides power from 99.5% renewable sources.

To round out the AI push, HPE expanded its inferencing compute offerings. New HPE ProLiant Gen11 servers are optimized for AI workloads, using advanced GPUs. The HPE ProLiant DL380a and DL320 Gen11 servers boost AI inference performance by more than 5X over previous models, said HPE. That comparison is based on image generation performance of the NVIDIA L40 (TensorRT 8.6.0) versus the T4 (TensorRT 8.5.2) running Stable Diffusion v2.1 at 512x512.

The Constellation Research take

Constellation Research analyst Holger Mueller said HPE left a few open questions to ponder. For instance, what is the connectivity between the data and Cray systems? What happens if HPE machines are on-premises and data is in the cloud?

Mueller added that the real cost savings may be in the ProLiant systems optimized for AI workloads. He added that HPE will compete with Oracle Cloud at Customer as well as IBM and others.

Andy Thurai, who covers AIOps and AI at Constellation Research, said HPE GreenLake for LLMs appears to be going "after the mature AI workloads NOT the innovative workloads."

He said:

"In order for any enterprise to experiment on AI models they need strong ecosystems, data availability, skills and immediate need. HPE doesn’t have that right now. Many organizations are expected to use hyperscale cloud providers and decide if it is going to be worthwhile to move to HPE. That can be good and bad. Good: Enterprises already know what they want. Bad: Volume and TAM will be very limited to those customers. Public clouds will come up with a mechanism to keep the initial innovative cloud workloads from moving out. It will be an interesting battle to see."

Thurai also noted the following:

- HPE GreenLake for LLMs is a unique effort outside of the public cloud vendors.

- Focusing on domain-specific use cases in specific industries can bring value that's hard to replicate in public clouds.

- Strong governance and control of sensitive models and data, no data egress fees, sustainability, and lower costs are all strong claims worth investigation by enterprises.

- HPE is hoping its partnership with Aleph Alpha will show other AI players that they can train private LLMs just as easily.
