Amazon CEO Andy Jassy's long-winded defense of Amazon Web Services' AI strategy sure caused some consternation, but those fears are likely misplaced. After all, nuance doesn't play well on Wall Street and neither does the law of large numbers.

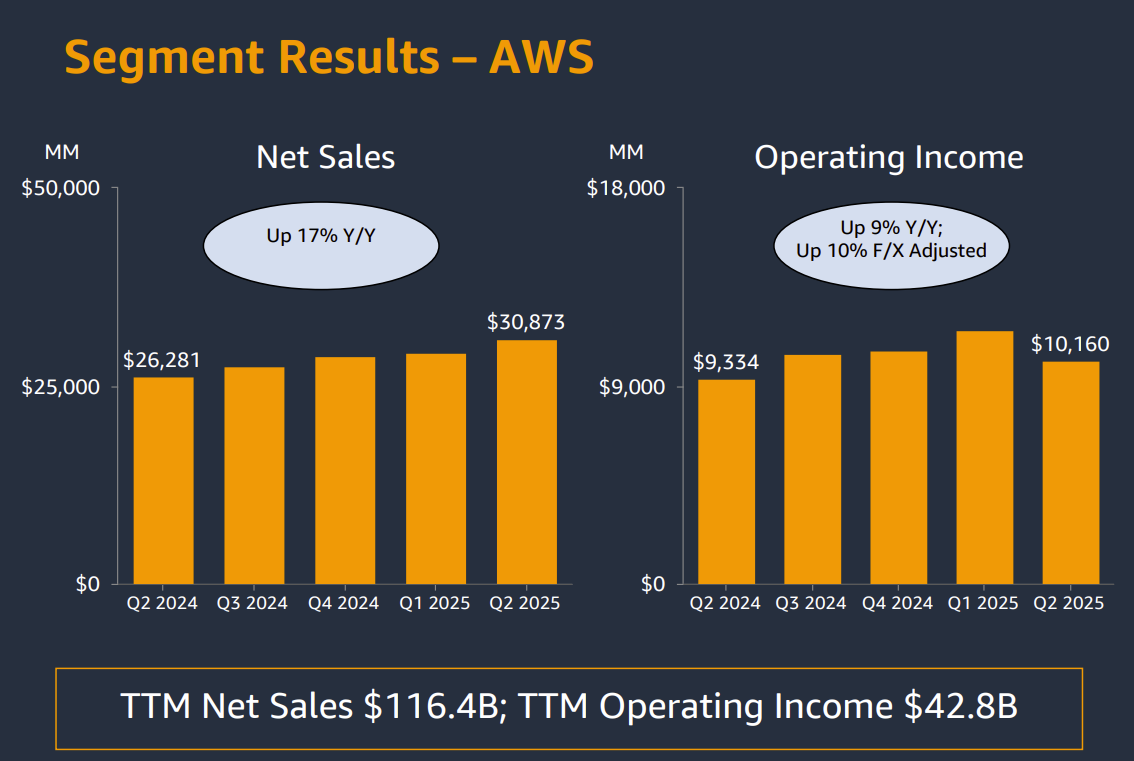

The hubbub over Amazon's second-quarter earnings report was largely attributed to AWS' growth rate of 17.5% vs. growth rates at Microsoft Azure and Google Cloud, which were 34% and 32%, respectively.

Jassy's short answer is that part of AWS' growth rate was due to the law of large numbers. AWS has an annual revenue run rate of $123 billion compared to Azure at $75 billion and Google Cloud at $50 billion. Backlog for AWS as of June 30 was $195 billion, up 25% from a year ago.

It turns out that AWS' AI strategy is difficult to grok given that the company is focused on developers, large language model choices and building blocks. AWS is downright practical and spent its AWS Summit New York event talking about the architecture and approaches needed to make AI agents scale in the enterprise.

- AWS launches Bedrock Agent Core, custom Nova models

- AWS' practical AI agent pitch to CxOs: Fundamentals, ROI matter

I'd argue that messaging is needed since that's what CxOs are struggling with, but I understand why an eat-your-vegetables approach isn't as invigorating as the hype machine. Nevertheless, Jassy said AWS is ramping Nvidia and Trainium2 instances as fast as it can to meet demand. Capacity is being consumed as fast as it's put in, said Jassy, who noted that energy and supply constraints are the biggest blockers. "We have more demand than we have capacity at this point," he said.

On the earnings call, Jassy made the following points:

- Inference workloads are running on AWS infrastructure and that'll grow over time as workloads move to production.

- Enterprises are at an early stage in adopting AI agents. Cost and security are going to be huge issues as AI agents are adopted in enterprises.

- Price and performance will matter more as enterprises scale.

- AWS is focused on efficiency in building and deploying AI agents for enterprises.

With that backdrop, let's annotate Jassy's big defense of AWS, which was blamed for Amazon shares falling on Friday.

Morgan Stanley analyst Brian Nowak asked whether AWS was falling behind on AI and what to expect in the next 12 months.

Jassy said:

"I think it is so early right now in AI. If you look at what's really happening in the space, you have -- it's very top heavy. So you have a small number of very large frontier models that are being trained that spend a lot on computing, a couple of which are being trained on top of AWS and others are being trained elsewhere. And then you also have, I would say, a relatively small number of very large-scale generative AI applications.

The one category would be chatbots with the largest by a fair bit being ChatGPT, but the other category being really, I'll call it, coding agents. So these are companies like Cursor, Vercel, Lovable and some of the companies like that. Again, several of which run significant chunks on top of AWS. And then you've got a very large number of generative AI applications that are in pilot mode -- or they're in pilots or that are being developed as we speak and a very substantial number of agents that also people are starting to try to build and figure out how to get into production in a broad way, but they're all -- they're quite early.

Takeaway: Vendor talk about AI applications is way ahead of actual production deployments at enterprises.

And many of them that are out there are significant, but they're just smaller in terms of usage relative to some of those top heavy applications I mentioned earlier. We have a very significant number of enterprises and startups who are running applications on top of AWS' AI services and then -- but they're all -- again, like the amount of usage and the expansiveness of the use cases and how much people are putting them into production and the number of agents that are going to exist.

Takeaway: AWS will make as much money on the compute and storage that go along with AI services as on the AI offerings themselves.

It's still just earlier stage than it's going to be and so then when you think about what's going to matter in AI, what's going to -- what are customers going to care about when they're thinking about what infrastructure [to] use, I think you kind of have to look at the different layers of the stack. And I think for those that are -- both building models, but also just -- if you look at where the real costs are, they're going to ultimately be in inference. Today, so much of the cost [is] in training because customers are really training their models and trying to figure out [how] to get the applications into production.

But at scale, 80% to 90% of the cost will be in inference because you only train periodically, but you're spinning out predictions and inferences all the time. And so what they're going to care a lot about is they're going to care about the compute and the hardware they're using. And we have a very deep partnership with NVIDIA and will for as long as I can foresee, but we saw this movie in the CPU space with Intel, where customers are hankering for better price performance. And so we built just like in the CPU space, where we built our own custom silicon and building Graviton which is about 40% more price performance than the other leading x86 processors.

Takeaway: The value of AI will be all about inference.

We've done the same thing on the custom silicon side in AI with Trainium, and our second version, Trainium2, is really -- it's become the backbone of Anthropic's next Claude models they're training on top of, and it's become the backbone of Bedrock and the inference that we do.

I think a lot of the inference, it's about 30% to 40% better price performance than the other GPU providers out there right now, and we're already working on our third version of Trainium as well. A lot of the compute and the inference is going to ultimately be run on top of Trainium2.

Takeaway: Like CPUs, GPUs will commoditize too.

And I think that price performance is going to matter to people as they get to scale. Then I would say that middle layer of the stack are really -- it's a combination of services that customers care about to be able to build models and then to be able to leverage existing leading frontier models and then build high-quality generative AI applications that do inference at scale. And we see it for people building models, they continue to use SageMaker AI very expansively, and then Bedrock, when you're leveraging leading frontier models is also growing very substantially.

And as I said in my opening comments, the number of agents at scale is still really small in the scheme of what's going to be the case, but part of the problem is it's actually hard to actually build agents. And it's hard to deploy these agents in a secure and scalable way.

The launches we made recently in Strands that make it much easier to build agents and then Agent Core that make it much easier to deploy at scale and in a secure way are being very well received, and customers are excited [it] is going to change what's possible on the agent side.

Takeaway: AWS is playing for AI at scale and that requires foundational building blocks being built now.

Remember, 85% to 90% of the global IT spend is still on-premises. If you believe that equation is going to flip, which I do, you have a lot of legacy infrastructure that you've got to move. These are mainframes. These are VMware instances, and when we build agents like AWS Transform to make it much easier to move mainframe to the cloud, much easier to move VMware to the cloud, much easier to move .NET Windows to .NET Linux to save money, those are compelling for enterprises or things like Kiro that allow customers to develop in a much easier way and in a much more structured way, which is why I think people are excited about it.

I really like the inputs and the set of services that we're building in the AI space today. Customers really like them and it's resonating with them. I still think it's very early days in AI and in terms of adoption. But the other thing I would just say is that, remember, because we're at a stage right now where so much of the activity is training and figuring out how to get your generative AI applications into production.

Takeaway: The core cloud business is just fine.

People aren't paying as close attention as they will and making sure that those generative AI applications are operating where the rest of their data and infrastructure [are]. Remember, a lot of generative AI inference is just going to be another building block like compute, storage and database. And so people are going to actually want to run those applications close to where the other applications are running, where their data is.

There's just so many more applications and data running in AWS than anywhere else. And I'm very optimistic about as we get to a bigger scale what's going to happen to AWS on the AI side. And I think we have a set of services that is unique top to bottom in the stack. I think on the last part about what do we expect with respect to acceleration, we don't give guidance by segment.

But I do believe that the combination of more enterprises who have resumed their march to modernize their infrastructure and move from on-premises to the cloud, coupled with the fact that AI is going to accelerate in terms of more companies deploying more AI applications into production that start to scale, coupled with the fact that I do think that more capacity is going to come online in the coming months and quarters, make me optimistic about the AWS business."

Takeaway: AWS is playing the long game with a somewhat boring-is-beautiful approach to AI.

And yes, Jassy's defense could have been tighter.

- Intuit starts to scale AI agents via AWS

- AWS launches Kiro, an IDE powered by AI agents

- AWS re:Inforce 2025: GenAI, AI agents and common sense security

- AWS re:Inforce 2025: Takeaways from the Amazon, AWS CISOs

- AWS re:Inforce 2025: How customers are using AWS security building blocks

- AWS re:Inforce 2025 Event Report: A Deep Dive on What You Need to Know