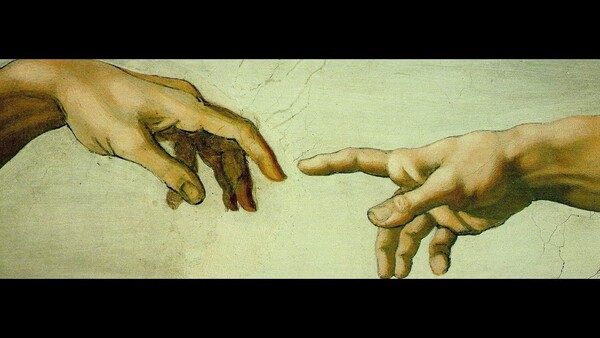

Man made software in his own image

In 2002, a couple of Japanese visitors to Australia swapped passports with each other before walking through an automatic biometric border control gate being tested at Sydney airport. The facial recognition algorithm falsely matched each of them to the other's passport photo. These gentlemen were in fact part of an international aviation industry study group and were in the habit of trying to fool biometric systems then being trialed around the world.

When I heard about this successful prank, I quipped that the algorithms were probably written by white people - because we think all Asians look the same. Colleagues thought I was making a typical sick joke, but actually I was half-serious. It did seem to me that the choice of facial features thought to be most distinguishing in a facial recognition model could be culturally biased.

Since that time, border control face recognition has come a long way, and I have not heard of such errors for many years. Until today.

The San Francisco Chronicle of July 21 carries a front-page story about the cloud storage services of Google and Flickr mislabeling some black people as gorillas (see the updated story online). It's quite an incredible episode. Google has apologized. Its Chief Architect of social, Yonatan Zunger, seems mortified, judging by his tweets as reported, and is investigating.

The newspaper report quotes machine learning experts who suggest programmers with limited experience of diversity may be to blame. That seems plausible to me, although I wonder where exactly the algorithm R&D gets done, and how much control is to be had over the biometric models and their parameters along the path from basic research to application development.

So man has literally made software in his own image.

The public is now being exposed to Self-Driving Cars, which are heavily reliant on machine vision, object recognition and artificial intelligence. If some software of this sort can't tell people from apes in static photos given lots of processing time, how does it perform in real time, with fleeting images, subject to noise, and with much greater complexity? It's easy to imagine any number of real-life scenarios where an autonomous car will have to make a split-second decision between two similar-looking objects appearing unexpectedly in its path.

One assumes Self-Driving Cars (SDCs) will be tested to exhaustion, but will it be enough? If cultural bias is affecting the work of programmers, it's possible that testers suffer the same blind spots without knowing it. Maybe the testers never even thought to try out the offending photo labeling programs on black people. So how are the test cases for SDCs being selected? What might happen when an SDC ventures into neighborhoods where its programmers and testers have never been?

Everybody in image processing and artificial intelligence should be humbled by the racist photo labeling. With the world being eaten by software, we need to reflect really deeply on how such design howlers arise. And, frankly, we need to double-check whether we're ready to let computer programs get behind the wheel.