IBM open sources Granite models, integrates watsonx.governance with AWS SageMaker

IBM has open sourced its portfolio of Granite large language models under the Apache 2.0 license on Hugging Face and GitHub, outlined a slate of AI ecosystem partnerships and launched a series of assistants and watsonx-powered tools. The upshot is that IBM is looking to do for foundation models what open source did for software development.

The announcements, made at IBM's Think 2024 conference, come as the company has struck a string of partnerships that put it in the middle of the artificial intelligence mix as either a technology provider or a services provider. For instance, IBM and Palo Alto Networks outlined a broad partnership that combines Palo Alto Networks' security platform with IBM's models and consulting. IBM Consulting also partnered with SAP and ServiceNow on generative AI use cases and on building copilots.

IBM also recently named Mohamad Ali Senior Vice President of IBM Consulting. Part of his remit is melding IBM's consulting and AI assets into repeatable enterprise services.

Taken together, these moves position IBM as a partner that helps enterprises scale AI across hybrid cloud environments and platforms.

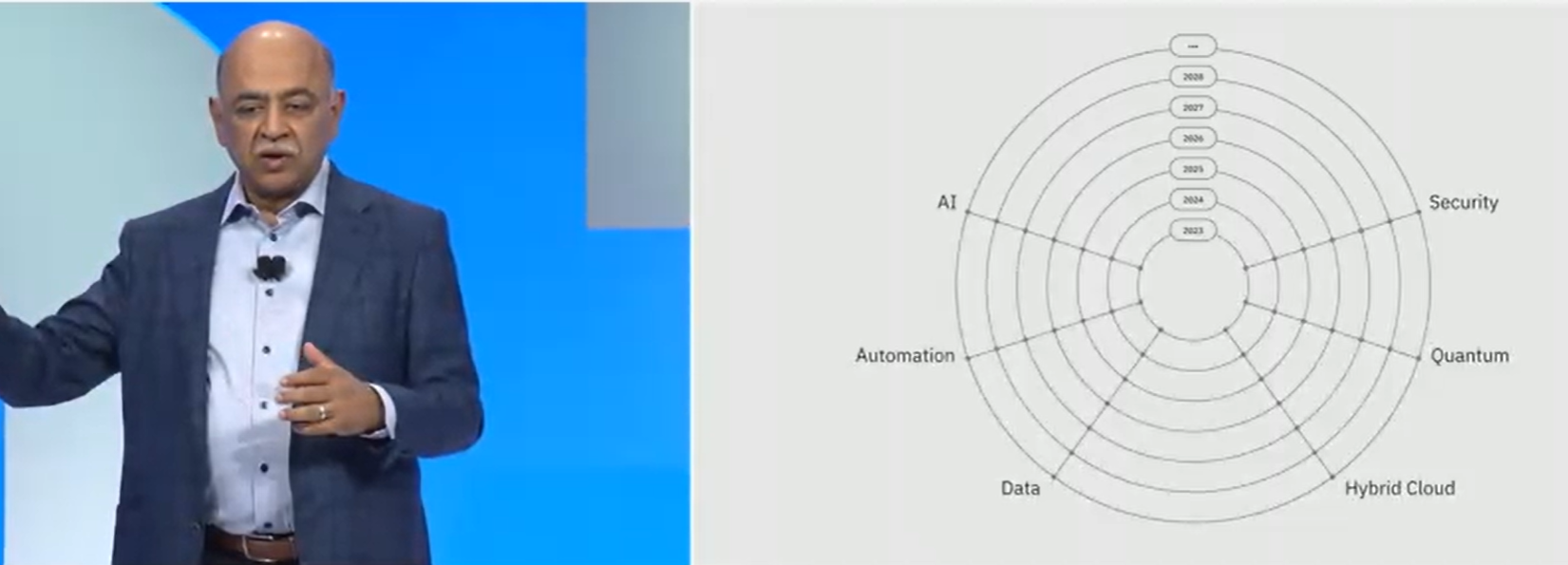

The backdrop of IBM's announcements, according to IBM CEO Arvind Krishna, is scaling AI. During his keynote, he summed up the state of generative AI.

"There's a lot of experimentation that's going on. That's important, but it's insufficient. If you watch every one of the previous technologies, the history has shown you kind of move from innovating a lot to deploying a lot. As you deploy it is where you will get the benefits but in order to deploy, you also need to start moving from experimenting to working at scale. Think about a small project, then you got to think about it in an enterprise scale in a systemic way. How do you begin to expand it? How do you begin to make it have impact across an enterprise or across a government? And that is what is really going to make it come alive."

Here's how IBM sees itself in the AI ecosystem.

And here's a look at what IBM announced at Think 2024:

IBM open sourced its Granite models and made them available under the Apache 2.0 license on Hugging Face and GitHub. The Granite code models range from 3 billion to 34 billion parameters and are suited to code generation, bug fixing, explaining and documenting code, and maintaining repositories.

Krishna said that IBM's move to open source Granite and highlight smaller models has multiple benefits. "We also want to make sure that you can leverage smaller purpose LLM models. Can a small model do as much maybe using 1% of the energy and 1% of the total cost?" he said. "We believe that actually having a smaller model, but that is tuned for a purpose. Rather than one model that does all the things that we can actually make models that are fit for purpose."

IBM launched InstructLab, which aims to make smaller open models competitive with LLMs trained at scale. The general idea is that open-source developers can contribute skills and knowledge to any LLM, iterate on them and merge them back in. Think of InstructLab as applying the open-source software development model to AI models.

Red Hat Enterprise Linux (RHEL) AI will include the open-source Granite models for deployment. RHEL AI will also feature a foundation model runtime and inferencing engine to develop, test and deploy models. RHEL AI will integrate into Red Hat OpenShift AI, a hybrid MLOps platform used by watsonx.ai. Granite LLMs and code models will be supported and indemnified by Red Hat and IBM. Red Hat will also support distribution of InstructLab as part of RHEL AI.

IBM launched IBM Concert, a watsonx-powered tool that provides a single interface for visibility across business applications, clouds, networks and assets. The company also launched three AI assistants built on Granite models, including watsonx Orchestrate Assistant Builder and watsonx Code Assistant for Enterprise Java Applications. For good measure, IBM launched watsonx Assistant for Z, which covers mainframe data and knowledge transfer.

Via a partnership with AWS, IBM is integrating watsonx.governance with Amazon SageMaker to provide governance for AI models. Enterprises will be able to govern, monitor and manage models across platforms.

IBM will indemnify Llama 3 models, add Mistral Large to watsonx and bring Granite models to Salesforce and SAP. IBM watsonx.ai is also now certified to run on Microsoft Azure.

Watsonx is also becoming an engine for IT automation. IBM is building out its generative AI observability tools with Instana, Turbonomic, network automation tools and Apptio's Cloudability Premium. Most of these tools came to IBM through acquisitions.

Constellation Research's take

Constellation Research analyst Holger Mueller said:

"IBM is pushing innovation across its portfolio and of course it is all about AI. The most impactful is probably the open sourcing the IBM Granite models. With all the coding experience and exposure that IBM has, these are some of the best coding LLMs out there, and you can see from the partner momentum, that they are popular. The release of IBM Concert is going to be a major step forward for IBM customers running IBM systems and 3rd party systems. Of note is also the release of Qiskit, the most popular quantum software platform, that IBM has significantly increased in both capabilities and robustness. These three stick out for me as the most long term impact for enterprises."