AWS closes generative AI narrative gap, plays on choice, Trainium, Inferentia chips

Amazon CEO Andy Jassy fleshed out Amazon Web Services' generative AI narrative, providing a multi-level view that also includes a good dose of its own silicon.

When it comes to generative AI, AWS has been caught between Microsoft Azure and Google Cloud Platform, two rivals that have app-layer stories to tell. What's an app-layer story? Think co-pilots galore. That co-pilot-led narrative is dominated by tech vendors that play on the application layer--Microsoft, Google and Salesforce to name a few.

Jassy's argument on Amazon's earnings conference call is that generative AI also means a lot of large language model (LLM) training. That training is why Nvidia is the prom queen at the generative AI dance.

But Jassy outlined two key points. First, Nvidia GPUs won't be the only option for model training. Yes, there will be AMD, but there will also be AWS' custom silicon. Second, to AWS, generative AI consists of three layers, and so far the application layer has received all the buzz. AWS is going to play in the lower layers--compute and models as a service.

He said:

"At the lowest layer is the compute required to train foundational models and do inference or make predictions. Customers are excited by Amazon EC2 P5 instances powered by NVIDIA H100 GPUs to train large models and develop generative AI applications. However, to date, there's only been one viable option in the market for everybody and supply has been scarce.

"That, along with the chip expertise we've built over the last several years, prompted us to start working several years ago on our own custom AI chips for training called Trainium and inference called Inferentia that are on their second versions already and are a very appealing price performance option for customers building and running large language models. We're optimistic that a lot of large language model training and inference will be run on AWS' Trainium and Inferentia chips in the future."

Training models isn't cheap and those with the infrastructure are going to fare well. Not everyone needs a luxury training processor.

The middle layer of the generative AI game will be LLMs as a service. Managed services are the AWS specialty in the cloud. Jassy said:

"We think of the middle layer as being large language models as a service. Stepping back for a second, to develop these large language models, it takes billions of dollars and multiple years to develop. Most companies tell us that they don't want to consume that resource building themselves. Rather, they want access to those large language models, want to customize them with their own data without leaking their proprietary data into the general model, have all the security, privacy and platform features in AWS work with this new enhanced model and then have it all wrapped in a managed service."

Jassy's comments line up with what we've heard repeatedly from CXOs. Some enterprises are looking at private cloud options for training. Some are thinking about going on-premises for training. Others want model choice, including smaller LLMs that are use-case specific. Choice isn't a bad thing, and it's highly likely that not every enterprise is going to play along with OpenAI tolls.

This LLM choice mantra was seen last week when AWS outlined Bedrock at AWS Summit New York.

These first two layers are where AWS will play, said Jassy. "If you think about these first 2 layers I've talked about, what we're doing is democratizing access to generative AI, lowering the cost of training and running models, enabling access to large language model of choice instead of there only being one option," he said.

What about the apps? Jassy said AWS is an enabler with services like CodeWhisperer. "Inside Amazon, every one of our teams is working on building generative AI applications that reinvent and enhance their customers' experience. But while we will build a number of these applications ourselves, most will be built by other companies, and we're optimistic that the largest number of these will be built on AWS," he said.

For good measure, Jassy reiterated what many vendors and customers have been saying. Without a good data strategy, you don't have AI, generative or otherwise. And by the way, AWS is embedded in a bunch of data management plays.

See: JPMorgan Chase: Digital transformation, AI and data strategy sets up generative AI | Goldman Sachs CIO Marco Argenti on AI, data, mental models for disruption

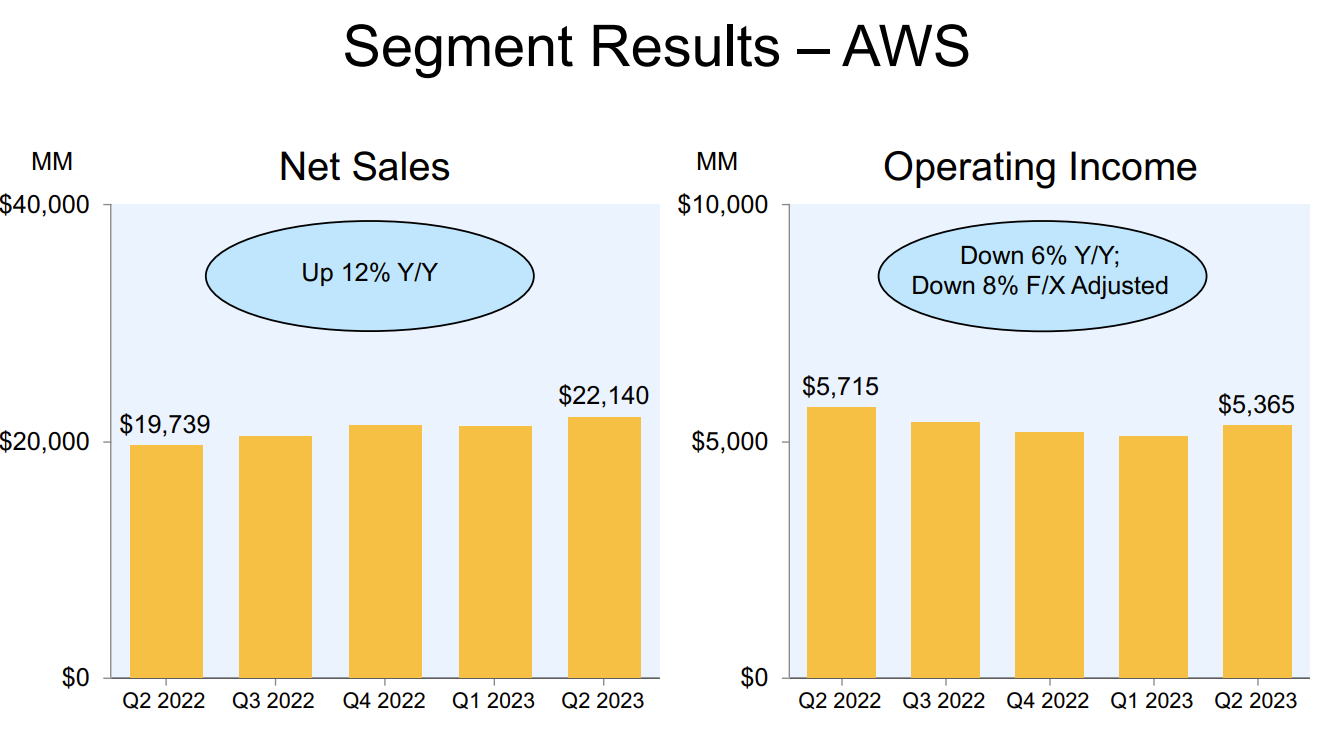

Add it up and AWS laid out its generative AI case and got back into the perception and mindshare game. Yes, AWS growth rates are stable (12% in the second quarter) and that's slower than its rivals. But there's also a much bigger base. AWS is also seeing a lot of cost optimization from customers. In the end, it's likely those generative AI workloads are going to boost AWS, which is "starting to see some good traction with our customers' new volumes."

Naturally, analysts want to know how AWS is going to monetize generative AI. The short answer is AWS is going to see more volume. What's unclear is whether there will be some add-on model. My guess is probably not. For starters, the upcharge for generative AI is an approach for SaaS companies that is going to get tired quickly.

Jassy, however, said it's way too early. "I think we're in the very early stages there. We're a few steps into a marathon in my opinion. I think it's going to be transformative, and I think it's going to transform virtually every customer experience that we know. But I think it's really early," said Jassy. "I think most companies are still figuring out how they want to approach it. They're figuring out how to train models. They want to -- they don't want to build their own very large language models. They want to take other models and customize them, and services like Bedrock enable them to do so. But it's very early. And so, I expect that will be very large, but it will be in the future."

- Enterprise IT vendors see gradual demand improvement, AI-driven buying

- How to think about generative AI, use cases, regulation, ethics and resilience

- CXOs more optimistic, eye growth, optimization, automation, ChatGPT pilots

- How AI workloads will reshape data center demand

- Enterprise tech buyers wary of generative AI hype, security

- How 4 CEOs are approaching generative AI use cases in their companies

Takeaways

Here's what AWS accomplished on Amazon's earnings conference call:

- Provided a more nuanced view of generative AI that plays to its core strengths--developers and enterprise builders. By not going co-pilot-happy, AWS' narrative was almost refreshing.

- Outlined how important custom silicon will be. Nvidia infrastructure isn't cheap and CXOs will be looking for whatever processors get the training job done.

- Put Jassy back into a familiar role: AWS lead singer.

- Gave enterprise buyers a sermon more in line with their current thinking on generative AI.

- Allayed concerns from Wall Street. I had noted the narrative gap among the cloud and generative AI players and how AWS got lost in it. Analysts will be on the bandwagon again based on Amazon's second quarter results. Why does Wall Street matter? CXOs watch a lot of CNBC and Bloomberg too.

Related:

- Shutterstock's generative AI way forward: 6-year training data deal with OpenAI

- Rivian: AI, data power customer experiences

- How JetBlue is leveraging AI, LLMs to be 'most data-driven airline in the world'

- AI is everywhere including your supermarket, homebuilder and soup

- How Home Depot blends art and science of customer experience

- Greystone CIO Niraj Patel on generative AI, creating value, managing vendors

- Accenture's Paul Daugherty: Generative AI today, but watch what's next

- Box CEO Levie on generative AI, productivity and platform neutrality