Anthropic's multi-agent system overview a must read for CIOs

Anthropic outlined how it built multi-agent systems for Claude Research, and CIOs should read and heed its practical advice and the challenges it describes when thinking through AI agents.

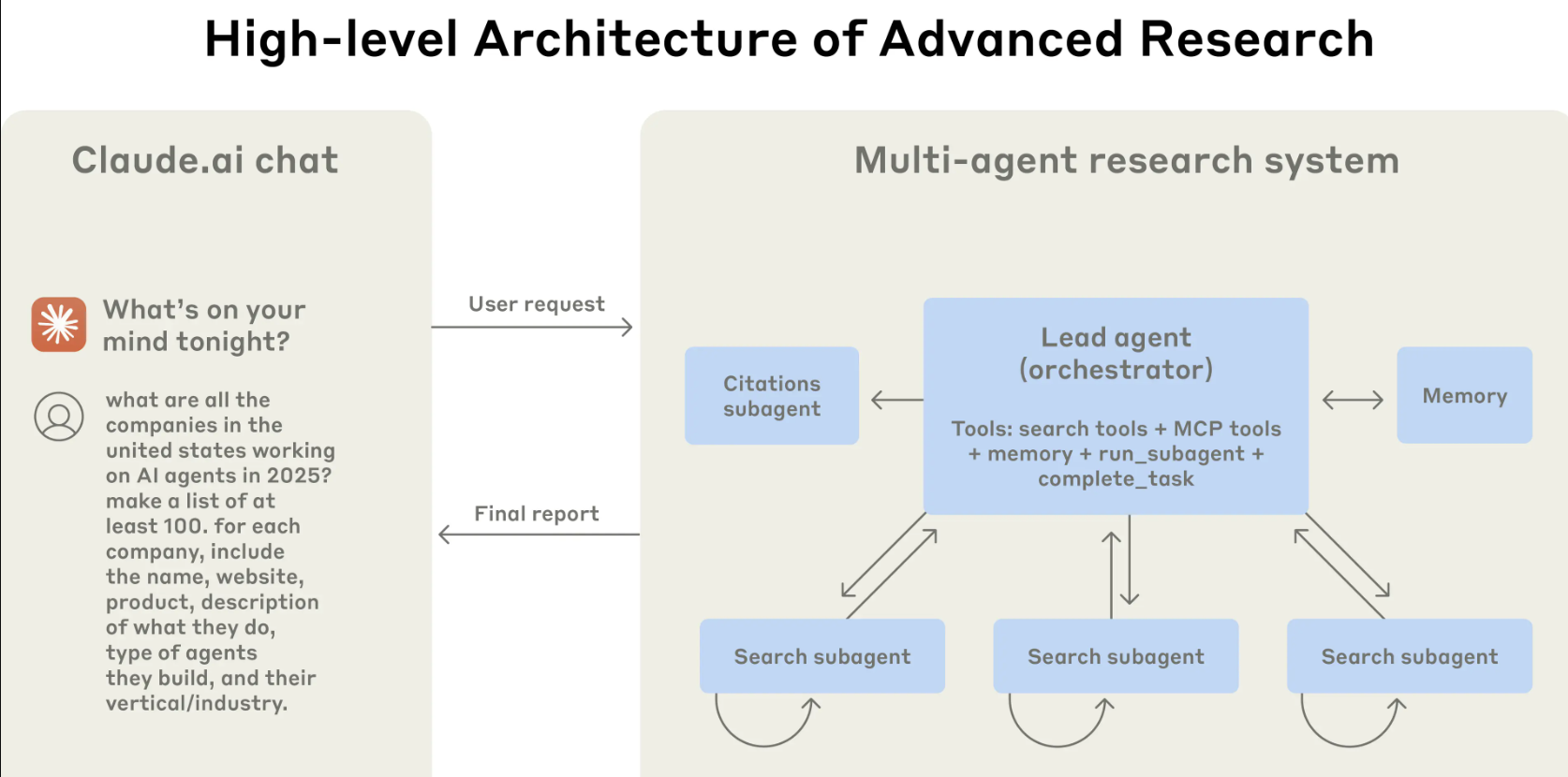

In a post, Anthropic's engineering team laid out how the company built a multi-agent system. There's a lot of practical advice on architecture, on orchestrating multiple large language models (LLMs) so they can collaborate, and on the challenges with reliability and evaluation.

Anthropic created a lead agent as well as subagents. What was most interesting about Anthropic's research were the challenges. Your vendor is likely to tell you that there's an easy button for agentic AI, but Anthropic's post gives you some questions to ask about the architecture behind the marketing.

Here's what CIOs should note:

Multi-agent systems can deliver accurate answers, but can also burn tokens quickly. Agents typically use about 4x more tokens than chat interactions, and multi-agent systems use about 15x more tokens than chats. "For economic viability, multi-agent systems require tasks where the value of the task is high enough to pay for the increased performance," said Anthropic.

Takeaway: If you use multi-agent systems for tasks where a simpler approach would do, you're going to get hit with a big compute bill.
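To make the economics concrete, here is a back-of-the-envelope sketch that applies the rough 4x and 15x multipliers cited above to a single task. The baseline token count and the per-token price are made-up placeholders, not Anthropic figures, so plug in your own numbers.

```python
# Rough per-task cost comparison using the approximate multipliers cited above.
# The baseline token count and the price are hypothetical placeholders.

CHAT_MULTIPLIER = 1
SINGLE_AGENT_MULTIPLIER = 4   # agents: roughly 4x the tokens of a chat
MULTI_AGENT_MULTIPLIER = 15   # multi-agent systems: roughly 15x the tokens of a chat


def estimated_cost(chat_tokens: int, multiplier: int, usd_per_million_tokens: float) -> float:
    """Return the estimated cost in USD of one task."""
    return chat_tokens * multiplier * usd_per_million_tokens / 1_000_000


if __name__ == "__main__":
    baseline_tokens = 2_000  # hypothetical tokens used by one chat exchange
    price = 10.0             # hypothetical blended USD price per million tokens
    modes = [
        ("chat", CHAT_MULTIPLIER),
        ("single agent", SINGLE_AGENT_MULTIPLIER),
        ("multi-agent", MULTI_AGENT_MULTIPLIER),
    ]
    for label, multiplier in modes:
        cost = estimated_cost(baseline_tokens, multiplier, price)
        print(f"{label:>12}: ${cost:.2f} per task")
```

Multiply the per-task figure by expected task volume and compare it with what the task is worth to the business. That is the test Anthropic's quote is pointing at.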

- Agentic AI: Is it really just about UX disruption for now?

- RPA and those older technologies aren’t dead yet

- Lessons from early AI agent efforts so far

- Every vendor wants to be your AI agent orchestrator: Here's how you pick

- Agentic AI: Everything that’s still missing to scale

- BT150 zeitgeist: Agentic AI has to be more than an 'API wearing an agent t-shirt'

Agents can continue even when they get results that are sufficient.

Takeaway: Anthropic said you'll need to "think like your agents" and develop a mental model of the agent to improve prompting.

Agents can "duplicate work, leave gaps, or fail to find necessary information" if they don't have detailed task descriptions.

Takeaway: Lead agents need to give detailed instructions to subagents.
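One way to enforce that discipline is to refuse to dispatch a subagent until its brief is complete. The sketch below is illustrative only: `SubagentTask` and its field names are hypothetical, chosen to cover an objective, an expected output format, the tools to use, and task boundaries. It is not Anthropic's implementation.

```python
from dataclasses import dataclass, field


@dataclass
class SubagentTask:
    """Hypothetical brief a lead agent hands to one subagent."""
    objective: str
    output_format: str
    tools: list[str] = field(default_factory=list)
    boundaries: str = ""

    def missing_fields(self) -> list[str]:
        """List the parts of the brief that are still empty."""
        missing = []
        if not self.objective.strip():
            missing.append("objective")
        if not self.output_format.strip():
            missing.append("output_format")
        if not self.tools:
            missing.append("tools")
        if not self.boundaries.strip():
            missing.append("boundaries")
        return missing

    def to_prompt(self) -> str:
        """Render the brief as prompt text, or fail if it is incomplete."""
        problems = self.missing_fields()
        if problems:
            raise ValueError(f"incomplete task brief, missing: {', '.join(problems)}")
        return "\n".join([
            f"Objective: {self.objective}",
            f"Output format: {self.output_format}",
            f"Tools to use: {', '.join(self.tools)}",
            f"Boundaries: {self.boundaries}",
        ])
```

A vague brief such as `SubagentTask(objective="research chips", output_format="")` fails fast instead of sending a subagent off to duplicate work or leave gaps.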

Agents struggle with judging the appropriate effort for different tasks.

Takeaway: You'll have to embed scaling rules in the prompts for tasks. The lead agent should have guidelines to allocate resources for everything from simple queries to complex tasks.
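As a rough illustration of what embedding scaling rules in a prompt could look like, the sketch below turns a small table of effort tiers into guideline text for the lead agent. The tier names and budgets are illustrative placeholders, not numbers from Anthropic, and `scaling_rules_prompt` is a hypothetical helper.

```python
# Illustrative effort tiers; names and budgets are placeholders to tune per workload.
EFFORT_TIERS = {
    "simple fact-finding": {"subagents": 1, "max_tool_calls_each": 5},
    "direct comparison": {"subagents": 3, "max_tool_calls_each": 12},
    "complex research": {"subagents": 10, "max_tool_calls_each": 15},
}


def scaling_rules_prompt(tiers: dict[str, dict[str, int]]) -> str:
    """Render effort tiers as guideline text to include in the lead agent's prompt."""
    lines = ["Match your effort to the complexity of the query:"]
    for name, budget in tiers.items():
        lines.append(
            f"- {name}: use up to {budget['subagents']} subagent(s), "
            f"with at most {budget['max_tool_calls_each']} tool calls each."
        )
    return "\n".join(lines)


if __name__ == "__main__":
    print(scaling_rules_prompt(EFFORT_TIERS))
```

Keeping the budgets in data rather than buried in prose makes them easy to review and adjust as the bills come in.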

Agents need to use the right tool to be efficient and interfaces between agents and tools are critical. Anthropic used the following example: "An agent searching the web for context that only exists in Slack is doomed from the start."

Takeaway: Without tool descriptions, agents can go down the wrong path. Each tool needs a distinct purpose and a clear description. Anthropic also recommended letting agents improve prompts by diagnosing failures and suggesting improvements.
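A simple guardrail is to lint the tool catalog before agents ever see it. The sketch below is an assumption-laden example: `ToolSpec`, the word-count threshold, and the sample tools are all hypothetical, and a real check would likely also look for overlapping or ambiguous descriptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical entry in an agent's tool catalog."""
    name: str
    description: str


MIN_DESCRIPTION_WORDS = 8  # arbitrary threshold chosen for illustration


def underdescribed_tools(tools: list[ToolSpec]) -> list[str]:
    """Return names of tools whose description is too short to guide an agent."""
    return [
        tool.name
        for tool in tools
        if len(tool.description.split()) < MIN_DESCRIPTION_WORDS
    ]


if __name__ == "__main__":
    catalog = [
        ToolSpec("web_search", "Search the public web for recent, externally published information."),
        ToolSpec("slack_search", "Searches Slack."),
    ]
    print(underdescribed_tools(catalog))
```

Here `slack_search` would be flagged: its description does not tell an agent when internal chat history is the right place to look instead of the web.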

Thinking is a process. Anthropic outlined how it used Claude's extended thinking mode, along with multiple process improvements, to get from the lead agent's thinking to subagent assignments.

Takeaway: While Anthropic was focused on parallel tool calling and creating subagents that can adapt, the biggest lesson here is to think through and understand the process behind how agents do the work.

Minor changes in agentic systems can cascade into large behavioral changes and debugging is difficult (and needs to happen on the fly).

Takeaway: Anthropic created a system that goes beyond standard observability to monitor agent decision patterns and interactions without tracking individual conversations. Observability tools will be critical to any agent platform.
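To show the general idea of watching decision patterns without storing conversation content, here is a minimal sketch that keeps only aggregate counts per agent role and decision type. `DecisionPatternMonitor` and the role and decision labels are hypothetical; this is not Anthropic's tooling.

```python
from collections import Counter


class DecisionPatternMonitor:
    """Counts agent decisions by (role, decision type); stores no message content."""

    def __init__(self) -> None:
        self._counts: Counter = Counter()

    def record(self, agent_role: str, decision: str) -> None:
        """Record one decision, for example ("subagent", "tool:web_search")."""
        self._counts[(agent_role, decision)] += 1

    def rate(self, agent_role: str, decision: str) -> float:
        """Share of this role's recorded decisions that were of the given type."""
        total = sum(n for (role, _), n in self._counts.items() if role == agent_role)
        return self._counts[(agent_role, decision)] / total if total else 0.0


if __name__ == "__main__":
    monitor = DecisionPatternMonitor()
    monitor.record("lead", "spawn_subagent")
    monitor.record("subagent", "tool:web_search")
    monitor.record("subagent", "tool:web_search")
    monitor.record("subagent", "tool:slack_search")
    print(f"{monitor.rate('subagent', 'tool:web_search'):.2f}")
```

A sudden shift in these rates after a prompt or model change is the kind of signal that standard uptime dashboards will not surface.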

Deployments are going to be difficult with multi-agent systems.

Takeaway: Anthropic said it doesn't update agents at the same time because it doesn't want to disrupt operations. How many enterprise outages will we have because bad code brought down autonomous agent operations?
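One common way to avoid updating everything at once is to run old and new versions side by side, pin in-flight sessions to the version they started on, and shift only new sessions gradually. The sketch below illustrates that pattern under stated assumptions; `StaggeredRollout` and its methods are hypothetical names, not a description of Anthropic's deployment system.

```python
import random
from typing import Optional


class StaggeredRollout:
    """Routes new sessions to old or new agent versions; running sessions stay pinned."""

    def __init__(self, old_version: str, new_version: str,
                 new_share: float = 0.0, rng: Optional[random.Random] = None) -> None:
        self.old_version = old_version
        self.new_version = new_version
        self._rng = rng or random.Random()
        self._pinned: dict[str, str] = {}
        self.set_new_share(new_share)

    def set_new_share(self, share: float) -> None:
        """Set the fraction of NEW sessions that should start on the new version."""
        if not 0.0 <= share <= 1.0:
            raise ValueError("share must be between 0 and 1")
        self.new_share = share

    def version_for(self, session_id: str) -> str:
        """Return the version for a session, assigning one on first sight."""
        if session_id not in self._pinned:
            use_new = self._rng.random() < self.new_share
            self._pinned[session_id] = self.new_version if use_new else self.old_version
        return self._pinned[session_id]

    def finish(self, session_id: str) -> None:
        """Forget a completed session so its pin is released."""
        self._pinned.pop(session_id, None)
```

Raising the share in steps, and watching agent behavior at each step, gives you a chance to roll back before a bad change reaches every running agent.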