Databricks launches Mosaic Agent Bricks, Lakeflow Designer, Lakebase

Databricks launched Mosaic Agent Bricks, a workspace for creating production-ready, accurate and cost-efficient AI agents, along with Lakeflow Designer and Lakebase, a transactional database.

Agent Bricks builds on Databricks' Mosaic AI approach to building agent systems that deliver domain-specific results, and it aims to move AI agents into production. Agent Bricks will automatically generate domain-specific synthetic data and task-based benchmarks.

Databricks CEO Ali Ghodsi said Agent Bricks is a "new way of building and deploying AI agents that can reason on your data." Databricks made the announcements as it kicked off its Data + AI Summit in San Francisco.

Databricks said Agent Bricks will help customers build scalable AI agents that don't hallucinate, evaluate what constitutes a good result and build synthetic data sets that mirror customer data. Agents built with Agent Bricks are automatically optimized.


With Agent Bricks, customers will be able to describe a high-level problem, and Agent Bricks will create LLM judges, generate synthetic data and automatically optimize the agent, creating a grounding loop.

Databricks cited AstraZeneca and Hawaiian Electric as early adopters of Agent Bricks that moved from proof of concept to production in minutes rather than days.

By taking the guesswork out of creating production AI agents, Databricks is looking to scale agentic AI as well as drive consumption of its data platform.

Key points about Mosaic Agent Bricks:

- Agent Bricks generates task-specific evaluations and LLM judges to assess quality.

- Agent Bricks creates synthetic data that resembles customer data to supplement learning.

- Agent Bricks uses multiple techniques to optimize agents.

- Customers can balance quality and cost for agent results.

- Use cases for Agent Bricks include information extraction, knowledge assistants, custom LLM agents and multi-agent supervision.

Databricks' announcements landed a week after Snowflake Summit 2025.


A common theme from both Databricks and Snowflake was that data platforms and AI are increasingly connected and that existing database technology was built for a different era. Both Databricks and Snowflake have doubled down on Postgres as a base for new AI applications. The general idea is to get data to AI applications as cheaply and efficiently as possible.

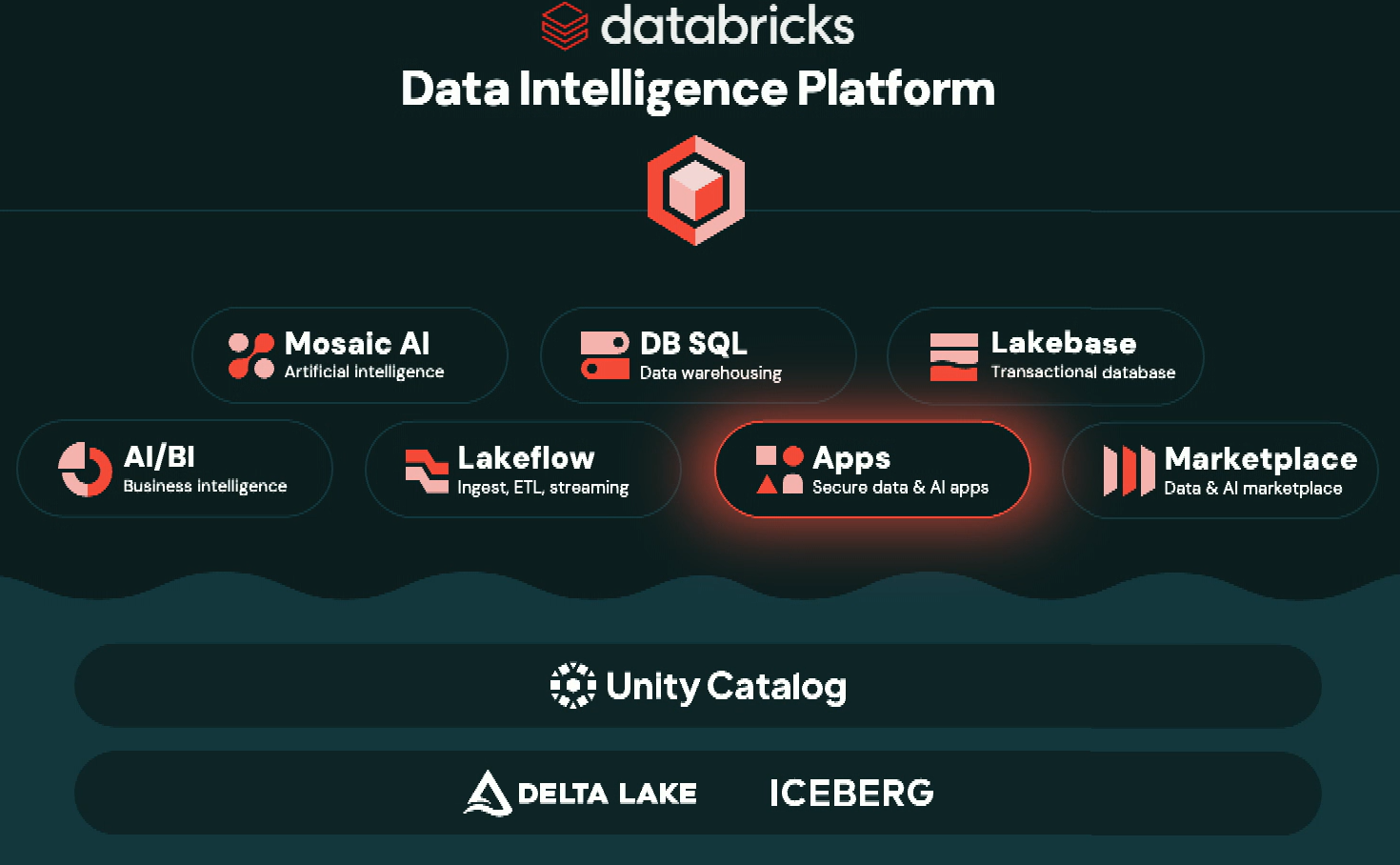

In addition, Databricks is looking to combine data and AI so enterprises can define objectives using natural language while the platform handles the rest of the process: data prep and features; model building with fine-tuning; deployment of tools, retrieval models and agent sharing; evaluation (both automated and human); and governance and modeling. Databricks' big argument is that data intelligence needs to touch every application through analytics, AI and databases.

Databricks also launched Mosaic AI support for serverless GPUs and MLflow 3.0, a platform for managing the AI lifecycle.

Lakeflow Designer

Separately, Databricks launched Lakeflow Designer, a pipeline builder that spans no-code and code-first development so that all builders share a common language.

Lakeflow Designer is backed by Lakeflow, which is now generally available and has no-code connectors that can create pipelines with a single line of SQL. Lakeflow Designer offers no-code ETL at scale, with access control and AI support.

Key items about Lakeflow Designer include:

- Lakeflow Designer has a drag-and-drop UI so business analysts can build pipelines as easily as data engineers.

- Lakeflow Designer is backed by Databricks Lakeflow, Unity Catalog and generative AI features.

- Lakeflow Designer will launch in private preview.

Constellation Research analyst Michael Ni said:

"This isn’t just about scale—it’s about unlocking the 90% of questions that never make it to engineering. From campaign lift tracking to territory planning, Lakeflow Designer lets business teams define and ship data products using no/low-code tools that don’t get thrown away. Lakeflow Designer is the Canva of ETL: instant, visual, AI-assisted—yet under the hood, it’s Spark SQL at machine scale. The business analyst designs, and the data engineers can review and tweak collaboratively with the analyst. The engine industrializes it with full transparency and trust."

In addition, Lakeflow and Lakeflow Designer will draw comparisons with Snowflake's OpenFlow. "Lakeflow and OpenFlow reflect two philosophies: Databricks integrates data engineering into a Spark-native, open orchestration fabric, while Snowflake’s OpenFlow offers declarative workflow control with deep Snowflake-native semantics. One favors flexibility and openness; the other favors consolidation and simplicity," said Ni.

Databricks eyes transactional data with Lakebase

Databricks also announced Lakebase, a transactional database engine that stores data in low-cost data lakes, where AI applications can easily access it.

Lakebase is Databricks' effort to address what databases need to do for AI applications. Databricks argued that Lakebase is designed for AI because of the following characteristics:

- Lakebase separates compute and storage, which enables very low latency, high queries per second and 99.999% uptime.

- Lakebase is built on open source Postgres and supports community extensions.

- Lakebase is built for AI: it launches in less than a second, lets customers pay only for what they use and handles changes well.

- Lakebase runs on a Postgres OLTP engine, shares DNA with Neon, the Postgres company Databricks acquired, and offers fully managed pipelines for data sync.

Databricks also announced the following:

- Lakebridge, a free set of tools aimed at making migrations predictable. Lakebridge features a warehouse profiler, code converter, data migration and validation, with support for more than 20 legacy data warehouses.

- Databricks Apps, which are governed data intelligence apps on Databricks, are generally available.

- Unity Catalog Metrics, which lets enterprises define metrics in one place and use them in dashboards and notebooks across the organization. Unity Catalog Metrics also works with AI/BI Genie to ground answers to novel questions in certified semantics.

- Databricks One, a version of Databricks designed for business teams, which is in public preview. Databricks One offers an intuitive user experience, simple administration and Unity Catalog governance.

- An updated Databricks Community Edition that includes most features, so developers can learn and experiment with data and AI use cases. Databricks is also spending $100 million on education programs.

Ni added:

"We’re entering a new era where data clouds and hyperscalers are racing to establish themselves as the dominant platform for AI-driven decision-making in their respective markets. The competition is no longer about warehouse performance—it’s about who owns the semantic layer, who governs the agent lifecycle, and who enables the next-gen data app ecosystem. With Lakebase, Agent Bricks, and Unity Catalog metrics, Databricks is asserting that ownership more broadly than ever before."