Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…

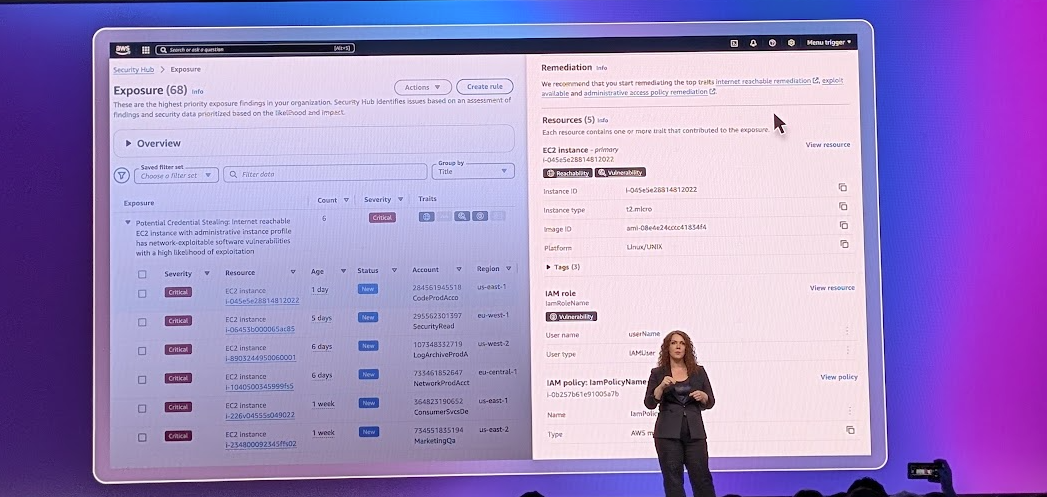

For Amazon Web Services customers, the line between security services and the rest of the cloud vendor's portfolio covering storage, data, AI and compute tends to blur.

The primary takeaway from re:Inforce 2025 is that AWS isn't trying to make money in security. Yes, AWS sells standalone security products like GuardDuty, Inspector and Security Hub, but many of its security additions are built into existing services.

What's clear is that AWS has a lot of visibility into its platform data and can handle emerging threats with AI. Whether a security innovation turns into a separate product or a handy feature in an existing service, say Amazon Bedrock, falls into the to-be-determined category.

These customer vignettes from AWS re:Inforce highlight the cloud provider's security strategy, which is more about providing building blocks with security built in than about making security the main show.

Comcast: Three North Stars

Noopur Davis, Comcast's Global CISO and Chief Product Privacy Officer, said the company has been an AWS customer for six years. Comcast has adopted AWS frameworks and used the cloud provider's building blocks to enable its developers to build in cybersecurity throughout the software development lifecycle.

"We take a long-term view of security. Our first North Star is privacy, followed by data security and operate on zero trust," said Davis, who oversees a team of 2,000 cybersecurity pros.

Davis added that Comcast began integrating generative AI in April 2023 and had to develop frameworks for models, the data feeding models and guardrails.

Comcast has developed an AI workbench that will build in security across the software development lifecycle. According to Davis, security and data practices are commingled and part of a broader transformation effort.

Davis outlined the following security projects:

- The company is using AI and its data for threat modeling, pen testing and code remediation.

- Comcast is integrating security into its development workbenches.

- AI bots are used for governance, regulatory and compliance processes.

- Comcast has developed an AI-enabled risk engine across its platform.

For Comcast, using AWS for data pipelines and modeling has improved the company's ability to use AI for cybersecurity.

Comcast is a partner as well as a customer. At re:Invent 2024, the cable giant said it was shifting its 5G wireless core services to AWS. In 2023, Comcast took its internally developed cybersecurity data fabric commercial with AWS and Snowflake via a product called DataBee.

DataBee, which uses AWS compute and storage, was the first product from Comcast Technology Solutions' new cybersecurity division, formed in 2022.

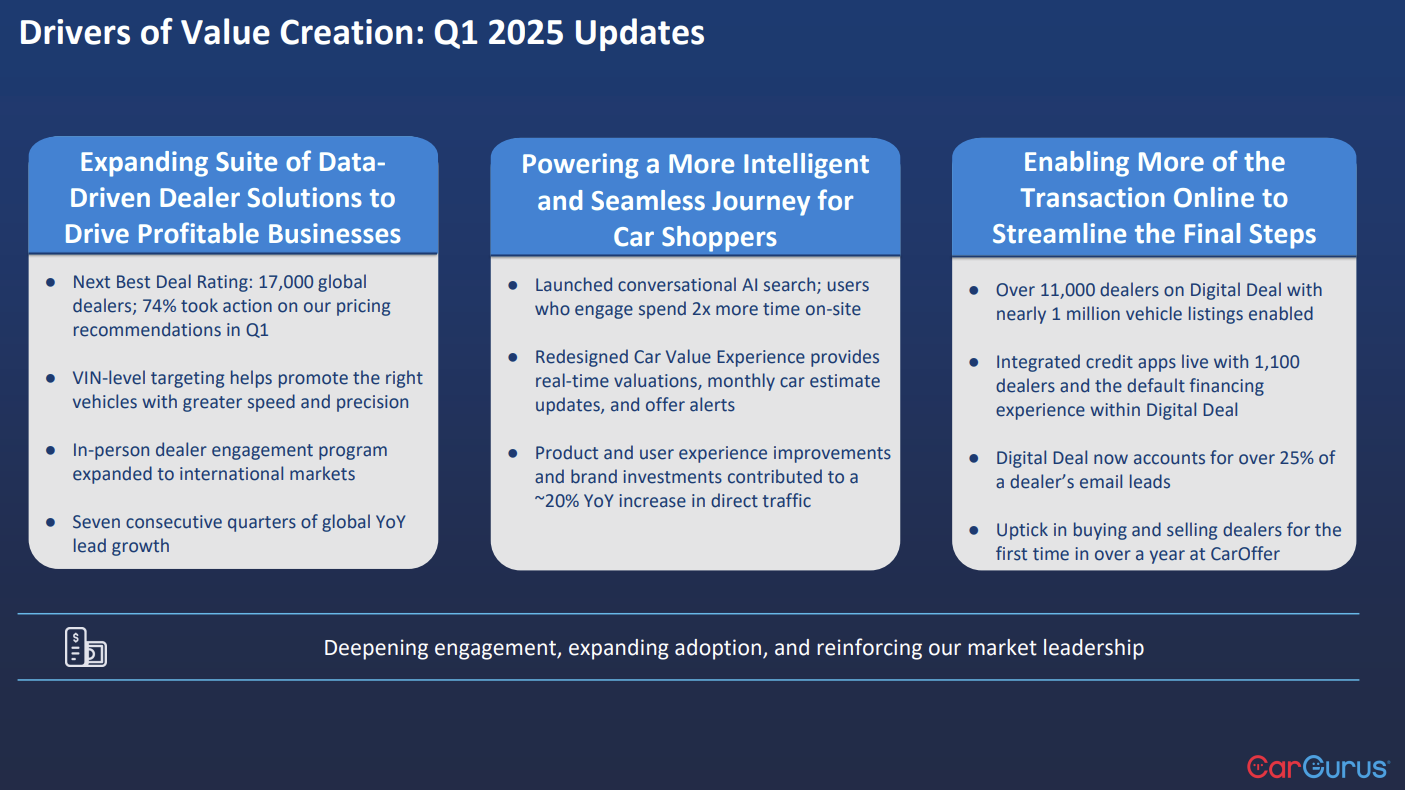

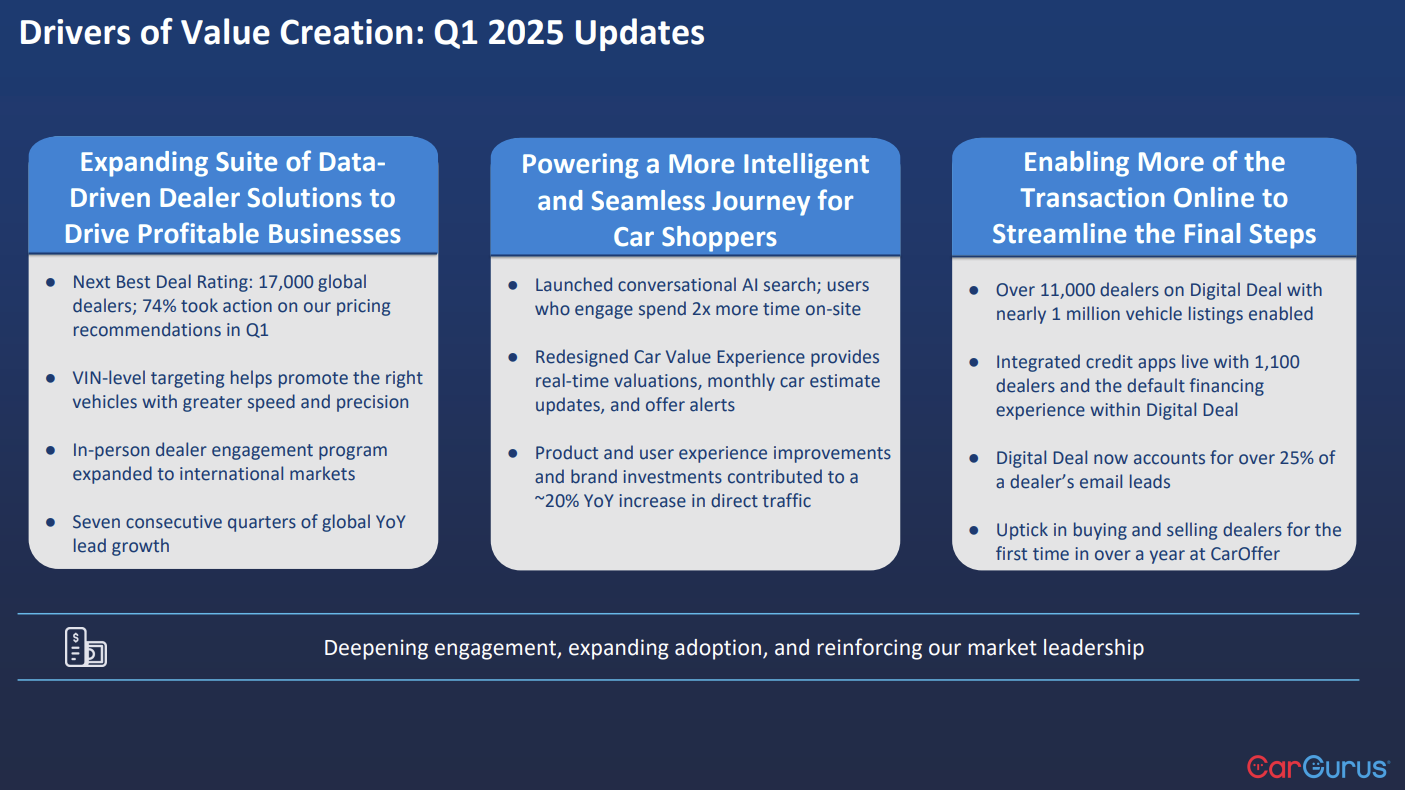

CarGurus: Data protection, data lineage, AI governance

Kelly Haydu, VP Information Security, Technology & Enterprise Applications at CarGurus, said the auto marketplace is powered by data. "Data is the fabric of a company," said Haydu, who added that securing that data requires understanding both incoming and outgoing data flows.

CarGurus was an early adopter of generative AI and began incorporating the technology two-and-a-half years ago. As a result, CarGurus had to develop AI risk management and governance strategies early.

In 2022, CarGurus moved to AWS as its preferred cloud provider. To secure data, CarGurus relies on Cyera, a startup focused on data protection. CarGurus has also created a bevy of security frameworks, including some that evaluate emerging technologies as markets change.

Haydu said:

"We have a great handle on our data today, but as AI starts to come on more and more I need to know when the piece of technology is introduced. When the machines are talking to the machines and the agents are talking to the agents, we needed to have an automated way to understand the data sources from inception to at rest in our databases, and that's called data lineage. Understanding the life cycle of that data will continue to be important as more data comes into our ecosystem in the upcoming years."
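To make the idea of data lineage concrete, here is a minimal Python sketch, not a description of CarGurus' or Cyera's actual tooling. It assumes lineage is recorded as simple "source feeds target" links and shows how the original sources of a stored dataset could be traced back automatically. The class `LineageGraph` and the dataset names (`dealer_feed`, `partner_api`, `listings_raw`, `listings_db`) are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


class LineageGraph:
    """Hypothetical in-memory lineage record: which datasets feed which."""

    def __init__(self):
        # Maps each dataset to the (source, step) pairs it was derived from.
        self._parents = defaultdict(set)

    def record(self, source, target, step):
        """Note that `target` was produced from `source` by `step`."""
        self._parents[target].add((source, step))

    def origins(self, dataset):
        """Return the datasets with no recorded parents that feed `dataset`."""
        roots, stack, seen = set(), [dataset], set()
        while stack:
            node = stack.pop()
            if node in seen:
                continue
            seen.add(node)
            parents = self._parents.get(node)
            if not parents:
                # Nothing upstream was recorded, so treat this as an origin.
                roots.add(node)
                continue
            for source, _step in parents:
                stack.append(source)
        return roots


# Illustrative data only: two inbound feeds land in a raw table,
# which is then cleaned and loaded into a database at rest.
graph = LineageGraph()
graph.record("dealer_feed", "listings_raw", "ingest")
graph.record("partner_api", "listings_raw", "ingest")
graph.record("listings_raw", "listings_db", "clean_and_load")

print(sorted(graph.origins("listings_db")))  # ['dealer_feed', 'partner_api']
```

In practice, a production lineage system would also capture timestamps, owners and the agents or models that touched the data. The sketch only shows the core idea of tracing data from inception to rest.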

Going forward, CarGurus will continue to evolve as the company expects to ingest more data and leverage AI and automation as it scales. Haydu said data security has four evolving categories:

- AI and automation. "What we're going to see in the future is more dynamic, real-time analysis of the data coming in from platforms," said Haydu. "It's going to be about contextual analysis with machines versus having a scan that has to happen."

- Integration across data fabrics.

- Risk management. Haydu said risk management is going to focus on individuals and their access, as well as environments that hold sensitive data.

- Zero trust architecture, which will be adopted into platforms.

Santander: Addressing risk, security frameworks, regulators

Jamie Nash, Head of Technology and Operations Risk at Santander, implemented a digital banking platform called Open Bank in 18 months with AWS and Deloitte.

She said it's critical to have a security framework that can be shared with regulators. Nash added that data sovereignty remains a big concern for financial institutions.

Nash said Santander used the AWS Risk and Compliance Navigator Framework as the primary blueprint. Santander had an existing risk framework that was adapted for cloud computing with the help of AWS and Deloitte. The move mapped traditional banking security controls to their cloud equivalents.

Santander addressed data sovereignty by doing the following:

- Keeping all customer data within the US.

- Architecting Open Bank so it could maintain compliance.

- Keeping existing customer reference data on-prem instead of moving to the cloud.

- Focusing Open Bank on new customers to avoid data migration issues.

As for regulators, Nash said Santander communicated early about Open Bank to speed up approvals.

"The biggest concern was the regulatory component and it was the most daunting aspect, because we are obviously very highly regulated," said Nash. "We don't see a lot of banks moving their full tech stack in the cloud in one fell swoop. We had to explain and educate our regulators."
