Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for the Fox School of Business's Institute for Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


Security isn't a barrier to today's AI agent use cases, since the key tools and techniques are already in place. The grand vision of cross-platform AI agents that hop across data stores and processes, however, will require more work on standards and plumbing.

That's the big takeaway from AWS at its annual security conference in Philadelphia. The conference was refreshingly free of the AI agent-washing we've grown used to. After all, by now we're accustomed to AI agent fairy tales from most vendors.

Here's a look at AWS re:Inforce 2025 and my key takeaways.

The AWS security story isn't easy to tell

AWS has multiple security offerings but doesn't try to make money from them. Security isn't a business for AWS so much as a base layer for everything it does.

The company has started to roll up security building blocks into suites and services, but is primarily focused on the AWS environment. That reality means that the storyline of AWS vs. CrowdStrike vs. Palo Alto Networks vs. Zscaler doesn't exist.

AWS revenue for cybersecurity? Finding that number is almost impossible since security is more feature than product at AWS.

It's hard to even play buzzword bingo for AWS. The analysts at AWS re:Inforce 2025 were all trying to walk away with a grand plan to secure agentic AI. What we got instead was AWS's confidence that today's AI agent use cases can be secured with existing identity and access management technologies. Why? AI agent architecture rhymes with microservice architecture, which is already secured at multiple points. And AWS already gives every compute resource an ID anyway.

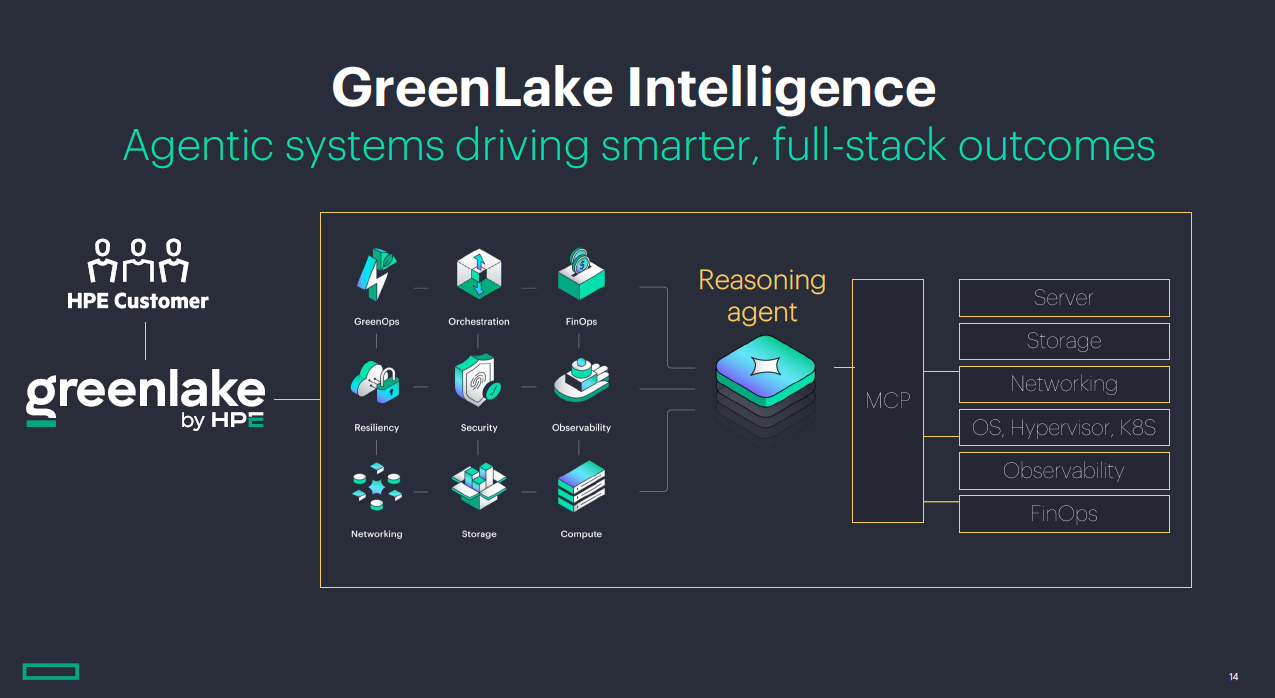

Standards such as Model Context Protocol (MCP) still need more security work, and the multi-system, multi-cloud, multi-process vision of agentic AI is still being baked.

Simply put, the cybersecurity narrative we're all used to doesn't quite apply to AWS. Microsoft has a security business and a product-focused view. Google Cloud has Mandiant, security products and a pending Wiz acquisition to grow revenue. Cybersecurity vendors talk agentic AI, platformization and expanding total addressable markets.

AWS' narrative is like this: Security is in the design of everything we do, so developers have building blocks to use. In many cases, security is just a feature. We're not trying to make money on security. We can do a better job of making security services easier for customers to consume, but the parts are there or soon will be.

AWS Chief Information Security Officer Amy Herzog said "you can't just separate genAI from the rest of the conversation." She continued: "The playbook is the same as always. What are you trying to accomplish? There are definitely technical challenges that we are starting to get ahead where we might be in a few years. But I think that's a different conversation."

AWS is making its security services more consumable

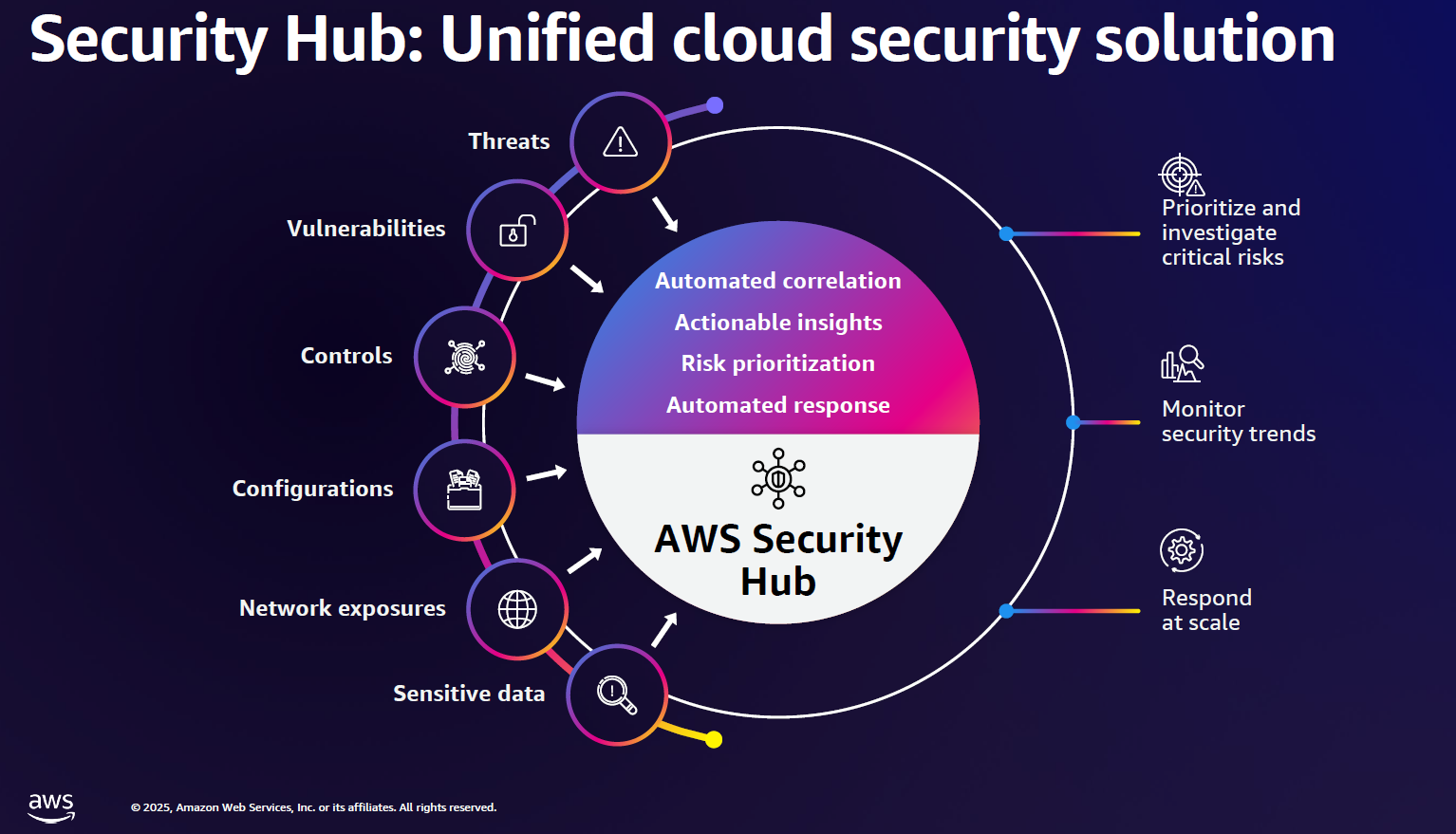

AWS has a sprawling set of security building blocks, but the news drop from AWS re:Inforce 2025 highlights an emerging theme from the company: It is rolling up its services into suites.

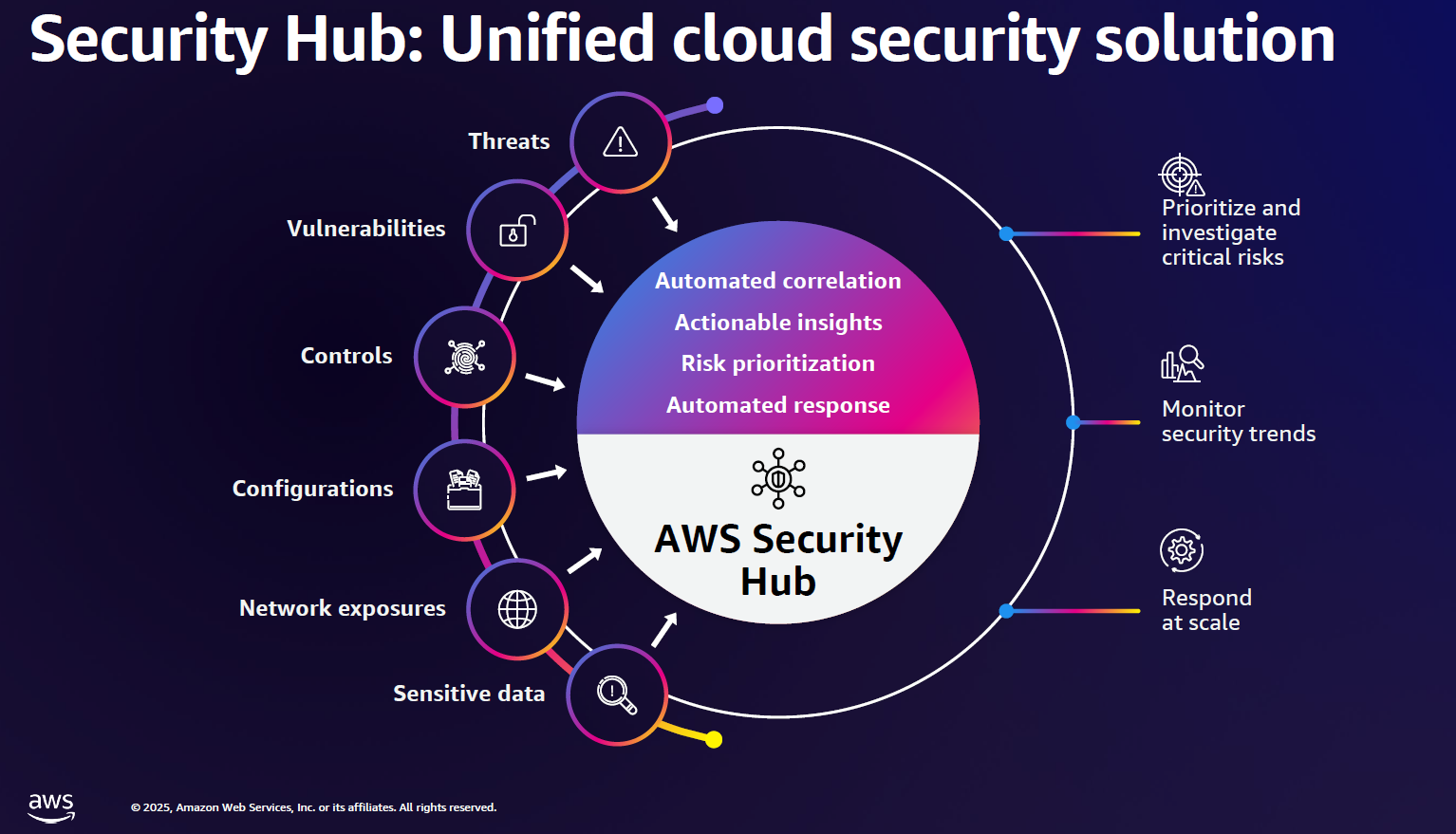

The launches tied to Security Hub, AWS IAM Access Analyzer, GuardDuty and AWS Shield are examples of making it easier to use various services in one place. "Security Hub combines signals from across AWS security services and then transforms them into actionable insights, helping you respond at scale," said Herzog.

This packaging of disparate yet useful services across AWS picks up on a theme from AWS re:Invent 2024, where the company unified data, analytics and AI under SageMaker. Amazon QuickSight and Amazon Q Business were also combined for easier use.

Simply put, AWS keeps small teams that innovate, create new products, and run and gun, while packaging what they build for easier consumption. It's an interesting balancing act.

Securing genAI, AI agents: It's all just security

In many ways, analysts at AWS re:Inforce 2025 were hunting for a cybersecurity easy button for agentic AI. AWS didn't take the bait, and didn't need to, even though analysts weren't pleased. The reality is that the industry can secure today's AI agent use cases with existing tools, but the cross-industry, multi-vendor, multi-cloud, multi-platform, multi-process army of autonomous agents carrying out work doesn't have open security standards yet.

Eric Brandwine, VP and Distinguished Engineer at Amazon, said: "There are absolutely interesting novel attacks against LLMs, and some of these have been applied to commercially deployed services. But the vast majority of LLM problems that have been reported are just traditional security problems with LLM products. You've got to get the fundamentals right. You've got to pay attention to traditional deterministic security."

Karen Haberkorn, Director of Product Management for AWS Identity, Directory and Access Services, said initial AI agent use cases can be secured with existing identity offerings. "An AI agent is a piece of software that needs to authenticate to act on behalf of a user. We need to understand your permission, the agent's permissions and ensure the only interactions user are at the intersection," said Haberkorn. "It's a paved path."
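Haberkorn's "intersection" maps onto a familiar authorization rule: when an agent acts for a user, an action should go through only if both the user and the agent are permitted to take it. Here is a minimal Python sketch, assuming permissions are modeled as plain sets of action strings. The function names and the IAM-style action strings are hypothetical illustrations, not an AWS API.

```python
# Hypothetical sketch: an agent acting on behalf of a user may only perform
# actions that BOTH principals are permitted to perform. The action strings
# are illustrative labels; this is not real AWS IAM policy evaluation.


def effective_permissions(user_perms: set[str], agent_perms: set[str]) -> set[str]:
    """Return the actions allowed when the agent acts for the user (set intersection)."""
    return user_perms & agent_perms


def is_allowed(action: str, user_perms: set[str], agent_perms: set[str]) -> bool:
    """Check one requested action against the user/agent intersection."""
    return action in effective_permissions(user_perms, agent_perms)


if __name__ == "__main__":
    user = {"s3:GetObject", "s3:PutObject", "dynamodb:Query"}
    agent = {"s3:GetObject", "dynamodb:Query", "lambda:InvokeFunction"}

    print(sorted(effective_permissions(user, agent)))  # ['dynamodb:Query', 's3:GetObject']
    print(is_allowed("s3:PutObject", user, agent))  # False: the agent lacks it
    print(is_allowed("lambda:InvokeFunction", user, agent))  # False: the user lacks it
```

Real IAM evaluation also weighs explicit denies, resource-based policies and session policies; the intersection above captures only the core idea in the quote.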

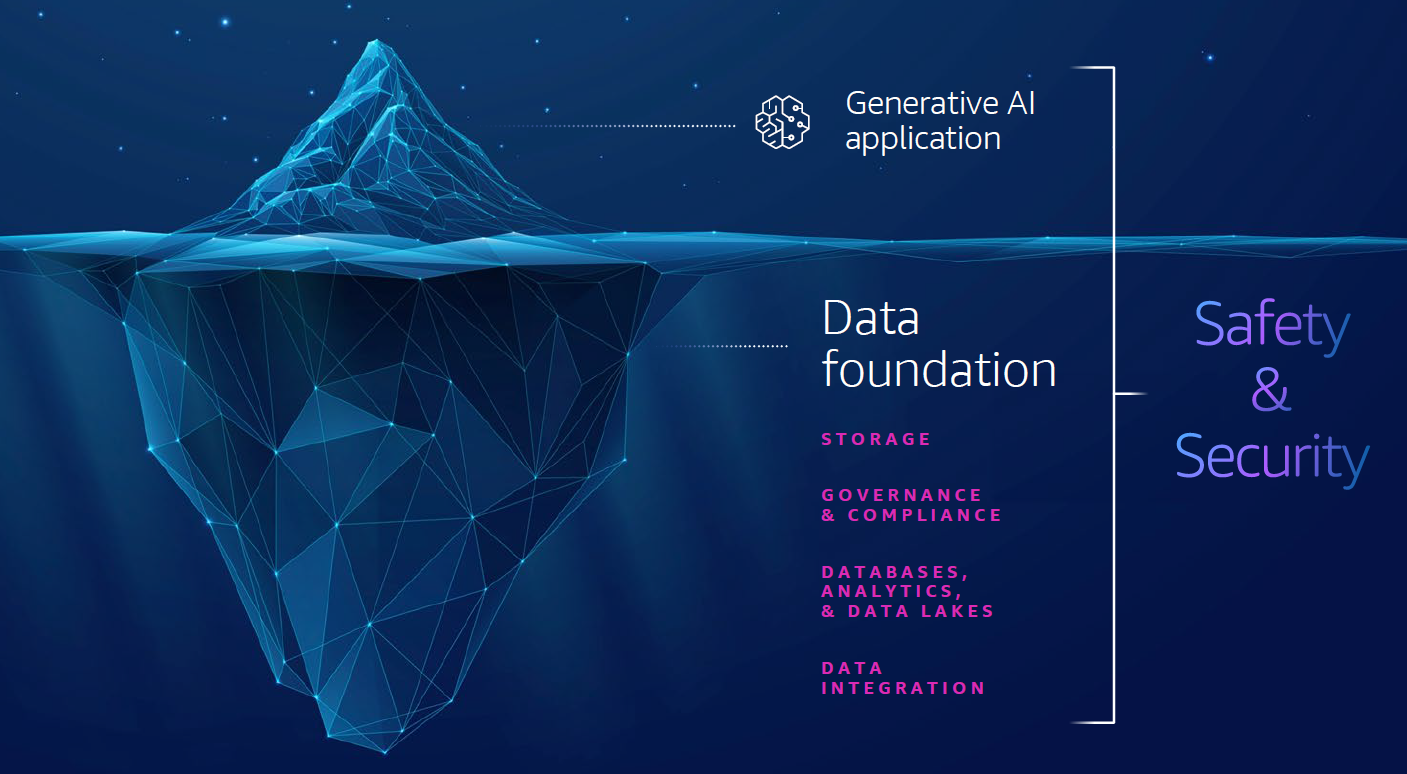

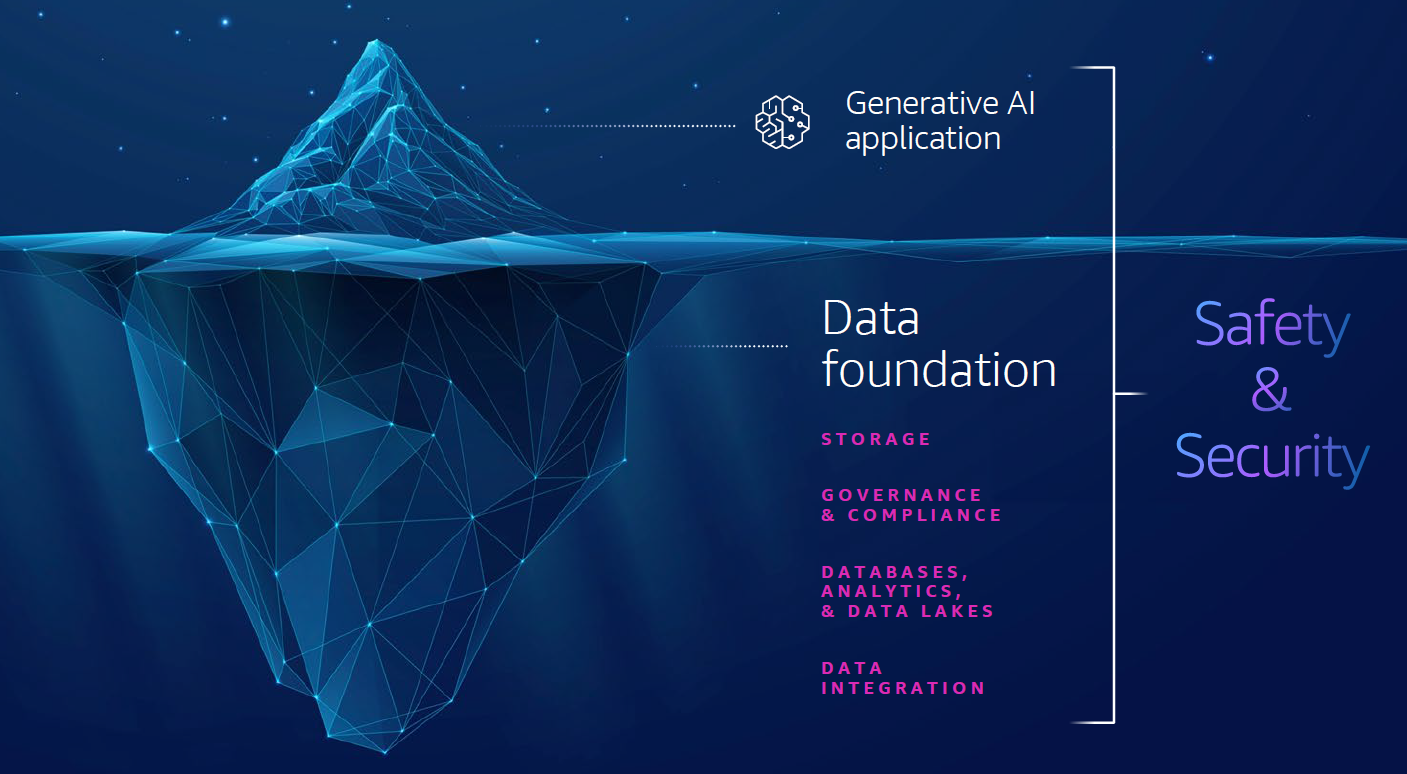

That refrain was heard in multiple presentations. Yes, there's securing AI. And there's using AI for security. But for the most part, it's all security. And specifically, it's data security.

"We're seeing a large interest in conversations and adoption around agents. We're seeing at least like 15% or so adoption of agents. So far, we see that number continuing to explode as we evolve, but our vision is to be the most trusted in performance and deploy the most trusted performance agents in the world," said Matt Saner, Senior Manager, Security Specialists at AWS. "We're working backwards from what the customers are telling us they want to use, and that's what we're working to enable for them. Everything we build is integrated and empowered by the underpinnings of our native security services."

Quint Van Deman, Senior Principal, Office of the CISO at AWS Security, said agentic AI is certainly evolving, but all the primitives you'd rely on for security are already in place. "A human is delegating to a service or agent and talking to another service with trusted identity," said Van Deman. "The details are being worked out, but building these things feels very familiar. Agents have identities."

Van Deman said AWS gives an identity to every underlying piece of compute and that could be a way forward to credential agent workflows. Current standards can also be leveraged. "This feels like a new iteration of an old problem and doesn't strike me as net new," he said.

Haberkorn did note that AWS can do a better job packaging security for agent builders "so they don't have to go looking for it."

Where security and agentic AI will become tricky is when there's a constellation of agents in multiple places. More standards and guardrails will be needed to ensure agents can securely connect and collaborate. Model Context Protocol (MCP) will add security standards, and AWS and other vendors are working on the issue individually. Those efforts will have to converge if the autonomous AI agent dream is going to play out.

Haberkorn said there's a lot of plumbing work that must happen to bring identity to cross-platform AI agents. For instance, microservices can only do what the code allows them to do. Agents are more creative and will need guardrails.
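Haberkorn's contrast can be made concrete. A microservice's behavior is fixed by its code, while an agent picks its own next action, so one common pattern (an assumption here, not something AWS described) is a deterministic check that every action an agent proposes must pass before it runs. Here is a minimal Python sketch; `ToolCall`, `ALLOWED_TOOLS`, `guardrail_check` and the tool names are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ToolCall:
    """An action proposed by an agent: a tool name plus its arguments."""
    tool: str
    args: dict[str, Any] = field(default_factory=dict)


# Hypothetical policy: the only tools the agent may call, each paired with a
# deterministic validator for its arguments. Anything not listed is denied.
ALLOWED_TOOLS: dict[str, Callable[[dict[str, Any]], bool]] = {
    "read_ticket": lambda args: isinstance(args.get("ticket_id"), int),
    "send_email": lambda args: str(args.get("to", "")).endswith("@example.com"),
}


def guardrail_check(call: ToolCall) -> bool:
    """Allow a call only if the tool is allowlisted and its arguments validate."""
    validator = ALLOWED_TOOLS.get(call.tool)
    return validator is not None and bool(validator(call.args))


if __name__ == "__main__":
    print(guardrail_check(ToolCall("read_ticket", {"ticket_id": 42})))  # True
    print(guardrail_check(ToolCall("send_email", {"to": "x@attacker.test"})))  # False
    print(guardrail_check(ToolCall("drop_table", {"name": "users"})))  # False: not allowlisted
```

Default-deny is the design choice that matters here: the agent can be as "creative" as it likes in what it proposes, but only pre-approved actions with valid arguments get through.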

"The use cases today are just the beginning of the journey," said Haberkorn. Software development use cases for agents, including Q Developer and Q Transformation, will likely inform future efforts.

"Shift left"

At AWS re:Inforce 2025, the term "shift left" was mentioned dozens of times. The phrase was uttered so often that I thought we were in one of those "super" moments, when every word ever said would have "super" in front of it for years.

I found shift left annoying after a while, especially since its meaning was vague beyond broad developer-speak. And since re:Inforce was in Philly, I found shift left to be as undefined as "Jawn," which I still don't follow even though I'm a native.

Technically, shift left refers to a principle of integrating security, testing and quality assurance earlier in software development. Often, these practices come in at the end of the development process.

In the context of developers and security, AWS' penchant for shift left makes sense. The term has appeared in other tech keynotes and GitLab's most recent earnings call. The big question now is whether shift left becomes a cultural reference. I'm super curious to see how this phrase turns out and happy to double click on it later. See what I did there?
