iPaaS Primer: How the Integration Platform as a Service is Evolving

iPaaS vendors are filling out their capabilities with API management, workflow automation, AI/ML, and, on the cutting edge, GenAI.

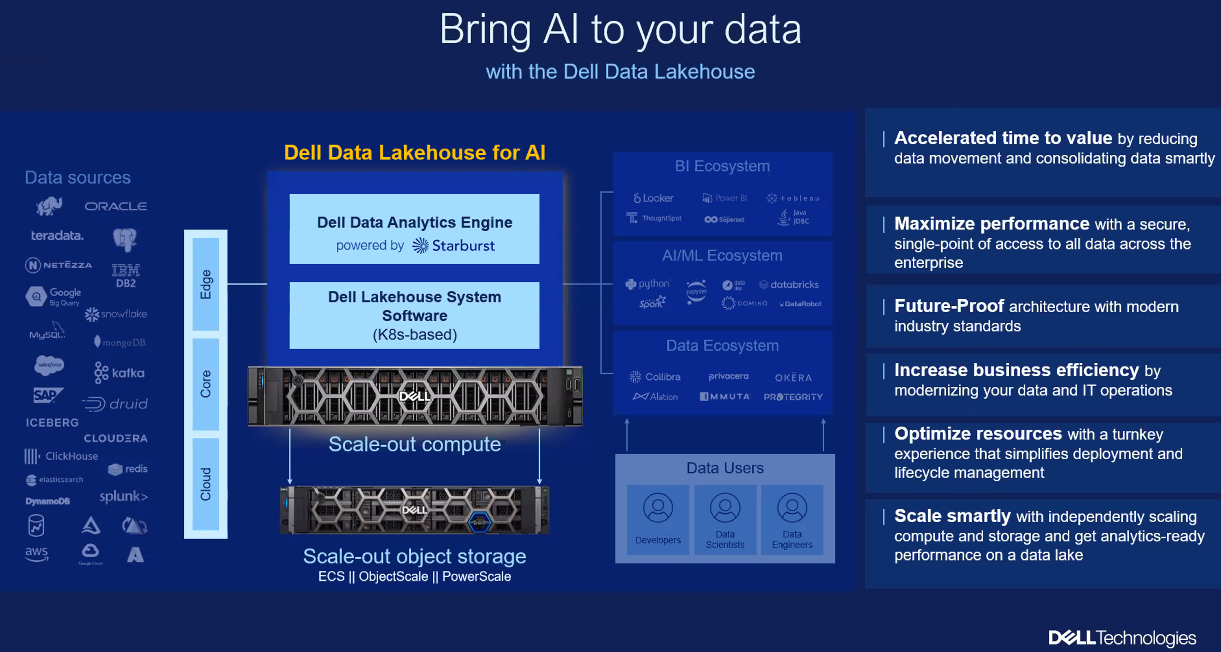

I’ve been covering the path from data to decisions for nearly nine years here at Constellation Research, and it’s a path that invariably starts with integration – integrating data sources and data-generating applications so organizations can connect business processes, gain insight, make decisions, and act. With the steady rise of the cloud over these last nine years, the integration platform as a service (iPaaS) has come to the fore. Here’s a closer look at the latest trends in iPaaS, which is one of the three core markets I cover, along with analytical data platforms (data lakes, data warehouses and lakehouses), analytics/BI and citizen data science capabilities including artificial intelligence (AI), machine learning (ML) and generative AI (GenAI).

iPaaS have emerged as the cloud-based platforms for connecting databases, applications and mission-critical systems both in the cloud and from on-premises environments to the cloud. It’s not just about connecting sources to targets, as in the batch-oriented extract/transform/load (ETL) days of yore. Integration is increasingly a two-way street, with updates and data streams sent to AI models, source systems, automated business processes, and data platforms.

iPaaS have helped organizations move on from brittle, hard-coded, point-to-point integrations. The iPaaS becomes the consistent intermediary between points of integration, facilitated by the platform’s hundreds of out-of-the-box connectors to popular apps and data sources (all of which are maintained by the vendor). The work of connecting sources and systems becomes much more accessible to non-IT types by way of drag-and-drop and point-and-click interfaces. What’s more, the components of integrations created with the iPaaS are modular and can be reused to quickly assemble new integrations. When systems change, components can be quickly updated across all integrations in which they are used, helping teams work faster and be more productive.
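To make the point-to-point versus intermediary contrast concrete, here’s a minimal sketch that counts the links each approach needs and models reusable components as shared objects. The `Connector` and `Integration` classes are hypothetical stand-ins, not any vendor’s data model, and real iPaaS products version and redeploy shared components in their own ways.

```python
from dataclasses import dataclass, field


def point_to_point_links(n: int) -> int:
    """Every pair of systems gets its own hard-coded integration."""
    return n * (n - 1) // 2


def hub_links(n: int) -> int:
    """Every system connects once to the iPaaS acting as intermediary."""
    return n


@dataclass
class Connector:
    # Hypothetical reusable component, e.g. a connector to a CRM system.
    name: str
    api_version: str


@dataclass
class Integration:
    # Hypothetical integration flow assembled from shared components.
    name: str
    steps: list = field(default_factory=list)


crm = Connector("crm", "v1")
erp = Connector("erp", "v3")
flows = [
    Integration("crm_to_erp", [crm, erp]),
    Integration("crm_to_warehouse", [crm]),
]

# Both flows hold a reference to the same Connector object, so a single
# update (say, after the CRM vendor changes its API) reaches every flow.
crm.api_version = "v2"

if __name__ == "__main__":
    for n in (5, 10, 20):
        print(f"{n} systems: {point_to_point_links(n)} point-to-point links vs {hub_links(n)} via the hub")
    print([s.api_version for f in flows for s in f.steps if s.name == "crm"])  # ['v2', 'v2']
```

With 20 systems, point-to-point wiring needs 190 separate integrations while the hub needs 20 connections. That quadratic growth is a large part of why point-to-point estates become brittle.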

When the iPaaS emerged more than a decade ago, vendors typically came out of the data-integration or application-integration arena, but what Constellation calls a next-generation iPaaS has to be able to do it all. Many iPaaS vendors also address business-to-business integration and the electronic data interchange (EDI) requirements seen in supply chain environments. In addition to offering hundreds of prebuilt connectors and templates for common integration flows, iPaaS typically provide monitoring, alerting and debugging capabilities to keep tabs on and troubleshoot integrations, pipelines and jobs.

As detailed below, the main areas where iPaaS vendors are stepping up are:

API management. Connecting cloud apps and data sources is all about using application programming interfaces (APIs), which abstract away complexity and promote agility and flexibility. Unfortunately, APIs also introduce a new source of complexity in the form of API sprawl. Here’s where API management capabilities come in. iPaaS vendors are stepping up with (1) API lifecycle management capabilities, (2) unified control planes for wrangling all those APIs, and (3) governance frameworks to ensure that APIs are tracked and managed.
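For a feel of how lifecycle management, a single control plane and governance fit together, here’s a toy API catalog. Everything in it is an assumption for illustration: the `Stage` lifecycle, the allowed transitions and the “every API needs an owner” rule are hypothetical policies, not a description of any product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, List, Optional, Tuple


class Stage(Enum):
    DESIGN = "design"
    PUBLISHED = "published"
    DEPRECATED = "deprecated"
    RETIRED = "retired"


# Hypothetical lifecycle policy: which stage changes are permitted.
ALLOWED = {
    Stage.DESIGN: {Stage.PUBLISHED},
    Stage.PUBLISHED: {Stage.DEPRECATED},
    Stage.DEPRECATED: {Stage.PUBLISHED, Stage.RETIRED},
    Stage.RETIRED: set(),
}


@dataclass
class ApiRecord:
    name: str
    version: str
    owner: Optional[str]
    stage: Stage = Stage.DESIGN


class ApiCatalog:
    """One registry (a toy 'control plane') that tracks every API."""

    def __init__(self) -> None:
        self._apis: Dict[Tuple[str, str], ApiRecord] = {}

    def register(self, record: ApiRecord) -> None:
        self._apis[(record.name, record.version)] = record

    def transition(self, name: str, version: str, new_stage: Stage) -> None:
        # Lifecycle management: reject stage changes the policy forbids.
        record = self._apis[(name, version)]
        if new_stage not in ALLOWED[record.stage]:
            raise ValueError(f"{record.stage.value} -> {new_stage.value} is not allowed")
        record.stage = new_stage

    def governance_violations(self) -> List[str]:
        """Governance rule (assumed): every API must have a named owner."""
        return sorted(f"{r.name}@{r.version}" for r in self._apis.values() if not r.owner)


if __name__ == "__main__":
    catalog = ApiCatalog()
    catalog.register(ApiRecord("orders", "v1", owner="order-team"))
    catalog.register(ApiRecord("legacy-export", "v0", owner=None))
    catalog.transition("orders", "v1", Stage.PUBLISHED)
    print(catalog.governance_violations())  # ['legacy-export@v0']
```

The unowned `legacy-export` API is exactly the kind of untracked endpoint that turns into sprawl; a catalog that flags it is the simplest form of the governance the vendors are adding.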

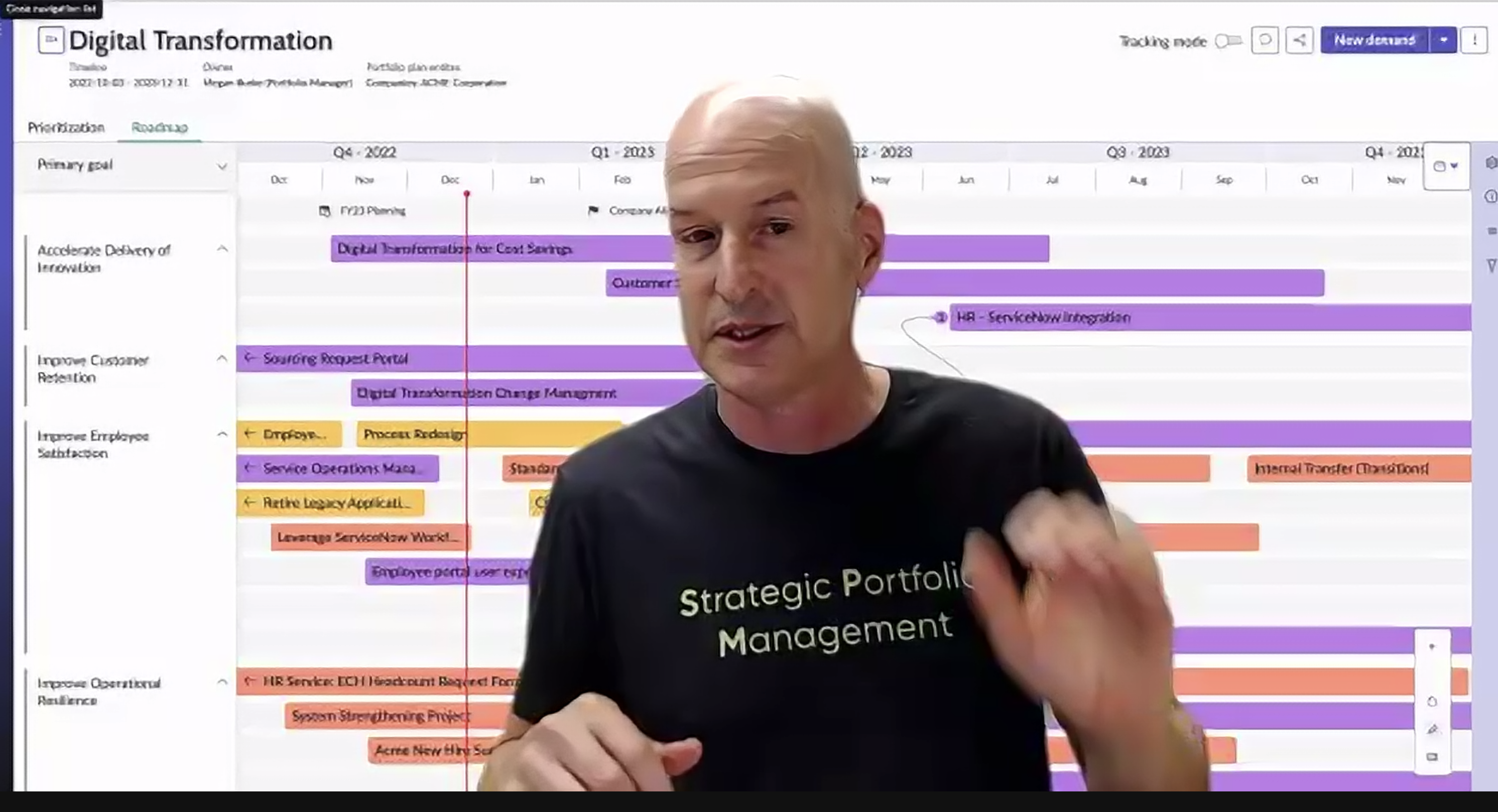

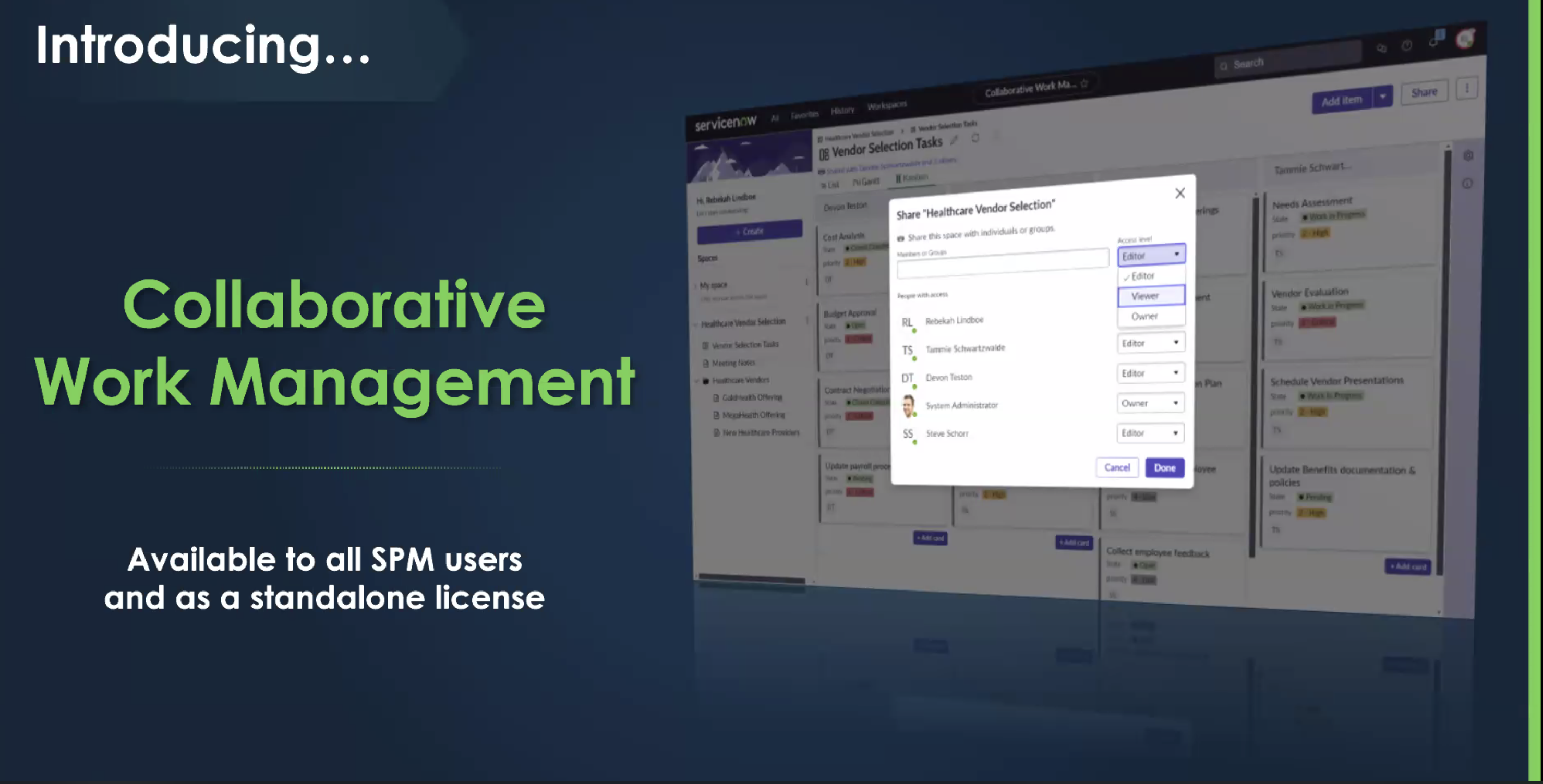

Workflow and automation. Organizations continue to face pressure to do more with fewer people, so workflow and automation capabilities are on the rise. It makes sense to automate wherever possible. Where there’s any doubt about next steps, use the iPaaS to create a workflow with humans in the loop for exception handling. Where there is confidence about exactly what an event or an analytic threshold or a prediction means, choose straight-through automation without unnecessary human intervention.
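The “automate when confident, route to a human when in doubt” rule can be sketched as a simple threshold check. This assumes each event carries a 0-to-1 confidence score from a rule or model, and the 0.9 cutoff is an arbitrary example value; in practice the threshold would be tuned per process.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Event:
    kind: str
    confidence: float  # assumed: a 0.0-1.0 score from a rule or model


def route(event: Event, threshold: float = 0.9) -> str:
    """Straight-through automation when confident, human review otherwise."""
    if not 0.0 <= event.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    return "automate" if event.confidence >= threshold else "human_review"


def triage(events: List[Event], threshold: float = 0.9) -> Tuple[List[Event], List[Event]]:
    """Split events into an automation queue and an exception-handling queue."""
    automated: List[Event] = []
    review: List[Event] = []
    for e in events:
        (automated if route(e, threshold) == "automate" else review).append(e)
    return automated, review


if __name__ == "__main__":
    events = [
        Event("invoice_match", 0.98),
        Event("invoice_match", 0.62),
        Event("address_change", 0.91),
    ]
    auto, manual = triage(events)
    print(len(auto), len(manual))  # 2 1
```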

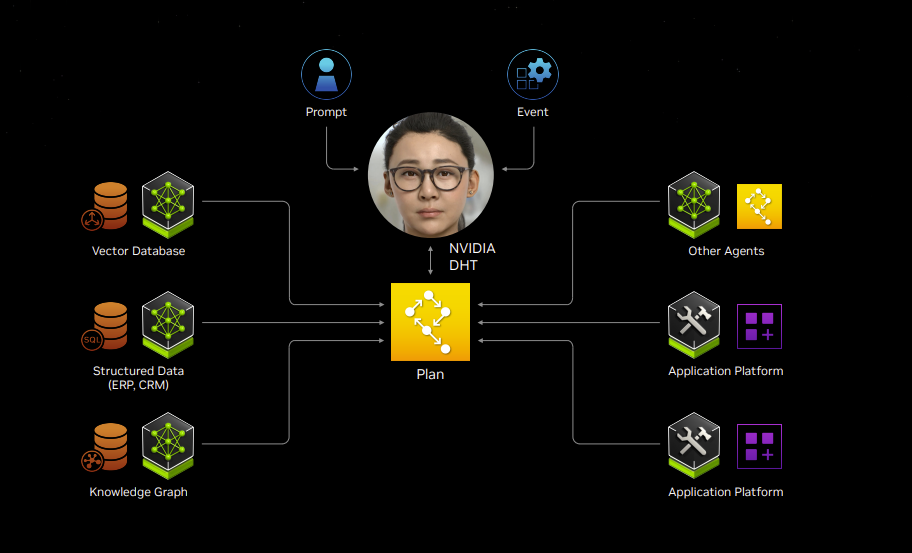

AI/ML. As the name suggests, an iPaaS is a cloud-based platform provided as a service. That puts vendors in a position to provide recommendations based on observable integration patterns. The customer’s private data remains secure and unseen by the vendor, but leading iPaaS vendors are learning from the metadata patterns and graphs of interactions behind the scenes in order to suggest appropriate data sources, pre-existing integrations, and/or next-best integration steps to users. These recommendations help save time and enhance productivity for professional and novice users alike.
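As a rough illustration of learning from metadata rather than payload data, here’s a sketch that recommends a next-best step by counting which step types tend to follow which across many flows. A plain transition-frequency count is swapped in here as the simplest possible stand-in; vendors’ actual recommenders are not public and are presumably far richer. The step labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence


def build_transition_counts(flows: Sequence[Sequence[str]]) -> Dict[str, Counter]:
    """Count which step type follows which, using only flow metadata."""
    counts: Dict[str, Counter] = {}
    for flow in flows:
        for current, following in zip(flow, flow[1:]):
            counts.setdefault(current, Counter())[following] += 1
    return counts


def recommend_next(counts: Dict[str, Counter], current: str, k: int = 2) -> List[str]:
    """Suggest the k step types that most often follow `current`."""
    return [step for step, _ in counts.get(current, Counter()).most_common(k)]


if __name__ == "__main__":
    # Hypothetical step labels observed across many customers' flows.
    flows = [
        ["crm.read", "map_fields", "erp.write"],
        ["crm.read", "map_fields", "warehouse.load"],
        ["crm.read", "filter", "map_fields", "erp.write"],
    ]
    counts = build_transition_counts(flows)
    print(recommend_next(counts, "map_fields"))  # ['erp.write', 'warehouse.load']
```

Note that only step names are counted; no record values ever enter the model, which mirrors the point that customer data stays unseen.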

GenAI. The latest innovation in iPaaS is the use of GenAI, which is being used to design and deploy new integrations and to explain and document new or existing integrations. GenAI will make the iPaaS accessible to an even broader swath of users through natural language interfaces, and it will help organizations to modernize legacy integrations by explaining, recreating, and optimizing code created by people who have long since left an organization.

Streaming capabilities. The pace of business is always accelerating, so it’s a must to consider low-latency data integration. A next-gen iPaaS should address streaming requirements.
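A quick bit of arithmetic shows why scheduled batches struggle on latency: an event waits until the next run. This sketch assumes runs fire at fixed intervals and pick up everything that arrived by run time; the arrival times are made up. A streaming pipeline handles each event on arrival, so its latency is bounded by processing time rather than by the schedule.

```python
import math
from typing import List


def batch_latency(arrival_min: float, interval_min: float) -> float:
    """Minutes an event waits for the next scheduled batch run.

    Assumes runs fire at multiples of `interval_min` and pick up every
    event that arrived at or before the run time.
    """
    next_run = math.ceil(arrival_min / interval_min) * interval_min
    return next_run - arrival_min


def mean(values: List[float]) -> float:
    return sum(values) / len(values)


if __name__ == "__main__":
    arrivals = [5, 20, 41, 58]  # hypothetical arrival times, in minutes past the hour
    hourly = [batch_latency(t, 60) for t in arrivals]
    print(hourly, mean(hourly))  # [55, 40, 19, 2] 29.0
```

Even with a modest hourly schedule, these events wait 29 minutes on average before anything downstream sees them.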

To summarize, modern iPaaS are benefitting professional integrators and tech-savvy business users alike. Using an iPaaS enhanced with augmented capabilities including AI/ML and GenAI, tech-savvy business types can create integrations for themselves rather than having to wait in line for IT to do the work. For the professionals, an iPaaS can accelerate and scale up their integration work, enabling them to:

- Create, monitor, maintain and modify integrations much more quickly and productively.

- Validate, troubleshoot and optimize integrations created by the tech-savvy business types.

- Explain, document and streamline legacy integrations and code.

Recommendations

If there’s a risk in investing in an iPaaS, it’s that the platform might not support all the types of integration or the scale of integration that the organization will need. A next-generation iPaaS is one that is complete and able to serve as the companywide standard. If you can do it all with one platform, you’ll get much more out of the investment, both in terms of the technology and the training of people, and there will be no need for point solutions.

Look beyond the next integration project to consider the breadth of integration requirements in recent history and in the foreseeable future. Do you have on-premises requirements? Will you need to work with more than one public cloud? Are investments anticipated in new enterprise apps, such as ERP or CRM systems? What are the workflow and automation requirements?

On the cutting edge, if an iPaaS vendor doesn’t have an AI/GenAI strategy by this point – let alone GenAI-based features in preview – I’d say it’s time to cut them from your short list.

Costs and licensing regimes are crucial. Does the platform you are considering offer modularity? As noted above, a complete iPaaS is a future-proof choice, but if you don’t have immediate plans to use certain capabilities, is it possible to add them (and pay for them) only as and when needed? What subscription models are available? Is pricing per user, per connection or capacity-based? The more choices available the better, as the model that makes sense today may get expensive as the number of users or integrations multiplies.
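To see how the cheapest model can flip as usage grows, here’s a back-of-the-envelope comparison of per-user, per-connection and capacity-based pricing. Every price below is invented for illustration only and bears no relation to any vendor’s list prices; the point is the crossover, not the numbers.

```python
from dataclasses import dataclass
from typing import Dict

# Hypothetical monthly prices, for illustration only.
PER_USER = 150.0
PER_CONNECTION = 400.0
CAPACITY_BASE = 5000.0  # flat fee covering CAPACITY_INCLUDED_GB
CAPACITY_INCLUDED_GB = 500.0
CAPACITY_OVERAGE_PER_GB = 4.0


@dataclass
class Usage:
    users: int
    connections: int
    gb_processed: float


def monthly_costs(u: Usage) -> Dict[str, float]:
    """Monthly cost of the same usage under each subscription model."""
    overage_gb = max(0.0, u.gb_processed - CAPACITY_INCLUDED_GB)
    return {
        "per_user": u.users * PER_USER,
        "per_connection": u.connections * PER_CONNECTION,
        "capacity": CAPACITY_BASE + overage_gb * CAPACITY_OVERAGE_PER_GB,
    }


def cheapest(u: Usage) -> str:
    costs = monthly_costs(u)
    return min(costs, key=lambda model: costs[model])


if __name__ == "__main__":
    today = Usage(users=10, connections=8, gb_processed=200)
    later = Usage(users=60, connections=20, gb_processed=700)
    print(cheapest(today), monthly_costs(today))  # cheapest: per_user
    print(cheapest(later), monthly_costs(later))  # cheapest: capacity
```

With these made-up rates, per-user pricing wins for a small team ($1,500 versus $3,200 per connection and $5,000 for capacity), but at 60 users and 20 connections the capacity plan ($5,800) undercuts both per-user ($9,000) and per-connection ($8,000).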

To give you a head start on your tech selection process, I recently updated my Constellation ShortList™ for Integration Platform as a Service. If you don’t see a candidate you are considering on my ShortList, feel free to contact me for an advisory consultation. I wish you the best of success in your technology selection process.
