Google Cloud Next: The role of genAI agents, enterprise use cases

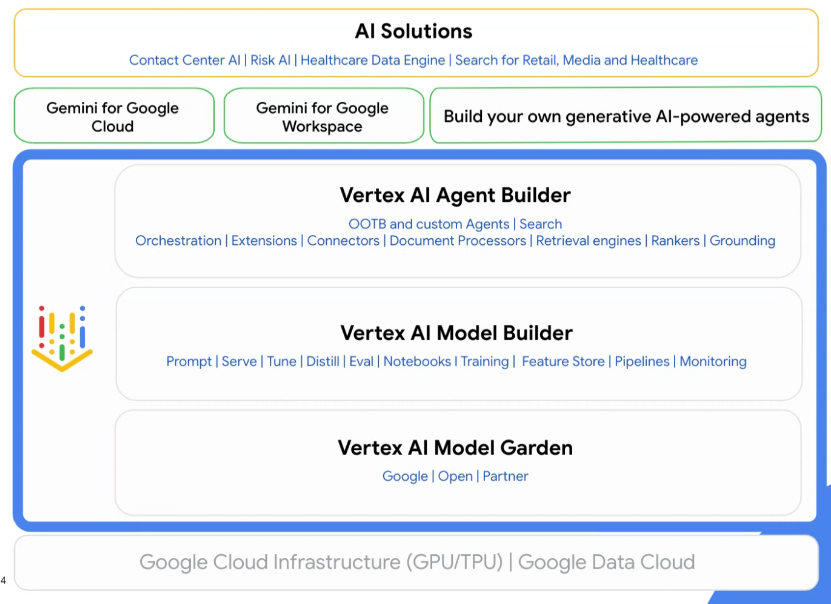

Google Cloud pitched an agent-oriented vision for generative AI at Google Cloud Next and highlighted a bevy of emerging use cases going from pilot to production.

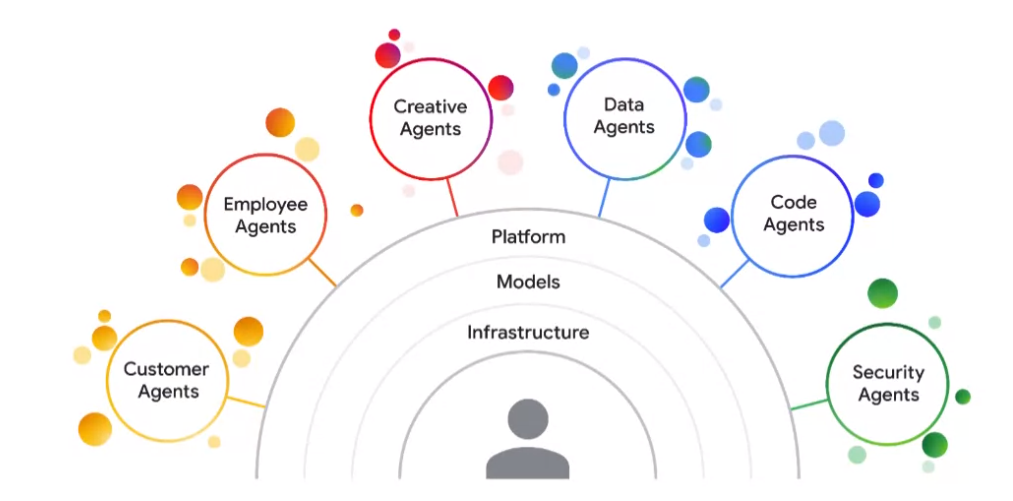

"We are now building generative AI agents," said Google Cloud CEO Thomas Kurian. "Agents are intelligent entities that take action to help you achieve specific goals."

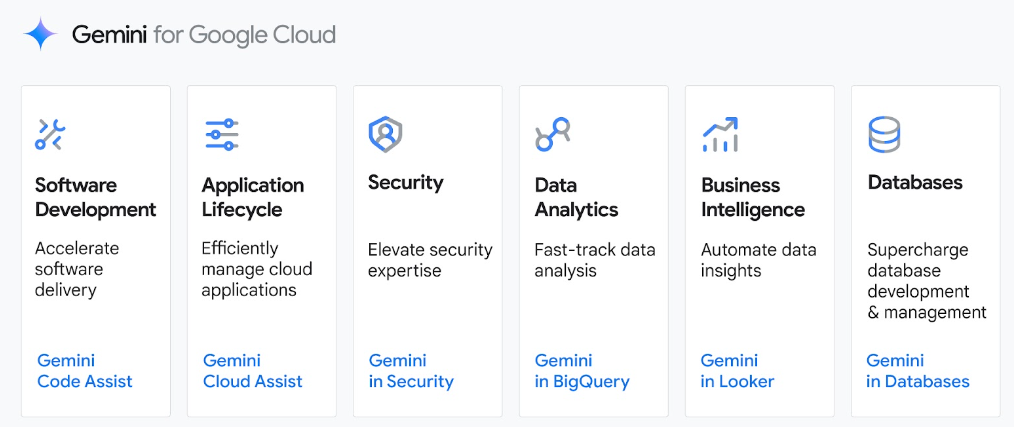

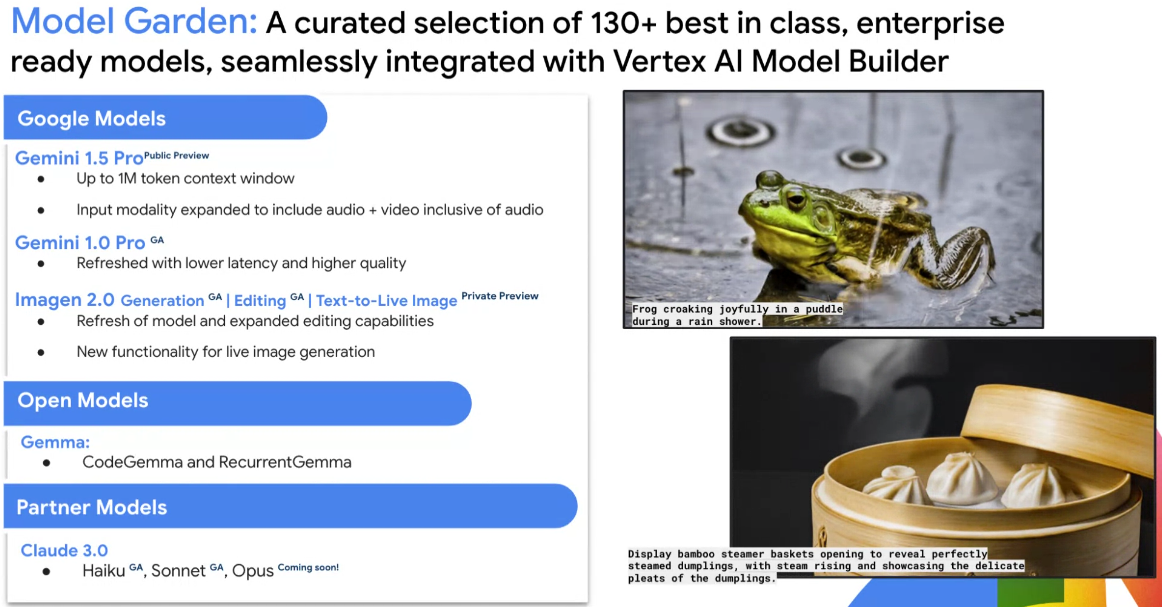

These actions can range from helping a shopper find a dress and picking health benefits to handling nursing shift handoffs, bolstering security defenses or building applications. The agents shown during the keynote were built with Google Cloud's Gemini large language model, but presumably other LLMs could be used via the company's Model Garden.

- Google Cloud Next 2024: Google Cloud aims to be data, AI platform of choice

- Google Unveils Ambitious AI-Driven Security Strategy at Google Cloud Next'24

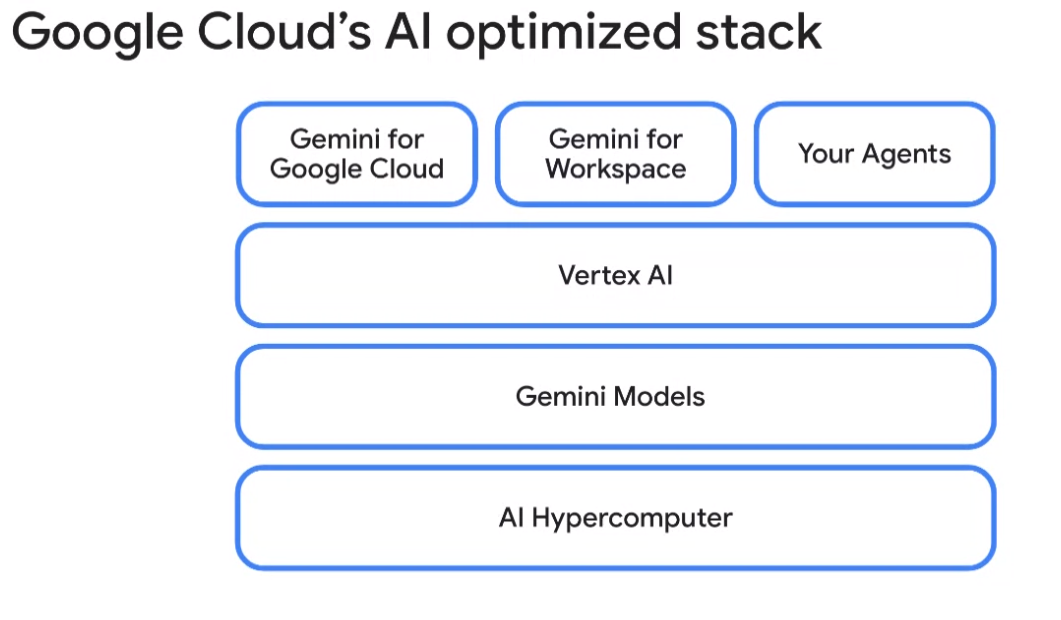

Google Cloud continues to "offer widely used first party, third party and open-source models," said Kurian. "Vertex AI can be used to tune, augment, manage and monitor these models."

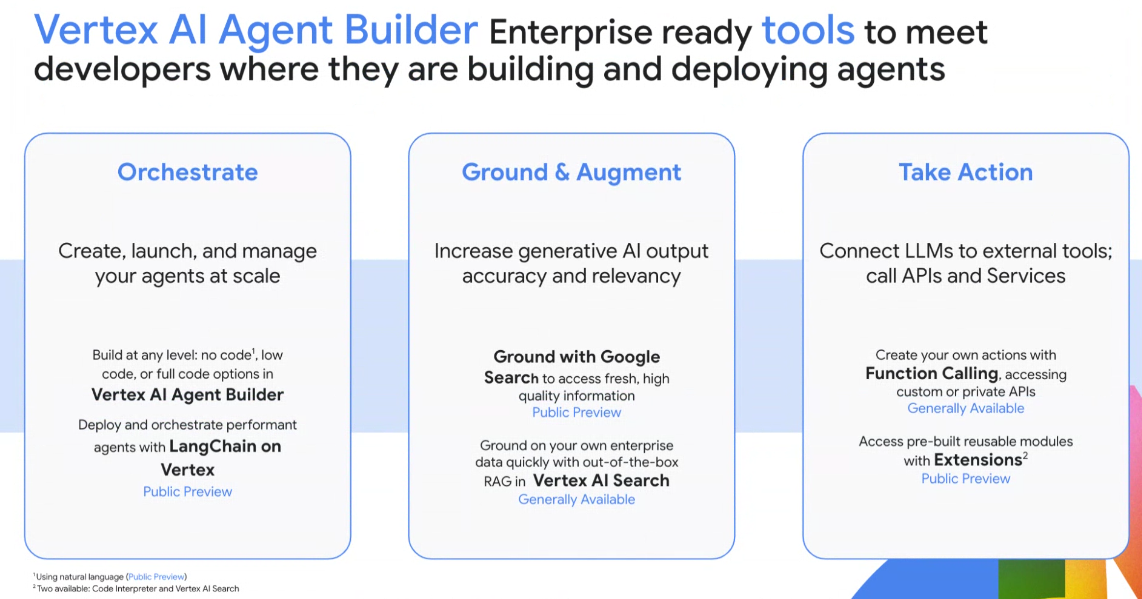

In many ways, Kurian's riff about agents is Google Cloud's answer to Microsoft's Copilot stack and AWS' Q. What Google Cloud did was tie agents to business outcomes and processes that could be automated. "These agents would connect with other agents as well as humans," said Kurian.

Kurian added that genAI agents powered by Gemini models will be the connective tissue between all of Google Cloud's services.

Constellation Research analyst Holger Mueller summed up Google Cloud's approach with agents:

"In the AI race Google provides the right mix of assistants/agents (not the inflationary number of co-pilots like Microsoft) while providing the Über AI with Gemini Cloud Assist (which has the same ambitions like Amazon's AWS' Q). And all of that on the best hardware infrastructure from chips to intra-data center networking and public networking. Google Cloud is powered by Gemini, the most advanced LLM out there, and offers grounding services with Google Search. All in all Google keeps it lead of 3-4 years when it comes to custom algorithms on custom silicon."

Here's a tour of use cases by the type of agents being deployed on Google Cloud.

Customer agents. For enterprises, customer agents are viewed as extra sales and service people. These agents are able to listen carefully, understand your needs and recommend products and services.

Mercedes Benz highlighted multiple customer agent experiences, both in the car and for customizing models to buy. "The sales assistant helps customers to seamlessly interact with Mercedes when booking a test drive or navigating through offerings," said Mercedes Benz CEO Ola Källenius.

Enterprises cited by Google Cloud appeared to be gravitating toward genAI as a service engine. Discover Financial uses genAI to search and summarize procedures during calls and IHG Hotels & Resorts is building a travel planning tool for guests.

In addition, Target is optimizing offers and curbside pickup on its app and site. Best Buy is building an agent to troubleshoot product issues and manage order deliveries, and Paramount+ is using genAI to personalize viewing recommendations.

Google Cloud customer agents can be tailored by conversation flow, language and subject matter, and they know when to hand off to a human agent.

Employee agents. The returns on employee agents are relatively straightforward: Remove repetitive tasks so employees can be more productive. Employee agents can also streamline chores such as health benefits enrollment.

Most of the employee agent examples were tethered to Gemini models running through Google Workspace, but via Vertex AI extensions, models can connect to any external or internal API. Uber CEO Dara Khosrowshahi said employee agents were being built to aid support teams, summarize user communications and reduce marketing agency spending.

How Uber's tech stack, datasets drive AI, experience, growth

Other use cases included Dasa, a Brazil-based medical diagnostic company, using agents to surface relevant findings in test results; Etsy optimizing ad models; and Pepperdine University, which is using Gemini to provide captions and notes across multiple languages.

Gemini-powered agents in Workspace are also being used to analyze RFPs, contracts and other corporate documents. This analysis of large documents and paperwork automation was a key use case across companies such as HCA Healthcare and Bristol Myers Squibb.

Home Depot is leveraging Gemini for its Sidekick application that manages inventory. See: How Home Depot blends art and science of customer experience

Creative agents. Like employee agents, creative agents have been tied to Workspace in the Google Cloud ecosystem. However, I saw an AWS demo where a marketer or ad agency team can create mood boards, pick models and accelerate content concepts to minutes from days or weeks.

For Google Cloud, creative agents are all about using Gemini to create slides, images and text. Carrefour is using Vertex AI to create dynamic campaigns across social networks quickly.

Procter & Gamble is using Google Cloud's Imagen model to develop images and creative assets. Canva is using Vertex AI to power its Magic Design for Video editing tools.

WPP is using Gemini 1.5 Pro to power its media activation tools.

The returns of creative agents can be powerful in that enterprises can avoid media waste and its associated costs across a campaign. In addition, storyboards can be created and tweaked quickly.

Related: Middle managers and genAI | Why you'll need a chief AI officer | Enterprise generative AI use cases, applications about to surge | CEOs aim genAI at efficiency, automation, says Fortune/Deloitte survey

Data agents. A common use case is using generative AI to search, analyze and summarize document, video and audio repositories to surface insights. A good data agent is one that can answer questions and then tell us what questions we should be asking.

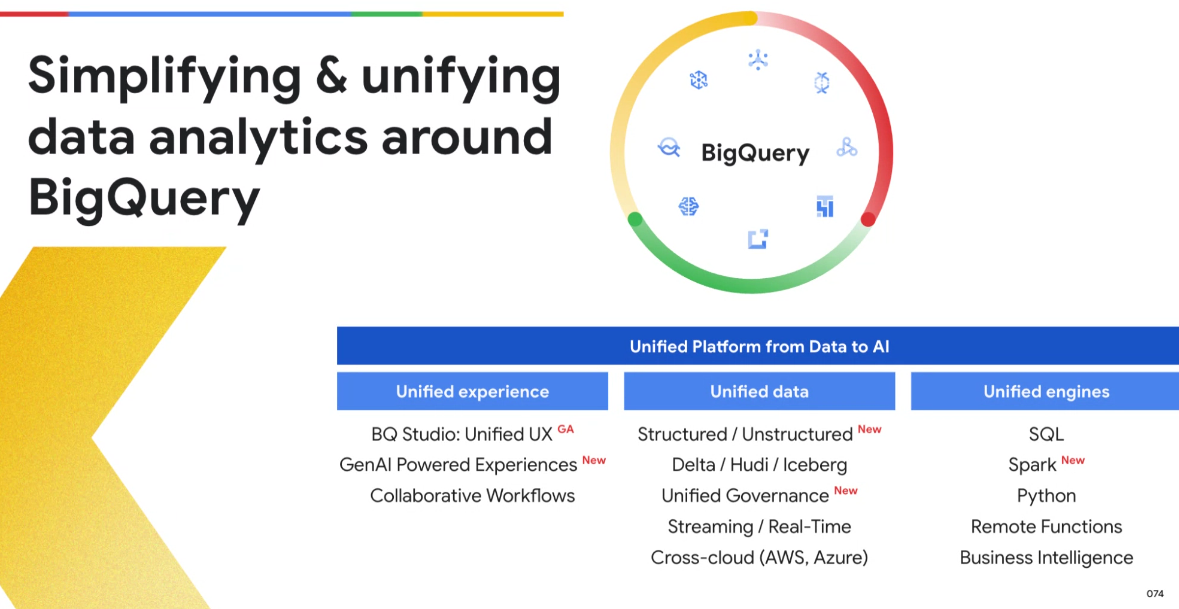

Suresh Kumar, CTO of Walmart, said the retailer is using data agents to comb BigQuery and surface insights for personalization and supply chain signals, and to improve product listings.

Data agents are also being deployed for drug discovery and medical treatments. Mayo Clinic is using data agents to search more than 50 petabytes of clinical data.

In addition, delivery carriers and airlines are using data agents to optimize shipments and routes.

Data agents can be deployed for data preparation, discovery, analysis, governance and to create data pipelines. These agents can also provide notifications when KPIs are being met or in jeopardy.
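The KPI notification idea can be illustrated with a minimal Python sketch. Nothing here comes from Google Cloud or BigQuery: the `Kpi` class, the `warn_ratio` threshold and the status labels are hypothetical, and the sketch assumes a higher value is better and targets are positive.

```python
from dataclasses import dataclass


@dataclass
class Kpi:
    """A single KPI reading (hypothetical structure for illustration)."""
    name: str
    target: float
    actual: float
    warn_ratio: float = 0.9  # assumed cutoff: below 90% of target counts as "in jeopardy"


def kpi_status(kpi: Kpi) -> str:
    """Classify a KPI as met, at risk, or in jeopardy (higher is better)."""
    if kpi.actual >= kpi.target:
        return "met"
    if kpi.actual >= kpi.target * kpi.warn_ratio:
        return "at risk"
    return "in jeopardy"


def notifications(kpis: list[Kpi]) -> list[str]:
    """Build one human-readable notification line per KPI."""
    return [
        f"{k.name}: {kpi_status(k)} ({k.actual:g} vs target {k.target:g})"
        for k in kpis
    ]


if __name__ == "__main__":
    readings = [
        Kpi("on-time delivery %", target=95, actual=97),
        Kpi("listing accuracy %", target=99, actual=80),
    ]
    for line in notifications(readings):
        print(line)
```

In a real deployment the readings would come from a warehouse query and the classification would likely be richer, but the basic pattern of comparing actuals with targets and then notifying is the same.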

Constellation Research analyst Doug Henschen said the data agent argument is strong.

"The vision for Data Agents is pretty compelling, with a key point made by Google Cloud being that multi-modal opportunities lie ahead. Multi-modal GenAI-powered data agents will unlock combinations of structured and unstructured data including video, audio, images and code and correlations with structured data. One scenario that Alphabet CEO Sundar Pichai shared was that of an insurance company adjuster that might combine video, images and text to automate a claims process. With BigQuery at the center, Google Cloud foresees data agents applying multiple engine to data, whether SQL, Spark, search or whatever to solve business problems."

BT150 CXO zeitgeist: Data lakehouses, large models vs. small, genAI hype vs. reality

Code agents. Goldman Sachs CEO David Solomon said that genAI's ability to boost developer productivity was promising. "There's evidence that generative AI tools for assisted coding can boost developer efficiency and we're excited about that," said Solomon, who added that genAI is being used to analyze content and market signals and boost client engagement.

Goldman Sachs rival JPMorgan Chase also sees a boom in developer productivity with genAI code assistance. See: JPMorgan Chase CEO Dimon: AI projects pay for themselves, private cloud buildout critical

Wayfair CTO Fiona Tan said the retailer is standardizing on Gemini Code Assist and the improvements delivered via Gemini 1.5 Pro. Google Cloud is also using Gemini Code Assist and has increased productivity by 30%.

Security agents. Anyone following the ongoing battle among Palo Alto Networks, CrowdStrike and Zscaler knows generative AI has a big role in security. Google Cloud said that Palo Alto Networks will build on top of Google Cloud AI.

Google Cloud said security agents are designed to incorporate data and intelligence to serve up insights and incident response faster. The win is that generative AI can create a multiplier effect for cybersecurity analysts by analyzing large samples of malicious code.

Charles Schwab and Pfizer were cited as Google Cloud security customers. The goal of a security agent is to identify and address threats, summarize and explain findings and recommend next steps and remediation playbooks quickly. Ultimately, security agents will automate responses.

Constellation Research analyst Chirag Mehta analyzed Google Cloud's security strategy in a research note. He said:

"As a Google Cloud prospect or customer, take a comprehensive inventory of your current security tools landscape, encompassing Google Cloud and its partner ecosystem. Engage with Google Cloud and security tool vendors to discuss their roadmaps for Google Cloud, with a specific focus on how they plan to leverage AI to address your unique requirements. Additionally, consider exploring tools that offer multi-cloud support, regardless of your primary cloud provider, to future proof your security infrastructure."


Ultimately, Google Cloud is bidding to be the AI optimized stack of choice that will enable companies to deploy a series of agents that can automate workflows and carry out tasks. And by the way, Google Cloud is offering model choices, but

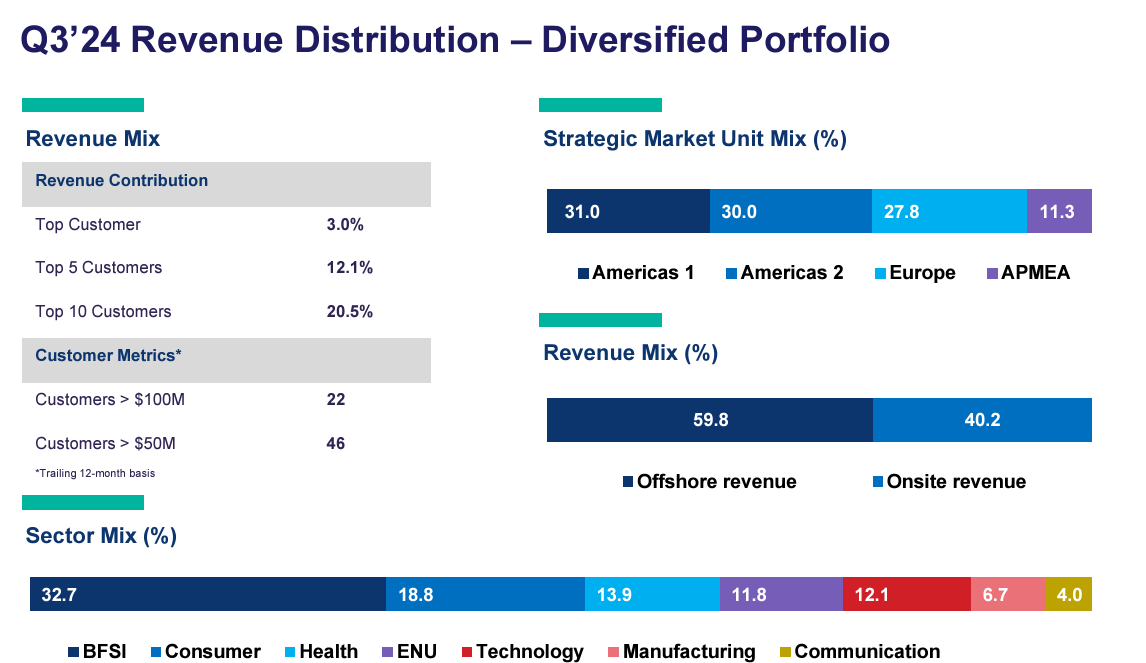

Pallia (right) most recently was CEO for Wipro's Americas 1 unit and has been with the company for more than three decades. Pallia was responsible for Wipro Americas' vision, strategy and industries and was president of Wipro's consumer business and head of business applications services. Delaporte will remain with Wipro through the end of May for the transition to Pallia.
