Archetype AI raises $13 million in seed funding, launches Newton physical world foundation model

Archetype AI has raised $13 million in seed funding and launched Newton, a foundational model that is built to understand the physical world via data signals from accelerometers, gyroscopes, radars, cameras, microphones, thermometers and other environmental sensors.

Newton aims to take physical data and combine it with natural language to provide insights about the physical world. Archetype AI's funding round was led by Venrock and included Amazon Industrial Innovation Fund, Hitachi Ventures, Buckley Ventures and Plug and Play Ventures.

Archetype AI's Newton highlights how foundational models continue to evolve at a rapid clip. While large language models have focused on language and image patterns, there is plenty of room for more niche use cases. Archetype AI describes Newton as "a first-of-its-kind physical AI foundational model that is capable of perceiving, understanding and reasoning about the world."

Ivan Poupyrev, CEO and co-founder of Archetype AI, said the company's mission is to solve the biggest problems, which are "physical, not digital." "Our goal is to encode the entire physical world so we can derive meaning from the signals all around us and create new solutions to problems that we previously couldn’t understand," he said.

Newton is designed to scale across any kind of sensor. In theory, Newton could bring insights to the Internet of Things as well as to trillions of sensors in multiple industries. Archetype AI is an example of a foundational model company that can work across multiple verticals and use cases.

Speaking at an AWS analyst meetup, Matt Wood, VP of AI at AWS, was asked whether foundational models would be commoditized quickly. After all, the LLM layer is likely to be abstracted, with models being swapped as easily as cloud instances. Wood said foundational models are unlikely to be commoditized. Instead, these models will become more specialized.

Wood said:

"There is so much utility for generative AI. You're starting to see divergence in terms of price per capability, but I think that we're talking about task models, industry-focused models, vertically focused models and more. There's so much utility that I doubt these models are going to become commoditized."

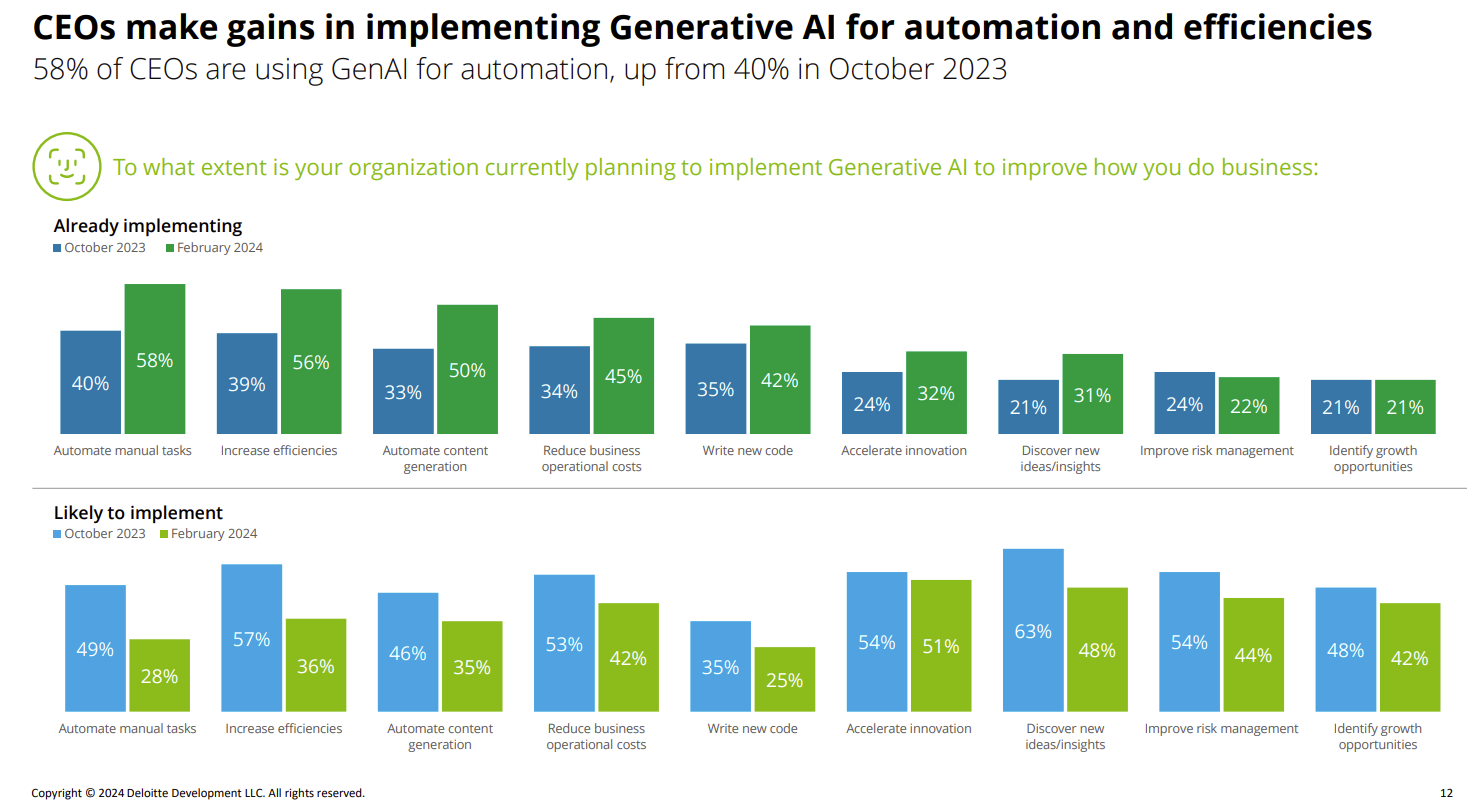

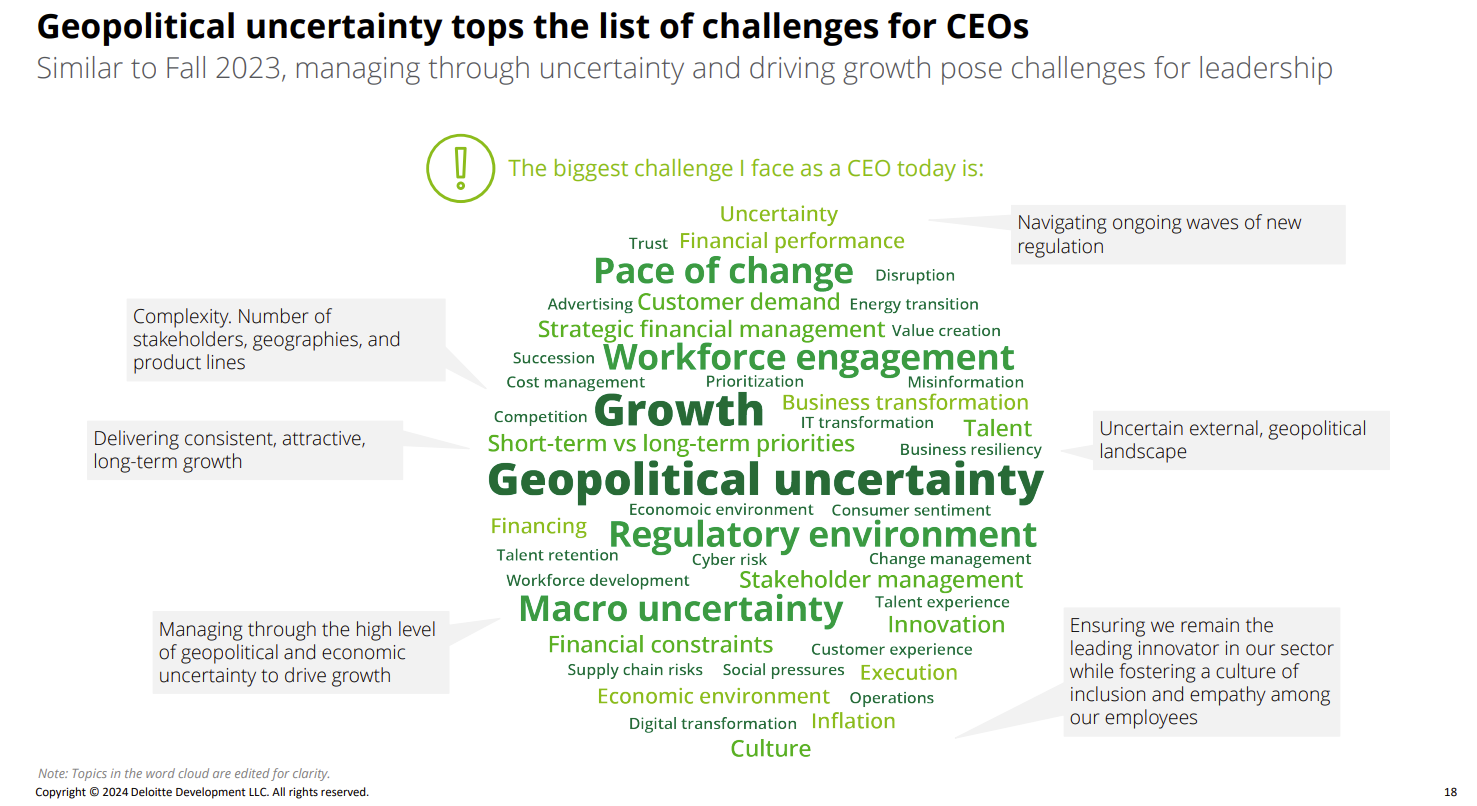



Wood spoke with industry analysts including me and Doug Henschen at AWS' New York offices. Here are some of the key themes to note.
