Will generative AI make enterprise data centers cool again?

This post first appeared in the Constellation Insight newsletter, which features bespoke content weekly and is brought to you by Hitachi Vantara.

The lowly enterprise data center may be making a comeback as companies look to leverage generative AI but keep corporate data secure.

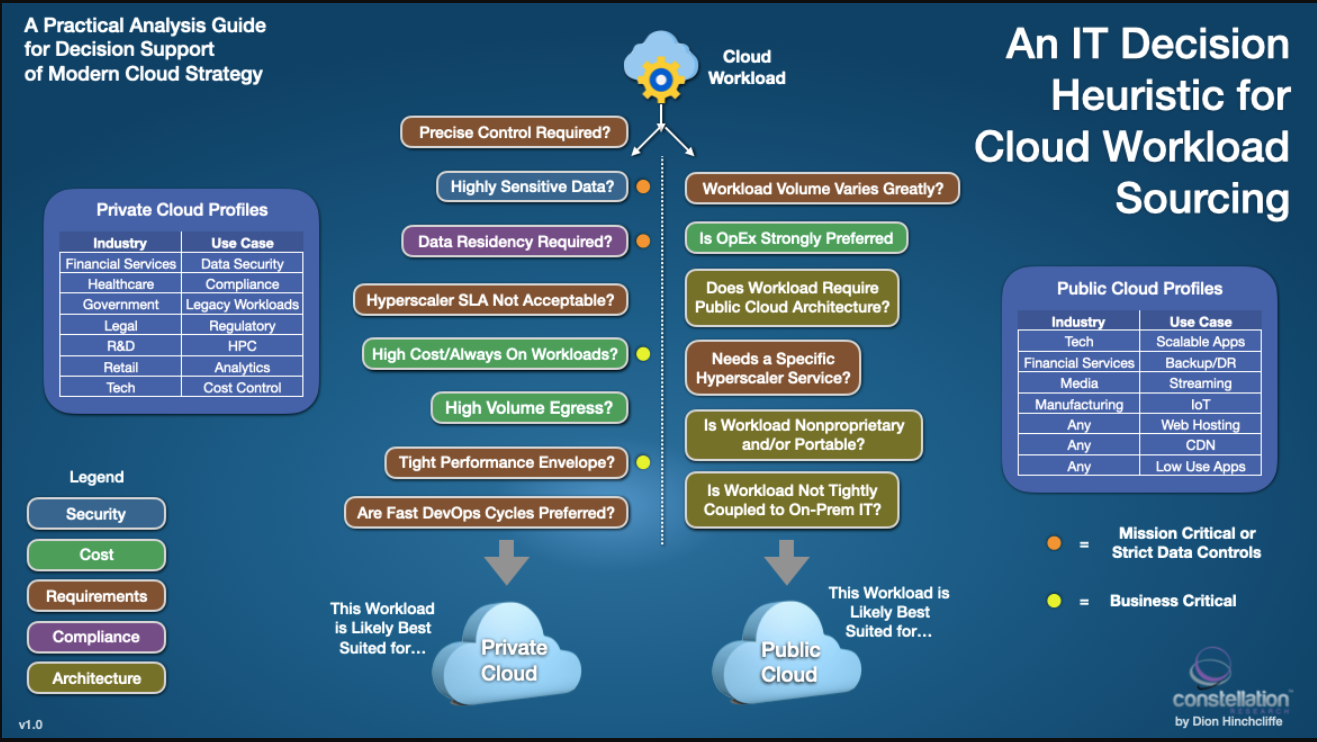

This burgeoning trend may not come as a complete surprise. Constellation Research analyst Dion Hinchcliffe noted in a report last year that CIOs were scrutinizing public cloud workloads because they could get better total cost of ownership on-premises. What's emerged is a hybrid cloud approach where generative AI may tip the scales toward enterprise data centers.

On C3 AI's third quarter earnings call, CEO Tom Siebel discussed generative AI workloads. He said: "The interesting trend we're seeing--and this is really counterintuitive--is we're going to see the return of in-house data centers where people have the GPUs inside. I'm hearing this in the marketplace and even my engineers have talked about it. Some are saying, 'it's going to be cost effective for us to build.'"

Siebel isn't the only one seeing generative AI making the data center cool again.

Nutanix CEO Rajiv Ramaswami said the company is "seeing more examples of people repatriating" workloads from the public cloud to data centers and private cloud arrangements. Enterprise concerns about generative AI are likely to equate to more data center buildouts than you'd think.

"I'm talking about enterprise workloads, because what has gone to the public cloud largely has been net new applications. So, for these workloads [that] are still sitting in the enterprise environment, I think CIOs are being a lot more careful about how much of that do they take to the public cloud," said Ramaswami. "There's some repatriation happening from the public cloud back on-prem. But there's also a lot more scrutiny and forethought being applied to what should go into the public cloud."

Nutanix, which just reported earnings, is also seeing traction with its GPT-in-a-box offering, which accelerates generative AI across an enterprise while keeping data secure. Many of the industries Ramaswami cited as interested in GPT-in-a-box were also noted by C3 AI: federal agencies, defense, pharmaceuticals and finance.

It's not surprising that defense, federal agencies and banking and finance are driving on-premises generative AI workloads. These organizations are well-capitalized, have critical security needs and operate as technology companies in many ways.

Enterprises in these industries, along with nation-states, are the ones most likely to build their own data centers for AI workloads. Nvidia CEO Jensen Huang describes these next-gen data centers as AI factories.

Huang explained on Nvidia's most recent earnings call:

"You see us talking about industrial generative AI. Now our industries represent multi-billion-dollar businesses, auto, health, financial services. In total, our vertical industries are multi-billion-dollar businesses now. And of course, sovereign AI. The reason for sovereign AI has to do with the fact that the language, the knowledge, the history, the culture of each region are different, and they own their own data.

"They would like to use their data, train it to create their own digital intelligence and provision it to harness that raw material themselves. It belongs to them, each one of the regions around the world. The data belongs to them. The data is most useful to their society. And so, they want to protect the data. They want to transform it themselves, value-added transformation, into AI and provision those services themselves."

The rest of us just want better TCO on AI workloads, but you get the idea. Dell Technologies, along with SuperMicro, appears to be capitalizing on demand for AI-optimized servers.

How AI workloads will reshape data center demand

Jeff Clarke, Vice Chairman and Chief Operating Officer at Dell Technologies, said orders for AI-optimized servers grew 40% sequentially and backlog doubled to $2.9 billion. He added:

"83% of all data is on-prem. More data will be created outside of the data center going forward than inside the data center today, that's going to happen at the edge of the network, a smart factory and oil derrick. We believe AI will ultimately get deployed next to where the data is created driven by latency.

"We sold to education customers, manufacturing customers, and governments. We've sold to financial services, business, engineering and consumer services. Companies are seeing vast deployments, proving out the technology. Customers quickly find that they want to run AI on-prem because they want to control their data. They want to secure their data. It's their IP and they want to run domain specific and process specific models to get the outcomes they're looking for."

Power problems

What could derail the march to in-house data centers? Today, it's procuring GPUs, but that'll work itself out. Power will be the big issue going forward. Siebel explained:

"The constraint today is you have to call up Jensen and beg for GPUs. Let's say we can figure out how to do that. Maybe we know somebody who knows Jensen. Okay, the hard part is we can't get power. You cannot get the power to build the data center in Silicon Valley. Power companies will not give you power for a data center. So, I think this GPU constraint is ephemeral and soon it's going to be power."

Pure Storage CEO Charles Giancarlo cited power as a limiting factor for AI adoption on the company's fourth quarter earnings call. "The energy demands of AI are outstripping the availability of power in many data center environments," he said.

The power issue was also noted at Constellation Research's Ambient Experience conference this week. Rob Tarkoff, Executive Vice President & GM, CX Applications at Oracle, said sustainable and cost-effective power is one of generative AI's big challenges.

"Where are we going to get the power cost effectively and environmentally sustainable power to be able to run all the models we want to run? How are we going to keep the cost at a level that companies can realistically invest in?" asked Tarkoff, who added that scaling data and compute isn't cheap. "All of this sounds really cool in production, but it's an expensive and energy consuming value proposition."

Constellation Research's take

This thread about enterprises potentially building out data centers for generative AI spurred a bit of debate internally. Here's what a few analysts had to say.

- Doug Henschen: "Data warehouse/lakehouse workloads are selectively remaining on or moving back on premises. Warehouse and lakehouse workloads are often data- and compute-intensive, and in many cases, organizations that have moved these workloads to the cloud have had sticker-shock experiences. Among those firms that have maintained on-premises systems and data center capacity, we've seen some instances where they've either delayed migration of data warehouse workloads to the cloud or even repatriated workloads to on-premises platforms. When workloads are stable and predictable and there's no need for cloud elasticity, it can be more cost effective to run these workloads on premises."

- Dion Hinchcliffe: "Here's the problem with public cloud and today's increasingly always-on, high performance computing workloads (basically, a lot of ML + AI, both training and ops): 1) The hyperscalers' profit margins, 2) R&D costs for I/PaaS, and 3) the massive build-out Capex to support high growth. All three numbers are high right now. Public cloud customers cover all three expenses as major cost components in their monthly bills. Many CIOs have now realized they can bring in a good percent of workloads back in, sweat their private DC assets to the last penny, and largely avoid paying these markups (that at the end of the day aren't really their problem.) So, the big question: Is the pendulum swinging back to private data centers? Not hardly, but as my CIO interviews and research on the topic last year showed, there is definitely an active rebalancing of workloads to where they run best. That means a good bit of AI, especially training, will go back in-house or to specialty partners that are highly optimized for this, that will also keep the private training data away from the hyperscalers."

- Chirag Mehta: "The reality doesn't quite add up. Even with AI, running GPU on-prem is Capex intensive and requires highly skilled people. Other than a few companies this is not a practical thing to do."

- Andy Thurai: "The repatriation/migration is happening on a selected workload basis. For AI workloads, especially the training, it is a bit complicated and requires a ton of MLOps and scaling tools that the private data centers don't have. The GPU shortage and scale needed for model training are also constraints. While it is easy to move ML workloads, AI workloads are still a challenge from what I hear."

Relevant Shortlists:

- Next-Generation Computing Platforms

- Artificial Intelligence and Machine Learning Best Of Breed Platforms

- Artificial Intelligence & Machine Learning Cloud Platforms

- Cloud-Based Data Science and Machine Learning Platforms

- Hybrid-Cloud and MultiCloud NoSQL Databases

- Hybrid-Cloud and Multicloud Analytical Data Platforms

- Infinite Connectivity Vendors