Vice President and Principal Analyst

Constellation Research

Chirag Mehta is Vice President and Principal Analyst focusing on cybersecurity, next-gen application development, and product-led growth.

With over 25 years of experience, he has built, shipped, marketed, and sold successful enterprise SaaS products and solutions across startups, mid-size, and large companies. As a product leader overseeing engineering, product management, and design, he has consistently driven revenue growth and product innovation. He also held key leadership roles in product marketing, corporate strategy, ecosystem partnerships, and business development, leveraging his expertise to make a significant impact on various aspects of product success.

His holistic research approach on cybersecurity is grounded in the reality that as sophisticated AI-led attacks become…

In eight years, Google Cloud Next has grown from a few hundred attendees at a nondescript venue in San Francisco to more than 30,000 attendees at one of the largest convention centers in Las Vegas, complete with a private concert in a football stadium. Google Cloud has come a long way as well: its revenue grew 5x in the last five years.

As conference attendees waited patiently in a long line at an overpriced Starbucks for their caffeine fix on the way to a keynote, rushed between keynotes, sessions, and the expo, and made a pit stop at a crummy food court before retiring to their hotel rooms, they could not go even a minute without hearing the word “AI.” The energy was evident and the enthusiasm palpable. In one of the most striking moments, during a developer keynote, a Google Cloud speaker referred to 2023 as “legacy.” That’s how much Google Cloud has changed.

Standing tall on a keynote stage in front of thousands of attendees, Thomas Kurian, the CEO of Google Cloud, announced the company’s latest AI model, Gemini 1.5 Pro. A much taller screen behind him flashed a slide in large Google Sans font, and an audible gasp from the audience followed. The model has a context window of 1 million tokens, the largest in the industry, which is enough to analyze data across modalities: 1 hour of video, 30,000 lines of code, 11 hours of audio, or 700,000 words. Gemini now powers a range of Google Cloud offerings, including its security portfolio.

Source: Google Cloud

Google Cloud's Differentiation Strategy: AI and Security Take Center Stage

When asked about competition during a Q&A session with analysts, Kurian gave a measured and deliberate answer, highlighting AI and security as Google Cloud's key differentiators. He also touted Google Cloud’s open approach, which gives customers options at each layer, whether they prefer first-party, third-party, or open-source AI models.

AI and ML are a natural fit for security: they can process billions of signals to identify attack patterns, profile adversaries, and respond to threats in real time. Generative AI copilots have become increasingly prevalent in enterprise software, giving end users a conversational interface to analyze large volumes of data and gain insights through plain-English queries. Google Cloud has embraced this trend as well, integrating Gemini's AI capabilities across its product portfolio, including its security offerings.

The Evolution of Google Cloud's Security Strategy

Google Cloud's security narrative traces back to Google's early days as a native cloud company, when scaling infrastructure to securely support billions of users was paramount. Google developed its own security stack from hardware to applications, encompassing nearly every system layer. Google then brought this native security to Google Cloud to differentiate itself and compete against rival public cloud providers Amazon and Microsoft. This also paved the way for externalizing internal services as products.

In 2017, Google introduced security services like Identity Aware Proxy (IAP) and Data Loss Prevention (DLP), but they gained little traction. The 2018 announcement of Security Command Center (SCC) in beta marked a turning point, serving as a hub for protecting Google Cloud assets and integrating ISV partner solutions. While Google Cloud wasn't yet seen as a security solutions company, this marked the beginning of its SecOps journey.

In 2019, Google integrated Chronicle (with Backstory and VirusTotal) from Alphabet's X moonshot factory into Google Cloud, followed by the 2022 acquisition of Mandiant. These additions expanded Google Cloud's security portfolio but introduced disparate products with different purposes, target customers, and underlying cloud platforms.

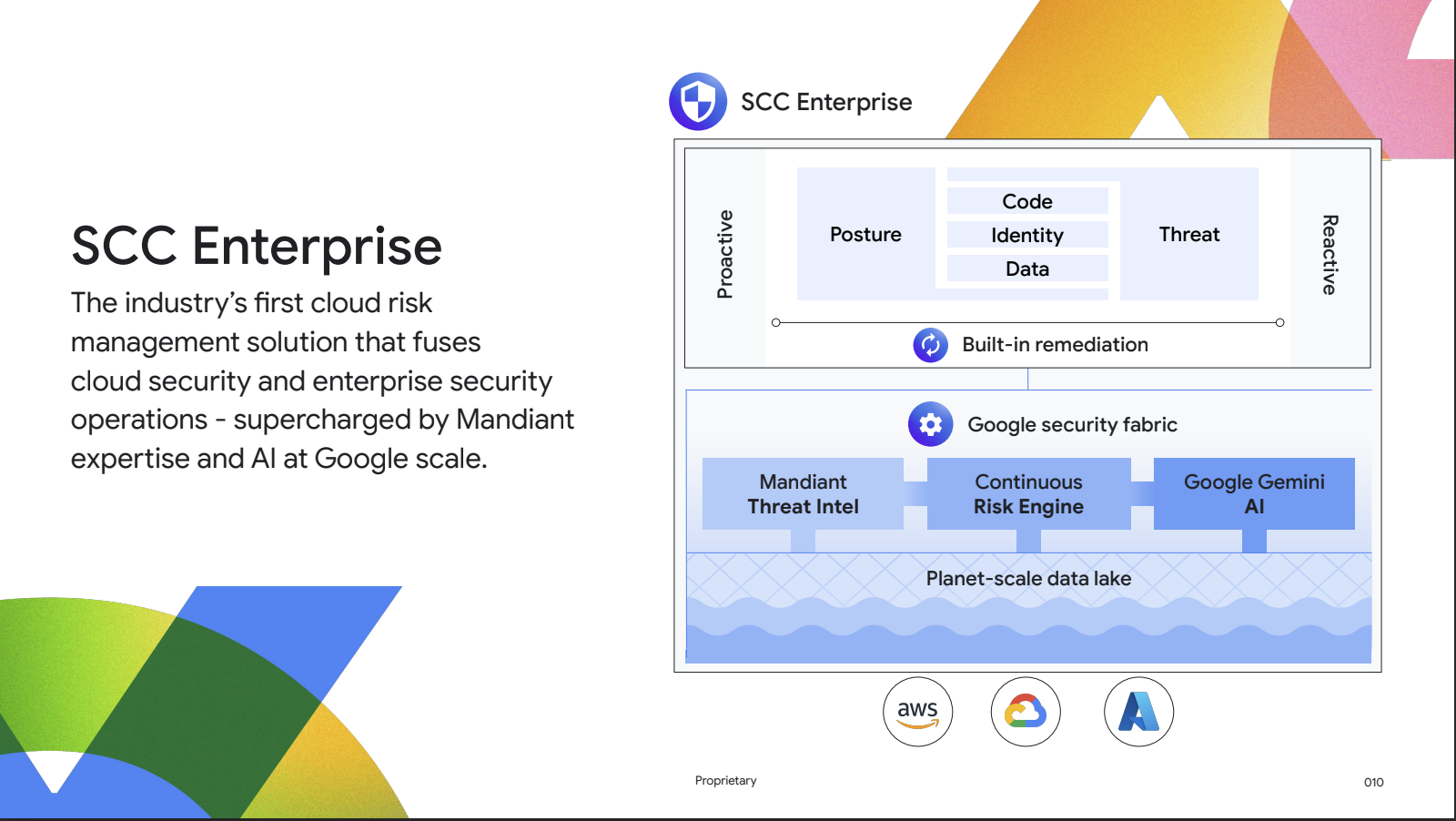

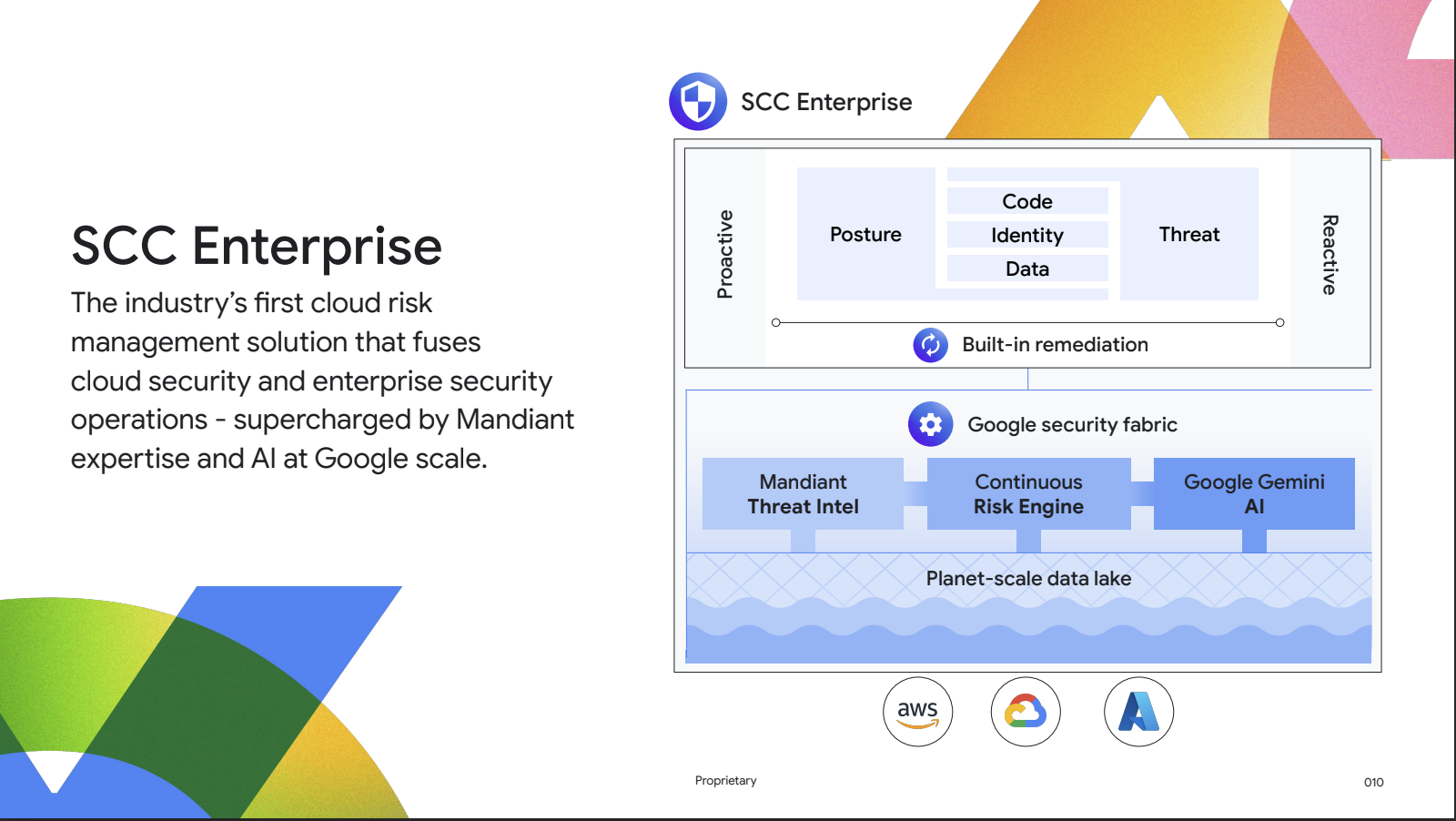

Since then, Google Cloud has focused on unifying these experiences, expanding Chronicle into a SecOps platform with SIEM and SOAR functionality, and competing as a security vendor rather than just a cloud provider. The announcement of Security Command Center Enterprise at Next'24 marked a significant milestone: it converges cloud security with enterprise security and supports AWS and Azure on the same "security fabric" as Google's SecOps functionality. Additionally, Google consolidated all of its security teams into a single organization, with a general manager overseeing security as a business beyond Google Cloud, making Next'24 a coming-out party for Google Cloud's security offerings.

Unifying Threat Intelligence and Security Operations

To better understand cybersecurity, let's draw a parallel with investment banking. Investment banks have equity research teams that analyze stocks and provide recommendations, while sales and trading teams execute transactions based on this research. They collaborate, sharing real-time market insights to inform trading strategies.

Similarly, in cybersecurity, threat intelligence teams analyze adversaries, incidents, and malware by scanning vast volumes of data. This intelligence is crucial for the operations teams that secure digital assets. However, the two groups often lack shared tools and a unified view of the underlying information.

Google Cloud aims to change this with a new approach to risk management that unifies CNAPP (cloud-native application protection platform) and TDIR (threat detection, investigation, and response) on a single "security fabric" platform. By combining multiple categories, Google is attempting to define a new category of its own, a feat no other security vendor has yet achieved. Categories can be limiting and primarily benefit vendors, but Google does have an opportunity to differentiate here. It won’t be easy.

Source: Google Cloud

Securing Chrome as an Endpoint

Because customers access applications through web browsers, Google recognizes the importance of securing Chrome as an endpoint. With Chrome widely adopted in the enterprise, customers want enhanced security features without having to deploy a separate browser. By treating Chrome as a secured endpoint, Google can significantly strengthen endpoint security, even where operating system vulnerabilities remain. This approach also opens up a new cybersecurity category for Google to explore.

At Next'24, Google announced the general availability of Chrome Enterprise Premium, featuring Data Loss Prevention (DLP) and context-aware proxy capabilities. Notably, Google Cloud first introduced Identity Aware Proxy (IAP) and DLP back in 2017. While many customers have started their Secure Access Service Edge (SASE) journey, most won't complete it in the near future. Instead, they're looking for incremental solutions such as proxies and DLP to supplement their existing tools. Google Cloud can capitalize on this demand with its own offerings and those of its ecosystem partners, providing a comprehensive security suite.

Balancing AI Innovation with Core Cloud Security Investments

Google has acknowledged that certain areas, such as NGFW (Next-Generation Firewall), require significant investment without clear differentiation, and has opted to partner with Palo Alto Networks instead of building its own software firewall. This approach allows Google to serve its customers while avoiding unnecessary development efforts.

Humans are the weakest link in security. Google's previous lack of focus on Identity and Access Management (IAM) has been surprising, given its critical role in cybersecurity. However, Google is now addressing this underinvested area, introducing Privileged Access Manager in preview at Next'24. Leveraging AI, Google has the opportunity to revolutionize identity management and automate manual workflows, reducing the risk of severe breaches often caused by human error.

In addition to IAM, Google is investing in other critical security areas, such as Cloud Armor for DDoS protection and Autokey for customer-managed encryption keys. It's crucial for Google to keep differentiating its core cloud security offerings and to ensure the core platform receives the investment it needs, rather than focusing disproportionately on AI.

Challenges ahead

Google Cloud has laid a strong foundation with its security portfolio, and its intentions are commendable. Nevertheless, there are challenges that need to be addressed to fully realize its vision.

Crafting a Unified and Compelling Security Story

Google Cloud's security portfolio messaging is overly product-focused, rather than solution-centric. Customers are looking for solutions; they don’t want to solve a puzzle of mapping problems to products. On top of that, by combining CNAPP and TDIR, Google is creating a unique category, but this complexity can make it difficult for customers to understand how Google Cloud security offerings align with their specific needs, especially if they don't primarily use Google Cloud. This is particularly challenging for organizations that don't consider Google a security vendor, despite Google's multi-cloud security offerings.

Google will have a decision to make: Does it see its security offerings primarily as a differentiator that leads customers to choose Google Cloud, or as products that differentiate on their own merits, regardless of the underlying cloud platform they run on? Ideally, Google can achieve both, being the most secure cloud and a highly differentiated multi-cloud security vendor, but it needs to articulate a unified and compelling story that supports this vision.

Hybrid Environments and the IoT and OT Security Gap

Despite the growing adoption of public cloud, 80% of IT workloads remain on-premises. While public cloud providers anticipate that customers will modernize and migrate their on-premises workloads, the reality is that most legacy workloads will stay on-premises in the near future. Moreover, the vast array of connected Operational Technology (OT) and Internet of Things (IoT) devices, running various legacy and non-standard operating systems, poses significant security challenges. Attackers often exploit these devices as gateways to propagate malware to other systems.

As a native cloud company, Google is not well positioned to secure these on-premises assets, nor should it attempt to build on-premises solutions. Instead, Google needs to foster strong partnerships to address this critical gap. Most organizations will operate in a hybrid environment for the foreseeable future, and industries with a high concentration of vulnerable OT and IoT devices, such as healthcare, retail, oil and gas, manufacturing, mining, and utilities, require comprehensive security solutions. Google Cloud must develop a compelling and comprehensive strategy to support these organizations and secure their on-premises workloads and devices.

Balancing Competition and Partnership, a Delicate Dance

Google is navigating a delicate balance with its security partners, many of whom have made significant investments in Google Cloud to serve their shared customers. While Google will inevitably compete with some of these partners, not just on Google Cloud but across public cloud platforms, it has committed to protecting their interests and has contractual obligations in place. These partners generate substantial revenue for Google Cloud, making it crucial for Google to tread carefully and avoid disrupting this revenue stream.

To strike the right balance, Google must compete and differentiate its offerings with clarity and integrity, driven by a genuine vision to innovate and improve products, rather than simply seeking to boost growth. By doing so, Google can maintain trust and collaboration with its partners while advancing its own goals.

Recommendations for customers

Assess and Align Your Security Tools Landscape

As a Google Cloud prospect or customer, take a comprehensive inventory of your current security tools landscape, encompassing Google Cloud and its partner ecosystem. Engage with Google Cloud and security tool vendors to discuss their roadmaps for Google Cloud, with a specific focus on how they plan to leverage AI to address your unique requirements. Additionally, consider exploring tools that offer multi-cloud support, regardless of your primary cloud provider, to future-proof your security infrastructure.

Go Beyond Categories to Solve Specific Problems

Instead of shopping by category, focus on solving specific problems. Shift your mindset from being a vendor manager to a problem-solver, targeting the outcomes you want to achieve. Remember, your unique starting point and system maturity may differ from others, so avoid a one-size-fits-all approach. Tailor your solutions to your distinct needs and goals.

Advocate for Your Needs and Shape the Security Conversation

Clearly articulate and communicate your expectations to vendors, as security is a complex and vast domain. Recognize that vendors prioritize their product roadmaps, just as you prioritize your needs. Engage in open discussions with fellow security and technology leaders to share strategies and learn from their experiences. Be an active and vocal participant in the community, shaping the conversation and influencing the solutions that meet your needs.

Stay Ahead of Cybersecurity Threats with Expert Trends Report

As you craft your security strategy and execution plan, check out our "11 Top Cybersecurity Trends of 2024 and Beyond." (If you're a vendor and don't have access to the report, please contact me for a courtesy copy.) Drawing on numerous conversations with security, technology, and business leaders as well as extensive market research, this cybersecurity trends report offers a holistic view of the broader cybersecurity landscape. It also offers tangible recommendations for CxOs who are frantically navigating the cybersecurity maze to design and operationalize their cybersecurity strategy, with the goal of improving their defenses against increasingly sophisticated attacks.
