Editor in Chief of Constellation Insights

Constellation Research

About Larry Dignan:

Dignan was most recently Celonis Media’s Editor-in-Chief, where he sat at the intersection of media and marketing. He is the former Editor-in-Chief of ZDNet and has covered the technology industry and transformation trends for more than two decades, publishing articles in CNET, Knowledge@Wharton, Wall Street Week, Interactive Week, The New York Times, and Financial Planning.

He is also an Adjunct Professor at Temple University and a member of the Advisory Board for The Fox Business School's Institute of Business and Information Technology.

Constellation Insights does the following:

Cover the buy side and sell side of enterprise tech with news, analysis, profiles, interviews, and event coverage of vendors, as well as Constellation Research's community and…


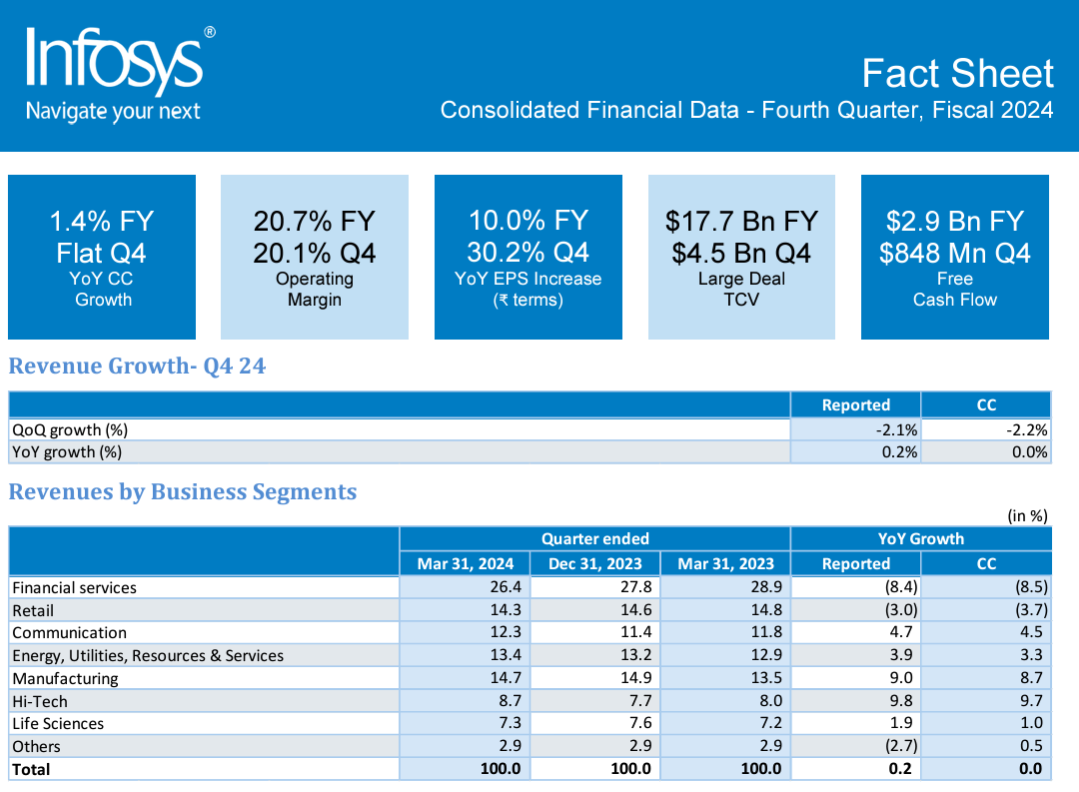

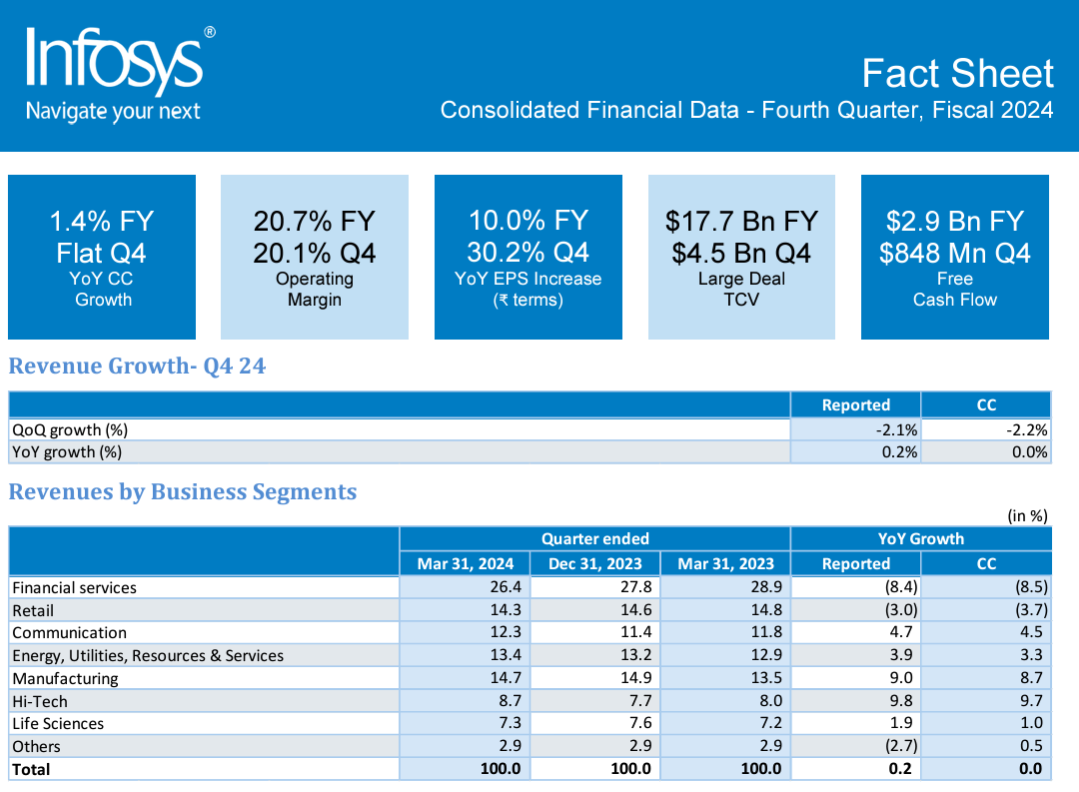

Infosys CEO Salil Parekh said the services provider is landing large deals and "seeing excellent traction with our clients for generative AI work," but its fourth quarter was mixed. Infosys also said it would acquire In-tech, an engineering and R&D services provider catering to the German automotive industry.

The company reported fourth-quarter earnings of $958 million on revenue of $4.56 billion, flat from a year ago. Wall Street was expecting Infosys to report fourth-quarter earnings of 17 cents a share. For the year ended March 31, Infosys reported earnings of $3.17 billion on revenue of $18.56 billion.

For fiscal 2025, Infosys projected revenue growth of 1% to 3% with operating margins of 20% to 22%.

Constellation Research CEO Ray Wang said Infosys is facing a bevy of moving parts that the company will have to navigate. "The combination of AI arbitrage, margin compression, and exponential efficiency is having an effect on the overall market. Constellation expects that the overall service market will be flat to single digit growth for the next year," said Wang.

Constellation ShortList™ Digital Transformation Services (DTX): Global

Indeed, Infosys outlined a bevy of items on its earnings call. During the fourth quarter, Infosys said it had "a rescoping and renegotiation of one of the large contracts in the financial services segment." That renegotiation led to a 1% revenue hit, but 85% of the contract continued as is.

On a conference call, Parekh said fiscal 2024 brought in $17.7 billion in large deals. These large deals revolve around cost efficiency and consolidation.

Here's a look at what Infosys is facing.

The good

Generative AI remains a highlight for Infosys. Parekh said:

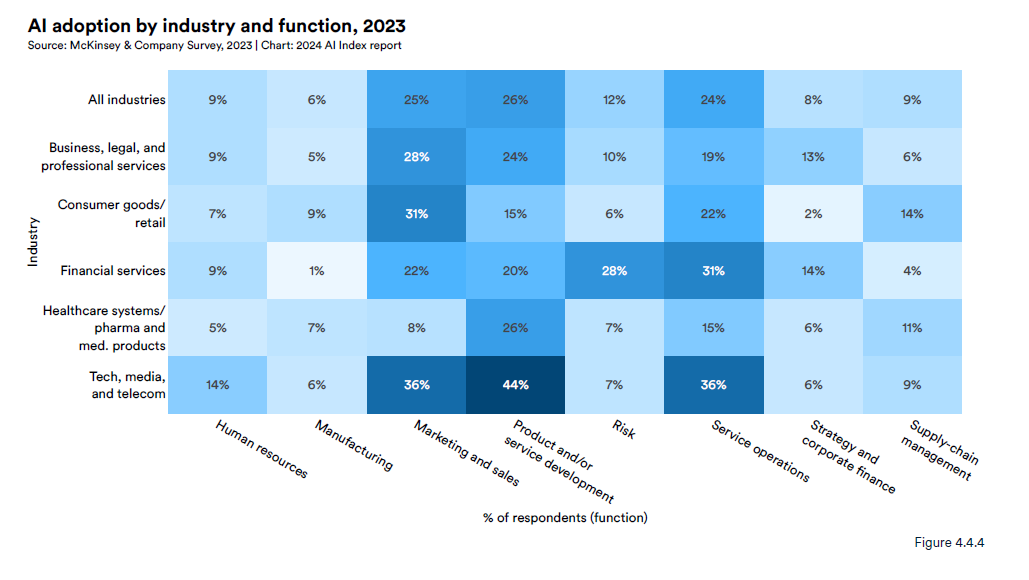

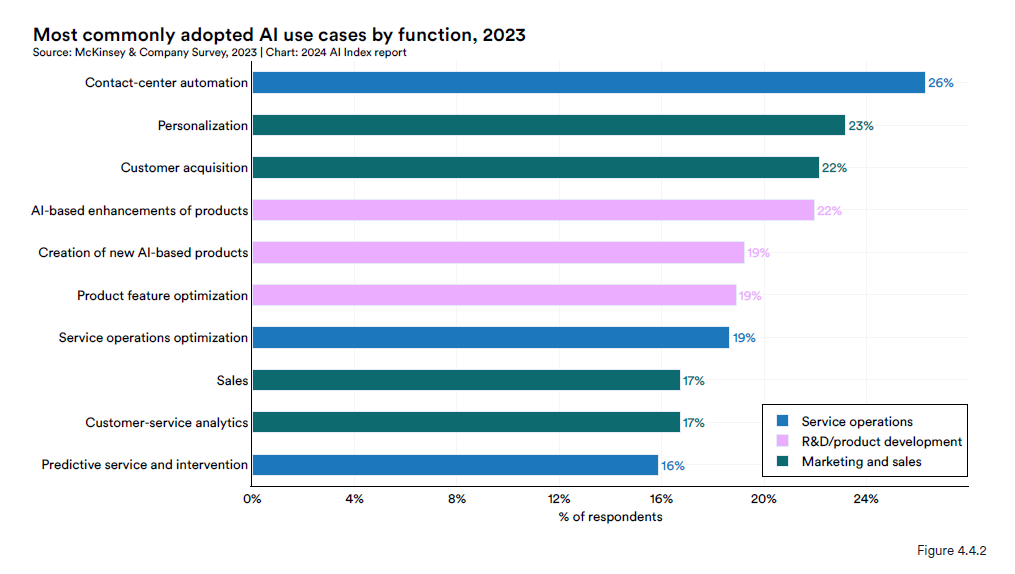

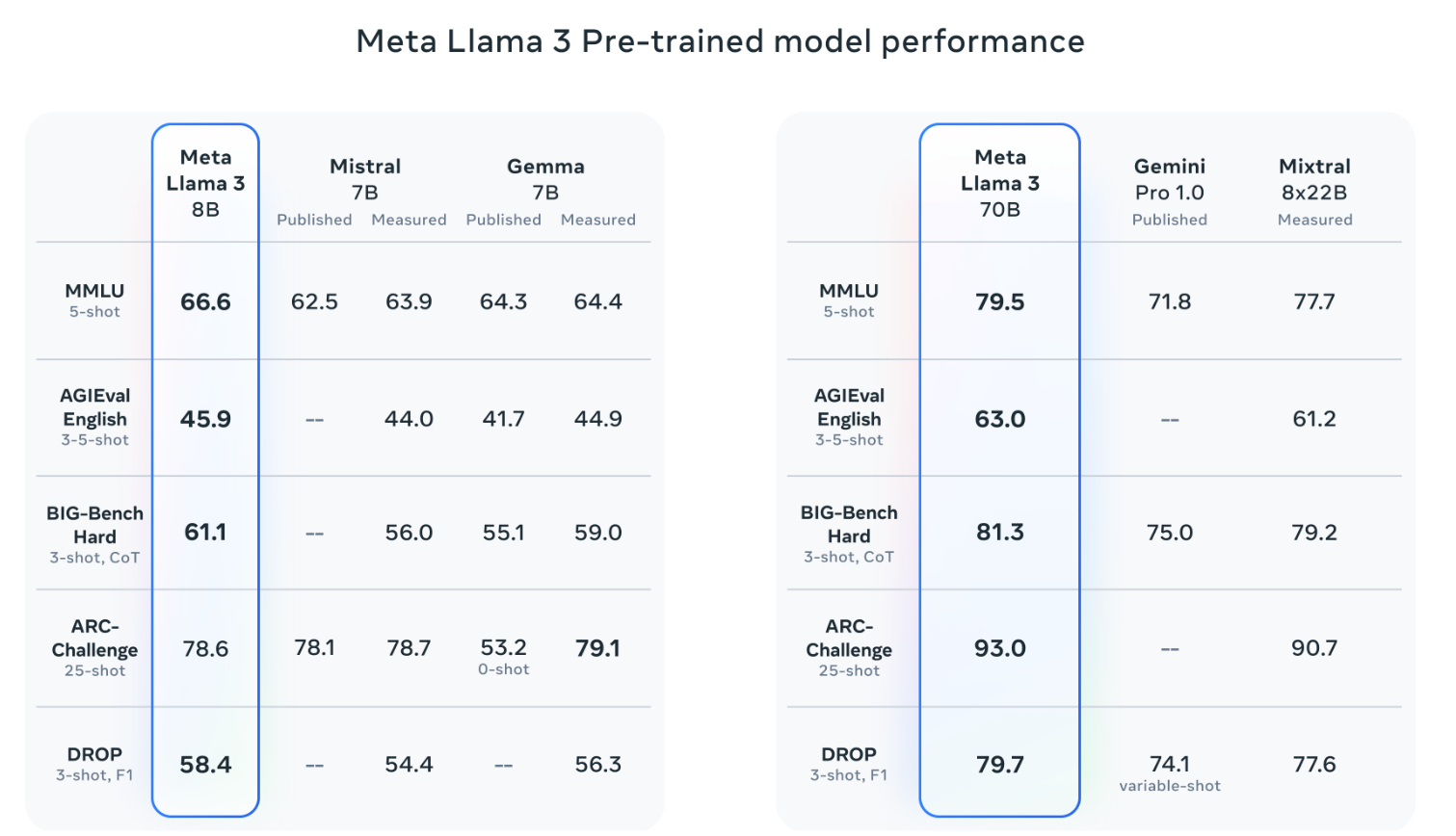

"We're working on projects across software engineering, process optimization, customer support, advisory services and sales and marketing areas. We're working with all market-leading open access and closed large language models.

As an example, in software development, we've generated over 3 million lines of code using one of generative AI large language models. In several situations, we've trained the large language models with client specific data within our projects. We've embedded generative AI in our services and developed playbooks for each of our offerings."

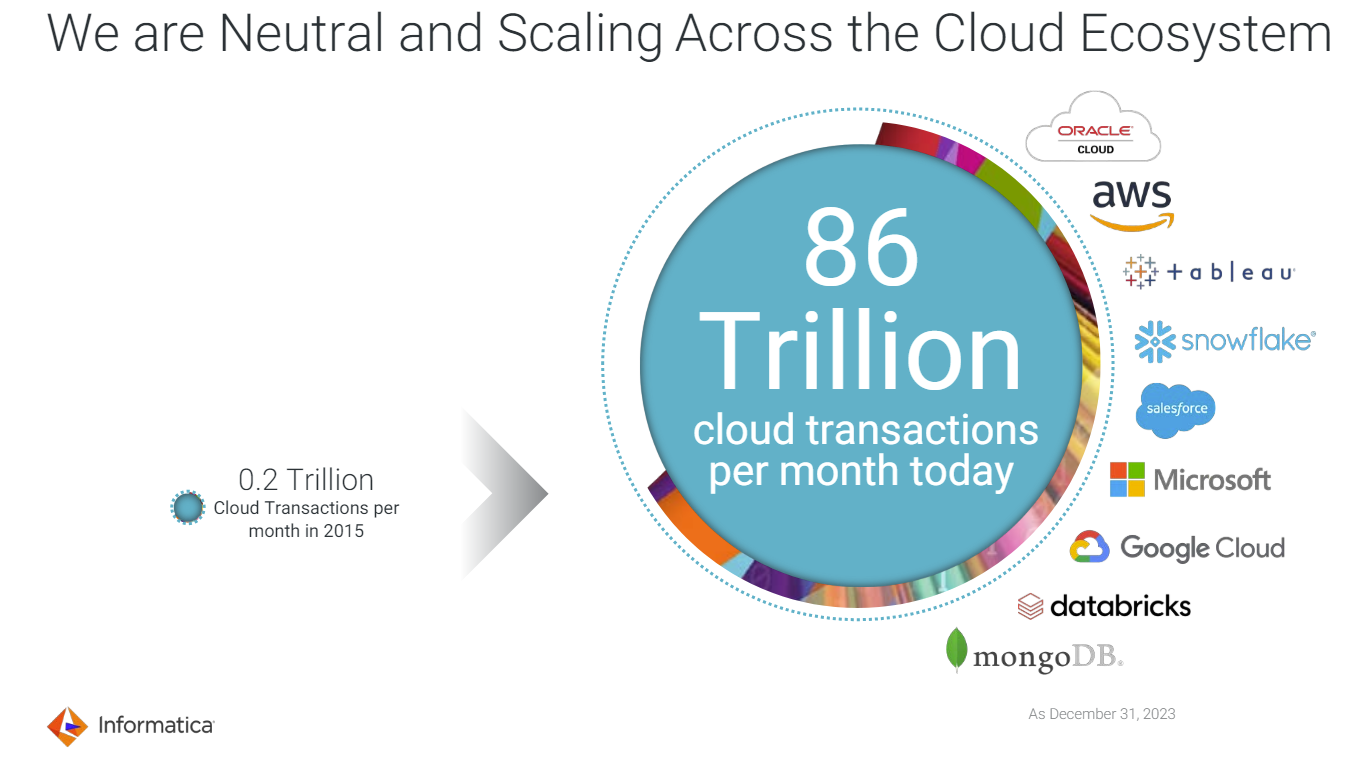

Public and private cloud migrations remain a priority. "We continue to work closely with the major public cloud providers and on private cloud programs for clients. Cloud with data is the foundation for AI and generative AI and Cobalt encompasses all of our cloud capabilities," said Parekh.

Data and automation. Parekh said the acquisition of In-tech played into the data strategy for Infosys. "We see data structuring, access, assimilation critical to make large language models and foundation models to work effectively, and we see good traction in our offering to get enterprises, data ready for AI," he said.

The challenges

Jayesh Sanghrajka, CFO of Infosys, said the company saw 180 basis points of margin compression quarter over quarter due to the renegotiation of the large contract, salary increases, brand building and visa expenses.

Infosys offset some of the margin hit with lower post-sales customer support costs and an efficiency effort called Project Maximus.

On the economy, Sanghrajka said:

"We continue to see macroeconomic effects of high inflation as well as high interest rates. This is leading to cautious spend by clients who are focusing on investing in services like data, digital, AI and cloud."

Industry demand

Sanghrajka said industry demand was mixed, with strength in financial services, manufacturing and retail.

Financial services: "Financial services firms are actively looking to move workloads to cloud, pipeline and deal wins are strong and we are working with our clients on cost optimization and growth initiatives."

Manufacturing: "There is increased traction in areas like engineering, IoT, supply chain, smart manufacturing and digital transformation. In addition, our differentiated approach to AI is helping us gain mind and market share. Topaz resonates well with the clients. We have a healthy pipeline of large and mega deals."

Retail: "In retail, clients are leveraging GenAI to frame use cases for delivering business value. Large engagements are continuing S/4HANA and along with infra, apps, process and enterprise modernization. Cost takeout remains primary focus."

Communications: Clients remain cautious, and budgets are tight. Sanghrajka said cost takeout, AI and database initiatives may show promise.

Overall, Sanghrajka said Infosys should benefit from recently won large deals as well as AI. "We are witnessing more deals around vendor consolidation and infra managed services. Deal pipeline of large and mega deals is strong due to our sustained efforts and proactive pitches of our cost takeouts and digital transformation, etc., across the subsectors," he said.
