Nvidia launched its Nemotron 3 family of open models, aiming to provide an efficient set of large language models that enterprises can customize and deploy in multi-agent systems.

The company said it is releasing open models, training data and libraries. Nvidia, which doesn't have to worry about monetizing models since it cashes in on GPU sales, is focused on providing tools to build agentic AI systems, which will use multiple LLMs focused on various tasks.

Nvidia is also filling a major gap in US open models. Meta's Llama hasn't been updated as the company has retooled its AI unit and may be focusing on proprietary models.

Nemotron 3 models will come in three sizes: Nano, Super and Ultra. Nvidia says Nemotron 3 Nano provides 4x higher throughput than Nemotron 2 and delivers the most tokens per second for multi-agent systems at scale.

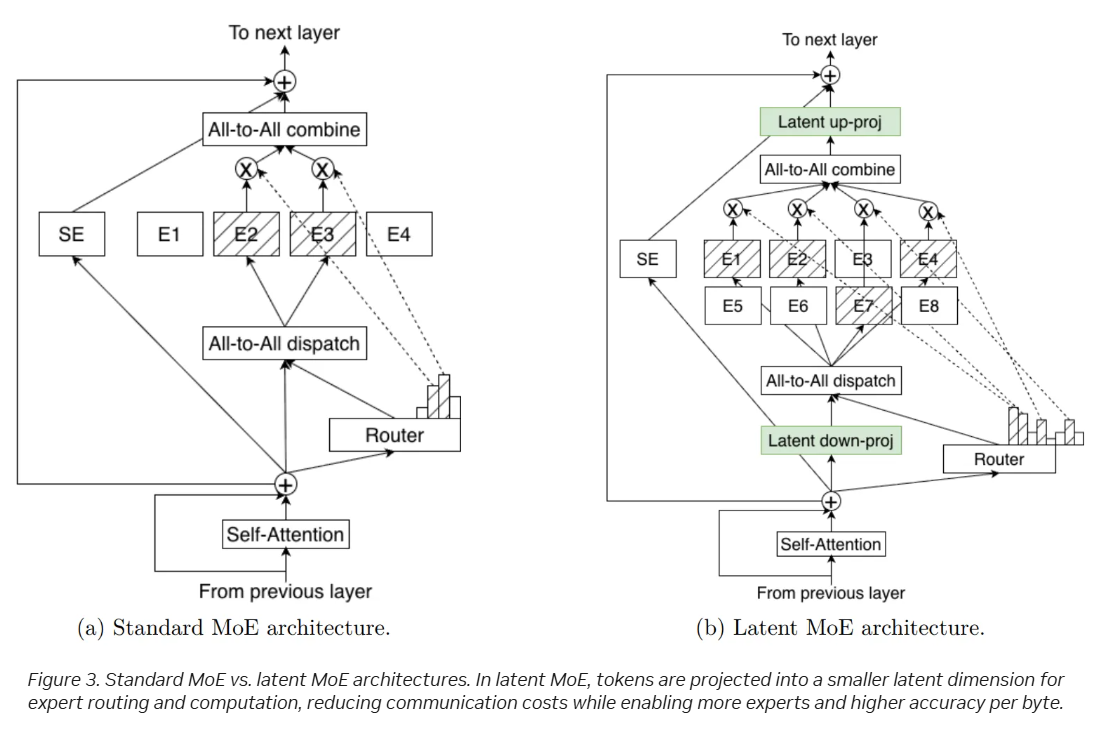

The Nemotron 3 Super and Ultra models use a hybrid latent mixture-of-experts (MoE) architecture.

Key points about the Nemotron 3 models include:

- Nemotron 3 is aimed at multi-agent use cases with a focus on issues such as context drift and high inference costs.

- Nvidia argues that the open approach gives enterprises transparency, and that this transparency will give developers the confidence to automate workflows.

- Customization is critical and the open approach enables more specialization.

- Nemotron 3 Nano is a small, 30-billion-parameter model that activates up to 3 billion parameters at a time. Nemotron 3 Nano is designed for efficiency and tasks including software debugging and content summarization.

- Nemotron 3 Super is a high-accuracy reasoning model with approximately 100 billion parameters and up to 10 billion active per token. Nemotron 3 Super is designed for multi-agent applications.

- Nemotron 3 Ultra is a large reasoning engine with about 500 billion parameters and up to 50 billion active per token. Nemotron 3 Ultra is designed for complex AI applications.

- Super and Ultra use Nvidia's 4-bit NVFP4 training format on the Nvidia Blackwell architecture.

- Nvidia released three trillion tokens of new Nemotron pretraining, post-training and reinforcement learning datasets as well as safety datasets.
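
The total and active parameter counts quoted above can be compared with a little arithmetic. The following is a minimal sketch that uses only the approximate figures from this article; the names `MODELS` and `active_fraction` are illustrative and are not part of any Nvidia library.

```python
# Approximate figures quoted in the article, in billions of parameters:
# (total parameters, maximum active parameters).
MODELS = {
    "Nano": (30, 3),
    "Super": (100, 10),
    "Ultra": (500, 50),
}


def active_fraction(total_b, active_b):
    """Return the share of parameters that are active, as a value in [0, 1]."""
    return active_b / total_b


for name, (total_b, active_b) in MODELS.items():
    share = active_fraction(total_b, active_b)
    print(f"Nemotron 3 {name}: up to {share:.0%} of {total_b}B parameters active")
```

On these rounded numbers, each model activates roughly one-tenth of its parameters at a time, which is the source of the efficiency Nvidia is pitching for mixture-of-experts designs.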

Nvidia named multiple early adopters, including Accenture, CrowdStrike, Oracle, Palantir and ServiceNow.

Nvidia's game plan is to use Nemotron 3 to let developers mix and match open models with proprietary offerings to optimize costs.

Nemotron 3 Nano is available now on Hugging Face and through inference service providers including Baseten, DeepInfra, Fireworks, FriendliAI, OpenRouter and Together AI. Nemotron is also available on platforms from Couchbase, DataRobot, H2O.ai, JFrog, Lambda and UiPath. In addition, Nemotron 3 Nano is available on AWS via Amazon Bedrock, with availability on Google Cloud, CoreWeave, Crusoe, Microsoft Foundry, Nebius, Nscale and Yotta to follow.

According to Nvidia, Nemotron 3 Super and Ultra will be available in the first half of 2026.