Google I/O 2024: Multimodal Gemini, Project Astra, AI agents and 'teammates'

Google I/O 2024 featured a bevy of generative AI advances that will be layered throughout Google's product portfolio--photos, music, Workspace, search, video and other areas--but the real takeaway was that the company laid out its vision for agents, models training models, and creating systems that work for you.

Sundar Pichai, Alphabet CEO, outlined the vision a day after OpenAI unveiled GPT-4o.

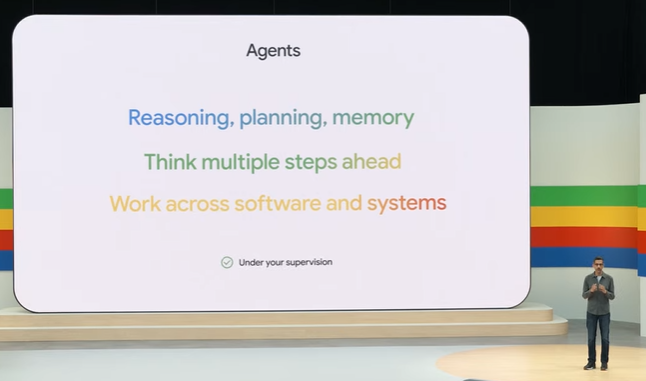

"With multi-modality soon you'll be able to mix and match inputs and outputs. This is what we mean when we say it's an IO for a new generation. That's one of the opportunities we see with AI agents. I think of them as intelligent systems that show reasoning, planning and memory and are able to think multiple steps ahead. They work across software and systems, all to get something done on your behalf and most importantly, under your supervision.

We are still in the early days, and you will see glimpses of our approach throughout the day."

Search is obviously the most important venue for Google's generative AI given that it funds the model buildout. Google rolled out multistep reasoning in search and features such as AI Overviews. Google search will ultimately reason, do research for you and tap into real-time data. We assume that this work on your behalf will feature advertising, but the data loop may be more important.

But search was just the obvious headliner--for developers and Wall Street. Here are the takeaways to note from Google I/O's barrage of news, which served as a sequel to Google Cloud Next.

Project Astra. Astra is a "universal AI agent" designed to be helpful in everyday life. Astra is one of the reasons Gemini is multimodal and has AI training AI models. Google DeepMind chief Demis Hassabis said Astra is a creation that's based on models training models and data feedback loops.

"At any one time, we have many different models in training, and we use our very large and powerful ones to help teach and train our production ready models together with user feedback," said Hassabis.

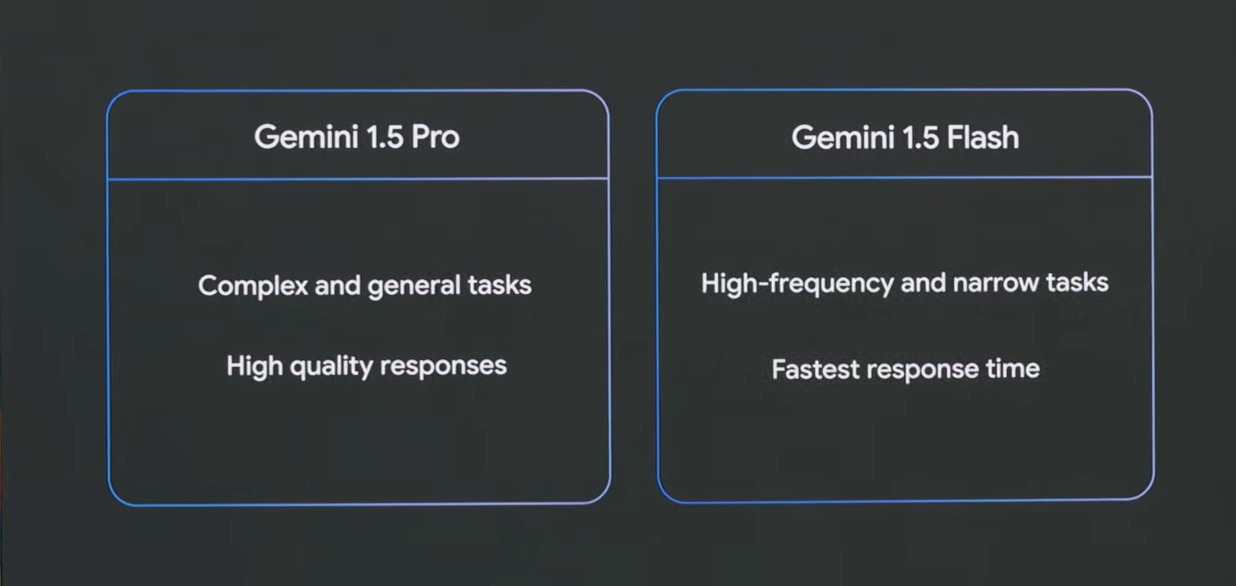

The token arms race. Pichai outlined how 1.5 million developers are using Gemini models to create next-gen AI applications. Gemini 1.5 Pro will now have a 2 million token context window and aggressive pricing. Gemini 1.5 Pro will be available today in Workspace Labs and be capable of synthesizing meetings, documents and your Gmail quickly.

Trillium is a new TPU that will be available on Google Cloud for machine learning and AI workloads. "Trillium delivers a 4.7x improvement in compute performance per chip over the previous generation. It's our most efficient and performant TPU to date. We'll make Trillium available to our cloud customers in late 2024," said Pichai, who noted that Google Cloud will offer Nvidia Blackwell GPUs too. Pichai's message was that Google has the scale to keep building out infrastructure for the AI arms race, whether it's in liquid cooling advances, custom processors or fiber cables around the world.

Gemini 1.5 Flash is a model that's expected to be as powerful as Gemini 1.5 Pro, but faster. Gemini 1.5 Flash is designed for low-latency tasks and fits the trend toward small, fast models. Available in public preview, it is built for speed and real-time answers such as customer service responses, even with a context window of up to 1 million tokens.

- Foundation model debate: Choices, small vs. large, commoditization

- Google Cloud Next: The role of genAI agents, enterprise use cases

- Google Cloud Next 2024: Google Cloud aims to be data, AI platform of choice

- Google Unveils Ambitious AI-Driven Security Strategy at Google Cloud Next'24

- Equifax bets on Google Cloud Vertex AI to speed up model, scores, data ingestion

Search will have planning capabilities. Google pitched a vision where search can answer complex questions and plan. When looking for ideas, Google will give you an AI-generated answer powered by Gemini. These answers and suggestions will likely be monetized. AI-organized search results will appear when you're looking for inspiration. Don't be surprised if these planning capabilities turn up in Google Cloud services. Would business and process planning be much of a leap?

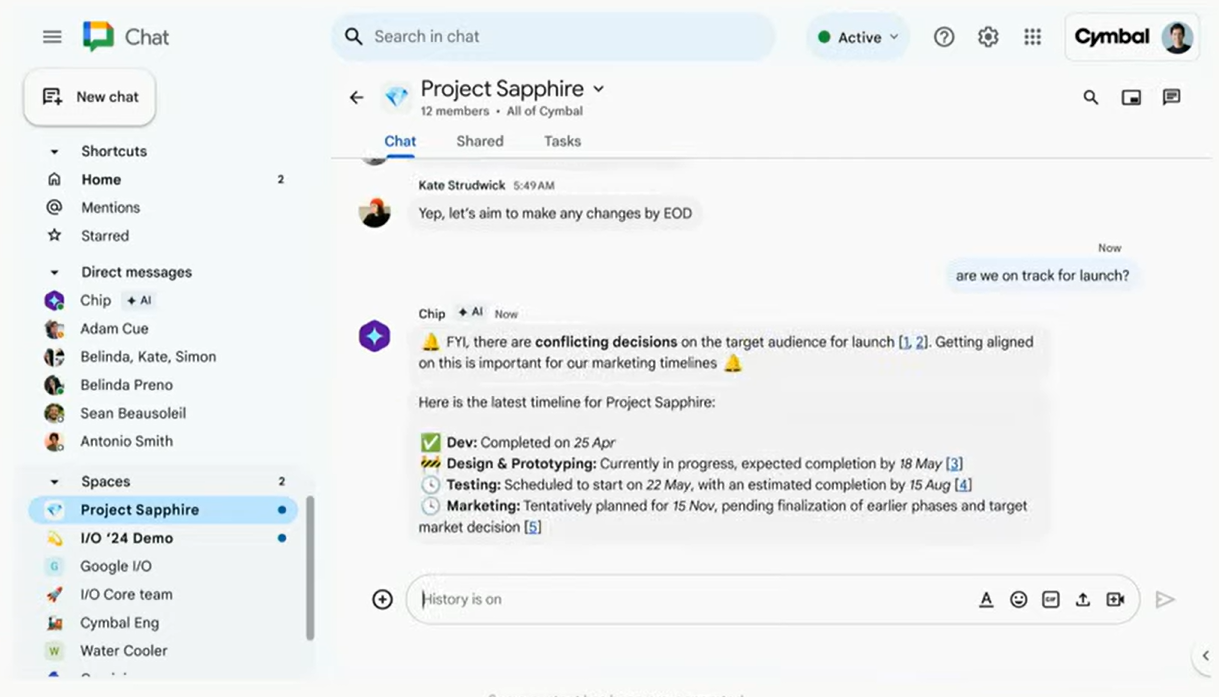

Meet your AI teammate. Workspace's Gemini-powered side panel will be available this month. These features across Workspace apps--Gmail, Meet, Docs--were announced at Google Cloud Next. Google demonstrated AI Teammate, an agent that has a Workspace account and participates in tasks and projects. The demo featured "Chip," an agent configured by the business. The timeline? Stay tuned. Google is also rolling out Gems, which are personalized Gemini assistants.

Gemini Nano will be multi-modal on Android devices. This on-device AI announcement front-runs Apple's WWDC, and the move highlights how smartphones and edge devices will run models with low latency and create new experiences. Android 15 will feature "Gemini at the core."