Zoho makes big AI move with launch of Zia LLM, pack of AI agents

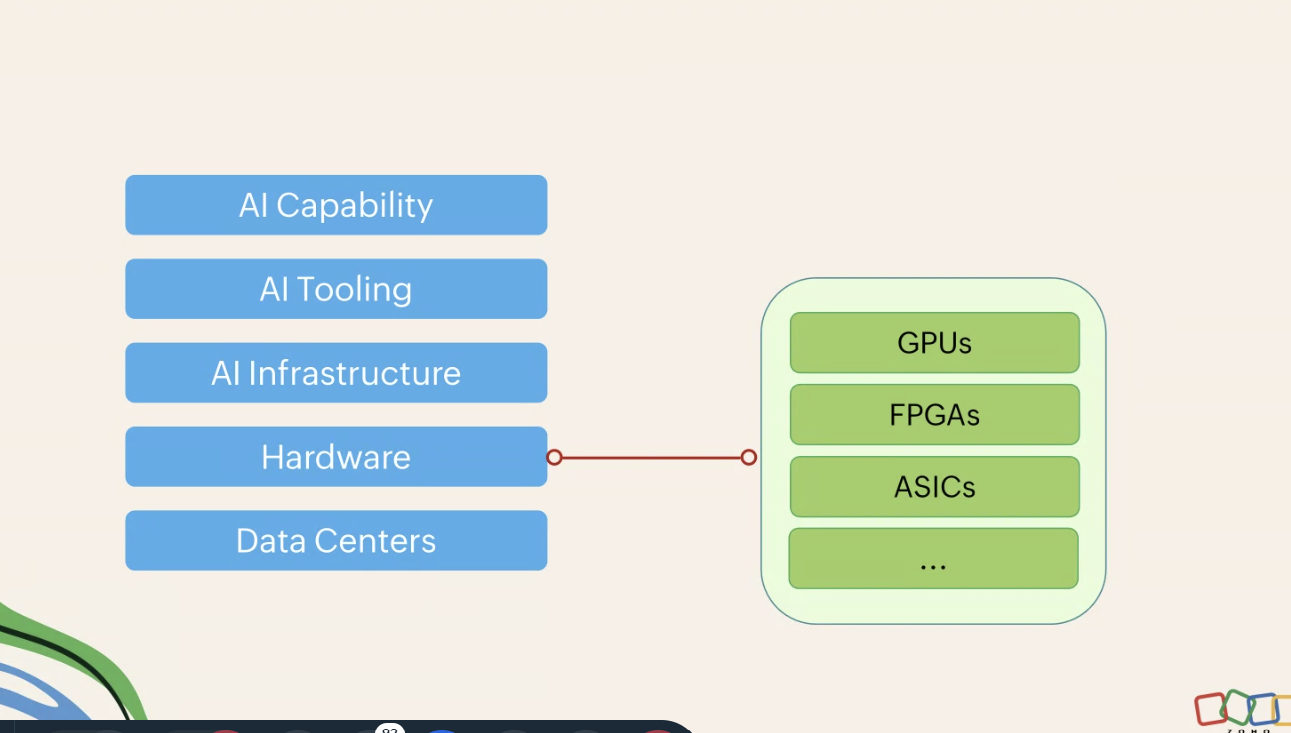

Zoho has launched its own large language model, Zia LLM, along with 40 pre-built Zia Agents, a no-code agent builder called Zia Agent Studio, and a Model Context Protocol (MCP) server that will connect its AI actions with third-party agents. Together, these launches show Zoho trying to democratize AI and differentiate itself with a strategy built on developing its own right-sized models, optimizing them, and passing the savings on to customers.

For Zoho, the series of launches fleshes out its agentic AI strategy with the aim of democratizing various use cases, workflows and automation for enterprises of all sizes.

CEO Mani Vembu said Zoho's goal is to build foundational AI internally to deliver more value and a more integrated approach that "allows us to bring customers around the world cutting edge toolsets at a lower cost."

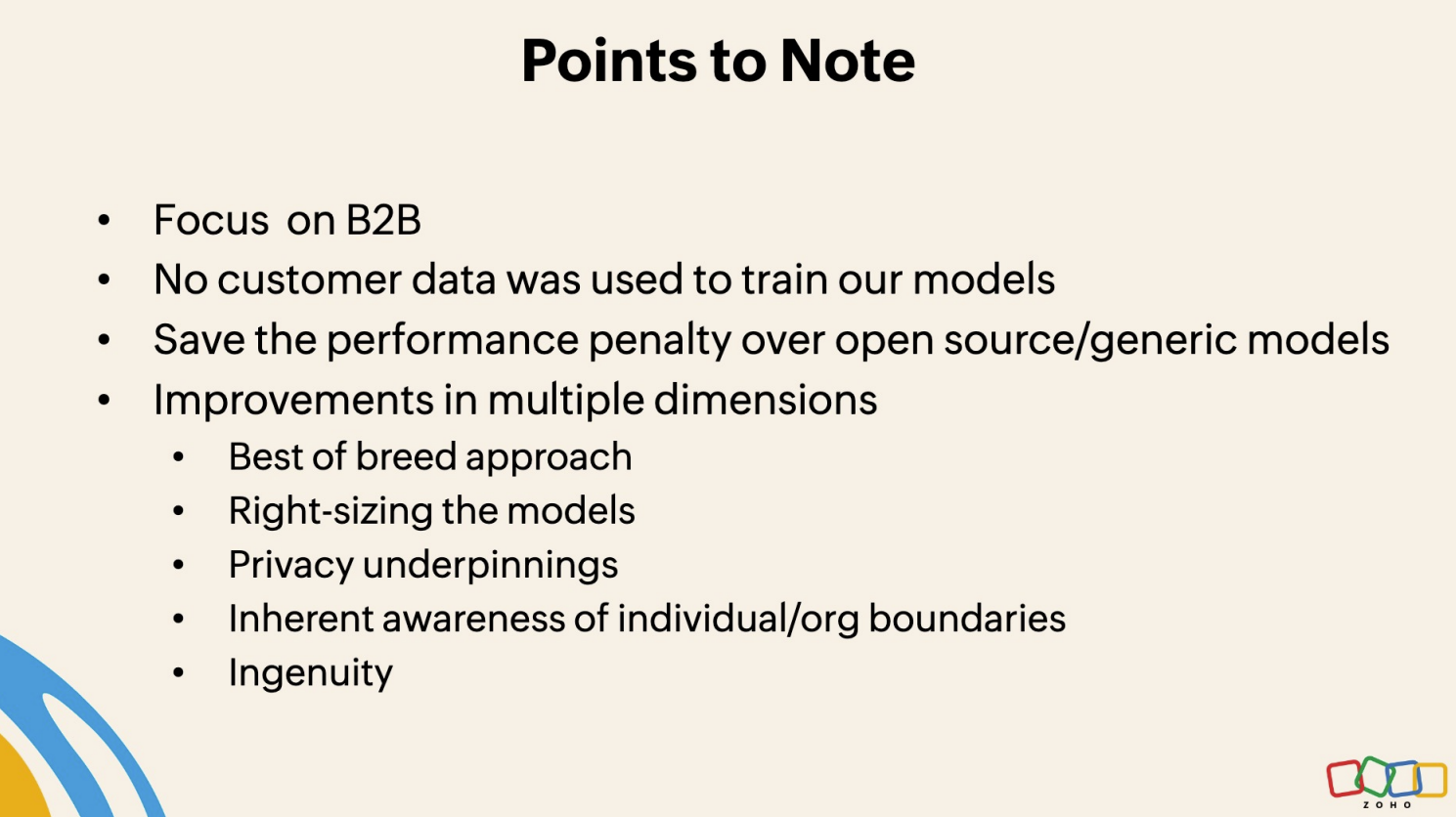

Zoho's AI strategy prioritizes privacy and value. Its generic AI models across the Zoho platform aren't trained on consumer data and don't retain customer information. The goal is to use right-sized models that don't break the bank.

Zia LLM was trained and built entirely in India using Nvidia's platform. The foundational model was trained with Zoho product use cases in mind and can handle structured data extraction, summarization, retrieval-augmented generation (RAG) and code generation.

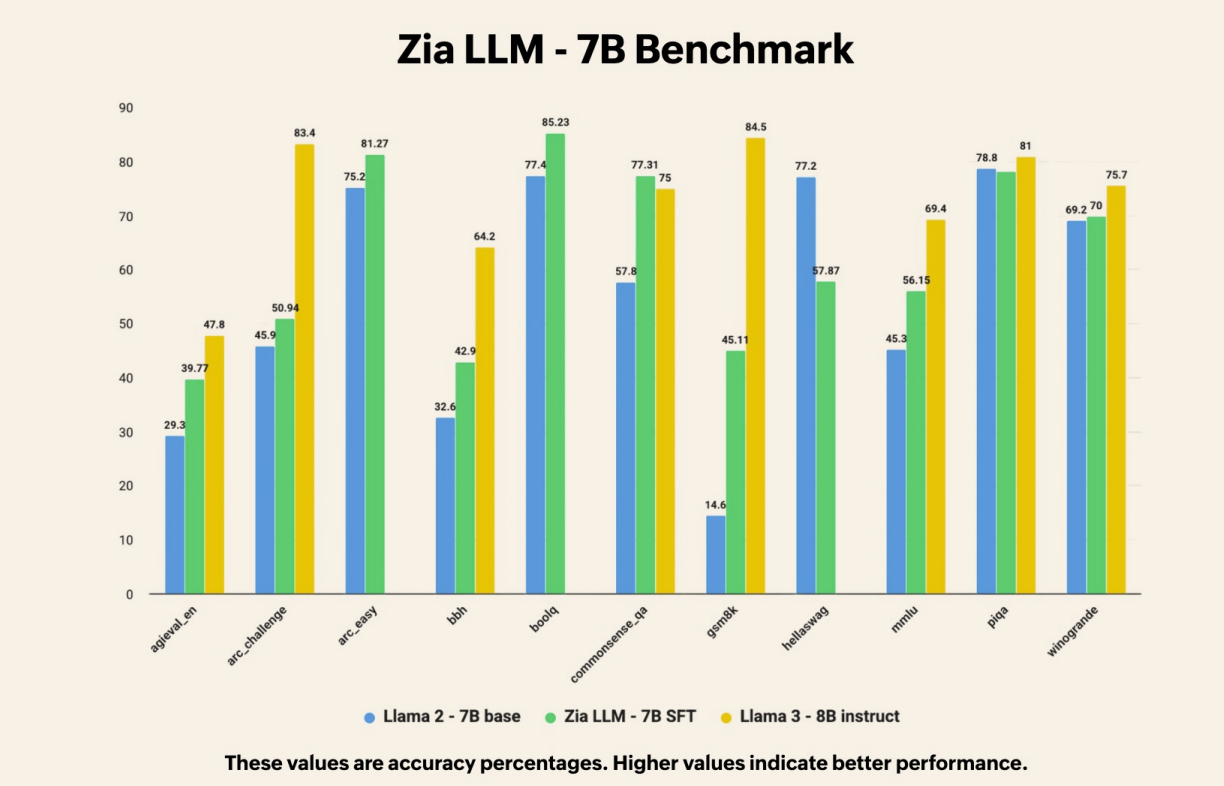

Zia LLM is a family of three models, with 1.3 billion, 2.6 billion and 7 billion parameters, and Zoho says they perform competitively against comparable open source models. Zoho plans to mix and match the models to fit each context and strike the right balance between compute power and performance.

Zoho also announced two automatic speech recognition (ASR) models, one for English and one for Hindi, that are optimized for low compute resources. Zoho plans to support more languages in the future.


According to Zoho, it will still support multiple LLM integrations on its platform, including OpenAI's ChatGPT, Llama and DeepSeek, but reckons Zia LLM will offer a better privacy profile since customer data will remain on Zoho servers. Part of the cost equation for Zoho customers is that they can use Zia LLM and AI agents without sending data to cloud providers.

Raju Vegesna, Chief Evangelist at Zoho, said the company isn't initially charging for its LLM or agents until it has a better view of usage and operational costs. "If there's a big operational resource needed for intensive tasks we may price it, but for now we don't know what it looks like so we're not charging for anything," he said.

So far, Zia LLM has been deployed in Zoho data centers in the US, India and Europe, where the model is being tested on internal use cases across Zoho's app and service portfolio. Zoho said Zia LLM will become available in the months ahead and will receive regular updates that increase its parameter sizes by the end of 2025.

Zoho said it is also planning to launch a reasoning language model (RLM).

"It's good to see Zoho charting its unique course into the AI era and now adding its in-house Zia models," said Constellation Research analyst Holger Mueller. "With its focus on privacy and cost effectiveness, in-house built LLMs are the right strategy for Zoho. Now Zoho has to show that it can keep up with the LLM competition."

Why build your own LLM? B2B models are different

Zoho decided to build its own LLM from scratch for multiple reasons:

- Investing in its own LLM would give Zoho downstream benefits that would improve its platform and enable new features.

- Zoho was already having success with dozens of AI models that weren't LLM-based.

- The company wanted control of the LLM layer, since it would be a core part of the platform that Zoho would need to tweak continually. "We don't like black boxes," said Vegesna.

- Cost to performance is critical for enterprises and B2B software providers. By developing its own LLM, Zoho doesn't have to pass on additional costs to customers.

Zoho started LLM development within the last two years, and two developments accelerated the pace. First, Zoho partnered with Nvidia. "B2B models are different than B2C and part of the technical partnership was about knowledge sharing," said Vegesna, who noted that Nvidia had more experience with B2C. "With B2B, you don't worry about broader concepts as much. You narrow things down because you don't need the biggest model for every single task."

Vegesna said open source models such as Llama and DeepSeek, both supported by Zoho, also provided insights that improved Zia LLM once development was underway. Work on Zia LLM began before those open source options appeared.

Zoho also drew on its own visibility into how its APIs were used, which showed that narrow models and non-LLM models were often used. Vegesna said Zoho is focused on using the right model at the right cost and optimizing for workflows.

"For the majority of use cases, narrow to smaller models will do the job," said Vegesna.

Observability into its own platform enabled Zoho to optimize Zia LLM for the most common scenarios, which should keep costs low. Vegesna said Zoho will continue to let customers use third-party LLMs, and the company hosts top open source models such as Llama, DeepSeek and Alibaba's Qwen.

"The customer will decide on the models used and Zoho LLMs will be an option," said Vegesna. "We have customers that don't want to rely on third party LLMs and we saw many of them taking open source models and optimizing them for their environments. Now we have that core technology, we can play the long game."

The agentic AI play

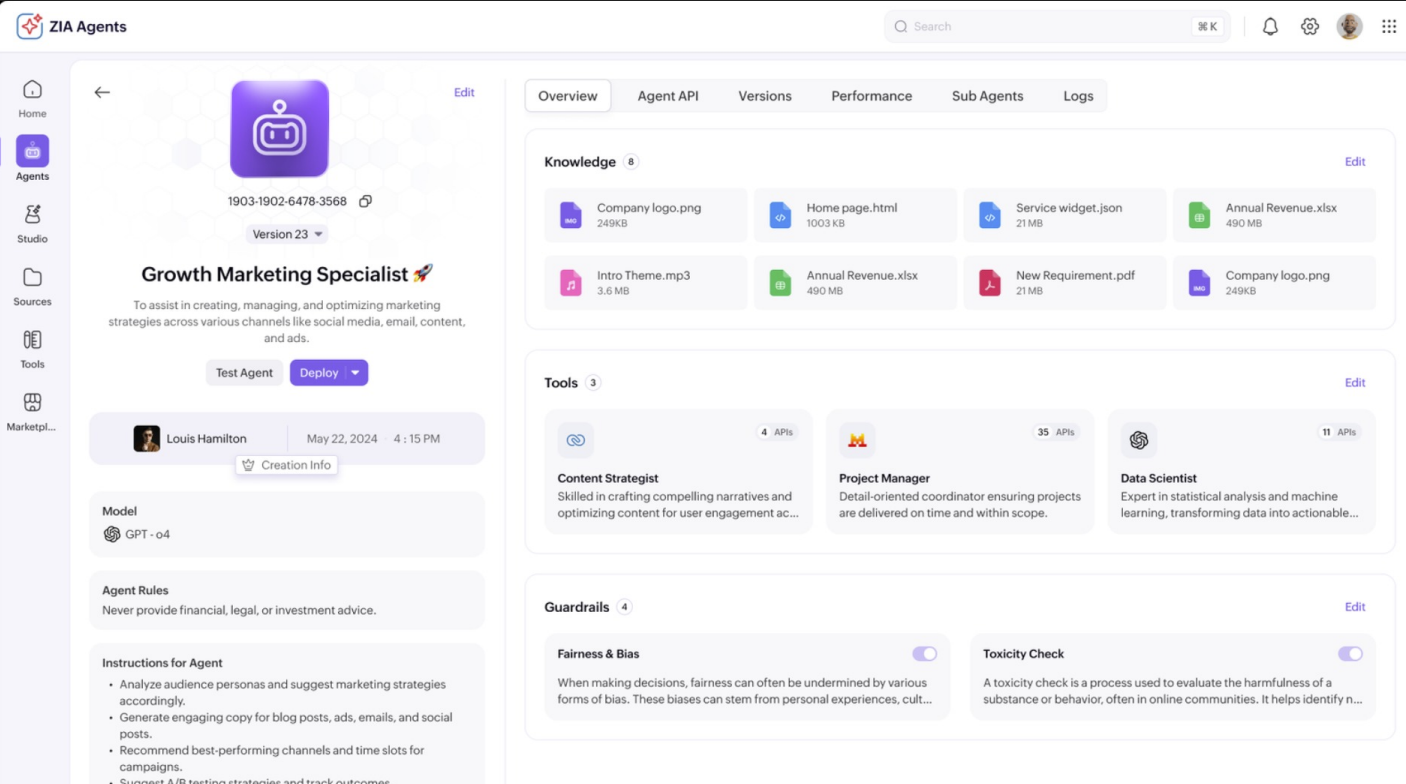

Zoho's strategy for AI agents is to offer dozens of prebuilt agents that can perform actions based on enterprise roles such as sales development, customer support and account management. The company's 40 prebuilt agents will be native in Zoho Marketplace and available for quick deployment in Zoho apps.

Zia Agents can be used within a Zoho app, across the company's stack of 55 applications or customized to specific use cases.

A few of the prebuilt Zia Agents include:

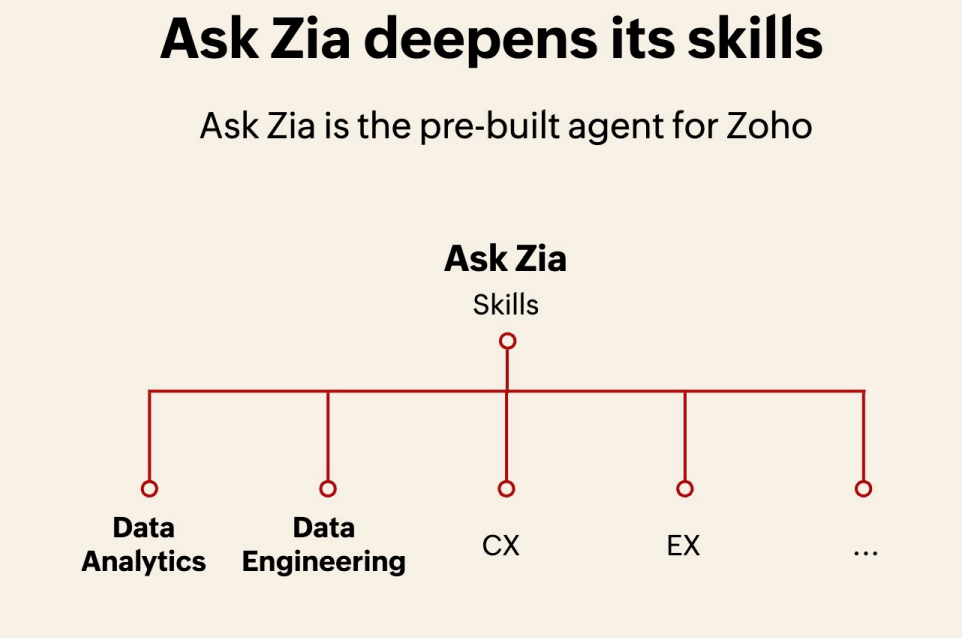

- A new version of Ask Zia, a conversational assistant for data engineers, analysts and data scientists that can also democratize information for business users. Ask Zia is set up to address the pain points each persona faces.

- Customer Service Agent, which processes incoming customer requests, understands context, and either answers directly or hands off to a human. This agent will be integrated into Zoho Desk.

- Deal Analyzer, which provides insights on win probability and next-best actions.

- Revenue Growth Specialist, which looks for opportunities to upsell and cross-sell to existing customers.

- Candidate Screener, which identifies candidates for job openings based on role, skills, experience and other attributes.

Ask Zia agents for finance teams and customer support teams will be added.

Building and connecting agents

A big part of Zoho's AI agent plan is Zia Agent Studio, which was announced earlier this year and has been revamped to be fully prompt-based, with an option for low code.

Zia Agent Studio can build agents that can be deployed autonomously, triggered with rule-based automation or called into customer conversations.

Zoho is betting that its ecosystem of 130 million users, 55 apps and its own developers can fuel the Agent Marketplace to cover multiple use cases. Agent Marketplace now has a dedicated section for AI agents.

The company said its MCP server is designed to work across multiple applications and runs natively in Zia Agent Studio. In early access, it exposes a library of actions from more than 15 Zoho applications.

Zoho said each Zia Agent will be assigned a unique ID and mapped as a digital employee, so enterprises can analyze and audit its performance and workflows with guardrails.

According to Zoho, Agent2Agent (A2A) protocol support will be added to enable collaboration with agents on other platforms.

General availability for Zia LLM will be at the end of 2025. Zia Agents, Zia Agent Studio, Agent Marketplace and Zoho MCP Server are being rolled out to early access customers with general availability at the end of the year.

Going forward, Zoho outlined the following roadmap:

- Scale Zia LLM model sizes with parameter increases through 2025.

- Expand the languages supported by the speech-to-text models.

- Introduce a reasoning language model (RLM).

- Add skills to Ask Zia with a focus on finance teams and customer support teams.

- Add support for the Agent2Agent protocol.