
Research Report: Inside Constellation's 2021 Enterprise Awards

A YEAR THAT HAS LED TO THE GREAT REFACTORING

Despite the chaotic pandemic environment, 2021 was an amazing year for enterprise tech IPOs, product launches, and new technologies. CXOs were given the budgets to refactor their business models, add talent, and invest in a digital future. Against this backdrop, enterprises changed how they worked, what they sold, how they monetized, why they existed, and who they worked with. The underpinnings of what is known as the "Great Refactoring" began in 2021 and will fully emerge in 2022 and beyond.

The technology vendors named in this year's Enterprise Awards have shown how they can partner with clients to be successful, remain resilient in the harshest selling environments, and innovate despite the constraints placed on their businesses. It is our pleasure to announce the 2021 Constellation Enterprise Award winners.

The field of cognitive automation continues to grow. Auditoria.AI applies intelligent SmartBots to automate manual, error-prone, and time-consuming finance processes in accounts payable and accounts receivable. Using AI, Auditoria works with ERP systems, accounting platforms, and other financial systems of record. Partnerships with Bill.com, Oracle ERP Cloud, Oracle NetSuite, Sage Intacct, Workday, and collaboration tools such as Microsoft 365 and Google Workspace have put Auditoria on the map for back-office cognitive automation. In addition, Auditoria was a winner of Constellation's 2021 The Pitch awards. [R Wang]

Horizon3.ai is one of the winners of Constellation's 2021 The Pitch awards. The startup provides red teaming services that simulate a cybersecurity attack. Its penetration testing platform, NodeZero, continuously assesses an organization's attack surface. Looking for the harvested credentials, misconfigurations, dangerous product defaults, and exploitable vulnerabilities that attackers use to compromise systems and data, Horizon3.ai combines knowledge graph analytics with adaptive attack algorithms to identify vulnerabilities. From a fresh round of investment to growing awareness in the marketplace among CISOs, this Autonomous Security Platform is gaining mindshare among the security elite. [R Wang]

Mastering data is the foundation of digital transformation. Varada provides customers with a dynamic and adaptive big data indexing solution. The proliferation of data requires the ability to run any query, including ad hoc queries, experimental analytics, and vast discovery projects. Varada has emerged as a critical solution for organizations building digital proficiency and competing on decision velocity. As one of the 2021 The Pitch award winners for data to decisions, Varada is a standout solution. [R Wang]

Traditional IT vendors have often failed in the cloud era, and few have excelled. Oracle is one remarkable exception, with solid execution in 2021. This success spans the spectrum of SaaS, PaaS, and IaaS; Oracle even managed to improve its cloud ranking at a reputable analyst firm, a type of improvement that has not happened in over a decade. Across the major cloud vendors, Oracle has the most enterprise IT-friendly cloud, and it plans to match market leader AWS in 2022 in terms of locations and regions offered. Locations matter for cloud due to data residency and performance requirements. On the PaaS side, Oracle has advanced its autonomous vision, which surprisingly remains unanswered by almost the entirety of the competition. Larry Ellison's vision of the "chip to click" stack materializes nowhere better than in Oracle Exadata and its various offerings. Interestingly, Oracle is gaining a second revenue stream for databases, with a number of smart, code-based innovations around its MySQL offering with HeatWave. Finally, Oracle has been in a strong position on the SaaS side for years, but it has managed to improve the breadth and depth of its enterprise suite offerings once again. At the moment, there is no more complete and functionally rich enterprise automation suite than Oracle Fusion. Customers hope Oracle does not rest on its laurels. The largest planned acquisition in its history, Cerner, would cement its offerings in the largest vertical of the US economy and guarantee future revenue streams while locking competitors out of this key vertical. [Holger Mueller]

ServiceNow CEO Bill McDermott and team continue to add great talent, expand offerings, and make the key AI acquisitions required for future growth. This has led to strong quarter-to-quarter revenue growth, a $115 billion market cap, and admission to the Fortune 50 club. Acquisitions this year include tuck-ins of DotWalk, Intellibot, Lightstep, Gekkobrain, Mapwize, and Swarm64. Significant partnerships with 3CLogic, Celonis, and Microsoft highlight a more alliance-friendly approach. System integrators are clamoring to poach and hire ServiceNow talent. [R Wang]

Accenture and CEO Julie Sweet pulled out all the stops to lead the market with significant revenue growth and strategic partnerships. The Q1 2022 numbers showed a $3.21 billion, or 27%, year-over-year increase over Q1 2021, reflecting growth in both outsourcing and consulting revenues. The firm's 360° value approach paid off with growth of 20% or more in all regions and service lines. Key cloud partnerships and strong hires led to a 58% increase in the stock price for 2021. As clients continue to deploy digital transformation projects, Accenture has stayed ahead by hiring scarce skills and preparing for new business models in the Great Refactoring. [R Wang]

Wipro is in the midst of a major turnaround. CEO Thierry Delaporte has made significant hires to the management team, including a new CRO, a new CEO of European operations, and a head of business development. The company has not been shy about entering new markets through acquisitions; 2021 was a busy year, with deals including Capco, Ampion, Edgile, and LeanSwift. The $1 billion investment in cloud transformation through the FullStride offering has been well received. Most importantly, in 2021 Constellation witnessed Wipro undergoing a massive cultural shift and making the right moves for the future. [R Wang]

By acquiring Cerner, Oracle will breathe new life into the company. Customers hope Oracle puts Epic on alert over its abuse of market position and pricing, and injects much-needed competition into this market. [R Wang]


The Ford Motor Company and Google announced an extensive six-year business and technology partnership. Ford's VP of Strategy, David McClelland, clarified that Ford chose Google for its leadership in AI and ML, the robustness of the Android operating system, the strength of Google Assistant for voice technology, and its mapping and navigation technology. Thomas Kurian, CEO of Google Cloud, noted that Google and Ford will build a co-creation digital transformation team focused on manufacturing, purchasing, and factory floor modernization. Other potential opportunities include new retail experiences, new ownership offers based on connected vehicle data, and other product development modernization efforts involving AI and data. Ford was clear that Google was the preferred cloud vendor and that other partnerships, with Amazon for Alexa and Apple for CarPlay, would remain as choices for customers. The partnership benefits Ford by allowing its employees to harness the power of data in smarter and more innovative ways, and customers will get better and smarter in-vehicle experiences. Given what Google and Ford have publicly discussed, this is the beginning of many such Data Driven Digital Network partnerships by Google in each strategic industry. One can expect to see more of these as industries collapse around value chains and as tech partnerships around data and AI improve the competitive landscape. While every cloud vendor will be a strategic partner, the real question is which industry leaders will build, partner, or be punished in a world of digital giants. [R Wang]

After the unfortunate and early passing of Mark Hurd, Safra Catz was given the sole CEO role and has delivered. Among the few IT vendors that are trusted partners of CIOs and CTOs, Oracle is the strongest and most relevant provider of technology. Ellison and Catz form one of the very few 20-plus-year partnerships at the highest executive level, and they understand each other implicitly. The division of labor is clear: Ellison does technology and Catz does 'the rest'. But for the technology to succeed, the rest needs to work as well: sales and marketing must perform, consulting services and partners must deliver, and support must keep customers in good shape. All of this is growing Oracle's cloud business, which recently climbed to the #3 spot according to a prominent analyst firm. The Cerner acquisition is Catz's boldest move; if cleared and successful, it will be the biggest move in Oracle's history. Catz holds the reins during the most transformative time in IT, having successfully evolved Oracle from 'still relevant' to 'relevant' and into growth mode.

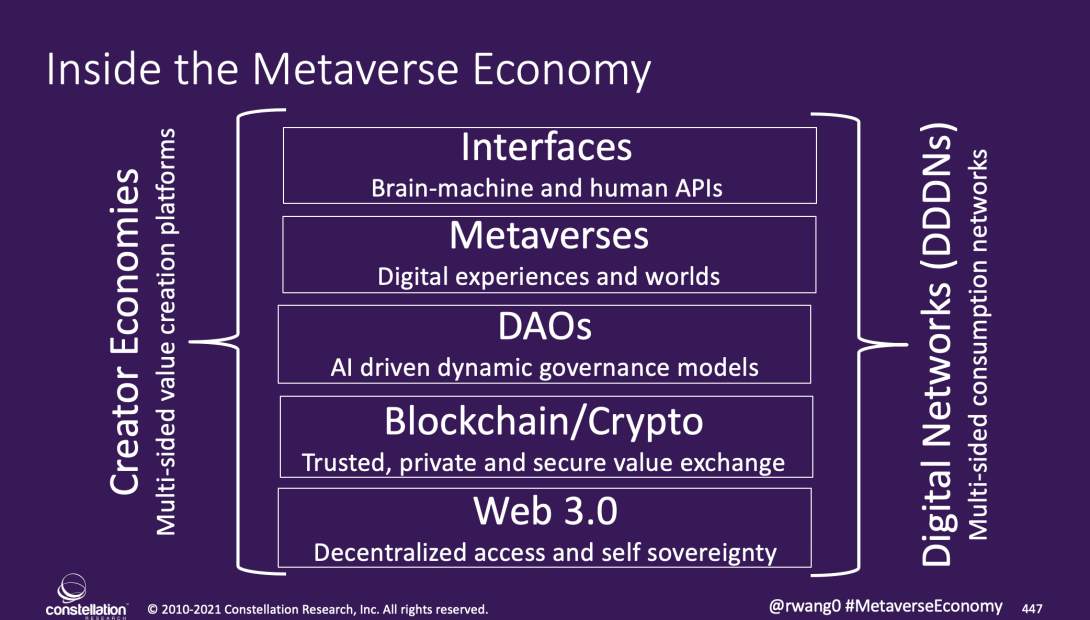

Much hype surrounds the Metaverse. However, very few organizations have fully grasped the impact the metaverse will have on experiences and engagement inside the enterprise. More than just gaming worlds or hardware devices, the metaverse economy gives enterprises new opportunities to bring their physical presence and 3-D digital presence together in one unified offering for their stakeholders: customers, employees, partners, and suppliers.

Constellation predicts that advances in the metaverse economy will provide a critical element of the “Great Refactoring” ahead and will create a $15 trillion market by 2030. [R Wang]

As the story goes, Freshworks started after CEO Girish Mathrubootham struggled through a nightmarish service experience with a broken television. Founded in Chennai, India, and now based in San Mateo, California, Freshworks went public with a valuation of $12 billion, making it the first Indian SaaS company to be listed on the NASDAQ. Freshworks focuses on the experience side of software, delivering customer and employee experiences with an emphasis on sales and support, IT service management, and cloud-based call center software. Unlike some competitors that have sought to glamorize the allure of experiences, Freshworks holds a core belief that delivering experiences should be as simple as actually experiencing them. This has led to simplified pricing models and streamlined product offerings. What makes the Freshworks IPO especially interesting is the emergence of a new, more service-born and customer-focused CRM and engagement player to take on platforms like Salesforce. [Liz Miller / R Wang]

The rapidly growing category of digital adoption platforms (DAPs), tools that help companies and workers get more from the IT systems and applications they use, had a major proof point in WalkMe's IPO last June. WalkMe, a leading Israel-based SaaS company, has seen its stock value hold overall even in volatile markets, making the company, arguably the category leader, a notable winner. WalkMe's code-free platform uses specially tuned algorithms to give an organization's Chief Information Officer (CIO) and business leaders visibility, so they can then use the platform to optimize business performance by improving user experience, productivity, and efficiency for employees and customers. While DAPs still often fly under the radar in parts of the IT industry, WalkMe's debut has solidified the category in the financial markets and shown that it has legs in helping organizations strategically achieve higher returns from their IT and talent investments.

Sprinklr is one of the top tech companies in the New York City metropolitan area, and its IPO will fund future tuck-in acquisitions and help complete its vision to compete head-on with Salesforce.com. [R Wang]

Qualtrics has brought a lot of value to shareholders and investors, and in its current iteration it has added some top talent, including a new COO and Chief Revenue Operations leader, Abhi Ingle from AT&T. [R Wang]

[R Wang]


UKG faced the challenge of merging two strong brands, Kronos and Ultimate Software. Coming up with the new acronym UKG, for Ultimate Kronos Group, and combining it with the new smiley logo was one of the most daring branding moves in tech. But it paid off: not only did it rally customers and employees to the joint company, but through smart sponsorship of events and athletes, the brand has seen more exposure with HCM decision makers and users than much larger competitors in the space. [Holger Mueller]

Unit4 hosted a US-based customer advisory event in Boston, Massachusetts. The event brought together HCM, finance, IT, and operations professionals. On the first day, CEO Mike Ettling kicked off the event with an inspiring keynote, featuring Mark Gallagher and Steve Cadigan, looking at the post-COVID future. Customers got a sneak peek at the new People Experience Suite and ERPx, and Unit4 also shared acquisition news and its Industry Mesh announcement, along with customer awards.


Five Trends Shaping the Cloud Security Conversation

This blog is based on a new Constellation Research report, Preparing for the New Age of Cloud Security (Dec 7, 2021), by Liz Miller and me.

1. Zero Trust

One of the most popular slogans today is also one of the most confusing: Zero Trust. Of course, we want to trust business partners and service providers to provide predictable outcomes. But “zero trust” is a technicality, a policy perspective that dispels the traditional class system, which grants selected individuals or systems more privileged access than others. That class system is the road to security hell, for it is increasingly possible to fake or falsely assume a trusted position, and thence wreak limitless damage.

A zero-trust access control policy means that all agents within a system are dealt with equally, regardless of identity or history. Essentially it equates to the “need to know” principle: all actors must have a demonstrable need for a given level of access.
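To make the “need to know” reading concrete, here is a minimal Python sketch of a default-deny access check. It assumes a hypothetical in-memory policy table of explicit, justified, time-bound grants; the names `Grant`, `POLICY` and `is_allowed` are illustrative only and are not any product's API. Every request is evaluated the same way, and nothing about the requester's rank or history grants implicit access.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """One explicit, justified entitlement (hypothetical schema)."""
    agent: str
    resource: str
    action: str
    justification: str   # the demonstrable "need to know"
    expires: datetime    # grants are time-bound, never permanent


# Hypothetical policy store: the only source of "allow" decisions.
POLICY = [
    Grant("payroll-service", "salary-db", "read",
          "monthly payroll run",
          datetime(2100, 1, 1, tzinfo=timezone.utc)),
]


def is_allowed(agent: str, resource: str, action: str,
               now: Optional[datetime] = None) -> bool:
    """Default deny: allow only if an unexpired, justified grant matches exactly.

    Nothing else is consulted: job title, network location and past
    behaviour earn no implicit access, so every agent is treated equally.
    """
    now = now or datetime.now(timezone.utc)
    return any(
        g.agent == agent and g.resource == resource and g.action == action
        and bool(g.justification) and g.expires > now
        for g in POLICY
    )


print(is_allowed("payroll-service", "salary-db", "read"))   # True
print(is_allowed("payroll-service", "salary-db", "write"))  # False: no grant for writes
print(is_allowed("ceo-laptop", "salary-db", "read"))        # False: seniority is irrelevant
```

The design choice worth noting is that the function has no “trusted” fast path at all: the absence of a matching grant is a denial, which is the opposite of the class system described above.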

Sadly, “zero trust” has become a catchphrase, much abused in security marketing. All it really means is: do not take people at their word. Equally, we should not accept “zero trust” as a technical description on its word alone. It must be backed by tangible security controls.

2. Exponentially Increasing Client-Device Capabilities

Moore’s Law leads to periodic paradigm shifts in computing focus, from the server to the client and back again. In the 1980s and 90s, mainframe computing gave way to minicomputers, workstations, and microcomputers. Then the sheer volume of data processing required computing to swing back to huge back-end systems and shared resources, which became known as the cloud aka “someone else’s computers”.

The pendulum has swung yet again, driven by cryptography, with mounting preference (nay, mandates) for secure microcontroller units (MCUs) on the client side. Local encryption key generation and storage, and integrated transaction signing, are now standard in mobile devices, and IoT device capability is heading the same way. Managing fleets of connected automobiles, smart electricity meters, and medical devices, to cite some popular examples, takes a dynamic mix of processing and storage at the edge and in the cloud.

High-quality client-side cryptography is the key (pardon the pun) to genuinely strong authentication; that is, using personally controlled tangible devices that are individually accountable and resistant to phishing.

The FIDO Alliance has brought about the biggest improvements ever seen in end user authentication, by consumerising cryptography. Most users are blithely unaware that when they unlock a virtual credit card on their mobile phone via facial recognition, they are invoking hardware security and public key cryptography which until recently was confined to HSMs costing $25,000 each.
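The pattern behind FIDO-style authentication is a challenge-response signature: the server sends a fresh random challenge, the device signs it with a private key that never leaves its secure hardware, and the server verifies the signature with the public key registered earlier. The toy Python sketch below uses textbook RSA with tiny primes (61 and 53) purely to show that message flow. It is insecure, it is not the FIDO/WebAuthn protocol (which typically uses ECDSA with real key sizes, plus protections such as origin binding), and `ToyAuthenticator` and `verify` are hypothetical names.

```python
import hashlib
import secrets


class ToyAuthenticator:
    """Stands in for a phone's secure element. INSECURE toy parameters."""

    def __init__(self) -> None:
        p, q = 61, 53                    # textbook primes, far too small for real use
        self.n = p * q                   # modulus: 3233
        self.e = 17                      # public exponent
        phi = (p - 1) * (q - 1)          # 3120
        self._d = pow(self.e, -1, phi)   # private exponent, never exported

    def public_key(self) -> tuple[int, int]:
        return self.n, self.e

    def sign(self, challenge: bytes) -> int:
        # Hash the challenge and reduce it into the toy modulus range.
        m = int.from_bytes(hashlib.sha256(challenge).digest(), "big") % self.n
        return pow(m, self._d, self.n)


def verify(public_key: tuple[int, int], challenge: bytes, signature: int) -> bool:
    n, e = public_key
    m = int.from_bytes(hashlib.sha256(challenge).digest(), "big") % n
    return pow(signature, e, n) == m


# Registration: the server stores only the public key.
device = ToyAuthenticator()
registered_key = device.public_key()

# Login: a fresh challenge is signed on-device and verified server-side.
challenge = secrets.token_bytes(32)
signature = device.sign(challenge)
print(verify(registered_key, challenge, signature))   # True
```

Because each login signs a new random challenge, a captured signature is useless for the next session, and there is no shared secret for a phishing page to harvest.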

3. Quantum-Safe Cryptography

Quantum computing promises radical new architectures surpassing the classical limits of arithmetic and sequential data processing. In cybersecurity, there is a dark side to this power: many encryption algorithms, especially those used for digital signatures and authentication, are based on “one-way functions” that are easy to compute in one direction but practically impossible to reverse. There has always been an arms race between cryptologists and attackers, who try to uncover secret keys through brute-force attacks using powerful conventional computers (like graphics cards and custom gate arrays). The main weapon against these brute-force attacks is for vendors and sysadmins to regularly increase the lengths of the keys used to protect data.
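The arithmetic behind “increase the key length” is simple: each added bit doubles the search space a brute-force attacker must cover. The Python sketch below assumes a hypothetical attacker testing 10^12 keys per second (an illustrative figure, not a measured one) and estimates the worst-case time to exhaust a keyspace. It covers classical brute force only and says nothing about the quantum threat discussed next.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600
GUESSES_PER_SECOND = 1e12   # hypothetical attacker throughput (assumption)


def years_to_exhaust(key_bits: int, rate: float = GUESSES_PER_SECOND) -> float:
    """Worst-case years needed to try every key of the given length."""
    return 2 ** key_bits / rate / SECONDS_PER_YEAR


for bits in (56, 80, 128):
    print(f"{bits:>3}-bit key: {years_to_exhaust(bits):.3g} years")

# Each extra bit doubles the work, so 16 extra bits multiply it by 2**16.
print(years_to_exhaust(72) / years_to_exhaust(56))   # 65536.0
```

Under these assumptions a 56-bit key falls in under an hour, while a 128-bit key takes on the order of 10^19 years, which is why lengthening keys has worked so well against conventional hardware.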

Quantum computers will one day punch through the computational barriers that underpin most of today’s digital signature algorithms. For now, the threat tends to be exaggerated; some of the world’s best cryptologists advise that the arms race will continue, with longer keys still offering protection for many years to come. Meanwhile, the National Institute of Standards and Technology (NIST) is conducting a methodical search for the next generation of quantum-safe cryptographic techniques, including sponsoring competitions, and practical quantum engineering progresses in leaps and bounds.

This is an especially difficult area to predict with confidence. The maintenance of enterprise cryptographic services must be left to experts. The better cloud providers, offering managed services, original research and insights, will be watching and preparing for the next generation of encryption.

4. Cloud Hardware Security Modules

For decades, hardware security modules (HSMs) have been specialised core components of critical security operations such as payment gateways, ATMs, and high-volume website encryption. Cloud HSMs are critical new building blocks for the future of virtualized cryptographic processing, offering:

- compact, certifiable, tamper-resistant cryptographic primitives running in firmware

- secure execution environments for running partitioned custom code

- secure elements (dedicated microchips) holding private keys which rarely if ever are released outside the safe hardware

- physically robust enclosures with tamper detection to scrub code and keys in the event of a mechanical or electronic intrusion

- certification to high levels of assurance such as Common Criteria Evaluation Assurance Level 6 (EAL6) or FIPS 140-2 Level 4, and

- options to rent either shared or dedicated modules.

5. Data Protection as a Service

Many security challenges have more to do with regulatory trends and competitive pressure than fraud or overt criminality. Increasingly stringent data protection rules are driving demand for encryption and confidentiality.

Managed cryptography is needed in response to the long-standing challenge of keeping encryption systems up to date. I see encryption, tokenization and anonymization (with qualifications) being delivered to customers as services via APIs, with the algorithmic complexity abstracted away. Ideally, higher order data protection services will come to be expressed in an algorithm-neutral manner.
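A minimal sketch of what an algorithm-neutral interface could look like from the caller's side, assuming a hypothetical in-memory token vault: the application asks for data to be protected under a named policy and never chooses or sees the algorithm. `ProtectionService` and the policy name `"pii-token"` are illustrative inventions; a real service would sit behind an authenticated API with durable, access-controlled storage.

```python
import secrets


class ProtectionService:
    """Hypothetical data-protection-as-a-service facade (in-memory toy)."""

    def __init__(self) -> None:
        self._vault: dict[str, str] = {}   # token -> original value

    def protect(self, value: str, policy: str) -> str:
        # Callers name a policy, not an algorithm; the provider could swap the
        # underlying technique later without any client code changing.
        if policy != "pii-token":
            raise ValueError(f"unknown policy: {policy}")
        token = "tok_" + secrets.token_hex(8)   # random, so it reveals nothing about the value
        self._vault[token] = value
        return token

    def reveal(self, token: str) -> str:
        return self._vault[token]


svc = ProtectionService()
t = svc.protect("4111 1111 1111 1111", policy="pii-token")   # a well-known test card number
print(t.startswith("tok_"), svc.reveal(t) == "4111 1111 1111 1111")   # True True
```

The point of the design is the narrow surface: `protect` and `reveal` are the only things a client learns, so keeping the algorithms current becomes the provider's job rather than every application team's.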

True anonymisation is a promise that is difficult to keep, but a range of technologies are available for providing qualified degrees of data-hiding -- including homomorphic encryption, fully homomorphic encryption (FHE), and Differential Privacy. These are highly technical methods, needing careful fine tuning and awareness of the compromises they entail.
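To illustrate the fine tuning that Differential Privacy needs, here is a Python sketch of the standard Laplace mechanism for a counting query: noise with scale sensitivity/epsilon is added to the true answer, and the parameter epsilon trades accuracy against privacy. The dataset and epsilon values are hypothetical, and the standard library's `random` module is used only for illustration; production systems should rely on vetted DP libraries, since naive floating-point noise generation can leak information.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two i.i.d. exponential variables is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Noisy count of True records. A count has sensitivity 1 (adding or
    removing one person changes it by at most 1), so the noise scale is 1/epsilon."""
    true_count = sum(records)
    return true_count + laplace_noise(1 / epsilon, rng)


rng = random.Random(42)                          # seeded only so the demo is repeatable
has_condition = [True] * 120 + [False] * 880     # hypothetical dataset of 1,000 people

for eps in (0.1, 1.0, 10.0):
    answers = [dp_count(has_condition, eps, rng) for _ in range(1000)]
    avg_error = sum(abs(a - 120) for a in answers) / len(answers)
    print(f"epsilon={eps:<4}  mean absolute error ~ {avg_error:.2f}")
```

The expected absolute error equals the noise scale, 1/epsilon, so a tenfold stronger privacy guarantee costs roughly tenfold more error: exactly the kind of compromise that has to be chosen deliberately.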

Traditionally encrypted data loses all structure and cannot be processed for routine tasks such as sorting, reporting and statistical analysis. Homomorphic encryption is a class of algorithms that preserve some structure and enable some processing. Fully homomorphic encryption promises to enable all regular processing to be performed on encrypted data. This is new technology, not yet fully proven or accepted by the academic and regulatory communities. With FHE and similar techniques still in flux, I see these being offered as a cloud service in innovative new ways.
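The idea of encryption that preserves some structure can be shown with a toy version of the Paillier cryptosystem, an established partially homomorphic scheme in which multiplying two ciphertexts yields an encryption of the sum of the plaintexts. This Python sketch uses deliberately tiny, insecure primes and supports only addition-style operations, so it is not FHE; real deployments use vetted libraries and keys thousands of bits long.

```python
import math
import secrets

# Toy key generation with tiny primes (INSECURE, illustration only).
p, q = 1009, 1013
n = p * q
n_sq = n * n
g = n + 1                      # standard simple choice of generator
lam = math.lcm(p - 1, q - 1)   # Carmichael function of n
mu = pow(lam, -1, n)           # valid shortcut because g = n + 1


def encrypt(m: int) -> int:
    while True:
        r = secrets.randbelow(n - 1) + 1      # random r in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(c: int) -> int:
    x = pow(c, lam, n_sq)
    return ((x - 1) // n) * mu % n


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the hidden plaintexts (mod n)."""
    return (c1 * c2) % n_sq


a, b = encrypt(1200), encrypt(345)   # e.g. two salaries, held only in encrypted form
total = add_encrypted(a, b)          # computed without ever decrypting a or b
print(decrypt(total))                # 1545
```

A cloud service could compute `total` for a customer without holding the private values `lam` and `mu`; only the key holder can read the result.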

“Infostructure”

In conclusion, with data itself becoming a critical new asset class, we need a broader, more integrated definition of “data protection” to transcend today’s siloed approaches to privacy and cybersecurity. The technical complexity, regulatory risk, and intensity of ongoing R&D will all drive the virtualization of cloud-based data protection, and a new breed of infostructure services.


ConstellationTV Episode 24

On CRTV Episode 24, Constellation analysts Liz Miller and Holger Mueller close out the year with news updates & interviews with Steve Forcum of Avaya and Sridhar Vembu of Zoho.

Monday's Musings: Prepping for Digital Transformation In ERP

Market Forces And Modernization Drive Digital Transformation Initiatives In ERP

Enterprises must navigate the constant demand for change and the growing pressures for transformation. Existing ERP systems designed for the last century meet post-pandemic requirements built on agility and decentralization. A perfect storm emerges for ERP modernization. Consequently, digital transformation and modernization of existing ERPs provide leaders with opportunities to:

- Unlock opportunities for growth and differentiation. The pandemic has shown how digital channels must connect with digital business models and new monetization methods. Digital transformation also enables differentiated service offerings and improved value exchange with stakeholders.

- Optimize operations. Connected services and supply chains can be optimized with analytics, automation, and AI. Every business process will be evaluated to determine whether to fully automate, augment the machine with a human, augment the human with a machine, or introduce a human touch. The result of process optimization is fewer errors and greater efficiency.

- Mitigate risk. New approaches to digital transformation allow the creation of business graphs. Decisions made over time evolve into a knowledge graph that helps identify, mitigate, and prevent risks to the organization.

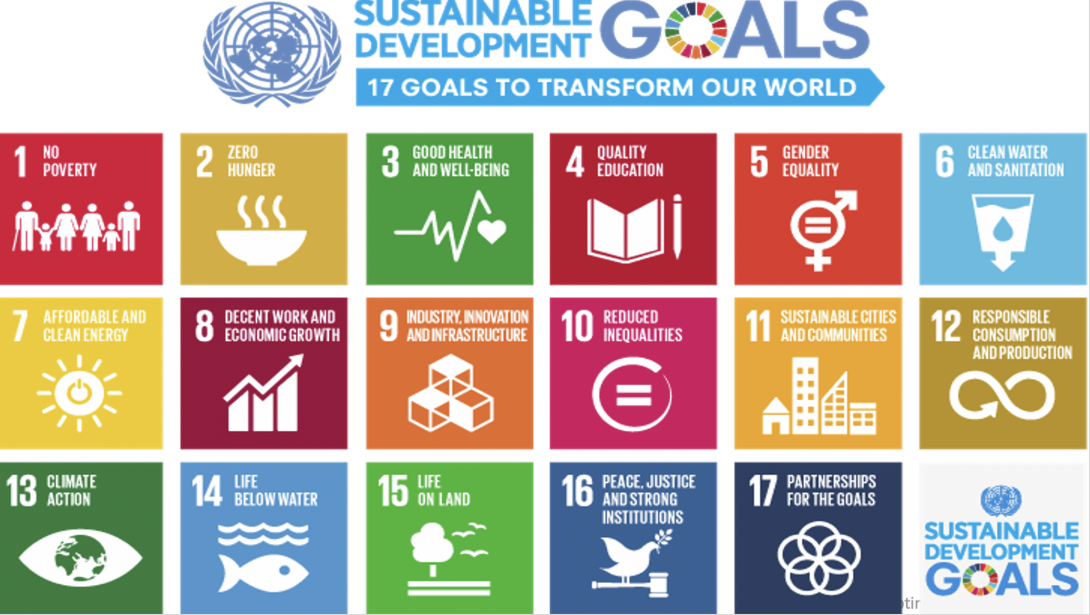

- Drive sustainability. New approaches will improve the measurement and management of sustainability reporting. Capturing this information over time will provide the foundation for end-to-end ESG monitoring.

Design With The End In Mind

Success will require a technology strategy that anticipates needs in the future and understands why every design must be built for continuous change and adaptation. ERP systems must support a future of analytics, automation, and AI. If anything, the Great Refactoring impacts the future of ERP. Design criteria must include attributes such as resiliency, continuous integration footprints, and cloud based analytics in real-time. Leaders should consider:

- Work backwards. Define the future state and how the organization will achieve these goals. Digital transformation requires form following function. Hence, requirements must be defined. Outcomes must be specified. Once the design has been established, modernization plans can begin.

- Take a technology platform approach. ERP modernization must be driven by a multi-sided platform approach. Future network models support a wide range of interactions among stakeholders such as customers, partners, suppliers, and employees. These platforms must be automated and support greater resiliency.

- Address new security realities. How data is acquired, stored, updated, managed, and removed must be considered from both a privacy and a security perspective. Data encryption and security models must be designed with the highest level of safety.

The Bottom Line: Get Ready For The Great Refactoring

Massive changes in purchasing behavior, supply chain disruptions, inflation, a regulatory onslaught, and shifting work-life priorities all create the backdrop for a Great Refactoring ahead. These pressures will test existing legacy systems and create business and technology opportunities for upgrades. The move to the right ERP platform will mitigate future risk and create growth opportunities for leaders who proactively design, build, manage, and operate a path forward for Digital Transformation and ERP modernization.

The Cloud Reaches an Inflection Point for the CIO in 2022

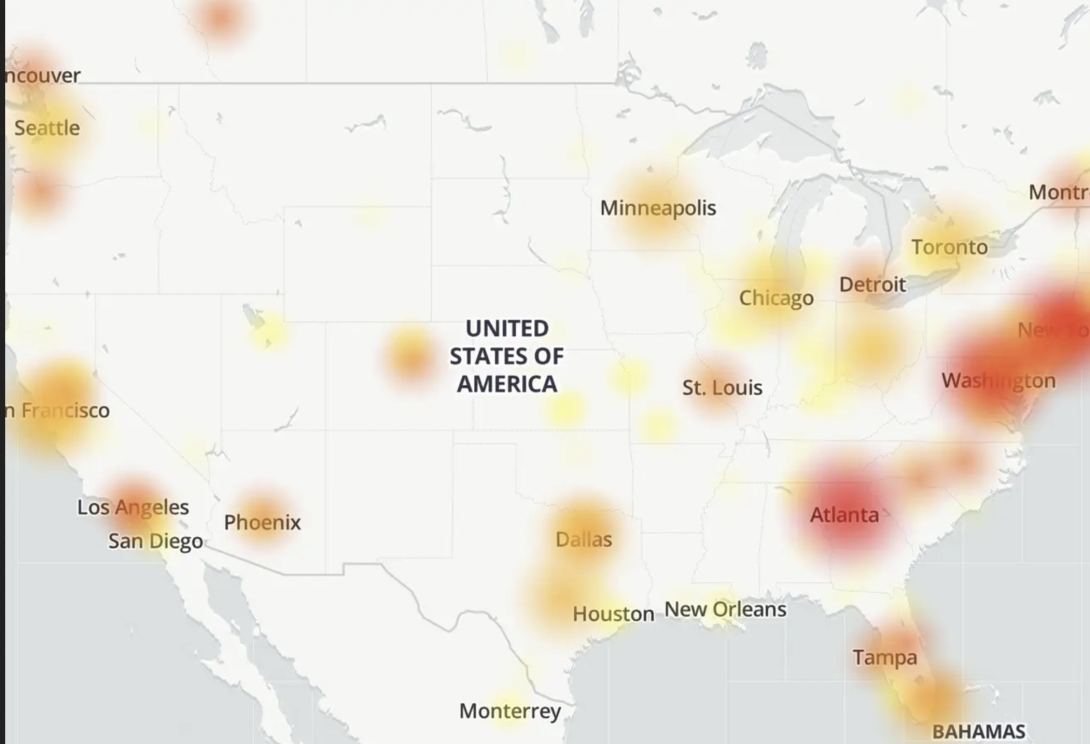

Ultimately, this month's substantial Amazon outage will be seen as a watershed event in the evolution of the cloud. The outage was just the right kind (due to its length, breadth, and visibility) to ensure that it will have substantial, long-term ramifications for how businesses rely on a single cloud provider going forward. Yet it was also just one of a series of major realizations that many Chief Information Officers (CIOs) are having right now about the cloud and its overall role in the future of IT.

Not that the cloud isn't the end game when it comes to most IT capabilities. Far from it. The cloud in all its various forms will certainly continue to be the leading model for IT service delivery. However, the concept of what the cloud actually consists of doesn't stand still either. The Infrastructure-as-a-Service (IaaS) and Software-as-a-Service (SaaS) industries have both grown, matured, and become so central that some of their latent strategic challenges are at last becoming evident. Estimates vary, but most of IT, somewhere between 90% and 95%, will move to the cloud by 2030. However, this cloud won't be the public clouds we've grown up with so far. The cloud of the next decade will be more structured and controlled, yet far more nuanced, having most likely passed through the crucible of the underlying issues that have begun to accumulate and gain our attention.

Overcoming Today's Cloud Challenges to Unlock The Future of IT

For now, though, a confluence of critical factors is making the CIO tread with considerably more care when it comes to adopting cloud. Most of these factors aren't a major surprise by themselves. However, it's the growing urgency of addressing them collectively that is gaining the attention of top-level IT leaders. Here are the top issues with cloud from my conversations with IT executives over the last two years:

- The diaspora of enterprise data out to the cloud. SaaS is especially problematic in this regard, storing data in commercial and private clouds, usually outside the control of IT and often in entirely uncertain locations. Far from gaining influence over enterprise data as hoped, the CIO is finding that the cloud greatly complicates master data strategies and other vital strategic efforts. These include creating a single view of the customer, developing seamless customer experiences across IT silos, and supporting analytics and artificial intelligence (AI) efforts, all of which are fueled by ready, open access to data, particularly across IT applications. APIs and microservices certainly help, but they are still not consistently offered or readily amenable to many use cases. The reality is that for most IT departments, cloud is still proliferating faster than they can fully reach the data stored in it. CIOs are increasingly looking at platform-based solutions to this problem, most notably customer data platforms in the short term.

- Commercial clouds have evolved and proliferated beyond ready manageability. If one thing was apparent from Amazon's re:Invent confab this year, it is that clouds are becoming highly sophisticated and complex in their overall offerings. In attempting to provide every major type of capability customers might want, commercial clouds now offer 5-10 different types of database services, myriad analytics tools, and many flavors of machine learning (ML) capabilities. Amazon alone, for example, offers over 450 instance types for its popular EC2 compute service. This complexity, which I've cited as the prime challenge for IT today, is exacerbated by cloud services that continue to add new capabilities without refactoring or reconciling them in some way. It's not even clear that commercial cloud services can be simplified, given the extent to which customers depend on legacy cloud APIs and services. So we are now witnessing the first major accumulations of technical debt at the hyperscaler level. This will begin to favor new cloud entrants with cleaner, fresher product architectures.

- Big bets on a primary commercial cloud are creating lock-in, preventing differentiation, and creating fragility. Many organizations are still taking the primary vendor route, selecting one major cloud provider for much or most of their needs. The hope is that this will make staff education, customer support, vendor accountability, and costs easier to manage. But in reality, what I'm hearing from CIOs is that this isn't helping them as much as they hoped. The strategy also brings equally challenging downsides, including overcommitting to one provider that isn't able to respond in kind. Another key factor is that many higher-order cloud services have different strengths and weaknesses, so a single cloud is not usually going to be the answer in the areas of the business with competitive impact. Thus the favored cloud vendor approach does not provide a differentiated edge against business competitors. It also contributes to an unhealthy monoculture of cloud vendors. Multicloud and cross-cloud have emerged as approaches to cope with the challenges of overreliance on single cloud vendors and, indeed, with cloud proliferation of all kinds.

- Cloud is the vector for most cybersecurity attacks. Because it connects companies to the rest of the world, the public cloud is the source of most concerted cyberattacks, and so IT leaders are now starting to question their relatively open connection to the Internet in some cases. Companies are beginning to address this more systematically with strategies like zero trust, but the fact remains that the fast-growing costs of dealing with cybersecurity and ransomware are largely due to the cloud. A reckoning is coming, and most organizations will be more carefully, deliberately, and purposefully connected to the cloud in the near future. This will limit some of the cloud's value creation potential and slow the pace of adoption and transformation, but it will make the cloud safer to use.

- With edge, cloud is sprawling everywhere. One of the hottest trends in cloud is the current focus on edge networks, edge computing, and associated value-add services. With edge, the cloud can be put wherever it needs to be, avoiding the disadvantages of over-centralization whenever it makes sense. But edge will also be the most varied and heterogeneous part of the cloud. This is anathema to the seamless, homogeneous fabric that is often touted as one of the cloud's signature advantages. The edge is bringing back proprietary hardware, local devices, new stacks, and very different techniques, most notably customers deploying their own hardware. While cloud providers are trying to preserve the pay-as-you-go nature of cloud in their edge offerings, the model will inevitably blur and look more like on-premises again. Put simply, the edge significantly complicates how CIOs manage their cloud footprint, even as it offers more choice. It is also creating demand for ever more specialized, and therefore rarefied, IT skills to architect, design, and operate cloud solutions with edge-based elements, further straining already very limited cloud talent.

- Subscription costs are painful as they grow as an overall share of IT. One of the complaints about the cloud I hear most often from CIOs is that subscription costs are so much higher than traditional maintenance fees. "It feels like I'm paying for everything all over again every year" is the common refrain, even though this view neglects the capital expenditures that are shed in the process. This year's cloud spend consumed an average of 10% of enterprise IT budgets, and will grow to 15% or more by the middle of the decade. However, with some estimates putting current cloud prices at 10-12 times what it costs for a cloud provider to deliver the service if IT moved it on-premises, there is growing pressure from all quarters, from the CFO to the end customer, to reduce ever-expanding cloud costs, often with hybrid cloud or tier-2 cloud service providers (CSPs). There are many indications that discount cloud providers are gaining in popularity for non-critical use cases.

- Geopolitical issues with cloud providers loom. With China actively blocking many overseas cloud endpoints and Europe experimenting, with mixed success, with its own region-centric offering in Gaia-X, many governments and a number of overseas CIOs I talk with are nervous about having all their eggs in one specific commercial basket. The provisions of the CLOUD Act in the United States are also quite problematic for many global cloud customers, making regional tier-2 cloud service providers an attractive prospect in my experience. Although many organizations would prefer access to the richer tools and services of the leading hyperscalers (which are also making their runtimes and SDKs more cloud-neutral all the time anyway), in practice they rarely adopt a significant percentage of them. But the fundamental issue is that cloud has become a key source of supply chain risk today. This means true, real-time multicloud management is now necessary, as is ensuring compliance with various regional regulations and governing rules. The key takeaway: all it takes to change the cloud landscape quite quickly is the stroke of a government pen, a shift in the geopolitical winds, or a sudden industry push for more regulation-friendly clouds, regionally sourced cloud services, or cloud providers that are more compliant with important corporate social responsibility measures, like sustainability. CIOs must prepare themselves for rapid switching to new cloud providers.

Taken together, cloud is becoming a top-level challenge: hard to manage, hard to evolve, and hard to adapt to incoming turbulence in the operating environment. This is true even as commercial clouds (public, private, hybrid, and edge) continue to offer an ever-wider range of benefits that are virtually impossible to ignore, including agility, scale, elasticity, and reduced capital expenditure, along with the architectural and application development models of the future.

Preparing for the Next Strategic Revolutions in Cloud

Just as CIOs are getting their IT portfolios adjusted for the coming post-pandemic era, a wave of cloud innovations is about to arrive, beyond today's leading topics of multicloud, edge, and cloud-native. No one knows with certainty what they will be, but given the developments above, the following are likely:

- Industry clouds. So far, industry-specific cloud formulations have had less impact than hoped, mostly because the right offerings have been slow to arrive. Yet industry-focused clouds now stand to be one of the big growth stories of the decade, bringing vertical-ready solutions that accelerate digital transformation. While clouds for specific industries used to be more talk than realization, major advances are now being made, with a solid example being the Microsoft and SAP partnership to deliver SAP supply chain solutions through Microsoft Cloud for Manufacturing. Industry clouds are bringing ready-to-run, pay-as-you-go solutions much closer to the average business in an impactful way, and will become a much larger part of the cloud market in the coming decade.

- Cloud coalitions. Similar to industry clouds, many cloud companies are increasingly partnering to create offerings they could not offer alone. IBM spinoff Kyndryl, for example, is currently pursuing a form of this strategy with Microsoft and VMware. Non-competing tier-2 cloud providers are also building coalitions to take on hyperscalers in emerging niches, including streaming, hyperlocal compute/storage, gaming, and crypto/DeFi.

- Cloud/edge brokers. The sheer number of regional cloud and edge service providers is overwhelming, and this fragmented market is inherently hard for the hyperscaler cloud firms to serve well. Demand for more distributed cloud services and providers is leading to the emergence of new solutions such as Edgevana, which can provide centralized, convenient, and efficient access to highly decentralized providers of data centers and edge presence.

- Sustainable clouds. Corporate social responsibility policies, and governance in general, are going to further partition cloud offerings into those that can meet the requirements and those that can't. While these will not always be entirely new vendors or offerings, cloud providers will increasingly be asked to provide classes of cloud service that fully comply with Environmental, Social, and Governance (ESG) mandates in distinct ways, which will open competition to new entrants.

- Geo-clouds. By the end of the decade, future geopolitical events may require commercial clouds to be more sharply defined by the physical boundaries within which they are allowed to provide service. Global instability and regional events of major significance between nation states may meaningfully disconnect many organizations from their historical cloud providers of choice. In addition, new laws, regulations, and/or other practical factors may require organizations to procure cloud services within specific national or regional boundaries, or from pre-certified providers. CIOs must be prepared for this eventuality, and investment in robust multicloud management capabilities in the coming years will go a long way toward mitigating this risk.

- Metaverses. The rise of cloud-centric virtual worlds with their own currencies, economies, digital assets, and communities is going to entail major commercial investment in unique market offerings that combine the necessary compute, digital experiences, data, blockchains, and payments into compelling virtual worlds. These will be distinct clouds in their own right, with identities, capabilities, and differentiation that will exist in the form of decentralized autonomous organizations (DAOs) and other structures, even if in practice they are often offshoots of existing cloud estates from the major vendors.

As usual in the tech industry, the changes ahead of us over the next decade will be greater than those we've experienced over the last several. In many cases we are still working on the fundamentals. Most organizations are simply having a difficult time matching their tech velocity to that of the cloud, which is still evolving more rapidly. The CIO must now develop effective cloud strategies with matching capabilities (talent and resources) that can map out a changing path forward while effectively managing and hedging the much more contractual as-a-service model. Just managing cloud contracts today can require as much support from the legal team as from IT staff. Developing an overarching, integrated cloud strategy is vital at a time with so many external providers (IaaS, PaaS, and SaaS), combined with all the other factors above. For many IT leaders, genuine cloud agnosticism will become a core strategy across cloud operations, management, and automation. Data must be relentlessly drawn back under the positive control of organizations, with the recognition that some low-criticality data may never be reachable.

Perhaps most of all, however, the CIO in 2022 will need to develop better plans around strategic concepts like price arbitrage, complexity management, lightweight innovation/pathfinding, integrated operations, disaster recovery, and vendor dependencies, in a way they've never quite had to before. In a healthy rebalancing, hybrid cloud will quite likely become more important than in the recent past for organizations that now realize they have more mission-critical elements running in the public cloud than they would like. So too will the long-neglected topic of workload versatility rise in importance. Containerization, and adoption of serverless where possible, will also become even more vital to cloud strategy next year, as the ability to move workloads among commercial clouds finally gets the attention it has long deserved.

Next year, many CIOs will manage their cloud estates in a more portfolio-like manner, driving unnecessary dependencies and vendor commitments out of the mix whenever possible. This will result in a more resilient and vibrant cloud ecosystem for most organizations. It will also increase competition among cloud vendors while increasing choice for customers. Or at least that is the direction cloud in the enterprise should be headed.

Next-Gen CX is Now. Re-Think Accordingly.

Customer experience is a funny thing. Organizations can dream up any number of amazing, captivating, creative and “delightful” moments in time. We can map and track how these moments are delivered through an increasingly expansive network of digital and traditional engagement channels. We can orchestrate how a series of moments can string together into robust journeys across which our customers can turn and weave at their own speed. We can dream and do a LOT of things. We just can’t “control” or “manage” a customer’s experience.

Try as we may—and we have all tried REALLY hard—no single person or function can OWN the customer experience. That title is held by each individual customer that chooses to engage. What exceptional CX-driven organizations have come to understand is that the devil is in the details of how we dream and how we deliver those experiences. And those details…those moments…need to be rethought and rearchitected from the ground up for a new type of recipient.

This is why I found recent conversations with Avaya about this idea of “Experience Thinking” and the subsequent action (and new ecosystem) of “Experience Building” to be so fascinating. Here are a couple of takeaways:

New economies have bred new customers… they all demand new thinking.

Everyone is now a resident of the digital universe. There are nuanced differences between the expectations of the digital native and those of the digital late bloomer. But everyone expects a healthy dose of value in each engagement. Each individual has assigned their own value and their own personal use cases to each of the channels they choose to use (bots for self-service, voice-powered search for knowledge on the go, click-to-talk to reach a real person for real answers, social to get advice), and they are no longer waiting for the brands they do business with to meet them where they already are.

Avaya calls these the “Everything Customer”: the customers that rely on mobile apps and subscription services and demand ready, immediate answers and access to value. To serve this customer, Avaya stresses that the organizational response needs to be far more encompassing than simple channel or even locational transformation. They couldn’t be more spot on.

Serving this new Everything Customer demands thinking that is agile and flexible, not held within the linear construct of operational norms or held back by technological barriers. It is as much about providing self-service information as it is about providing on-demand experts and expertise. It fundamentally asks organizations to rearchitect strategy and process around the Everything Customer BEFORE rearchitecting technology, data or channels.

Everything Customers will expect to work with Everything Employees. Experience—and the expectation of fully connected, self-service, smart and personalized experience—is not limited to customers. Just as digital transformation heightened expectations of the Everything Customer, their corporate peer group, the Everything Employee, similarly expects mobile, digital, cloud and self-service, smart systems that help them reach THEIR goals and experiences. Technology is no longer just present as part of their jobs…technology is there to help individuals and teams be more successful in their jobs, regardless of where they choose to sit while doing their job. The expectation is that tools, especially AI-empowered smart tools, will be ubiquitous and available to serve the needs of any employee.

This is why data from Avaya indicating that 32% of organizations report that employees are struggling to adapt is all the more troubling. It seems that businesses, despite their best intentions, continue to deliver tools that don’t actually satisfy, connect with or even assist this new, modern Everything (everywhere) Employee.

Hybrid work, the future of work, the mundane reality of going to work…whatever and wherever any of it may be…is meaningless if the tools being provided can’t (or won’t) be used. The “Great Resignation” is real, and it will have a massive impact on an organization’s ability to deliver and capitalize on customer experiences. There is, however, hope…if we can apply Experience Thinking and then get busy building the “Now-Generation”.

The time to build is now.

CX and EX both demand next generation experiences. One problem: Next generation is right now. To deliver exceptional experiences, employees must have the tools and the operational bandwidth to build new experiences. To scale the delivery of these new experiences, teams must be empowered by tools built for the speed of the cloud and the intelligence of AI. Take, as an example, the case of DHL Supply Chain, the world’s leading contract logistics provider.

Based in Singapore, DHL Supply Chain needed to accelerate business expansion projects to meet increased demand while also meeting customers’ rising expectations for service and support. This was not just an issue of rolling out more call center agents or deploying new tools; DHL Supply Chain needed to rethink how to retool and reimagine the experience of DHL Contact Center Services to address everything from retention to the tools needed to remain best-in-class in the industry.

They needed to innovate experiences to power rapid regional expansion, scaling contact center offerings to Japan, Korea, Australia and beyond. To ensure that CX and EX could scale simultaneously, DHL Supply Chain turned to cloud and service, more specifically tools like Avaya OneCloud to ensure that every agent could deliver service while every customer’s experience counted. As a result, scale, expansion and delivery of exceptional experiences could happen in a timeline both customers AND employees could appreciate…with a bottom line benefit to the business that DHL will appreciate.

Rethinking isn’t an isolated exercise.

In fact, to accomplish transformation quickly and successfully, it takes a village, which is exactly why Avaya has leaned so heavily into its recently announced “Experience Builders” program. This week at its annual conference, ENGAGE 2021, Avaya Experience Builders™ took center stage, showcasing the expansive partnership ecosystem aligning services, products and expertise to form a massive Experience village. Experience Builders does not just bring Avaya and its partners and integrators together (and if we are being honest here, a staggering number of technology providers already do that and label it a community). Avaya takes the ecosystem farther to include technology developers, customers and citizen developers, creating a global network designed to build better experiences for employees and customers. It is not just about building moments or even channels, but potentially about building new technologies, integrations and processes to actually transform and deliver experiences.

What I find most intriguing about this ecosystem is that customers, from a customer’s development team to its business leaders, are as much a part of this process of co-innovation and collaboration as developers at Avaya and its entire network of partners. This means that real problems can be tossed into the community and everything from small tweaks to big transformational shifts can be iterated and delivered to solve known and unknown challenges faced by (or because of) the Everything Customer and the Everything Employee.

Introduced in October at the Gitex global gathering of enterprise and government technology, the Experience Builders ecosystem has grown to include over 150,000 developers, 100,000 customers and, as Avaya CMO Simon Harrison points out, a network of tens of thousands of global partners all focused on making “Experience as a Service” a reality. This is about bringing the talent, the skills and the problem solving into one space so that experience has an innovation framework that can stand the test of time and scale.

“Experience Builders around the world can compose and wrap solutions around their own customers, tailored for every use case and every user,” Harrison noted prior to ENGAGE 2021. “The ambition here is to empower every citizen developer to build experiences. Every day Avaya Experience Builders are delivering, creating stronger brands, changing entire industries, and in many cases improving lives.”

Yes, we need to rethink. But we need to think and build with purpose.

We need to think and build differently because our customers and our employees expect it. If we fail to heed this call, both will walk with their wallets and their talents. The days of technology transformation for transformation’s sake have officially come to an end, with both customers and employees clearly indicating that they don’t plan to wait around for usable tools or experiences. Left ignored, customer experience will stop being a profitable differentiator and instead become a reason for defection and resignation. Next is now…and the future is limitless.

P.S.: I encourage you to check out Avaya’s thoughts on the shift toward effortless experiences and the introduction of its Experience Builders ecosystem. It’s a good read.

Amazon re:Invent – game-changer or just another show in town?

I was privileged to attend the Amazon AWS re:Invent conference for the first time this year as an Analyst, though I have been to re:Invent many times as a vendor/consultant. As always, Amazon put on a big show! Other than the change at the helm (Adam Selipsky replacing Andy Jassy as AWS CEO), there were also some cultural changes that I sensed throughout the conference.

Here are some of their top announcements:

- AI/ML-based Demand Forecasting – AWS is bringing the power of demand prediction to the masses. Amazon.com has used ML for many years as a competitive advantage in growing into a retail giant, applying it to demand forecasting, improved purchasing systems, automatic inventory/order tracking, distribution/supply chain management, operational efficiency, and customer experience. Now they are offering it to others.

- Hardware refresh – AWS refreshes its processors with AMD EPYC (M6a instances), Graviton ARM processors, and NVIDIA T4G Tensor Core GPUs. This is particularly appealing to VM users; Amazon claims a 35% performance improvement.

- Enterprise Robotics Toolbox – AWS IoT RoboRunner and the associated robotics accelerator program allow building robot and IoT eco-systems on the cloud.

- 5G – AWS allows spinning up private 5G mobile networks in days. This is their attempt to get into mobile and networking in a massive way.

- AWS Mainframe Modernization service – Mainframe migration has been slower than AWS hoped. This is a big push to help move those mainframe workloads to AWS.
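
AWS did not detail how its forecasting service works internally, so the following is only an illustration of the kind of task it automates: a minimal Python sketch of simple exponential smoothing, a classic baseline forecasting technique swapped in here for clarity and not Amazon's method. The sales history and the smoothing factor `alpha` are made-up assumptions.

```python
def exponential_smoothing_forecast(demand, alpha=0.5):
    """Return a one-step-ahead forecast using simple exponential smoothing.

    Each new level blends the latest observation (weight alpha) with the
    previous level (weight 1 - alpha); the final level is the forecast.
    """
    if not demand:
        raise ValueError("demand history must not be empty")
    if not 0.0 < alpha <= 1.0:
        raise ValueError("alpha must be in (0, 1]")
    level = demand[0]
    for observed in demand[1:]:
        level = alpha * observed + (1 - alpha) * level
    return level


# Hypothetical weekly unit sales for one product.
weekly_sales = [100, 120, 110, 130]
print(exponential_smoothing_forecast(weekly_sales, alpha=0.5))  # prints 120.0
```

A larger `alpha` reacts faster to recent changes in demand, while a smaller one smooths out noise. Managed forecasting services typically layer seasonality and related time series on top of baseline ideas like this one.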

Below are the AI/ML specific announcements from Amazon:

SageMaker, AWS' machine learning (ML) development platform, gets modest enhancements to attract a newer set of users: business analysts. A major problem in AI/ML development now is the limited supply of Data Scientists and the cost of building experimental models. There is also the cost of productionizing models and optimizing them in production once they are ready to go. Amazon is trying to democratize both of these functions with several announcements covered below.

1. SageMaker Canvas

The SageMaker platform gets a new tool for building no-code ML models through a point-and-click interface. You can build and train models from Canvas using wizards. You can upload data (CSV files, Amazon Redshift, and Snowflake are the current options), train the models, and perform predictions in an iterative loop until you perfect the model. The models can be easily exported to SageMaker Studio for production deployment, or shared with other Data Scientists for improvement, validation, and/or approval.

It uses the same engine as Amazon SageMaker for data cleanup and data merging, to create multiple models, and to choose the best-performing ML model.
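
Canvas hides the train-and-evaluate loop behind its wizards. To make that loop concrete, here is a minimal sketch in plain Python, assuming a made-up two-column CSV (`spend`, `churned`) and a single-feature threshold rule (a decision stump) as the model. This is a deliberately tiny stand-in, not what Canvas does internally, which per the description above builds multiple models and picks the best performer.

```python
import csv
import io

# Hypothetical tabular data: monthly spend and whether the customer churned (1) or not (0).
CSV_DATA = """spend,churned
10,1
15,1
20,1
25,0
30,0
35,0
12,1
28,0
"""


def load_rows(text):
    """Parse the CSV text into (spend, churned) tuples."""
    reader = csv.DictReader(io.StringIO(text))
    return [(float(r["spend"]), int(r["churned"])) for r in reader]


def train_stump(rows):
    """Pick the spend threshold that best separates churners (below) from non-churners."""
    best_threshold, best_correct = None, -1
    for threshold, _ in rows:
        correct = sum((spend < threshold) == bool(label) for spend, label in rows)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


def accuracy(threshold, rows):
    """Fraction of rows where the rule 'spend < threshold means churn' is right."""
    hits = sum((spend < threshold) == bool(label) for spend, label in rows)
    return hits / len(rows)


rows = load_rows(CSV_DATA)
train, holdout = rows[:6], rows[6:]  # train on the first six rows, test on the rest
threshold = train_stump(train)
print(threshold, accuracy(threshold, holdout))  # prints 25.0 1.0
```

The holdout accuracy printed at the end is the same kind of figure Canvas surfaces to tell a non-expert whether a model is good enough or needs an expert's attention.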

Keep in mind this is NOT the first time Amazon has dabbled in this. They tried it many years ago, circa 2015, with Amazon Machine Learning (which was limited to Redshift and S3 at that time). Amazon deprecated that service in 2019, as adoption was slow and the interface was a bit clunky.

[Image source: Amazon]

My View: This is a good step towards democratizing ML model creation. Now business users can easily build simple models and experiment instead of relying on expensive Data Scientists. What I particularly like is the fact that it gives the accuracy of model prediction, 94.2% in the case above, which can instill confidence with a non-data scientist on whether they built a model that is good enough or if they have to escalate to an expert for fine-tuning.

The interface is still a bit awkward for the general business user. Currently, data input is restricted to tabular data and is not integrated with Amazon's own data prep tools. The documentation is not that great, and business users especially can get lost fairly quickly. Amazon also needs to produce a lot of training videos to make this work.

It is only a slight improvement over the deprecated Amazon Machine Learning. It is limited to three model types and doesn't address unstructured data such as video, audio, or text (NLP), or techniques like ensembling.

Verdict: Average. Overall it comes across as a mature MVP candidate. Still a lot of work needs to be done for it to be enterprise business user ready.

2. SageMaker Studio Lab

Amazon SageMaker Studio Lab (a light version of SageMaker Studio based on JupyterLab) is a free machine learning (ML) development environment that provides compute, storage (up to 15 GB), and security at no cost for anyone who wants to learn and experiment with ML. It requires no AWS account, no credit card, and no extended sign-up, just an email address, and you don't need to configure infrastructure or manage identity and access. SageMaker Studio Lab accelerates model building through GitHub integration, and it comes preconfigured with the most popular ML tools, frameworks, and libraries to get you started immediately. It also automatically saves your work, so you don't need to start over between sessions.

My View: This option is meant to attract developers who want to try before they buy, and it is similar to Google's Colab environment, which has become popular with ML beginners. What makes it interesting is the option to choose between CPU (12-hour) and GPU (4-hour) sessions for a project. For example, a Convolutional Neural Network (CNN) or a Recurrent Neural Network (RNN) might require intensive GPU sessions, whereas other algorithms might be fine on CPUs. The generous 15 GB of storage allows the project and data to be retained so experimentation can continue over multiple sessions or days. Given that it is based on JupyterLab (popular among Data Scientists) instead of yet another Amazon tool, getting traction with Data Scientists should be fairly easy. Datasets and Jupyter notebooks can be easily imported from a Git repo or from an Amazon S3 bucket.

Verdict: Average. This can attract two user communities:

- The enterprise users who want to do side projects without enterprise approval to prove the concept rather than waiting for extended periods to get approval to open a paid AWS account.

- Enterprises running hackathons with a codebase or ideas from a common repository (GitHub), letting as many participants as needed join for ideation. This is a great use case for enterprises that run hackathons often and worry about configuring a lab environment, providing access to the enterprise network, and the costs associated with all the hackathon participants.

3. SageMaker Serverless Inference (and Recommender)

Another brand-new option introduced at re:Invent allows users to deploy ML models anywhere, anytime, on Amazon's serverless platform and pay only for the time used, with no need for extensive infrastructure provisioning and setup. With this option, not only can models be deployed quickly, but the underlying infrastructure also scales up, down, and out automatically based on demand. The charge is only for the time the code runs and the amount of data processed, not for idle time. Billing is in millisecond increments.
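
To make the pay-per-use model concrete, here is a minimal cost comparison sketch. All three prices are hypothetical placeholders, not AWS's published rates, and the function names are illustrative; the point is only the shape of the arithmetic: serverless bills compute per millisecond of use plus data processed, while a provisioned endpoint bills every hour whether busy or idle.

```python
import math

# Hypothetical prices, for illustration only (not AWS's published rates).
PRICE_PER_GB_SECOND = 0.00002    # assumed serverless compute rate, USD
PRICE_PER_GB_PROCESSED = 0.016   # assumed data-processing rate, USD
INSTANCE_PRICE_PER_HOUR = 0.10   # assumed always-on instance rate, USD


def serverless_cost(requests, ms_per_request, memory_gb, gb_processed):
    """Pay only for compute actually used, billed in whole milliseconds."""
    billed_ms = requests * math.ceil(ms_per_request)  # round each call up to a whole millisecond
    gb_seconds = billed_ms / 1000 * memory_gb
    return gb_seconds * PRICE_PER_GB_SECOND + gb_processed * PRICE_PER_GB_PROCESSED


def always_on_cost(hours):
    """A provisioned endpoint is billed for every hour, busy or idle."""
    return hours * INSTANCE_PRICE_PER_HOUR


# Hypothetical month: 100,000 requests at 120 ms each on a 2 GB endpoint.
serverless = serverless_cost(requests=100_000, ms_per_request=120, memory_gb=2, gb_processed=1)
always_on = always_on_cost(hours=720)
print(f"serverless: ${serverless:.2f}  always-on: ${always_on:.2f}")
```

With spiky or low traffic, the serverless total stays small because idle time costs nothing; with sustained high traffic, an always-on instance can become the cheaper option, which is the trade-off worth modeling with real prices before committing.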

An added feature that goes along with the above is Amazon SageMaker Inference Recommender. This service enables Data Scientists (or anyone deploying ML models) to select the most optimized compute for deploying their ML models. It recommends the right instance type, instance count, container parameters, etc. to get the best performance at minimal cost. It also allows MLOps engineers to run load tests against their models in a simulated environment, a great way to stress test ML models at varying loads to see how they will behave in production.
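
Inference Recommender's own API is not shown here. As a generic illustration of what a load test does, the following Python sketch calls a hypothetical stand-in model function at several concurrency levels and reports p50 and p95 latency. `fake_model_predict` and its 2 ms delay are assumptions; against a real endpoint you would replace it with an actual network call.

```python
import math
import time
from concurrent.futures import ThreadPoolExecutor


def fake_model_predict(payload):
    """Hypothetical stand-in for a deployed model endpoint."""
    time.sleep(0.002)  # pretend inference takes about 2 ms
    return sum(payload)


def timed_call(payload):
    """Time one prediction call (excludes time spent queued in the pool)."""
    start = time.perf_counter()
    fake_model_predict(payload)
    return time.perf_counter() - start


def percentile(sorted_values, pct):
    """Nearest-rank percentile of an already sorted, non-empty list."""
    rank = max(1, math.ceil(pct / 100 * len(sorted_values)))
    return sorted_values[rank - 1]


def load_test(concurrency, total_requests):
    """Send total_requests calls using `concurrency` worker threads; report latency in ms."""
    payloads = [[i, i + 1] for i in range(total_requests)]
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = sorted(pool.map(timed_call, payloads))
    return {
        "p50_ms": percentile(latencies, 50) * 1000,
        "p95_ms": percentile(latencies, 95) * 1000,
    }


for level in (1, 4, 16):
    print(level, load_test(level, total_requests=64))
```

With a fake model the numbers barely move as concurrency rises; against a real, capacity-limited endpoint, rising p95 latency at higher concurrency is the signal that an instance type or count is undersized.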

My View: ML model deployment is often very complicated. Data Scientists are not very good with coding, infrastructure, and cloud deployments. After their experimentation is done, they have to wait for Data Engineering help, and sometimes deployment/release team help, to make it work. With that portion taken out of the equation, Data Scientists can run their productionized experiments as soon as they are ready.

Verdict: Good. This is probably the most noteworthy announcement of all: deploy the ML model as soon as it is ready, and let ML models exploit the full capabilities of the cloud.

4. SageMaker Ground Truth Plus

A turnkey service that elastically expands an expert contract workforce, available on demand, for data labeling to produce high-quality datasets fast and reduce costs by up to 40%. Outsourcing data labeling and image annotation becomes easier, allowing Data Scientists to concentrate on more valuable work. The service also uses ML techniques, including active learning, pre-labeling, and machine validation (additional features that were not available with the Amazon Mechanical Turk service). Currently, it is available only in the US East AWS region, though Amazon expects to expand it quickly to other regions.
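
Ground Truth Plus's internals are not public, so the following is only a sketch of the general idea behind pre-labeling with human review, assuming a model that emits a confidence score per item. Confident predictions become machine pre-labels; uncertain ones go to human annotators, least confident first, which is a common form of uncertainty-based active learning. The 0.9 threshold and the item data are illustrative assumptions.

```python
def route_for_labeling(predictions, confidence_threshold=0.9):
    """Split model predictions into machine pre-labels and a human review queue.

    predictions: list of (item_id, predicted_label, confidence) tuples.
    Items at or above the threshold are pre-labeled by the machine; the rest
    are queued for human annotators, least confident first.
    """
    pre_labeled = {}
    human_queue = []
    for item_id, label, confidence in predictions:
        if confidence >= confidence_threshold:
            pre_labeled[item_id] = label
        else:
            human_queue.append((confidence, item_id))
    human_queue.sort()  # lowest confidence first
    return pre_labeled, [item_id for _, item_id in human_queue]


# Hypothetical model output for four images.
preds = [("img1", "cat", 0.97), ("img2", "dog", 0.55), ("img3", "cat", 0.91), ("img4", "dog", 0.72)]
auto, review = route_for_labeling(preds)
print(auto)    # prints {'img1': 'cat', 'img3': 'cat'}
print(review)  # prints ['img2', 'img4']
```

Where that threshold sits decides how much work stays with the human workforce, which is why the question of whether a human or a machine acts as the source of truth matters.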

My View: This already existed as part of Amazon Mechanical Turk. Besides, there are hundreds of companies specializing in human-in-the-loop (HITL) production of high-quality, industry-specific datasets as well as synthetic data. While image annotation and data labeling are the hardest problems to solve in ML modeling, this service is just a relabeling of existing services in my view. There are two distinct advantages to using Amazon's service over others. First, the workflow, dashboarding, and outsourcing management are automated. Second, Amazon claims its ML systems learn on the job and can kick in and start to "pre-label" images on behalf of the expert workforce. This is a tall claim, especially because the whole idea of having this in place is to have a human, rather than a machine, be the source of truth.

Verdict: Below Average. Amazon wants to automate the data labeling, image annotation, and machine validation eventually and this is a good first step in that direction.

5. SageMaker Training Compiler

A new service that aims to accelerate the training of deep learning (DL) models by compiling Python code to generate GPU kernels. It accelerates training jobs by converting DL models from their high-level language representation to hardware-optimized instructions that train faster than ordinary frameworks. It uses graph-level, dataflow-level, and backend optimizations to produce an optimized model that uses hardware resources efficiently and, as a result, trains faster. It does not alter the final trained model; instead, it uses GPU memory more efficiently, fitting a larger batch size per iteration. The final trained model from a compiler-accelerated training job is identical to the one from an ordinary training job.

My View: Multi-layered DL models are very compute/GPU intensive to run. A lot of data engineering time is spent optimizing models for the run environment. While Amazon doesn't specify how much time or cost the optimization/compilation saves, this could be huge in cutting down the training costs of multi-layered DL neural networks.

Verdict: Above Average. If it works as claimed, this can result in huge savings for enterprises as a lot of time and money is spent on training DL models.

6. Amazon Graviton3 (Plus Trn1 and Trainium)

A custom-built ARM-based chip for AI, plus a compute instance (C7g) built on that processor. Amazon claims a 3x boost for ML workloads while reducing energy use by 16%.

My View: Another crowded market. Not only is NVIDIA dominant in this space, but there are also smaller competitors with advanced technologies, such as Blaize, Hailo, SambaNova Systems, and Graphcore, as well as bigger ones from AMD, Apple, Google, Intel, IBM, etc.

Verdict: Below Average.

And a few more related announcements from AWS in the AI/ML area:

- Contact Lens – A virtual call center product (based on NLP) that transcribes calls via Amazon's cloud contact center service.

- Lex – An automated chatbot designer that helps in building conversational voice and text interfaces. The designer uses ML to provide an initial design that can then be refined and customized as needed.

- Textract – An ML service that automatically extracts text, handwriting, and data from scanned documents. It can pull specific information, such as date of birth, name, and address, from IDs, licenses, passports, etc.

- CodeGuru Reviewer Secret Detector – An automated tool that reviews source code and config files and detects secrets in them. Developers often hard-code sensitive information such as API keys and passwords. This augments the AI-powered code quality tool CodeGuru.
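To make the secret-detection idea concrete, here is a minimal rule-based scanner in Python. It assumes two illustrative patterns (the well-known `AKIA` prefix of AWS access key IDs and a quoted `password = ...` assignment); it is a sketch of the general technique, not how CodeGuru Reviewer works internally, and production detectors use far more rules plus checks such as entropy scoring:

```python
import re

# Illustrative rules only; real detectors ship many more.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "hardcoded_password": re.compile(r"""(?i)\bpassword\s*[:=]\s*["'][^"']+["']"""),
}


def find_secrets(source: str):
    """Return (line_number, rule_name) for every line matching a secret pattern."""
    findings = []
    for lineno, line in enumerate(source.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings


# Hypothetical config snippet with two hard-coded secrets.
sample = '''
db_host = "db.internal"
password = "hunter2"
aws_key = "AKIAABCDEFGHIJKLMNOP"
'''
print(find_secrets(sample))  # [(3, 'hardcoded_password'), (4, 'aws_access_key_id')]
```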

My overall POV

AWS has always come across as a landing place for digital innovators to experiment, innovate, and then productionize. They always had a good story at re:Invent to attract bleeding-edge innovators. This time I felt they missed that beat a little. Overall, it came across as a less innovative, more incremental story on top of what they already have. There were no earth-shattering new initiatives that blew me away. It could be because they wanted to play it safe with the change at the helm.

On the AI front, democratization is moving AI modeling away from expensive data scientists. AWS is giving you options to create, train, and productionize ML models with ease. They are trying to drive more people (business users) toward their cloud. They also want to compete not just for regular compute workloads but also for newer AI/ML workloads.

Democratization of AI is coming, and Amazon fired the first shots in the right direction. We need to wait and see the market traction and whether Amazon will continue to improve these services or deprecate some of them.

Observability Lessons Learned From the AWS East-1 Outage

Achieve Reliable Observability: Bolster Cloud-Native Observability

Modified and updated from an article originally published in DevOps.com on March 30, 2021

The recent AWS East-1 outage is a catalyst for customers to rapidly address their AIOps and observability capabilities, especially the monitoring/observability portion. In particular, be aware of the following, and be prepared for it. According to AWS, "We are seeing an impact on multiple AWS APIs in the US-EAST-1 Region. This issue is also affecting some of our monitoring and incident response tooling, which is delaying our ability to provide updates. We have identified the root cause and are actively working towards recovery."

If you haven't seen it already, AWS has posted a detailed analysis of what happened: Summary of the AWS Service Event in the Northern Virginia (US-EAST-1) Region.

In recent conversations with CXOs, there appears to be great confusion about how to properly operationalize cloud-native production environments. Here is how a typical conversation goes.

CXO: “Andy, we are thinking about getting [vendor] for our observability solution. What vendors do you think we should shortlist?”

AT: “Well, I don’t want to endorse any specific vendor, as they are all good at what they do. But let’s talk about what you want to do, and what they can do for you, so you can figure out whether or not they are the right fit for you.” The conversation continued for a while, but the last piece is worthy of being called out specifically.

CXO: “So, we will be running our production microservices in AWS in the ____ region. And we are planning to use this particular observability provider to monitor our Kubernetes clusters.”

AT: “Couple of items to discuss. First, you realize that this particular provider you are speaking of also runs in the same region of the same cloud provider as yours, right?”

CXO: “We didn’t know that. Is that going to be a problem?”

AT: “Definitely. You may get into a ‘circular dependency’ situation.”

CXO: “What is that?”

AT: “Well, from my enterprise architect perspective, we often recommend separation of duties as a best practice. For example, having your developer test the code is a bad idea, and having your developer figure out how to deploy is a bad idea. The same applies when your production services run in the same region as your monitoring software: how would you know about a production outage if the cloud region takes a hit and your observability solution goes down at the same time as your production services?”

CXO: “Should we dump them and go get this other solution instead?”

AT: “No, I am not saying that. Figure out what you are trying to achieve and have a plan for it. Selection of an observability tool should fit your overall strategy.”

Always Avoid Circular Dependencies

Enterprise architects often recommend a best practice of avoiding circular dependencies. This includes not having two services depend on each other and not colocating monitoring, governance, and compliance systems with the production systems themselves. To monitor a production system, one would do it from a separate, isolated sub-system (server, data center rack, subnet, etc.) so that if the production system goes down, the monitoring system doesn’t go down, too.
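Circular dependencies between services can be caught mechanically. A minimal sketch in Python, assuming the dependency map is available as a plain dictionary (the service names below are hypothetical); it runs a depth-first search and reports the first cycle it finds:

```python
def find_cycle(deps):
    """Return one dependency cycle as a list of services, or None if acyclic.

    `deps` maps each service to the services it depends on.
    """
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current DFS path, finished
    color = {node: WHITE for node in deps}
    stack = []

    def visit(node):
        color[node] = GRAY
        stack.append(node)
        for dep in deps.get(node, []):
            state = color.get(dep, WHITE)
            if state == GRAY:
                # dep is already on the current path, so we have found a loop
                return stack[stack.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        stack.pop()
        color[node] = BLACK
        return None

    for node in list(deps):
        if color[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


# Hypothetical service map: orders and inventory call each other.
services = {
    "orders": ["inventory"],
    "inventory": ["orders"],
    "monitoring": [],
}
print(find_cycle(services))  # ['orders', 'inventory', 'orders']
```

Adding the monitoring stack as a node in the same map makes it obvious when it starts depending on the systems it is supposed to watch.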

The same goes for public cloud regions: although it’s unlikely, individual regions and services do experience outages. If one’s production infrastructure runs on the same services in the same region as one’s SaaS monitoring provider, not only won’t the enterprise know that its production systems are down, but it also won’t have the data to analyze what went wrong. The whole idea behind a good observability system is to know quickly when things go bad, what went wrong, where the problem is, and why it happened, so one can fix it fast. For more details, check out this blog post.
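A quick audit for this scenario is to compare where production runs with where the monitoring provider runs. The sketch below assumes a hypothetical `Deployment` record with provider and region fields; treat provider plus region as a coarse proxy, since the outage showed that shared underlying APIs can affect multiple services at once:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Deployment:
    # Hypothetical inventory record, not any cloud provider's API.
    name: str
    provider: str  # e.g. "aws"
    region: str    # e.g. "us-east-1"


def shared_failure_domains(production, monitoring):
    """List production deployments that share a provider and region with the monitor."""
    return [
        p.name
        for p in production
        if (p.provider, p.region) == (monitoring.provider, monitoring.region)
    ]


prod = [
    Deployment("k8s-cluster-a", "aws", "us-east-1"),
    Deployment("k8s-cluster-b", "aws", "us-west-2"),
]
saas_monitor = Deployment("observability-saas", "aws", "us-east-1")
print(shared_failure_domains(prod, saas_monitor))  # ['k8s-cluster-a']
```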

Apply These Five Best Practices Before The Next Cloud Outage

When (or if) one receives the dreaded 2 a.m. call, what will the organization’s plan of action be? Think it through thoroughly before it happens and have a playbook ready, so no one has to panic in a crisis. Here are five best practices based on hundreds of client interactions:

- Place your observability solution outside your production workloads or cloud. Consider an observability solution that runs in a different region than your production workloads. Better yet, consider one that runs on a different cloud provider altogether. Although it is exceptionally rare, there have been cloud service outages that cross regions, but the chances of two cloud providers going down at the same time are slim. If your observability system shares a cloud region with production and that region goes down (a region-wide outage in the cloud is quite possible, and seems to be more frequent of late), your observability system will be down, too. You wouldn’t even know your production servers are down, so you couldn’t switch to your backup systems unless you have a “hot” production backup. Not only will your customers find out about your outage before you do, but you won’t even be able to initiate your playbook, because you are not aware that your production servers are down.

- Keep observability solutions physically near production systems to minimize latency. Consider having your observability solution in a different region, cloud provider, or location, yet still close enough to your production services that latency stays very low. Major cloud providers operate data centers in close proximity to one another, so a nearby option is easy to find.

- Deploy on-premises and in-the-cloud options. For example, a couple of observability solutions allow you to deploy them in any cloud and observe your production systems from anywhere, both in the cloud and on-premises.

- Build redundancy. You can also consider sending the monitoring data from your instrumentation to two observability solution locations, though that will cost you slightly more. Or, ask what the vendor’s business continuity/disaster recovery plans are. While some think the costs might be much higher, I disagree, for a couple of reasons. First, monitoring data is mainly time-series metrics, so its volume and transport cost are not as high as those of logs or traces. Second, unless your observability provider is VPC-peered, chances are your data will be routed through the internet even when both are hosted by the same cloud provider. Hence, there will not be much additional cost. Having observability data about your production system at all times is critical during outages.

- Monitor your full-stack observability system. While it is preferable to have a monitoring instance in every region where your production services run, that may not be feasible because of cost or manageability. In such cases, monitor the monitoring system. You could do synthetic monitoring by checking the monitoring API endpoints (or by sending random data inputs and checking that they were recorded) to make sure that your monitoring system is properly watching your production system. Better yet, find a monitoring vendor that will do this on your behalf.
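The redundancy and "monitor the monitoring system" practices can be sketched together. The example assumes a hypothetical `InMemoryBackend` standing in for two observability locations (it is not any vendor's API): `ship` fans each metric point out to both and tolerates one being down, and `canary_check` is a synthetic check that writes a unique point and reads it back:

```python
import time
import uuid


class InMemoryBackend:
    """Hypothetical stand-in for an observability backend; healthy=False simulates an outage."""

    def __init__(self, name, healthy=True):
        self.name = name
        self.healthy = healthy
        self.points = {}

    def write(self, key, value):
        if not self.healthy:
            raise ConnectionError(f"{self.name} unavailable")
        self.points[key] = value

    def read(self, key):
        if not self.healthy:
            raise ConnectionError(f"{self.name} unavailable")
        return self.points.get(key)


def ship(point_key, value, backends):
    """Send one metric point to every backend; return the names that accepted it."""
    accepted = []
    for b in backends:
        try:
            b.write(point_key, value)
            accepted.append(b.name)
        except ConnectionError:
            pass  # keep going: the other location still receives the data
    return accepted


def canary_check(backend):
    """Synthetic check of the monitor itself: write a unique point and read it back."""
    key = f"canary.{uuid.uuid4()}"
    value = time.time()
    try:
        backend.write(key, value)
        return backend.read(key) == value
    except ConnectionError:
        return False


primary = InMemoryBackend("observability-us-east-1")
secondary = InMemoryBackend("observability-other-cloud")
primary.healthy = False  # simulate a region outage at the primary location
print(ship("cpu.util.node1", 0.73, [primary, secondary]))  # ['observability-other-cloud']
print(canary_check(primary), canary_check(secondary))     # False True
```

In practice, the fan-out is usually configured in a telemetry agent or collector rather than hand-written, and the canary check would run on a schedule from a third location so that it survives the outage it is meant to detect.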


Market Move – Ceridian acquires ADAM HCM, becoming a Caribbean and Latin America Payroll Champion

It has been quiet on the global payroll front in general – the biggest news of the year has been the incremental addition of countries by practically all major global HCM vendors (see here for the Market Overview).

The faster path – basically not playing the country-by-country monopoly game – is an acquisition, and that is what Ceridian has just done with the acquisition of ADAM HCM. Let’s dissect the press release (it can be found here) in my customary style.

Toronto, ON and Minneapolis, MN, December 6, 2021

Ceridian (NYSE: CDAY; TSX: CDAY), a global leader in human capital management (HCM) technology, today announced it has acquired ADAM HCM, a leading payroll and HCM company serving customers in 33 countries across Central America, South America, and the Caribbean. This acquisition positions Ceridian as a leading HCM provider in Latin America and will create value for existing and prospective customers in key markets, including Mexico and Brazil.

MyPOV – ADAM HCM has been a Latin America specialist pretty much since its inception, pitching its capabilities to typically North American headquartered companies (e.g., Bayer, Cargill, GM, Intel, etc.) that have been disappointed by their large HCM vendor’s support for Latin American and Caribbean countries. More recently, ADAM HCM has focused on larger Latin American champions, especially companies headquartered in Brazil and Mexico (e.g., Vale).

Trusted by leading global brands, ADAM HCM has a strong and tenured leadership team and extensive regional knowledge and experience. Through this acquisition, ADAM HCM customers will be able to access Ceridian’s award-winning platform for global HCM, allowing them to scale and grow globally across new geographies.

MyPOV – This is a neutral statement of Ceridian’s intentions. Certainly, ADAM HCM customers will get access to the countries Ceridian payroll supports, but that is only a small portion of the overall potential of this acquisition. Understandably, Ceridian is trying not to show its cards too much here, but the medium- and longer-term plan is clear: leverage the ADAM HCM payroll expertise and build out Ceridian payroll. The interesting aspect to watch is whether Ceridian will first ‘just’ integrate the ADAM HCM payrolls or start by rebuilding them in the native Ceridian technology stack. The future will tell.

“This acquisition will accelerate Ceridian’s global growth strategy by extending the Dayforce platform into Latin America, a highly appealing region for our multinational customers,” said David Ossip, Chair and CEO, Ceridian. “Together with ADAM HCM, we’ll enhance our capacity to meet rising customer demand in Latin American countries, while delivering on our brand promise to make work life better for people everywhere.”

MyPOV – Ossip’s statement shows the real intention unequivocally: bring Ceridian to Latin America. ADAM HCM supports 33 countries across Latin America and the Caribbean. Once Ceridian supports these countries in Dayforce (which will of course not happen overnight), it would become the #2 amongst HCM vendors when it comes to native, on-platform payroll support.

Today’s announcement will accelerate Ceridian’s commitment to deliver Dayforce Payroll in Mexico. Dayforce Payroll, Ceridian’s always-on, global payroll platform, improves accuracy by auditing payroll data in real-time while managing global compliance complexities – all within a single solution.

MyPOV – Ceridian announced support for Mexico payroll earlier. This acquisition will certainly help that effort and, more importantly, justify the investment sooner, as there is a larger potential customer base to sell to.

“We are thrilled to become part of Ceridian’s global team and broaden the reach of Dayforce into Latin America and the Caribbean,” said Brian Beneke, CEO, ADAM HCM. “In today’s borderless and fluid world of work, our customers will benefit from a single Dayforce experience that delivers innovation and experiences to help organizations and employees reach their full potential.”

MyPOV – The usual positive statement from the acquired company’s CEO. Glad Beneke mentions the Caribbean, which is an underserved, under-automated region when it comes to payroll support.

Overall MyPOV